Deep learning is a computationally heavy process. Cutting costs is a major challenge, alongside data curation. Training is so power-hungry that researchers have published work reporting the carbon footprint of training networks.

As things will only get more complicated from here on, with a deluge of machine learning applications ahead, new strategies are being invented to make training neural networks as efficient as possible.

Updating a neural network to change its predictions on a single input can decrease performance across other inputs. Currently, there are two workarounds commonly used by practitioners:

- Re-train the model on the original dataset augmented with samples that account for the mistake

- Use a manual cache (e.g. lookup table) that overrules model predictions on problematic samples

While simple, the second approach is not robust to minor changes in the input. For instance, it will not generalise to a different viewpoint of the same object, or to paraphrases in natural language processing tasks. Re-training, for its part, is computationally expensive. So, in a paper under review at ICLR 2020, the authors, who have not been named, present an alternative approach that they call Editable Training.
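The brittleness of the lookup-table workaround can be seen in a toy sketch. Everything here (`toy_model`, `make_cached_predictor`, the two-feature inputs and the labels) is hypothetical and only illustrates the idea; it is not taken from the paper.

```python
from typing import Callable, Dict, Tuple

Features = Tuple[float, ...]


def toy_model(x: Features) -> str:
    # Hypothetical stand-in for a trained classifier.
    return "plane" if sum(x) > 1.0 else "bird"


def make_cached_predictor(
    model: Callable[[Features], str],
    overrides: Dict[Features, str],
) -> Callable[[Features], str]:
    """Wrap a model so that exact matches in `overrides` overrule its output."""

    def predict(x: Features) -> str:
        if x in overrides:
            return overrides[x]
        return model(x)

    return predict


# The model mislabels this input as "plane"; we patch it with a cache entry.
problem_input = (0.9, 0.3)
predict = make_cached_predictor(toy_model, {problem_input: "bird"})

fixed = predict(problem_input)   # the cache entry overrules the model
nearby = predict((0.9, 0.3001))  # a tiny change misses the cache entirely
```

The cached entry fixes the exact input, but the slightly perturbed one falls through to the unpatched model and is mislabelled again.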

Patchwork On Neural Networks

Editable Neural Networks also belong to the meta-learning paradigm, as they basically "learn to allow effective patching".

The problem of efficient neural network patching differs from continual learning because, according to the researchers, the editable training setup is not sequential in nature.

Editing in this context means changing the model’s predictions on a subset of inputs, corresponding to misclassified objects, without affecting other inputs.

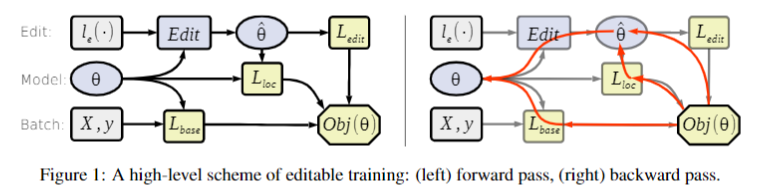

For this purpose, an editor function has been formulated. Informally, this is a function that adjusts the model’s parameters to satisfy a given constraint, whose role is to enforce the desired changes in the model’s behavior.
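One way to write the editor function down, as a sketch rather than the authors' exact notation, is shown below. The symbols are assumptions introduced here: $\theta$ for the current parameters, $\hat{\theta}$ for the edited ones, $l_e$ for the constraint, $x$ for the input being corrected and $y_{\text{ref}}$ for its desired label.

$$
\hat{\theta} = \mathrm{Edit}(\theta, l_e) \quad \text{such that} \quad l_e(\hat{\theta}) \le 0
$$

For classification, a natural choice of constraint under these assumptions is

$$
l_e(\hat{\theta}) = \max_{y} \log p(y \mid x, \hat{\theta}) - \log p(y_{\text{ref}} \mid x, \hat{\theta})
$$

which is at most zero exactly when $y_{\text{ref}}$ is the model's top prediction for $x$.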

For the image classification experiments, the small CIFAR-10 dataset was used with its standard train/test split. The training set is further augmented with random crops and random horizontal flips.
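As a rough illustration of the two augmentations, here is a dependency-free sketch on a single-channel image stored as nested lists. The zero-padding before cropping and the `pad` and `p` parameters are assumptions made for this example; the article does not say how the crops were taken.

```python
import random
from typing import List

Image = List[List[float]]  # one channel: rows of pixel values


def random_crop(image: Image, pad: int, rng: random.Random) -> Image:
    """Zero-pad the image by `pad` pixels per side, then crop back to the original size."""
    height, width = len(image), len(image[0])
    blank_row = [0.0] * (width + 2 * pad)
    padded = (
        [blank_row[:] for _ in range(pad)]
        + [[0.0] * pad + row + [0.0] * pad for row in image]
        + [blank_row[:] for _ in range(pad)]
    )
    top = rng.randint(0, 2 * pad)   # randint is inclusive on both ends
    left = rng.randint(0, 2 * pad)
    return [row[left:left + width] for row in padded[top:top + height]]


def random_horizontal_flip(image: Image, rng: random.Random, p: float = 0.5) -> Image:
    """Mirror the image left to right with probability `p`."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return [row[:] for row in image]


rng = random.Random(0)
image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
augmented = random_horizontal_flip(random_crop(image, pad=1, rng=rng), rng)
```

Both functions return a new image of the same size and leave the input untouched.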

All models trained on this dataset follow the ResNet-18 architecture and use the Adam optimizer with default hyperparameters.

A natural way to implement Edit for deep neural networks is using gradient descent. According to the authors, the standard gradient descent editor can be further augmented with momentum and adaptive learning rates.
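A minimal sketch of a plain gradient descent editor is given below, assuming a tiny linear softmax classifier in place of a deep network. The names `gradient_descent_edit`, `lr` and `max_steps`, and the choice of cross-entropy as the loss being descended, are assumptions made for illustration, not the authors' implementation.

```python
import math
from typing import List, Tuple

Weights = List[List[float]]  # one row of feature weights per class


def logits_for(weights: Weights, x: List[float]) -> List[float]:
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def softmax(logits: List[float]) -> List[float]:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights: Weights, x: List[float]) -> int:
    logits = logits_for(weights, x)
    return max(range(len(logits)), key=lambda c: logits[c])


def gradient_descent_edit(
    weights: Weights,
    x: List[float],
    target: int,
    lr: float = 0.5,
    max_steps: int = 10,
) -> Tuple[Weights, int, bool]:
    """Step down the cross-entropy loss on (x, target) until the model
    predicts `target` or the step budget runs out.

    Returns the edited weights, the number of steps taken, and a success flag.
    """
    edited = [row[:] for row in weights]  # leave the original model untouched
    for step in range(max_steps):
        if predict(edited, x) == target:
            return edited, step, True
        probs = softmax(logits_for(edited, x))
        for c, row in enumerate(edited):
            # Gradient of cross-entropy w.r.t. logit c is p_c - 1[c == target].
            grad_logit = probs[c] - (1.0 if c == target else 0.0)
            for j, feature in enumerate(x):
                row[j] -= lr * grad_logit * feature
    return edited, max_steps, predict(edited, x) == target


weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
x = [1.0, 0.2]  # initially classified as class 0
edited, steps, success = gradient_descent_edit(weights, x, target=1)
```

Swapping the inner update for a momentum or adaptive-learning-rate rule would give the augmented editors mentioned above. Note that this sketch only performs the edit; it does nothing to keep predictions on other inputs unchanged.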

However, in many practical scenarios, most conceivable edits will never occur. For instance, it is far more likely that an image previously classified as "plane" would require editing into "bird" than into "truck" or "ship". To address this consideration, the authors employed the Natural Adversarial Examples (NAE) dataset.

This dataset contains 7,500 natural images that are particularly hard for neural networks to classify. Without edits, a pre-trained model correctly predicts less than 1% of NAEs, but the correct answer is likely to be within the top 100 classes ordered by predicted probability.
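The "within the top classes ordered by predicted probability" check can be sketched as follows. The helper name `in_top_k` and the four-class probabilities are made up for illustration.

```python
from typing import List


def in_top_k(probs: List[float], label: int, k: int) -> bool:
    """Return True if `label` is among the k classes with the highest probability."""
    ranked = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)
    return label in ranked[:k]


# Hypothetical prediction over four classes; the true class is 2.
probs = [0.05, 0.60, 0.25, 0.10]
top1_hit = in_top_k(probs, label=2, k=1)  # the model's first guess is class 1
top2_hit = in_top_k(probs, label=2, k=2)  # class 2 is the runner-up
```

Here the model gets the top-1 prediction wrong, yet the correct class is close behind, which is the situation described for NAEs with a cutoff of 100 classes.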

Closing Thoughts

The proposed Editable Training bears some resemblance to adversarial training, which is the dominant approach to defending against adversarial attacks. The important difference is that Editable Training aims to learn models whose behavior on some samples can be efficiently corrected.

Adversarial training, meanwhile, produces models that are robust to certain input perturbations. In practice, however, one can use Editable Training to efficiently cover model vulnerabilities against both synthetic and natural adversarial examples.

In many deep learning applications, a single model error can lead to devastating financial, reputational and even life-threatening consequences. Therefore, it is crucially important to correct model mistakes quickly as they appear.

Editable Training is a model-agnostic training technique that encourages fast editing of the trained model, and its effectiveness looks promising for large-scale image classification and machine translation tasks as well.