Over the last few years, there have been several innovations in artificial intelligence and machine learning. As the technology expands into domains ranging from academia to cooking robots, it is significantly affecting our lives. A business or finance user, for instance, often uses a machine learning system as a black box, meaning they do not know what lies inside it.

Explainability and interpretability are two terms that are often used interchangeably. In this article, we take a closer look at both concepts and at how they differ.

Explainability

Explainability is the extent to which the feature values of an instance can be related to its model prediction in a way that humans understand. In basic terms, it answers the question “why is this happening?”.

Important Properties Of Explainability

- Portability: The range of machine learning models with which the explanation method can be used.

- Expressive Power: The structure of the explanations that a method is able to generate.

- Translucency: How much the explanation method depends on looking into the machine learning model itself. Low-translucency methods tend to have higher portability.

- Algorithmic Complexity: The computational complexity of the method that generates the explanations.

- Fidelity: How well the explanation approximates the predictions of the machine learning model. High fidelity is one of the most important properties of an explanation, because a low-fidelity explanation fails to explain the model.
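Fidelity is the easiest of these properties to put a number on. The sketch below is one possible way to do it, not a standard definition: it measures fidelity as the fraction of inputs on which a simple explanation model agrees with the black box. Both `black_box` and `surrogate` are hypothetical toy functions invented for illustration.

```python
import random


def black_box(x):
    """Hypothetical opaque classifier with a nonlinear decision rule."""
    return 1 if x[0] ** 2 + x[1] > 1.0 else 0


def surrogate(x):
    """Hypothetical explanation model: a simple linear rule."""
    return 1 if x[0] + x[1] > 1.0 else 0


def fidelity(model, explanation, samples):
    """Fraction of samples on which the explanation matches the model."""
    matches = sum(1 for s in samples if model(s) == explanation(s))
    return matches / len(samples)


# Draw random inputs from the region we care about (assumed here to be [0, 2] x [0, 2]).
rng = random.Random(0)
samples = [[rng.uniform(0, 2), rng.uniform(0, 2)] for _ in range(1000)]

score = fidelity(black_box, surrogate, samples)
print(f"fidelity of the linear rule: {score:.3f}")
```

A score of 1.0 would mean the explanation reproduces the model on every sampled input. The lower the score, the less the explanation can be trusted to describe what the model actually does.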

Interpretability

Interpretability is the degree to which a human can consistently predict a model’s result, without necessarily knowing the reasons behind it. The higher the interpretability of a machine learning model, the easier it is to understand why certain decisions or predictions were made.

Evaluation Of Interpretability

- Application Level Evaluation: This is the real task. The explanation is put into the product and tested by the end user.

- Human Level Evaluation: This is a simplified application level evaluation. The experiments are carried out by laypersons rather than domain experts, which makes them cheaper and makes testers easier to find.

- Function Level Evaluation: This approach does not require human testers. It works best when the class of model being used has already been evaluated by someone else in a human level evaluation. It is also known as a proxy task.

Understanding The Difference

The difference between the two can be illustrated with a simple example. Consider a school student running a titration experiment. Being able to predict what will happen at each next step, all the way until the final outcome, is interpretability. Understanding the chemistry behind the experiment is explainability.

Methods For Improving Interpretability And Explainability

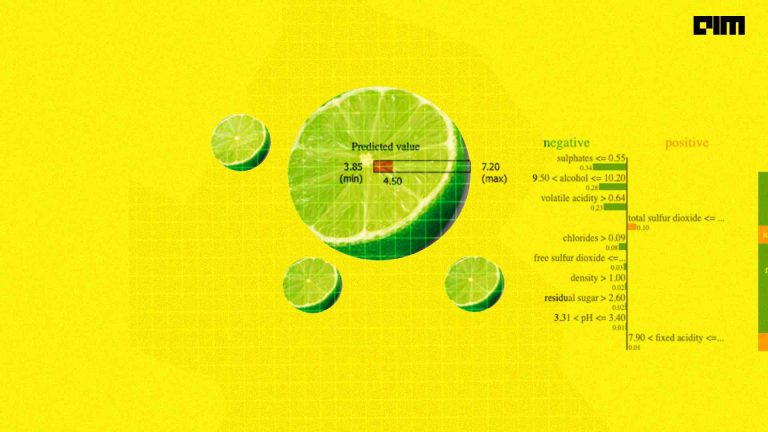

Local Interpretable Model-Agnostic Explanations (LIME): This approach is now in common use. It is model-agnostic, meaning it can be applied to any model to produce explanations for individual predictions. It does so by fitting a simple, interpretable model around the prediction being explained.
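The core idea behind LIME can be sketched in a few lines of plain Python. This is a simplified illustration, not the API of the `lime` package: it perturbs an instance with Gaussian noise, weights each perturbed sample by its proximity to the instance, and fits a weighted linear model whose coefficients serve as the local explanation. The function names, noise scale, and kernel width are all assumptions chosen for the example.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def local_linear_explanation(predict, instance, n_samples=500,
                             scale=0.5, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns (intercept, coefficients); the coefficients are the explanation.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Perturb the instance and ask the black box for its prediction.
        z = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((zi - vi) ** 2 for zi, vi in zip(z, instance))
        # Samples closer to the instance get a larger weight.
        weights.append(math.exp(-dist2 / kernel_width ** 2))
        rows.append([1.0] + z)  # leading 1.0 is the intercept column
        targets.append(predict(z))
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    k = len(instance) + 1
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
            for i in range(k)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


def black_box(x):
    """Hypothetical model to explain; any callable would work here."""
    return x[0] ** 2 + 3.0 * x[1]


intercept, coefs = local_linear_explanation(black_box, [1.0, 2.0])
print("local feature weights:", [round(c, 2) for c in coefs])
```

Because the only thing the sketch needs from the model is a `predict` callable, the same code works for any model, which is what "model-agnostic" means in practice. The actual LIME method adds further steps, such as interpretable feature representations and feature selection, that are left out here.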

Algorithmic Generalisation: Generalisation sounds simple, but it is harder than it appears. If the focus shifts from the specific way a machine learning algorithm is applied to reach a particular outcome to treating the model itself as the primary element, it may become possible to improve interpretability.

DeepLIFT (Deep Learning Important Features): DeepLIFT is used in deep learning, where models are especially complex. It is an efficient method for computing importance scores in neural networks by comparing the activation of each neuron to its reference activation.
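The comparison against a reference activation can be illustrated on a single ReLU neuron. The sketch below is a toy, hand-rolled version of the idea and not the DeepLIFT reference implementation: each input's contribution is its weighted difference from the reference input, scaled by the ratio of the change in output to the change in pre-activation. The function name, weights, and all-zero reference are assumptions made for the example.

```python
def relu(v):
    return max(0.0, v)


def deeplift_single_neuron(weights, bias, x, reference):
    """Difference-from-reference contributions for y = relu(w . x + b).

    Returns (contributions, delta_y). The contributions sum to delta_y,
    the change in output relative to the reference input.
    """
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    z_ref = sum(w * ri for w, ri in zip(weights, reference)) + bias
    delta_z = z - z_ref
    delta_y = relu(z) - relu(z_ref)
    if abs(delta_z) > 1e-12:
        # Scale by output change over pre-activation change.
        multiplier = delta_y / delta_z
    else:
        # Simplification for this sketch: fall back to the local ReLU slope.
        multiplier = 1.0 if z > 0 else 0.0
    contributions = [w * (xi - ri) * multiplier
                     for w, xi, ri in zip(weights, x, reference)]
    return contributions, delta_y


contributions, delta_y = deeplift_single_neuron(
    weights=[2.0, -1.0], bias=0.5, x=[1.0, 3.0], reference=[0.0, 0.0]
)
print("contributions:", contributions)
print("sum of contributions:", sum(contributions), "delta_y:", delta_y)
```

In this example the neuron is inactive at the input, so a plain gradient would assign zero importance to both features. The reference-based scores are non-zero because they explain the drop in output relative to the reference.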

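Layer-Wise Relevance Propagation: This approach is built on the principles of relevance and conservation: the relevance assigned to the output is redistributed backward, layer by layer, while its total is conserved. It is quite similar to DeepLIFT.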
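The conservation idea can be shown on one linear layer. The following is a minimal sketch assuming a bias-free layer and a small stabiliser `eps`; the function name and the numbers are made up for illustration. Each output's relevance is split among the inputs in proportion to how much each input contributed to that output.

```python
def lrp_linear_layer(x, weights, relevance_out, eps=1e-9):
    """Redistribute output relevance to the inputs of a bias-free linear layer.

    weights[i][j] connects input i to output j. Input i receives the share
    x[i] * weights[i][j] / z_j of output j's relevance, where z_j is the
    pre-activation of output j.
    """
    n_in, n_out = len(x), len(relevance_out)
    relevance_in = [0.0] * n_in
    for j in range(n_out):
        z_j = sum(x[i] * weights[i][j] for i in range(n_in))
        # A small signed epsilon keeps the division stable when z_j is near zero.
        denom = z_j + (eps if z_j >= 0 else -eps)
        for i in range(n_in):
            relevance_in[i] += x[i] * weights[i][j] / denom * relevance_out[j]
    return relevance_in


x = [1.0, 2.0]
weights = [[0.5, -1.0],
           [1.0, 1.5]]
relevance_out = [2.5, 2.0]  # here: the layer's own outputs, used as relevance

relevance_in = lrp_linear_layer(x, weights, relevance_out)
print("input relevance:", relevance_in)
print("totals:", sum(relevance_in), "vs", sum(relevance_out))
```

The two totals printed at the end agree up to the effect of `eps`, which is the conservation property in action. In a full network the same step would be applied layer by layer, from the output back to the input.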

Modifying Features According To The Need: This may seem like a minor step, but it plays an important role in improving both interpretability and explainability.