Last June, MLCommons, an open engineering consortium, released new results for MLPerf Training v1.0, the organisation’s machine learning training performance benchmark suite. The latest version includes vision, language and recommender systems, and reinforcement learning tasks.

MLCommons started with the MLPerf benchmark in 2018 in collaboration with its 50+ founding partners, including global technology providers, academics and researchers. Since then, it has rapidly scaled to measure machine learning performance and promote transparency of machine learning techniques.

MLPerf Training measures the time it takes to train ML models to a standard quality target across various tasks, including image classification, NLP, object detection, recommendation, and reinforcement learning. As a full-system benchmark, it tests machine learning models, software, and hardware together.
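The time-to-train metric can be pictured with a minimal Python sketch. This is not the official MLPerf harness, and the official timing rules contain details not covered here; the function `time_to_train`, its parameters, and the toy model whose accuracy rises by 0.1 per epoch are all hypothetical, chosen only to illustrate "train until a quality target is reached, then report the elapsed time".

```python
import time
from typing import Callable, Optional, Tuple


def time_to_train(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    quality_target: float,
    max_epochs: int = 100,
) -> Tuple[Optional[float], int]:
    """Return (seconds until the target was reached, epochs run).

    The time is None if the target is not reached within max_epochs.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        if evaluate() >= quality_target:
            return time.perf_counter() - start, epoch
    return None, max_epochs


# Hypothetical stand-in for a real model: accuracy is 0.1 per completed epoch.
state = {"epochs_done": 0}


def toy_train() -> None:
    state["epochs_done"] += 1


def toy_eval() -> float:
    return state["epochs_done"] * 0.1


elapsed, epochs = time_to_train(toy_train, toy_eval, quality_target=0.75)
print(f"reached target after {epochs} epochs in {elapsed:.6f} s")
```

The key point of the design is that the stopping condition is a quality threshold rather than a fixed number of steps, so a faster system only scores better if the model still converges.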

NVIDIA submitted training results for all eight benchmarks. Compared with its MLPerf v0.7 submissions, it improved performance by up to 2.1x on a chip-to-chip basis and up to 3.5x at scale. The company set 16 performance records among commercially available solutions: eight on a per-chip basis and eight for at-scale training. Besides NVIDIA, which dominated the latest MLPerf results, MLPerf Training received submissions from Dell, Fujitsu, Gigabyte, Intel, Lenovo, Nettrix, PCL and others.

The table below shows all the benchmarks submitted by NVIDIA:

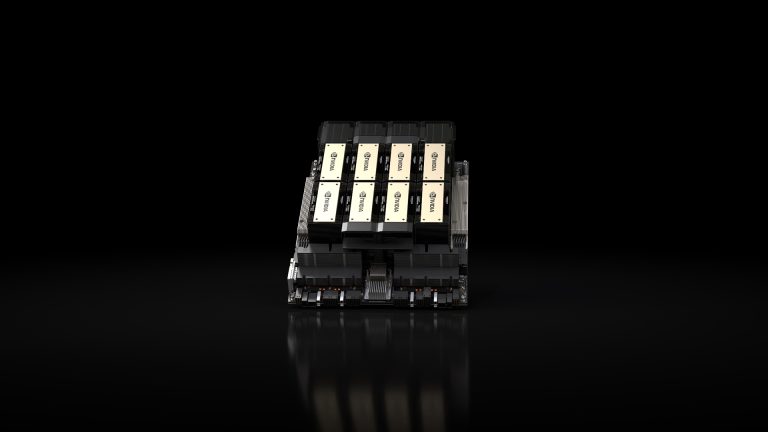

NVIDIA said this is the second MLPerf training round featuring NVIDIA A100 GPUs. NVIDIA engineers have developed a host of innovations to achieve new levels of performance by:

- Extending CUDA Graphs across all benchmarks. CUDA Graphs offers a new model for work submission in CUDA. NVIDIA has launched the entire sequence of kernels as a graph on the GPU in MLPerf v1.0, thereby minimising communication with the CPU.

- Using SHARP to double the effective interconnect bandwidth between nodes. Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) offloads collective operations from the CPU to the network and removes the need to send data multiple times between endpoints.
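To see why in-network reduction can roughly double the effective bandwidth, here is a simplified traffic model in Python. It rests on an assumption not stated in the article: that the baseline is a ring all-reduce, in which each node sends about 2(N-1)/N times the message size, while with in-network aggregation each node sends its data once. The function names are hypothetical and the model ignores latency, topology and protocol overhead.

```python
def ring_allreduce_bytes_sent(message_bytes: float, num_nodes: int) -> float:
    """Bytes each node sends in a ring all-reduce (reduce-scatter + all-gather)."""
    return 2 * (num_nodes - 1) / num_nodes * message_bytes


def in_network_allreduce_bytes_sent(message_bytes: float) -> float:
    """Bytes each node sends when the switches perform the reduction.

    Assumption: the node's data travels up the aggregation tree once,
    and the reduced result comes back once.
    """
    return message_bytes


message = 100e6  # illustrative 100 MB gradient buffer
for nodes in (2, 8, 64, 512):
    ring = ring_allreduce_bytes_sent(message, nodes)
    offloaded = in_network_allreduce_bytes_sent(message)
    print(f"{nodes:4d} nodes: ring sends {ring / offloaded:.2f}x the data per node")
```

Under this model the ratio approaches 2 as the node count grows, which is consistent with the "double" figure quoted above.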

Leveraging CUDA Graphs and SHARP, the team increased the scale to a record 4,096 GPUs used to train a single AI network.

Further, spatial parallelism enabled them to split a single image across eight GPUs for massive image segmentation networks (3D U-Net) and to use more GPUs for higher throughput. In terms of hardware, the new HBM2e GPU memory on the NVIDIA A100 GPU increased memory bandwidth by nearly 30 percent to 2 terabytes per second (TB/s).
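The idea of splitting one image across GPUs can be sketched as an index computation. The article does not describe how NVIDIA partitions the volume, so the following Python sketch makes its own assumptions: the split is along a single spatial axis, and each shard reads a small "halo" of neighbouring slices so that convolutions at shard boundaries see the data they need. The function `split_with_halo`, the depth of 128 slices and the halo width of 1 are all illustrative.

```python
from typing import List, Tuple


def split_with_halo(
    depth: int, num_gpus: int, halo: int
) -> List[Tuple[int, int, int, int]]:
    """Partition `depth` slices of a volume across GPUs along one spatial axis.

    Returns one (own_start, own_end, read_start, read_end) tuple per GPU.
    [own_start, own_end) is the region the GPU is responsible for;
    [read_start, read_end) adds up to `halo` neighbouring slices on each side.
    """
    base, extra = divmod(depth, num_gpus)
    shards = []
    start = 0
    for rank in range(num_gpus):
        # Spread any remainder over the first `extra` ranks.
        size = base + (1 if rank < extra else 0)
        end = start + size
        shards.append((start, end, max(0, start - halo), min(depth, end + halo)))
        start = end
    return shards


# Illustrative values: a 128-slice volume, eight GPUs, halo of 1 (e.g. a 3x3x3 kernel).
shards = split_with_halo(depth=128, num_gpus=8, halo=1)
for rank, shard in enumerate(shards):
    print(rank, shard)
```

Each GPU then holds only about one eighth of the activations for a sample, at the cost of exchanging the halo slices with its neighbours.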

All the software used for NVIDIA's submissions is available from the MLPerf repository.

In its blog post, NVIDIA offered insights into many of the optimisations used to deliver outstanding scale and performance.

At-scale training

To support large-scale training, NVIDIA made advances in both software and hardware performance.

On the hardware front, the key building block of its at-scale training is the NVIDIA DGX SuperPOD. On the software side, the team's v21.05 release enhances and enables several capabilities, including enhanced distributed optimiser support, improved communication efficiency with Mellanox HDR InfiniBand and NCCL 2.9.9, and added SHARP support.

Workloads

NVIDIA highlighted several optimisations for selected individual MLPerf workloads, including:

- Recommendation (DLRM)

- NLP (BERT)

- Image classification (ResNet-50 v1.5)

- Image segmentation (3D U-Net)

- Optimised cuDNN kernels

- Object detection (lightweight – SSD)

- Object detection (heavyweight – Mask R-CNN)

- Speech recognition (RNN-T)

Recommendation

Today, recommendation is arguably the most pervasive AI workload in data centres. NVIDIA's MLPerf DLRM submission was based on HugeCTR, a GPU-accelerated recommendation framework that is part of the NVIDIA Merlin open beta framework.

The HugeCTR v3.1 beta added the following optimisations: hybrid embedding, optimised collectives, an optimised data reader, overlapping of MLP with embedding, and a whole-iteration CUDA graph.

NLP

Currently, BERT is one of the most important workloads in the NLP domain. In the latest MLPerf round, NVIDIA improved on its v0.7 submission with the following optimisations: fused multi-head attention, distributed LAMB, synchronisation-free training, CUDA Graphs in PyTorch, and one-shot all-reduce with SHARP.

Image classification

In this edition of MLPerf, the team continued to optimise ResNet by improving the Conv+BN+ReLU fusion kernels in cuDNN, along with DALI optimisations, MXNet fused BN+ReLU, and improvements to the MXNet dependency engine.

Image segmentation

In this round of MLPerf Training, 3D U-Net is one of the two new workloads. For this, NVIDIA used the following optimisations: spatial parallelism, asynchronous evaluation, data loader optimisations, and channels-last layout support.

Optimised cuDNN kernels

3D U-Net has multiple ‘encoder’ and ‘decoder’ layers with small channel counts. Using a typical tile size of 256×64 for the kernels in these operations results in significant tile-size quantisation effects. cuDNN added kernels optimised for smaller tile sizes with better cache reuse, thereby helping 3D U-Net achieve better compute utilisation.

3D U-Net also benefited from the optimised BatchNorm + ReLU activation kernel. The asynchronous dependency engine implemented in MXNet, together with CUDA Graphs and SHARP, also helped performance significantly. “With the array of optimisations made for 3D U-Net, we scaled to 100 DGX A100 nodes (800 GPUs), with training running on 80 nodes (640 GPUs) and evaluation running on 20 nodes (160 GPUs),” said the NVIDIA team.

Compared with the single-node configuration, the max-scale configuration of 100 nodes achieved a speedup of more than 9.7x.
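The node and GPU counts quoted above can be checked with a few lines of Python. The only value not taken from the article is the constant of eight GPUs per DGX A100 node, which follows from the quoted 100 nodes and 800 GPUs. The final ratio is a rough indicator rather than a formal metric: time-to-train includes evaluation, fixed start-up costs and convergence effects, so it is not expected to scale linearly with node count.

```python
GPUS_PER_NODE = 8  # implied by "100 DGX A100 nodes (800 GPUs)"

train_nodes, eval_nodes = 80, 20
total_nodes = train_nodes + eval_nodes

total_gpus = total_nodes * GPUS_PER_NODE
train_gpus = train_nodes * GPUS_PER_NODE
eval_gpus = eval_nodes * GPUS_PER_NODE

# "Over 9.7x", so treat this as a lower bound on the speedup.
speedup_vs_single_node = 9.7
speedup_per_node = speedup_vs_single_node / total_nodes

print(f"total GPUs: {total_gpus}, training: {train_gpus}, evaluation: {eval_gpus}")
print(f"speedup per node used: at least {speedup_per_node:.3f}")
```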

Object detection (lightweight)

Lightweight SSD featured in MLPerf for the fourth time. NVIDIA said that in the latest round the evaluation schedule changed to run every fifth epoch, starting from the first, whereas in the previous version evaluation started from the 40th epoch. “Even with the extra computational requirement, we sped up our submissions time by more than x1.6,” said NVIDIA researchers.

SSD consists of many smaller convolution layers. NVIDIA said the benchmark was particularly affected by the improvements to the MXNet dependency engine, CUDA graphs, and the enablement of SHARP via more efficient configurations and optimised evaluation.

Object detection (heavyweight)

Here, NVIDIA optimised object detection with the following methods: CUDA Graphs in PyTorch, removing synchronisation points, asynchronous evaluation, data loader optimisation, and better fusion of ResNet layers with cuDNN v8.

Speech recognition

In this round of MLPerf, speech recognition with RNN-T is the other new workload, alongside 3D U-Net. For this, NVIDIA used optimisations including Apex transducer loss, Apex transducer joint, sequence splitting, batch splitting, batch evaluation with CUDA Graphs, and more optimised LSTMs in cuDNN v8.

Wrapping up

MLPerf v1.0 highlights the continuous innovation happening in the artificial intelligence and machine learning space. Since the MLPerf Training benchmark was launched two-and-a-half years ago, NVIDIA's performance has increased by nearly 7x.