Facebook AI Research has announced Blender, a new chatbot that it claims is the largest-ever, state-of-the-art open-domain chatbot model. The company said that the chatbot outperforms other models in terms of engagement and also feels more human.

Facebook AI confirmed the news in a recent tweet:

Today we’re announcing that Facebook AI has built and open-sourced Blender, the largest-ever open-domain chatbot. It outperforms others in terms of engagement and also feels more human, according to human evaluators. https://t.co/TdNxJpL0JI pic.twitter.com/1wo3ZxwDeU

— Meta AI (@MetaAI) April 29, 2020

The company has also released a blog post in which it stated: “This is the first time a chatbot has learned to blend several conversational skills, including the ability to assume a persona, discuss nearly any topic, and show empathy, in natural, 14-turn conversation flows.”

It further stated: “Our new recipe incorporates not just large-scale neural models, with up to 9.4 billion parameters (or 3.6x more than the largest existing system), but also equally important techniques for blending skills and detailed generation.”

According to the blog, Blender is the culmination of years of research in conversational AI, combining empathy, knowledge, and personality in one system. To build the chatbot, the company combined improved decoding techniques, a novel blending of skills, and a model with 9.4 billion parameters, which is 3.6x more than the largest existing system.

The company released its complete model, code, and evaluation set-up so that other AI researchers can reproduce this work and continue to advance conversational AI research.

According to the researchers, Blender comes with diverse skills. The company trained the chatbot at large scale on 1.5 billion training examples of extracted conversations. It also used column-wise model parallelism, which allowed the researchers to split the neural network into smaller, more manageable pieces while maintaining maximum efficiency.
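To make the idea of column-wise splitting concrete, here is a minimal sketch in plain Python. It is a toy illustration, not Facebook's implementation: the function names (`matmul`, `split_columns`, `parallel_linear`) and the tiny matrix are assumptions made for this example. It shows that a linear layer can be computed shard by shard over column blocks of its weight matrix, with the partial outputs concatenated at the end.

```python
# Toy illustration of column-wise model parallelism (not Facebook's code).
# A linear layer y = x @ W is split by columns of W across hypothetical workers.

def matmul(x, w):
    """Multiply a row vector x (length n) by a matrix w (n rows, m columns)."""
    cols = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(cols)]

def split_columns(w, num_shards):
    """Split matrix w into num_shards contiguous column blocks."""
    cols = len(w[0])
    base, extra = divmod(cols, num_shards)
    shards, start = [], 0
    for s in range(num_shards):
        width = base + (1 if s < extra else 0)
        shards.append([row[start:start + width] for row in w])
        start += width
    return shards

def parallel_linear(x, w, num_shards):
    """Compute x @ w shard by shard and concatenate the partial outputs."""
    out = []
    for shard in split_columns(w, num_shards):
        # In a real system each shard would live on its own device.
        out.extend(matmul(x, shard))
    return out

x = [1.0, 2.0, 3.0]
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0],
     [2.0, 1.0, 0.0, 1.0]]

full = matmul(x, w)                            # [7.0, 5.0, 4.0, 10.0]
sharded = parallel_linear(x, w, num_shards=2)  # same values, computed in two pieces
```

Each shard only needs its own slice of the weights, which is why this kind of split can let a model that is too large for one device be spread across several.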

Blender also learned to blend skills through Blended Skill Talk (BST), a task for training and evaluating these desirable skills, which include engaging use of personality, engaging use of knowledge, display of empathy, and the ability to blend all three seamlessly. According to the blog, “Blending these skills is a difficult challenge because systems must be able to switch between different tasks when appropriate, like adjusting tone if a person changes from joking to serious. Our new BST data set provides a way to build systems that blend and exhibit these behaviours. We found that fine-tuning the model with BST has a dramatic effect on human evaluations of the bot’s conversational ability.”

The researchers further found that the length of the agent’s utterances is important in achieving better results with human evaluators. The company showed that a careful choice of search hyperparameters could give strong results by controlling this trade-off. In particular, tuning the minimum beam length gives important control over the “dull versus spicy” spectrum of responses.
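The effect of a minimum beam length can be sketched with a toy beam search. This is an illustrative sketch under stated assumptions, not the decoding code used for Blender: `toy_next_token_logprobs` is a made-up stand-in for a language model that likes to end its reply early, and `beam_search` simply refuses to emit the end-of-sequence token until `min_length` tokens have been generated.

```python
import math

EOS = "<eos>"

def toy_next_token_logprobs(prefix):
    """Hypothetical stand-in for a language model that favors ending early."""
    if len(prefix) == 0:
        probs = {"ok": 0.6, "well": 0.4}
    else:
        probs = {EOS: 0.7, "and": 0.2, "then": 0.1}
    return {tok: math.log(p) for tok, p in probs.items()}

def beam_search(next_logprobs, beam_size, min_length, max_length):
    """Beam search that forbids EOS until min_length tokens were generated."""
    beams = [((), 0.0)]  # (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_length):
        candidates = []
        for tokens, score in beams:
            for tok, lp in next_logprobs(tokens).items():
                if tok == EOS:
                    if len(tokens) < min_length:
                        continue  # too short: block the end-of-sequence token
                    finished.append((tokens, score + lp))
                else:
                    candidates.append((tokens + (tok,), score + lp))
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:beam_size]
        if not beams:
            break
    pool = finished or beams  # fall back to unfinished beams if none ended
    return max(pool, key=lambda b: b[1])[0]

short = beam_search(toy_next_token_logprobs, beam_size=2, min_length=0, max_length=5)
longer = beam_search(toy_next_token_logprobs, beam_size=2, min_length=3, max_length=5)
# short  is ('ok',)
# longer is ('ok', 'and', 'and')
```

With no minimum length the toy model picks the shortest, safest reply; raising `min_length` forces longer output. Production decoders typically add further refinements such as length normalisation, which this sketch omits.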

To evaluate the model, the researchers benchmarked its performance against Google’s latest Meena chatbot through pairwise human evaluations. Since Google has not released the Meena model, the researchers used the roughly 100 publicly released and randomised logs for this evaluation. Using the ACUTE-Eval method, human evaluators were shown a series of dialogues between humans paired with each respective chatbot.

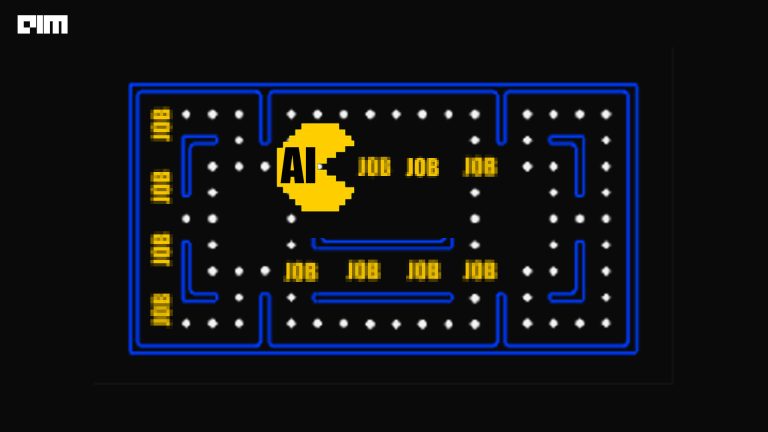
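As a rough sketch of how pairwise votes like these can be summarised, the snippet below computes a win rate and an exact two-sided binomial p-value against the "no preference" null of 50%. The function `pairwise_summary` and the vote list are hypothetical, made-up values for illustration only; they are not the results of the Blender evaluation, and this is not the ACUTE-Eval code itself.

```python
from math import comb

def pairwise_summary(votes):
    """Summarise pairwise preference votes, each either "A" or "B".

    Returns the win rate of A and a two-sided exact binomial p-value
    under the null hypothesis that both systems are equally preferred.
    """
    n = len(votes)
    wins_a = sum(1 for v in votes if v == "A")
    win_rate = wins_a / n
    # Probability of a split at least this lopsided in one direction.
    k = max(wins_a, n - wins_a)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    p_value = min(1.0, 2 * tail)  # double it for the two-sided test
    return win_rate, p_value

# Made-up toy votes: 8 evaluators prefer system A, 2 prefer system B.
toy_votes = ["A"] * 8 + ["B"] * 2
win_rate, p_value = pairwise_summary(toy_votes)
# win_rate is 0.8, p_value is 0.109375
```

Even an 8-to-2 split is not statistically convincing with only ten votes, which is one reason pairwise evaluations of this kind rely on a larger number of judgments.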

Facebook AI is excited about the progress that has been made in improving open-domain chatbots. Although they are still far from achieving human-level intelligence in dialogue systems, the researchers are currently exploring ways to further improve the conversational quality of their models in longer conversations with new architectures and different loss functions.

“True progress in the field depends on reproducibility — the opportunity to build upon the best technology possible. We believe that releasing models is essential to enable full, reliable insights into their capabilities. That’s why we’ve made our state of the art open-domain chatbot publicly available through our dialogue research platform ParlAI. By open-sourcing code for fine-tuning and conducting automatic and human evaluations, we hope that the AI research community can build on this work and collectively push conversational AI forward,” the blog stated.