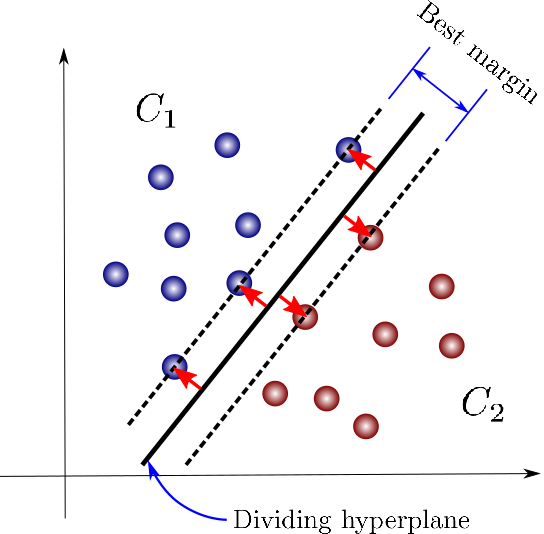

Support Vector Machine (SVM) is a supervised machine learning algorithm that can be used for either regression or classification. It is a popular choice because it often achieves high accuracy. An SVM looks for the hyperplane that separates the classes with the widest margin, and the kernel trick lets it learn non-linear decision boundaries as well. It is most naturally suited to binary classification; multi-class problems are handled by combining several binary classifiers. In this article, let us see how to select the best set of features from a dataset with an SVM using various feature selection techniques.

Table of Contents

- Introduction to SVM

- Forward Feature Selection using SVM

- Backward Feature Selection using SVM

- Recursive Feature selection using SVM

- Model building for the features selected by the recursive technique

- Summary

Introduction to SVM

Support Vector Machine (SVM) is a supervised machine learning algorithm that can be used for either classification or regression. It copes well with high-dimensional inputs and, with a suitable kernel and regularization, often yields accuracy competitive with other supervised learning algorithms.

An SVM finds the optimal hyperplane: the one that separates the classes with the largest margin. Only the training points closest to this hyperplane, called support vectors, determine where it lies, and a new point is classified according to which side of the hyperplane it falls on. When the classes are not linearly separable, the kernel trick lets the SVM compute similarities as if the data had been mapped into a higher-dimensional space where a separating hyperplane may exist, without ever computing that mapping explicitly. The SVM algorithm is best suited for classification problems.
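To see what the kernel changes in practice, here is a minimal sketch that fits a linear-kernel and an RBF-kernel SVM on a toy dataset of two concentric rings, where no straight line can separate the classes. The dataset (make_circles) and its parameters are assumptions chosen for illustration; they are not the data used in this article.

```python
# Minimal sketch: linear vs RBF kernel on data that is not linearly separable.
# make_circles is a toy dataset chosen for illustration, not the article's data.
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Two concentric rings: no straight line can separate the two classes.
X, y = make_circles(n_samples=300, noise=0.05, factor=0.5, random_state=0)

linear_svc = SVC(kernel="linear").fit(X, y)
rbf_svc = SVC(kernel="rbf").fit(X, y)

linear_acc = linear_svc.score(X, y)  # training accuracy, linear kernel
rbf_acc = rbf_svc.score(X, y)        # training accuracy, RBF kernel

# Only the support vectors (points on or inside the margin) define the boundary.
n_sv = int(rbf_svc.n_support_.sum())

print(f"linear kernel accuracy: {linear_acc:.2f}")
print(f"RBF kernel accuracy:    {rbf_acc:.2f}")
print(f"RBF support vectors:    {n_sv} of {len(X)} points")
```

The RBF kernel separates the rings almost perfectly while the linear kernel cannot, and only a subset of the points end up as support vectors.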


Now let us see how to implement an SVM classifier and use it to select the best features among all those present in the dataset.

Before moving on to the feature selection techniques, let us look at the correlations between the features of the dataset through a heatmap.

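import matplotlib.pyplot as plt
import seaborn as sns

plt.figure(figsize=(15,5))
sns.heatmap(df.corr())  # pairwise Pearson correlations of all columns
plt.show()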
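With many columns, a heatmap can be hard to read, so it may help to list the most strongly correlated pairs directly. The sketch below does this with pandas on a small synthetic DataFrame; the column names f1, f2 and f3 are hypothetical stand-ins for the columns of df.

```python
# Minimal sketch: list the most strongly correlated feature pairs instead of
# reading them off the heatmap. The DataFrame is synthetic and stands in for df.
from itertools import combinations

import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
a = rng.normal(size=500)
demo = pd.DataFrame({
    "f1": a,
    "f2": a + rng.normal(scale=0.1, size=500),  # nearly a copy of f1
    "f3": rng.normal(size=500),                 # independent of both
})

corr = demo.corr()
# Visit each unordered pair once and sort by absolute correlation.
pairs = sorted(
    ((c1, c2, corr.loc[c1, c2]) for c1, c2 in combinations(corr.columns, 2)),
    key=lambda t: abs(t[2]),
    reverse=True,
)
for c1, c2, r in pairs:
    print(f"{c1} - {c2}: {r:+.3f}")
```

Pairs at the top of this list carry largely redundant information, which is exactly what the feature selection techniques below try to exploit.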

Processing the data before implementing SVM

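SVMs are sensitive to the scale of the features, because the margin is measured with distances (dot products) between samples, so the data should be standardized to a common scale before building the model. Here the data is first split into train and test sets and then standardized using the StandardScaler class from scikit-learn's preprocessing module.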

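from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X=df.drop('Class',axis=1)  # independent features
y=df['Class']              # target label

X_train,X_test,Y_train,Y_test=train_test_split(X,y,test_size=0.2,random_state=42)

ss=StandardScaler()
X_trains=ss.fit_transform(X_train)  # learn mean and standard deviation on the training split only
X_tests=ss.transform(X_test)        # apply the training statistics to the test split (no refitting)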
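The key point when scaling is that the test split must be transformed with the mean and standard deviation learned from the training split; refitting the scaler on the test data leaks information about it. The sketch below shows this on synthetic data (an assumption standing in for df): the training columns end up with mean 0, while the test columns are only close to 0.

```python
# Minimal sketch: fit the scaler on the training split only, then reuse its
# statistics for the test split. Synthetic data stands in for the article's df.
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
X = rng.normal(loc=50.0, scale=10.0, size=(1000, 3))
y = (X[:, 0] > 50).astype(int)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

ss = StandardScaler()
X_train_s = ss.fit_transform(X_train)  # learns mean_ and scale_ from the training split
X_test_s = ss.transform(X_test)        # applies the *training* mean_ and scale_

print("train means:", X_train_s.mean(axis=0).round(3))
print("test means: ", X_test_s.mean(axis=0).round(3))  # close to, but not exactly, 0
```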

Now that the independent features of the dataset are standardized, let's proceed with model building.

Building an SVM Model

The main goal here is to select important features from the dataset using SVM, so let us import the SVM classifier and fit it on the standardized training data.

from sklearn.svm import SVC

svc=SVC()  # default settings: RBF kernel, C=1.0
svc.fit(X_trains,Y_train)

Forward Feature Selection using SVM

The forward feature selection technique starts with an empty feature set. At each step it adds the single feature that most improves the cross-validated score of the model, and it repeats this until every feature has been added; with k_features='best', the best-scoring subset seen along the way is kept. Here the mlxtend library is used: a forward SequentialFeatureSelector instance is created with the SVM classifier, fit on the standardized training data, and the R-squared score is used to evaluate the candidate feature subsets.
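To make the greedy procedure concrete, here is a minimal hand-written sketch of forward selection using scikit-learn's cross_val_score. It is not mlxtend's implementation: the function name forward_select, the synthetic data from make_classification, and scoring="accuracy" (the article passes scoring='r2') are all assumptions for illustration.

```python
# Minimal sketch of greedy forward selection (not mlxtend's implementation).
# Synthetic data; scoring="accuracy" here, whereas the article passes scoring='r2'.
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def forward_select(estimator, X, y, scoring="accuracy", cv=5):
    """Hypothetical helper: add one feature at a time, keeping the best addition."""
    remaining = list(range(X.shape[1]))
    selected, history = [], []
    while remaining:
        # Score every candidate subset "selected + one more feature".
        scores = {
            f: cross_val_score(estimator, X[:, selected + [f]], y,
                               scoring=scoring, cv=cv).mean()
            for f in remaining
        }
        best_f = max(scores, key=scores.get)
        selected.append(best_f)
        remaining.remove(best_f)
        history.append((list(selected), scores[best_f]))
    # Like k_features='best': return the subset with the highest CV score seen.
    return max(history, key=lambda h: h[1])


X, y = make_classification(n_samples=300, n_features=8, n_informative=3,
                           n_redundant=0, random_state=0)
model = make_pipeline(StandardScaler(), SVC())  # scaling is refit inside each fold
best_subset, best_score = forward_select(model, X, y)
print("best subset:", sorted(best_subset), "CV accuracy:", round(best_score, 3))
```

Each step refits the model once per remaining feature and per fold, which is why sequential selection becomes slow on wide datasets.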

!pip install mlxtend

# Workaround for older mlxtend releases that still import sklearn.externals.joblib,
# which newer scikit-learn versions no longer ship
import joblib
import sys
sys.modules['sklearn.externals.joblib'] = joblib

from mlxtend.feature_selection import SequentialFeatureSelector as sfs

forward_fs_best=sfs(estimator = svc, k_features = 'best', forward = True, verbose = 1, scoring = 'r2')
sfs_forward_best=forward_fs_best.fit(X_trains,Y_train)

Here we can see that the selector has iterated through all 30 features of the dataset, and the fitted instance can now be queried for the score of the feature subset it selected.

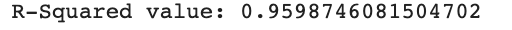

print('R-Squared value:', sfs_forward_best.k_score_)

Here we can see that the subset chosen by forward feature selection reaches an R-squared of about 0.96. Note that k_score_ is the average score over the cross-validation folds (mlxtend uses cv=5 by default), not a score on the test set.
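Because the target here is a class label, it is worth seeing what R-squared measures in that setting: with scoring='r2', the score compares the predicted labels with the true 0/1 labels. The sketch below uses made-up toy labels to show that a single mistake out of four gives an R-squared of 0 while accuracy is still 0.75, and checks the identity that links the two.

```python
# Minimal sketch: what an R-squared score means when the target is a 0/1 label.
# The toy labels below are made up for illustration.
from sklearn.metrics import accuracy_score, r2_score

y_true = [0, 0, 1, 1]
y_pred = [0, 0, 1, 0]  # one mistake out of four

r2 = r2_score(y_true, y_pred)
acc = accuracy_score(y_true, y_pred)
print(f"R^2 = {r2:.2f}, accuracy = {acc:.2f}")

# For 0/1 labels and 0/1 predictions: SS_res = number of errors and
# SS_tot = n * p * (1 - p), with p the share of positives, so
# R^2 = 1 - error_rate / (p * (1 - p)).
p = sum(y_true) / len(y_true)
error_rate = 1 - acc
identity = 1 - error_rate / (p * (1 - p))
print(f"1 - error_rate / (p * (1 - p)) = {identity:.2f}")
```

Since p(1 - p) is small when one class is rare, R-squared on labels penalizes each error much more heavily than accuracy does, so the two scores should not be read interchangeably.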

Backward Feature Selection using SVM

The backward feature selection technique starts with all the features of the dataset and, at each step, drops the one feature whose removal hurts the cross-validated score the least; the subset at every step is evaluated and the best-scoring one is kept. Now let us see how to implement the backward feature selection technique with mlxtend by setting forward = False.

backward_fs_best=sfs(estimator = svc, k_features = 'best', forward = False, verbose = 1, scoring = 'r2')
sfs_backward_best=backward_fs_best.fit(X_trains,Y_train)
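For comparison, scikit-learn (version 0.24 and later) ships its own SequentialFeatureSelector, which supports backward selection without the mlxtend joblib workaround. This is a swapped-in alternative, not the library used in this article; the synthetic data, scoring="accuracy", and stopping at 4 features are assumptions for illustration.

```python
# Minimal sketch using scikit-learn's SequentialFeatureSelector (>= 0.24)
# instead of mlxtend. Synthetic data; keeping 4 features is an arbitrary choice.
from sklearn.datasets import make_classification
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=300, n_features=8, n_informative=3,
                           n_redundant=0, random_state=0)
model = make_pipeline(StandardScaler(), SVC())

backward = SequentialFeatureSelector(
    model,
    n_features_to_select=4,  # stop once 4 features remain
    direction="backward",    # start from all features and drop one at a time
    scoring="accuracy",
    cv=5,
)
backward.fit(X, y)
kept = backward.get_support(indices=True)
print("kept feature indices:", kept)
```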

print('R-Squared value:', sfs_backward_best.k_score_)

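Here we can see that the subset chosen by backward feature selection reaches an R-squared of about 0.886, lower than the forward technique. Both methods are greedy, so removing features one at a time from the full set can end on a different subset than adding them one at a time, and neither is guaranteed to find the best possible subset. So let us see whether ranking the features, as the recursive feature selection technique does, gives a better choice.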

Recursive Feature selection using SVM

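Recursive feature elimination (RFE) is closely related to backward selection, but instead of re-scoring every candidate subset it ranks the features by the weights of a fitted model. It fits the estimator on the current features, removes the feature with the smallest weight, and repeats until the requested number of features remains. Because it needs only one fit per elimination step, it is much cheaper than sequential selection. The ranking comes from the model's coef_ attribute, so the SVM must use a linear kernel here; the default RBF kernel does not expose feature weights. Let us see how to implement this feature selection technique.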
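The elimination loop can be written out by hand in a few lines and checked against scikit-learn's RFE. In this minimal sketch the helper manual_rfe and the synthetic data are assumptions for illustration; with step=1, both versions drop the feature with the smallest absolute linear-SVM weight and refit, so they should keep the same features.

```python
# Minimal sketch of recursive feature elimination with a linear SVM, written
# out by hand and compared with scikit-learn's RFE. Synthetic data.
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=300, n_features=10, n_informative=4,
                           n_redundant=0, random_state=1)
X = StandardScaler().fit_transform(X)


def manual_rfe(X, y, n_keep):
    """Hypothetical helper: drop the lowest-weight feature until n_keep remain."""
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        # A linear kernel exposes coef_; its magnitude is the feature's weight.
        svc = SVC(kernel="linear").fit(X[:, remaining], y)
        weakest = int(np.argmin(np.abs(svc.coef_).ravel()))
        remaining.pop(weakest)  # remove the least important feature, then refit
    return sorted(remaining)


manual = manual_rfe(X, y, n_keep=5)

# n_features_to_select=None makes scikit-learn keep half the features (10 // 2 = 5).
rfe = RFE(estimator=SVC(kernel="linear"), n_features_to_select=None).fit(X, y)
library = [int(i) for i in np.flatnonzero(rfe.support_)]

print("manual RFE: ", manual)
print("sklearn RFE:", library, "ranking:", rfe.ranking_)
```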

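import pandas as pd
from sklearn.feature_selection import RFE

# Keep the column names so that the selected features can be reported by name
X_trains_df=pd.DataFrame(X_trains,columns=X_train.columns)

svc_lin=SVC(kernel='linear')  # linear kernel exposes coef_, which RFE uses to rank features
svm_rfe_model=RFE(estimator=svc_lin)  # n_features_to_select defaults to half of the features
svm_rfe_model_fit=svm_rfe_model.fit(X_trains_df,Y_train)

# ranking_ is 1 for every selected feature; larger values were eliminated earlier
feat_index = pd.Series(data = svm_rfe_model_fit.ranking_, index = X_train.columns)
signi_feat_rfe = feat_index[feat_index==1].index

print('Significant features from RFE',signi_feat_rfe)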

Now let us compare the original number of features in the dataset with the number of features selected as optimal by the recursive feature selection technique.

print('Original number of features present in the dataset : {}'.format(X_train.shape[1]))  # excludes the 'Class' target
print()
print('Number of features selected by the Recursive feature selection technique is : {}'.format(len(signi_feat_rfe)))

So the recursive feature selection technique kept 15 of the 30 features, i.e. exactly half of them. This 50% is not a finding about the data: when n_features_to_select is not set, scikit-learn's RFE keeps half of the features by default. The number of features to keep is therefore a choice that can be tuned, for example by cross-validation.
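One way to let the data choose the number of features is scikit-learn's RFECV, which runs the same elimination inside cross-validation and keeps the subset size with the best average score. This minimal sketch uses synthetic data, and cv=5 and scoring="accuracy" are assumptions; for brevity the scaler is fit on all of X up front.

```python
# Minimal sketch: let cross-validation choose how many features RFE keeps,
# instead of the default "half". Synthetic data; cv=5 and accuracy are assumptions.
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFECV
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=300, n_features=10, n_informative=3,
                           n_redundant=0, random_state=1)
X = StandardScaler().fit_transform(X)  # scaled up front for brevity

rfecv = RFECV(estimator=SVC(kernel="linear"), step=1, cv=5, scoring="accuracy")
rfecv.fit(X, y)
print("features kept by RFECV:", rfecv.n_features_)
print("selected mask:", rfecv.support_)
```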

Model building for the features selected by the recursive technique

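Using the features selected by the recursive technique, let us take a subset of the standardized training data, fit a new linear SVM on it, and evaluate it with the same cross-validated R-squared score used for the sequential selectors.

from sklearn.model_selection import cross_val_score

# Subset of the *standardized* training data; in this run RFE selected
# ['V1', 'V2', 'V4', 'V6', 'V9', 'V10', 'V12', 'V14', 'V17', 'V18', 'V19', 'V20', 'V25', 'V26', 'V28']
X_trains_new=X_trains_df[signi_feat_rfe]

rfe_svm=SVC(kernel='linear')
rfe_fit=rfe_svm.fit(X_trains_new, Y_train)

# An SVC has no k_score_ attribute, so compute a 5-fold cross-validated R-squared,
# matching the mlxtend selectors' default cv=5 and scoring='r2'
r2_cv=cross_val_score(SVC(kernel='linear'), X_trains_new, Y_train, cv=5, scoring='r2').mean()
print('R2 squared value for RFE',r2_cv)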
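When features are selected on the whole training set and the model is then cross-validated on that same set, the score tends to be optimistic, because the selection has already seen every fold. A common remedy is to put scaling, RFE and the final SVM in one Pipeline, so selection is redone inside each fold, and to report the untouched test split at the end. In this minimal sketch the synthetic data, the step names and n_features_to_select=5 are assumptions.

```python
# Minimal sketch: scaling, RFE and the final SVM in one Pipeline so that feature
# selection is redone inside each CV fold and the test split stays unseen.
# Synthetic data; the step names and n_features_to_select=5 are assumptions.
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=400, n_features=10, n_informative=4,
                           n_redundant=0, random_state=7)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("rfe", RFE(SVC(kernel="linear"), n_features_to_select=5)),
    ("svc", SVC(kernel="linear")),
])

cv_acc = cross_val_score(pipe, X_train, y_train, cv=5, scoring="accuracy").mean()
pipe.fit(X_train, y_train)                  # final fit on the full training split
test_acc = pipe.score(X_test, y_test)       # accuracy on the held-out test split
kept = pipe.named_steps["rfe"].get_support(indices=True)

print(f"CV accuracy: {cv_acc:.3f}, test accuracy: {test_acc:.3f}, kept: {kept}")
```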

Summary

So this is how SVM can be combined with forward selection, backward selection and recursive feature elimination to select a good subset of features and reduce the problems that come with high-dimensional data. Building the model on a smaller, well-chosen subset of features makes it cheaper to train and can make its parameters and performance more reliable.