A number of large language models (LLMs) burst onto the scene this year, with applications ranging from automated code generation to text-to-image generation. However, these models have come up short on quantitative reasoning.

Google has broken this barrier with their latest language model, Minerva. Named after the Roman goddess of wisdom, it is trained on a high-quality scientific and mathematical dataset.

Minerva in a nutshell

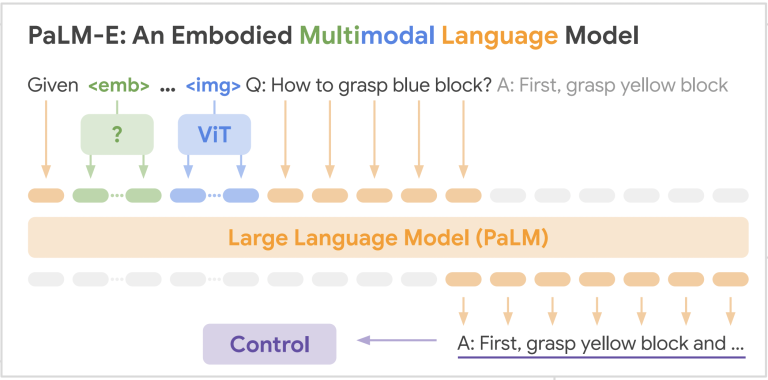

Minerva is built on the Pathways Language Model (PaLM), with further training on a 118GB dataset of scientific papers from arXiv and web pages containing mathematical expressions, about 38.5B tokens in total. The model takes scientific and mathematical questions posed in natural language and generates step-by-step solutions that mix prose with LaTeX, MathJax, or other mathematical typesetting formats.

The model comes in three sizes, built on the 8B, 62B, and 540B parameter pretrained PaLM models. Along with the extended pretraining on mathematical data, Minerva uses prompting and evaluation techniques such as chain-of-thought, scratchpad, and majority voting: it writes out its solution step by step, samples multiple solutions to the same problem, and picks the most common final answer among them.
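Majority voting can be sketched in a few lines. The snippet below is a minimal illustration, not Minerva's actual code: the function name `majority_vote` and the sample answers are hypothetical, and it assumes the final answer has already been extracted from each sampled solution as a string.

```python
from collections import Counter


def majority_vote(final_answers):
    """Return the most frequent final answer and its share of the votes."""
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    # Strip whitespace so that "12" and " 12" count as the same answer.
    normalized = [answer.strip() for answer in final_answers]
    counts = Counter(normalized)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(normalized)


# Hypothetical final answers taken from five sampled solutions to one problem.
samples = ["12", "15", "12", " 12", "7"]
answer, share = majority_vote(samples)
print(answer, share)  # prints: 12 0.6
```

Comparing answers as plain strings is a simplification made here; a real pipeline would likely need to treat mathematically equivalent answers such as `0.5` and `1/2` as the same vote.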

STEM benchmarks

Minerva’s quantitative reasoning capabilities were tested on a set of STEM benchmarks whose difficulty ranges from grade school level to graduate-level coursework.

- MATH: A dataset of 12K middle school and high school math problems written in LaTeX. The models are prompted with a fixed four-shot prompt consisting of four random examples whose ground truth targets are not too long.

- MMLU-STEM: Focused on STEM, this subset of the Massive Multitask Language Understanding benchmark covers topics such as engineering, chemistry, math, and physics at the high school and college levels. Here, the model is evaluated with the standard five-shot prompt and with a chain-of-thought prompt based on a multiple-choice version of the MATH prompt.

- GSM8k: Grade school-level math problems involving basic arithmetic operations that should all be solvable by a talented middle school student. The model is evaluated using chain-of-thought prompting, without any external tools for calculations.

- OCWCourses: A set of undergraduate-level problems covering STEM topics such as differential equations, solid-state chemistry, astronomy, and special relativity. The dataset was created using publicly available course materials offered by MIT (OpenCourseWare). Only problems with automatically verifiable solutions (either numeric or symbolically verifiable via SymPy) were included.
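To make the fixed four-shot prompt used for MATH more concrete, here is a minimal sketch of how such a prompt could be assembled. Everything in it is an assumption made for illustration: the `Problem:`/`Solution:` layout, the 400-character length cutoff, the function name `build_few_shot_prompt`, and the toy examples are not taken from the Minerva paper.

```python
import random


def build_few_shot_prompt(examples, question, k=4, max_target_chars=400, seed=0):
    """Build a fixed k-shot prompt from examples whose solutions are short enough."""
    # Keep only examples whose ground truth solution is "not too long"
    # (the character cutoff is an assumed threshold).
    short = [ex for ex in examples if len(ex["solution"]) <= max_target_chars]
    if len(short) < k:
        raise ValueError("not enough short examples to build the prompt")
    # A fixed seed means the same k examples are chosen on every call,
    # so the prompt is random once but fixed across test questions.
    rng = random.Random(seed)
    shots = rng.sample(short, k)
    parts = [
        f"Problem:\n{ex['problem']}\n\nSolution:\n{ex['solution']}" for ex in shots
    ]
    # The new question goes last, with the solution left for the model to write.
    parts.append(f"Problem:\n{question}\n\nSolution:")
    return "\n\n".join(parts)


# Toy examples (hypothetical, not from the MATH dataset).
toy_examples = [
    {"problem": "What is $1 + 1$?", "solution": "$1 + 1 = 2$. Final Answer: $2$"},
    {"problem": "What is $2 \\cdot 3$?", "solution": "$2 \\cdot 3 = 6$. Final Answer: $6$"},
    {"problem": "What is $10 - 4$?", "solution": "$10 - 4 = 6$. Final Answer: $6$"},
    {"problem": "What is $9 / 3$?", "solution": "$9 / 3 = 3$. Final Answer: $3$"},
    {"problem": "What is $5^2$?", "solution": "$5^2 = 25$. Final Answer: $25$"},
    {"problem": "A long one.", "solution": "Step. " * 200},  # filtered out as too long
]

prompt = build_few_shot_prompt(toy_examples, "What is $3 + 4$?")
print(prompt)
```

The same prompt is then reused for every test question, with only the final `Problem:` entry changing.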

Minerva outperformed previous state-of-the-art (SOTA) results on these benchmarks by a wide margin.

Image: Accuracy of LMs on MATH and MMLU (Source: arxiv.org)

Minerva was also tested on Poland's 2021 National Math Exam. The 62B model scored 57%, matching the national average that year, while the 540B model scored 65%.

What’s the big deal?

Quantitative reasoning is the ability to use mathematics and information to solve real-world problems. In 2021, OpenAI’s GPT-3 could solve only 2.9% to 6.9% of the problems in a dataset of over 12,500. OpenAI also built GPT-f, an automated prover and proof assistant for the Metamath formalization language. It was the first machine learning-based system to contribute proofs that were adopted by a formal mathematics community.

Guillaume Lample and François Charton at Facebook AI Research trained a neural network capable of symbolic mathematics, namely symbolic integration and solving differential equations.

Minerva is trained on a large dataset that combines natural language understanding with the correct use of formal mathematical language (equations and diagrams). The model establishes a new baseline for quantitative reasoning benchmarks by increasing data quality and model size.

The researchers said one of the direct applications could be the creation of an accessible and affordable AI-based math tutor.

Not a perfect model

Despite training on an extensive dataset of mathematical data, Minerva is far from a perfect problem solver. When the researchers analyzed a sample of problems that the model got wrong, a pattern emerged.

- About half of the mistakes were calculation errors, while the other half were reasoning errors, in which the solution steps did not follow a logical chain of thought.

- In some cases, the model arrived at the right answer without using the correct reasoning. Such cases were referred to as ‘false positives’. However, the rate of false positives was relatively low.

The model doesn’t have access to external tools like a calculator or a Python interpreter, which limits its ability to handle tasks that require complicated numerical calculations. Check out the demo of Minerva explorer.