Automatically generating realistic images is a difficult task, and even the most advanced machine learning algorithms struggle with it. In this article, we take a close look at FuseDream, a technique from a research paper by Xingchao Liu et al. that uses the power of GANs to generate an image from a user's text query. Its main appeal is that the user does not need to train anything, because it builds on a pre-trained model such as BigGAN. The major points to be discussed in this article are listed below.

Table of Contents

- Understanding Text to Image Generation

- How Does FuseDream Work?

- Implementing FuseDream

Let's start the discussion by understanding what text-to-image generation is.

Understanding Text to Image Generation

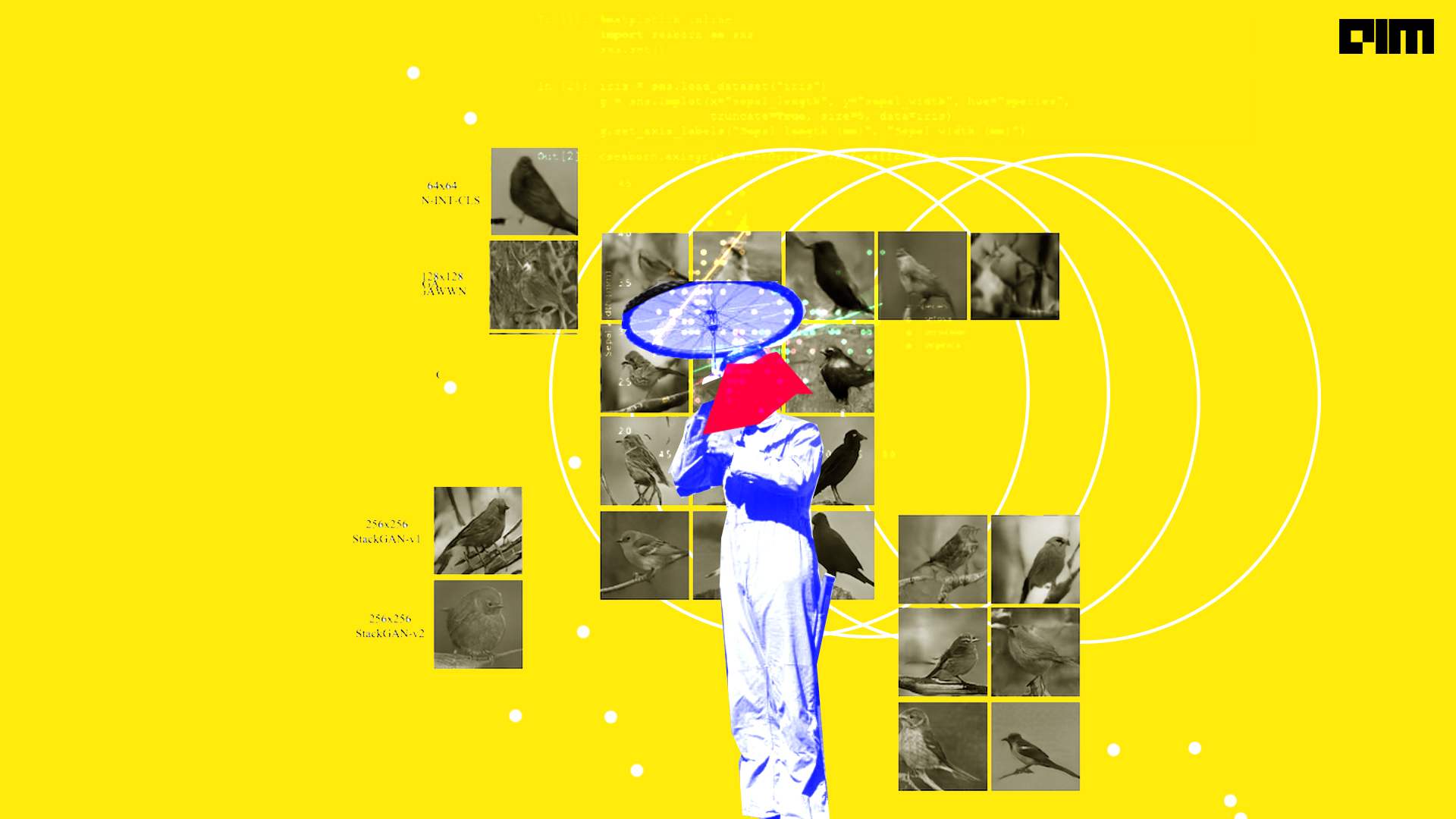

Text-to-image generation, which produces realistic images that are semantically related to a given text input, is a landmark task in multi-modal machine learning. It is difficult because the generative model must comprehend the text, the image, and how the two should relate semantically. Models trained with a self-supervised loss on large-scale datasets have recently made significant progress in generating high-quality and semantically relevant images.

In the traditional approach to text-to-image generation, a conditional generative model is trained from scratch on a dataset of (text, image) pairs. This procedure, however, requires collecting a large training dataset, has a high training cost, and is difficult to customize.

The availability of powerful joint text-image encoders (notably the CLIP model) that provide faithful semantic relevance scores of text-image pairs has recently enabled a more flexible text-to-image generation approach. It is now possible to do text-to-image generation using powerful pre-trained GANs by optimizing in the latent space of a GAN to create images with high semantic relevance to the input text.

Compared to traditional approaches, methods that combine a GAN and CLIP are training-free and zero-shot, requiring no dedicated training dataset or training cost. They are also much more flexible and modular: a user can easily replace the generator (GAN) or the encoder model (CLIP) with more powerful or customized versions that are better suited to their problems and computational budget.
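The idea of searching a frozen generator's latent space for an image that scores well against the text can be sketched with a toy example. Everything here is a stand-in chosen for illustration: `toy_generator` is a hypothetical fixed function playing the role of the pre-trained GAN, the text embedding is a made-up vector, cosine similarity plays the role of the CLIP relevance score, and plain random search replaces the real optimizer. It is a minimal sketch under those assumptions, not FuseDream itself.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def toy_generator(z):
    """Hypothetical frozen 'GAN': maps a 2-D latent code to a 3-D 'image embedding'."""
    return [z[0] + z[1], z[0] - z[1], 0.5 * z[0]]


def search_latent(text_embedding, num_samples=200, seed=0):
    """Random search over latent codes; generator and scorer are never trained."""
    rng = random.Random(seed)
    best_z, best_score = None, -float("inf")
    for _ in range(num_samples):
        z = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        score = cosine_similarity(toy_generator(z), text_embedding)
        if score > best_score:
            best_z, best_score = z, score
    return best_z, best_score


text_embedding = [1.0, 0.2, 0.4]  # made-up stand-in for an encoded text query
best_z, best_score = search_latent(text_embedding)
print("best latent:", best_z)
print("best score: ", round(best_score, 4))
```

Only the latent code changes during the search, which is why swapping in a different generator or scorer does not require any retraining.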

How Does FuseDream Work?

To build an effective text-to-image generation pipeline, the FuseDream authors analyzed the problems in existing CLIP+GAN procedures. They identified three key bottlenecks and addressed each with a dedicated technique, described below.

Robust Score

The original CLIP score is not a good objective function for optimizing in the GAN latent space, because the optimization frequently produces semantically unrelated images that adversarially maximize the CLIP score. The authors propose the AugCLIP score, which makes the CLIP score more robust by averaging it across multiple perturbations or augmentations of the input image.
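The averaging idea behind such a score can be illustrated with a few lines of plain Python. This is a toy sketch, not the paper's implementation: `toy_score` is a hypothetical cosine-similarity stand-in for CLIP, the "image" is a short list of numbers, and `jitter` (small Gaussian noise) stands in for real image augmentations.

```python
import math
import random


def toy_score(image, text_embedding):
    """Hypothetical stand-in for a CLIP score: cosine similarity of two vectors."""
    dot = sum(p * t for p, t in zip(image, text_embedding))
    norm_image = math.sqrt(sum(p * p for p in image))
    norm_text = math.sqrt(sum(t * t for t in text_embedding))
    return dot / (norm_image * norm_text)


def jitter(image, rng, strength=0.1):
    """Toy augmentation: add small Gaussian noise to every 'pixel'."""
    return [p + rng.gauss(0.0, strength) for p in image]


def augmented_score(image, text_embedding, augment, num_augmentations=16, seed=0):
    """Average the score over several randomly augmented copies of the image."""
    rng = random.Random(seed)
    scores = [
        toy_score(augment(image, rng), text_embedding)
        for _ in range(num_augmentations)
    ]
    return sum(scores) / len(scores)


image = [0.9, 0.1, 0.4, 0.2]
text_embedding = [1.0, 0.0, 0.5, 0.1]
print("raw score:      ", round(toy_score(image, text_embedding), 4))
print("augmented score:", round(augmented_score(image, text_embedding, jitter), 4))
```

An image that only scores well for one exact pixel configuration tends to lose that advantage once the score is averaged over perturbed copies, which is the intuition behind using the averaged score as the optimization target.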

Improved Optimization Strategy

Maximizing the CLIP score in GAN space is a highly non-convex, multi-modal optimization problem, and off-the-shelf optimization methods are prone to getting stuck at suboptimal local maxima. The authors address this with a novel initialization and over-parameterization strategy that traverses the non-convex loss landscape more efficiently.
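One way to picture this strategy is: sample many candidate latent codes, keep the best few as a basis, and then search over combinations of that basis rather than over a single code. The sketch below follows that outline under loud assumptions: `toy_score` is a hypothetical stand-in for AugCLIP, only the mixing weights are searched, and a simple accept-if-better hill climb is swapped in for the gradient-based optimizer a real pipeline would use.

```python
import random


def toy_score(z, target):
    """Hypothetical stand-in for AugCLIP: higher when z is closer to target."""
    return -sum((a - b) ** 2 for a, b in zip(z, target))


def combine(weights, basis):
    """Weighted sum of the basis latent codes."""
    dim = len(basis[0])
    return [sum(w * b[d] for w, b in zip(weights, basis)) for d in range(dim)]


def select_basis(candidates, score_fn, k):
    """Initialization step: keep the k best-scoring candidates, best first."""
    return sorted(candidates, key=score_fn, reverse=True)[:k]


def optimize_weights(basis, score_fn, steps=500, step_size=0.1, seed=0):
    """Hill climbing over the mixing weights; a proposal is kept only if it scores higher."""
    rng = random.Random(seed)
    weights = [1.0] + [0.0] * (len(basis) - 1)  # start at the best basis code
    best = score_fn(combine(weights, basis))
    for _ in range(steps):
        proposal = [w + rng.gauss(0.0, step_size) for w in weights]
        value = score_fn(combine(proposal, basis))
        if value > best:
            weights, best = proposal, value
    return weights, best


rng = random.Random(0)
target = [0.5, -1.0, 0.25, 2.0]  # made-up optimum of the toy score
score_fn = lambda z: toy_score(z, target)
candidates = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(100)]  # M = 100
basis = select_basis(candidates, score_fn, k=5)  # k = 5
weights, final_score = optimize_weights(basis, score_fn)
print("best single candidate:", round(score_fn(basis[0]), 4))
print("after weight search:  ", round(final_score, 4))
```

Because the search starts from the best sampled code and only accepts improvements, the combined latent can never score worse than the best single candidate.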

Composed Generation

The pre-trained GAN limits the image space of the CLIP+GAN approach. This makes it difficult to create images with unusual object combinations that did not appear in the GAN's training data. The authors address this with a composed generation technique that optimizes two images so that they can be seamlessly combined into a natural and semantically relevant image.

They formulate composed generation as a novel bi-level optimization problem that maximizes the AugCLIP score while also including a perceptual consistency score as a secondary goal, and they solve it efficiently with a new dynamic barrier gradient descent algorithm.
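To give a feel for what "a primary goal plus a secondary goal" can mean for an update step, here is a small sketch that uses plain gradient projection. This is explicitly not the paper's dynamic barrier gradient descent; it is a simpler, generic technique swapped in for illustration, and the function name and example gradients are made up. The secondary gradient is followed only as far as it does not work against the primary one.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def combined_direction(grad_primary, grad_secondary):
    """Ascent direction that uses both gradients but never opposes the primary one."""
    overlap = dot(grad_primary, grad_secondary)
    if overlap < 0:
        # Conflict: remove the component of the secondary gradient
        # that points against the primary gradient.
        scale = overlap / dot(grad_primary, grad_primary)
        grad_secondary = [s - scale * p for s, p in zip(grad_secondary, grad_primary)]
    return [p + s for p, s in zip(grad_primary, grad_secondary)]


# Hypothetical gradients of a relevance score (primary) and a consistency score (secondary).
print(combined_direction([1.0, 0.0], [-2.0, 1.0]))  # conflicting case
print(combined_direction([1.0, 0.0], [1.0, 1.0]))   # agreeing case
```

In the conflicting case, the part of the secondary gradient that would reduce the primary objective is dropped, so progress on the primary goal is preserved while the secondary goal still influences the step.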

FuseDream can generate not only simple objects from complex text descriptions but also complex scenes such as those in MS COCO. Thanks to the representation power of CLIP, it can create images with a variety of backgrounds, textures, locations, artistic styles, and even counterfactual objects.

Using composed generation, FuseDream can also create images with novel combinations of objects that do not appear in the original training data of the underlying GAN. Compared to directly training large-scale text-to-image generative models, the method is much more computationally efficient while, according to the authors, producing comparable or even better results.

In the section below, we implement FuseDream with three text queries: (1) "Long hair dog.", (2) "A photo of a blue dog.", and (3) "Temple in Sunrise."

Implementing FuseDream

- Install, set up, and import all the dependencies

!git clone https://github.com/gnobitab/FuseDream.git
!pip install ftfy regex tqdm numpy scipy h5py lpips==0.1.4
!pip install git+https://github.com/openai/CLIP.git
!pip install gdown
!gdown 'https://drive.google.com/uc?id=17ymX6rhsgHDZw_g5XgAFW4xLSDocARCM'
!gdown 'https://drive.google.com/uc?id=1sOZ9og9kJLsqMNhaDnPJgzVsBZQ1sjZ5'
!ls
!cp biggan-256.pth FuseDream/BigGAN_utils/weights/
!cp biggan-512.pth FuseDream/BigGAN_utils/weights/
%cd FuseDream

import torch
from tqdm import tqdm
from torchvision.transforms import Compose, Resize, CenterCrop, ToTensor, Normalize
import torchvision
import BigGAN_utils.utils as utils
import clip
import torch.nn.functional as F
from DiffAugment_pytorch import DiffAugment
import numpy as np
from fusedream_utils import FuseDreamBaseGenerator, get_G, save_image

- Setting parameters of FuseDream

The following parameters need to be set for effective generation.

- SENTENCE: The query text used to generate the image. Note: adding a period '.' to the end of a sentence improves the quality of the generated images; for example, 'A photo of a blue dog.' produces better images than 'A photo of a blue dog'.

- INIT_ITERS: Controls the number of images used for initialization (M in the paper, with M = INIT_ITERS*10). The default value of 1000 should suffice.

- OPT_ITERS: Sets the number of iterations used to optimize the latent variables. The default value of 1000 should suffice.

- NUM_BASIS: Controls the number of basis images used in optimization (k in the paper). A value of 5, 10, or 15 should work well.

- MODEL: Either 'biggan-256' or 'biggan-512'.

- SEED: The random seed. Pick any integer you like.

SENTENCE = "A photo of a blue dog."
INIT_ITERS = 1000
OPT_ITERS = 1000
NUM_BASIS = 5
MODEL = "biggan-256"
SEED = 0

import sys
sys.argv = ['']
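Before launching a long run, it can help to sanity-check these settings and see how many initial images they imply. The helper below is hypothetical and not part of the FuseDream repository; the factor of 10 is an assumption taken directly from the relation M = INIT_ITERS*10 stated above.

```python
SUPPORTED_MODELS = ("biggan-256", "biggan-512")
IMAGES_PER_INIT_ITER = 10  # assumption: taken from M = INIT_ITERS * 10


def validate_settings(sentence, init_iters, opt_iters, num_basis, model):
    """Hypothetical helper: check the settings and return M, the number of initial images."""
    if not sentence.strip():
        raise ValueError("SENTENCE must not be empty")
    if init_iters <= 0 or opt_iters <= 0:
        raise ValueError("INIT_ITERS and OPT_ITERS must be positive")
    if model not in SUPPORTED_MODELS:
        raise ValueError(f"unsupported MODEL: {model!r}")
    num_initial_images = init_iters * IMAGES_PER_INIT_ITER
    if not 0 < num_basis <= num_initial_images:
        raise ValueError("NUM_BASIS must be between 1 and M")
    return num_initial_images


m = validate_settings("A photo of a blue dog.", 1000, 1000, 5, "biggan-256")
print("M =", m)  # M = 10000
```

With the default values, 10,000 candidate images are scored during initialization and the 5 best are kept as the basis.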

- Generating Images

import os
from IPython import display

utils.seed_rng(SEED)

sentence = SENTENCE
print('Generating:', sentence)

# Load the pre-trained BigGAN generator at the requested resolution
if MODEL == "biggan-256":
    G, config = get_G(256)
elif MODEL == "biggan-512":
    G, config = get_G(512)
else:
    raise Exception('Model not supported')

generator = FuseDreamBaseGenerator(G, config, 10)

# Initialization: pick the basis latent codes for this sentence
z_cllt, y_cllt = generator.generate_basis(sentence, init_iters=INIT_ITERS, num_basis=NUM_BASIS)
z_cllt_save = torch.cat(z_cllt).cpu().numpy()
y_cllt_save = torch.cat(y_cllt).cpu().numpy()

# Setting latent_noise=True yields a slightly higher AugCLIP score but slightly
# lower image quality, so it is set to False for dogs.
img, z, y = generator.optimize_clip_score(z_cllt, y_cllt, sentence, latent_noise=False, augment=True, opt_iters=OPT_ITERS, optimize_y=True)

score = generator.measureAugCLIP(z, y, sentence, augment=True, num_samples=20)
print('AugCLIP score:', score)

# Save the generated image and display it in the notebook
if not os.path.exists('./samples'):
    os.mkdir('./samples')
out_path = 'samples/fusedream_%s_seed_%d_score_%.4f.png' % (sentence, SEED, score)
save_image(img, out_path)
display.display(display.Image(out_path))
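The output filename above embeds the raw query text, so spaces, periods, or slashes in the sentence end up in the path. If that is a concern, a small helper can build a safer name. The function below is a hypothetical convenience, not part of FuseDream, and the score value in the example is made up.

```python
import re


def sample_path(sentence, seed, score, directory="samples"):
    """Hypothetical helper: build an output path with a filesystem-friendly name."""
    # Collapse every run of non-alphanumeric characters into a single underscore
    slug = re.sub(r"[^A-Za-z0-9]+", "_", sentence).strip("_").lower()
    return f"{directory}/fusedream_{slug}_seed_{seed}_score_{score:.4f}.png"


print(sample_path("A photo of a blue dog.", 0, 0.31234))
# samples/fusedream_a_photo_of_a_blue_dog_seed_0_score_0.3123.png
```

The returned string could be passed to `save_image` and `display.Image` in place of the formatted path used above.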

Here are the results for the three queries:

- Photo of a blue dog.

- Long hair dog.

- Temple in Sunrise.

Final Words

In this post, we have seen FuseDream, which uses a CLIP-guided GAN to enable high-quality text-to-image generation. In contrast to traditional training-based approaches, this method is training-free, zero-shot, and easily customizable, making it suitable for users with limited computational resources or GPU access. We have also seen how FuseDream works, both in theory and in practice.