Recurrent neural networks have shown promising results in various machine learning tasks. They have been applied to time-series prediction, machine translation, speech recognition, text summarisation, video tagging, language modelling and more.

A Recurrent Neural Network, or RNN, is a popular type of neural network whose connections between units form a directed cycle, which allows it to memorise arbitrary-length sequences of input patterns. Because of this memorising feature, RNNs are useful in time-series prediction.
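To make the "directed cycle" concrete, here is a minimal sketch of a scalar vanilla RNN. The weights `w_x`, `w_h` and `b` are arbitrary illustrative values rather than trained ones, and the function names are hypothetical. The same step function is applied at every position and its output is fed back in as an input, so a sequence of any length can be processed and earlier inputs keep influencing later states.

```python
import math


def rnn_step(x_t, h_prev, w_x=0.5, w_h=0.8, b=0.0):
    """One step of a scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_prev + b).
    The default weights are illustrative, not learned."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


def run_rnn(inputs, h0=0.0):
    """Feed a sequence of any length through the same cell, carrying the hidden state forward."""
    h = h0
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h)  # the previous output is fed back in: the recurrent cycle
        states.append(h)
    return states


# The first input is 1.0 and the rest are 0.0, yet every later state is still nonzero.
print(run_rnn([1.0, 0.0, 0.0]))
print(run_rnn([1.0, 0.0, 0.0, 0.0, 0.0]))
```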

Recurrent neural networks with sophisticated recurrent hidden units have also achieved promising success in various applications in the past few years. Among these sophisticated units, the Gated Recurrent Unit (GRU) is one of the best-known variants and is closely related to the Long Short-Term Memory (LSTM) unit.

Behind Gated Recurrent Units (GRUs)

As mentioned, the Gated Recurrent Unit (GRU) is a popular variant of the recurrent neural network and has been widely used in machine translation. GRUs can also be regarded as a simpler version of LSTMs (Long Short-Term Memory). The GRU was introduced in 2014 and was motivated by the Long Short-Term Memory unit, but it is simpler to compute and implement in models.

According to researchers at the University of Montreal, the gated recurrent unit was introduced to make each recurrent unit adaptively capture dependencies of different time scales. Similar to the Long Short-Term Memory unit, the GRU has gating units that modulate the flow of information inside the unit, but it does so without separate memory cells.

As a variant of the recurrent neural network, gated recurrent unit networks can process sequential data by storing information about previous inputs in the network's internal state and, in principle, mapping the history of previous inputs to target vectors.

How It Works

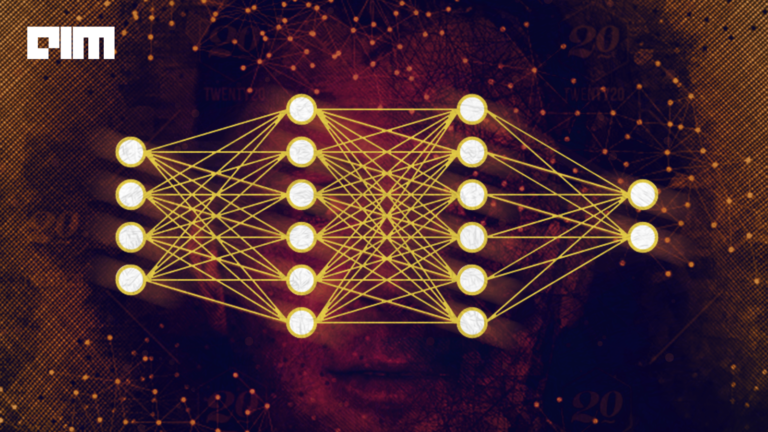

A GRU introduces two gates: a reset gate, which adjusts how the new input is combined with the previous memory, and an update gate, which controls how much of the previous memory is preserved. Together, the reset and update gates adaptively control how much each hidden unit remembers or forgets while reading or generating a sequence.
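The two gates can be sketched in plain Python as a single GRU step. This is a minimal, untrained sketch that assumes the formulation in which the update gate z weights the candidate activation (as in Chung et al., 2014); the bias terms, the parameter names (`W_z`, `U_z`, `b_z` and so on), the helper functions and the random initialisation are illustrative assumptions, not any library's API. Vectors are plain lists so the example has no dependencies.

```python
import math
import random


def sigmoid(a):
    """Logistic sigmoid, squashing a pre-activation into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def add(*vecs):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vecs)]


def gru_step(x, h_prev, p):
    """One GRU step, using the convention in which z weights the candidate:
    z = sigmoid(W_z x + U_z h_prev + b_z)        update gate
    r = sigmoid(W_r x + U_r h_prev + b_r)        reset gate
    h_tilde = tanh(W x + U (r * h_prev) + b)     candidate activation
    h = (1 - z) * h_prev + z * h_tilde           new activation
    """
    z = [sigmoid(a) for a in add(matvec(p["W_z"], x), matvec(p["U_z"], h_prev), p["b_z"])]
    r = [sigmoid(a) for a in add(matvec(p["W_r"], x), matvec(p["U_r"], h_prev), p["b_r"])]
    # The reset gate scales the previous state before it enters the candidate.
    gated_h = [r_j * h_j for r_j, h_j in zip(r, h_prev)]
    h_tilde = [math.tanh(a) for a in add(matvec(p["W"], x), matvec(p["U"], gated_h), p["b"])]
    # The update gate interpolates between the old state and the candidate.
    return [(1.0 - z_j) * hp + z_j * ht for z_j, hp, ht in zip(z, h_prev, h_tilde)]


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases for the update gate (_z),
    the reset gate (_r) and the candidate (no suffix). Untrained, for illustration."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for suffix in ("_z", "_r", ""):
        params["W" + suffix] = mat(hidden_size, input_size)
        params["U" + suffix] = mat(hidden_size, hidden_size)
        params["b" + suffix] = [0.0] * hidden_size
    return params


# Usage: run a 3-step one-hot sequence through a GRU with 4 hidden units.
params = init_params(input_size=3, hidden_size=4)
h = [0.0] * 4
for x_t in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h = gru_step(x_t, h, params)
print(h)
```

Because the new state is a convex combination of the previous state and a `tanh` output, it stays inside (-1, 1) when the initial state does.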

In the figure above, r and z denote the reset and update gates, while h and h̃ denote the activation and the candidate activation, respectively. When the reset gate is close to zero, the unit is forced to ignore the previous hidden state and is effectively reset with the current input only.
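The effect of the reset gate can be seen in a one-unit sketch of the candidate activation, assuming the common form h̃ = tanh(w·x + u·(r·h_prev) + b). The weights `w`, `u` and `b` are arbitrary illustrative values and `candidate` is a hypothetical helper, not part of any library. With r = 0 the candidate no longer depends on the previous state at all; with r = 1 it does.

```python
import math


def candidate(x, h_prev, r, w=0.9, u=1.5, b=0.0):
    """Scalar GRU candidate activation: h_tilde = tanh(w*x + u*(r*h_prev) + b).
    w, u and b are arbitrary illustrative weights, not trained values."""
    return math.tanh(w * x + u * (r * h_prev) + b)


x_t = 0.3
for h_prev in (-0.9, 0.0, 0.9):
    # r = 0: the previous state is masked out, so the second column is the same on every row.
    # r = 1: the previous state passes through and changes the candidate.
    print(h_prev, candidate(x_t, h_prev, r=0.0), candidate(x_t, h_prev, r=1.0))
```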

This allows the hidden state to drop any information that is later found to be irrelevant, which yields a more compact representation. The update gate, in turn, controls how much information from the previous hidden state is carried over to the current hidden state. This behaves similarly to the memory cell in a Long Short-Term Memory network and helps the RNN remember long-term information.
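A scalar sketch shows how the update gate preserves or overwrites the previous state. It assumes the convention h_t = (1 − z)·h_prev + z·h̃, in which a small z keeps the old value, and for simplicity it holds z fixed across steps, whereas a real GRU recomputes z at every step from the input and state. The helpers `interpolate` and `carry` are hypothetical names used only for illustration.

```python
def interpolate(h_prev, h_tilde, z):
    """GRU state update: h_t = (1 - z) * h_prev + z * h_tilde."""
    return (1.0 - z) * h_prev + z * h_tilde


def carry(h0, candidates, z):
    """Apply the update for each candidate in turn, holding the update gate fixed at z."""
    h = h0
    for h_tilde in candidates:
        h = interpolate(h, h_tilde, z)
    return h


no_new_content = [0.0] * 50  # fifty steps whose candidate activation is zero
print(carry(0.8, no_new_content, z=0.001))  # gate nearly closed: about 0.76, i.e. 0.8 * 0.999**50
print(carry(0.8, no_new_content, z=0.9))    # gate open: the old value is overwritten almost immediately
```

With the gate nearly closed, most of the original value survives fifty steps, which is the long-term memory behaviour described above.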

The activation of the GRU at time t is a linear interpolation between the previous activation and the candidate activation, where the update gate decides how much the unit updates its activation, or content.
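For reference, the gates and the interpolation can be written out as below. This follows the convention of Chung et al. (2014), in which z_t weights the candidate activation; other references, including Cho et al. (2014), swap the roles of z_t and 1 − z_t, which describes the same model with the gate inverted. Here σ is the logistic sigmoid and ⊙ is element-wise multiplication; the bias terms b_z, b_r and b are included as an assumption, since some formulations omit them.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
\tilde{h}_t &= \tanh\left(W x_t + U\,(r_t \odot h_{t-1}) + b\right) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
```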

Advantages of Gated Recurrent Units

Gated Recurrent Units can improve the memory capacity of a recurrent neural network while keeping the model relatively easy to train. Their gating also helps mitigate the vanishing gradient problem in recurrent neural networks. GRUs have been used in various applications, including speech signal modelling, machine translation and handwriting recognition, among others.

How To Learn GRU

To learn and understand the Gated Recurrent Unit, you should first understand the concepts of recurrent neural networks and Long Short-Term Memory. It also helps to understand how sophisticated recurrent units differ from vanilla recurrent neural networks and how each of them works. Some resources for learning these concepts are listed below: