Last week, at the Google I/O developer conference, the company announced that it would adopt a new 10-shade skin tone scale created in partnership with Harvard professor Ellis Monk, himself a person of colour. Known as the Monk Skin Tone Scale, or MST, the scale is designed to replace outdated measures like the Fitzpatrick scale, which tech companies have used to classify skin tones in computer vision algorithms. The Fitzpatrick scale, created for dermatology in the 1970s, includes only six skin tones.

Image: The 10-point Monk Skin Tone Scale. Source: Ellis Monk / Google

New scale, more diverse datasets

Google has said that the scale will help it build more inclusive datasets and then evaluate whether a model or product is fair and works well across a range of skin tones. The Monk Skin Tone Scale could have a wide range of applications, including health apps that detect skin cancer, facial recognition software and the computer vision systems used by self-driving vehicles.
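To make the idea of evaluating a model across skin tones concrete, here is a minimal Python sketch. It assumes each test image has already been labelled with an MST shade from 1 to 10 and that we know whether the model's prediction for it was correct. The function names and the sample data are hypothetical and purely illustrative; this is not Google's evaluation method.

```python
from collections import defaultdict


def accuracy_by_tone(records):
    """Return accuracy per MST shade.

    records: iterable of (mst_tone, is_correct) pairs, where mst_tone is an
    integer from 1 to 10 (an assumed labelling scheme for this sketch).
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for tone, is_correct in records:
        if not 1 <= tone <= 10:
            raise ValueError(f"MST tone must be between 1 and 10, got {tone}")
        totals[tone] += 1
        correct[tone] += int(is_correct)
    # Only shades that actually appear in the data are reported.
    return {tone: correct[tone] / totals[tone] for tone in sorted(totals)}


def accuracy_gap(per_tone):
    """Difference between the best-served and worst-served shade."""
    return max(per_tone.values()) - min(per_tone.values())


# Hypothetical results: (MST shade, was the model's prediction correct?)
sample = [
    (1, True), (1, True),
    (5, True), (5, False),
    (10, False), (10, True), (10, False), (10, False),
]

per_tone = accuracy_by_tone(sample)
print(per_tone)
print(accuracy_gap(per_tone))
```

A large gap between shades would suggest the model serves some skin tones worse than others. Shades missing from the results point to a gap in the dataset itself.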

We’re making the Monk Scale available for anyone to use as a more representative skin-tone guide in research and product development. Our goal is to improve upon the scale over time in partnership with the industry. #GoogleIO ↓ https://t.co/0ZtPoWvW3s

— Google (@Google) May 11, 2022

Monk, an assistant professor of sociology at Harvard, said that he settled on the number of shades after a decade of research on skin tones. The final ten tones were chosen to balance diversity against ease of use. Google has also launched a website, skintone.google, and has open-sourced the scale. The company stated that the Monk Scale would serve as a standard across its products, from Google Photos to Google Search. Last year, with the Pixel 6, Google introduced a camera feature called ‘Real Tone’ to render skin tones as accurately as possible. At Google I/O this year, it launched a new set of Real Tone filters, which are designed to work well with all skin tones and were evaluated using the MST.

Source: Google AI

Controversial past

Anyone who has followed Google’s sketchy history with racial bias in AI is probably aware of the damage it has done to the company’s reputation. While initial reports of the tech giant’s bias problem started emerging around 2018, Google’s decision in 2020 to force out Timnit Gebru, the co-lead of its ethical AI team, has kept the company under fire ever since.

Gebru, along with Margaret Mitchell, another member of Google’s ethical AI team, had co-written a paper titled ‘On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?’ The study examined the risks associated with large language models such as BERT, GPT-2 and GPT-3, which are backed by big tech companies, including Google. Earlier research co-authored by Gebru had found that facial recognition was less accurate at identifying women and people of colour. The unceremonious firing of the two researchers prompted letters of protest from more than 1,400 Google employees.

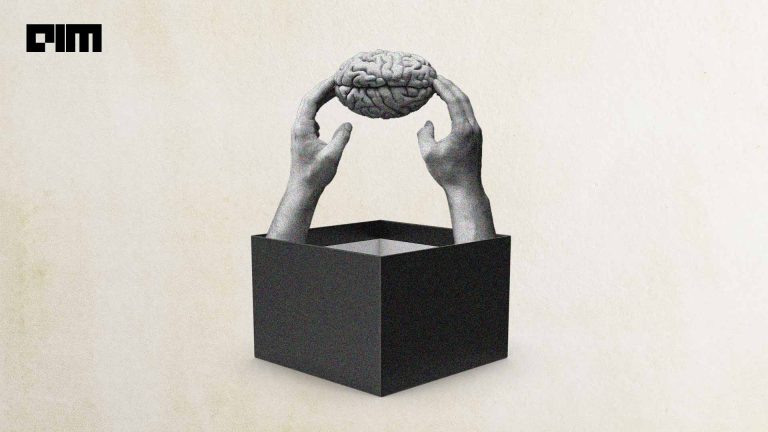

A closer look at the company drew attention to its lack of diversity. In June of last year, a group of senators, including Cory Booker, Ron Wyden and Mark Warner, wrote a letter to CEO Sundar Pichai stating their concerns about bias and discrimination in the workplace.

Source: Google

Google’s 2021 diversity report revealed a chasm between white employees and employees of colour at the company. Only 4.4 per cent of Google’s US employees were black. Despite the calls for attention, Google’s improvement in this regard has been painfully slow: in 2020, the diversity report noted that only 3.7 per cent of the total workforce was black, while in 2013 the figure was 2.2 per cent.

In March this year, a former employee sued the company, claiming that black employees were discriminated against, placed in low-level jobs that underpaid them and denied opportunities to advance within the company. The plaintiff, who worked at the company from 2014 until 2020, said that black employees weren’t considered ‘qualified’ enough and were deliberately asked tougher questions. She had been responsible for initiating several programs to recruit more black employees. When she began raising these issues in team meetings, she was penalised with a pay cut and eventually fired in September 2020.

The launch of the Monk Skin Tone Scale is but a small step towards being more inclusive of different races. Globally, diversity remains a challenging issue, since Google’s search results will also have to adapt to the part of the world in which they are shown. Dermatologist Roxana Daneshjou from Stanford Medicine reacted to the announcement, noting, “At some point, maybe our data diversity will improve so much that even a 10 point scale won’t be granular enough. Remember, human skin tones are along a continuum after all.”