Google researchers have recently unveiled an update to their Universal Speech Model (USM), part of an effort to support 1,000 languages. The researchers say the model outperforms OpenAI's Whisper across all segments of automatic speech recognition. The update also means better YouTube captions.

Researchers can request access to the USM API here.

The paper, ‘Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages’, shows that pre-training the model’s encoder on a large unlabelled multilingual dataset and then fine-tuning it on a smaller set of labelled data enables recognition of under-represented languages. Moreover, the training process adapts effectively to new languages and data.

The researchers demonstrated the effectiveness of the pre-trained encoder by fine-tuning it on YouTube Captions’ multilingual speech data. Despite YouTube’s limited supervised data, the model achieves a word error rate (WER) of less than 30% on average across 73 languages, a milestone never achieved before. On the 18 languages used for the comparison, the model has, on average, a 32.7% lower relative WER than Whisper (large-v2), which was trained with more than 400k hours of labelled data. USM also outperforms Whisper across all segments of automatic speech recognition.
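To make the two metrics above concrete, here is a minimal Python sketch of how word error rate and a relative WER reduction are commonly computed. It is not the paper's evaluation code: the function names are hypothetical, and it assumes simple whitespace tokenisation with no text normalisation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


def relative_wer_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer is lower than baseline_wer."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer
```

For instance, `relative_wer_reduction(0.20, 0.1346)` gives roughly 0.327, that is, a 32.7% relative reduction. These two WER values are invented to show the arithmetic and are not figures from the paper.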

The 1,000 Languages Initiative, launched last November, aims to build a machine learning model that supports the world’s thousand most-spoken languages for better global inclusivity. However, some of these languages are spoken by fewer than twenty million people, so the principal challenge is to figure out how to support languages with few speakers or limited available data.

The USM is a family of speech models with two billion parameters, trained on a vast dataset of 12 million hours of speech and 28 billion sentences of text covering over 300 languages. The models are used in YouTube (for closed captions) and can perform automatic speech recognition not only on widely spoken languages, but also on under-resourced languages such as Amharic, Cebuano, Assamese, and Azerbaijani.

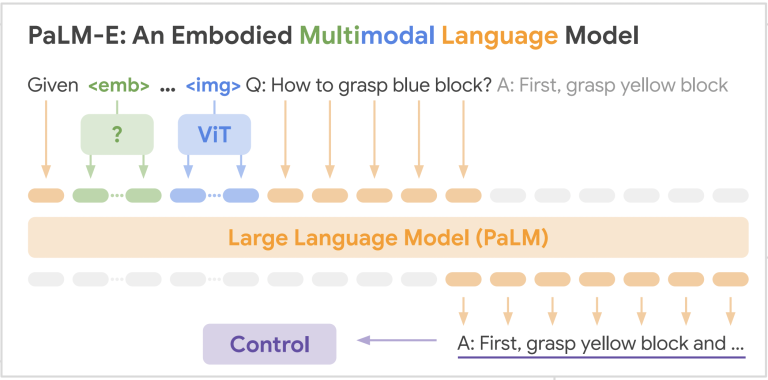

The updated model uses the standard encoder-decoder architecture. The Conformer, or convolution-augmented transformer, is used as the encoder. Its key component is the Conformer block, which consists of attention, feed-forward, and convolutional modules. The encoder takes the log-mel spectrogram of the speech signal as input and performs a convolutional sub-sampling, after which a series of Conformer blocks and a projection layer are applied to obtain the final embeddings.
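The sub-sampling step in front of the Conformer blocks can be illustrated with a toy example. The sketch below assumes the sub-sampling is a strided convolution over time and, as a simplification, uses one fixed kernel shared by every feature dimension, whereas a real encoder learns its kernels. The function name, kernel values, and stride are all hypothetical and are not taken from the paper.

```python
def conv_subsample(frames, kernel, stride):
    """Strided 1-D convolution over time with no padding.

    frames: list of feature vectors, one per time step.
    kernel: one weight per time offset, shared by all features
            (a simplification chosen for this sketch).
    stride: number of input frames to advance per output frame.
    """
    width = len(kernel)
    feature_dim = len(frames[0])
    output = []
    for start in range(0, len(frames) - width + 1, stride):
        window = frames[start:start + width]
        # Each output feature is a weighted sum over the time window.
        output.append([
            sum(w * frame[d] for w, frame in zip(kernel, window))
            for d in range(feature_dim)
        ])
    return output


# 16 input frames with a single feature each.
frames = [[float(t)] for t in range(16)]
kernel = [0.25, 0.5, 0.25]

once = conv_subsample(frames, kernel, stride=2)   # 7 frames remain
twice = conv_subsample(once, kernel, stride=2)    # 3 frames remain
```

Applying the operation twice with a stride of 2 shortens the sequence by roughly a factor of four, which reduces the number of time steps the attention modules in the Conformer blocks have to process.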

The model’s training starts with self-supervised learning on speech audio covering hundreds of languages. This stage uses BEST-RQ, which is efficient on multilingual tasks when working with very large amounts of unsupervised audio data.
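The core idea behind BEST-RQ is a random-projection quantizer: a randomly initialised, frozen projection and codebook turn each speech frame into a discrete label, and the encoder is trained to predict the labels of masked frames. The pure-Python sketch below illustrates only that labelling step, under assumptions of our own: the dimensions, the Gaussian initialisation of both matrices, and all function names are hypothetical, and the encoder and its training loop are omitted.

```python
import random


def make_quantizer(feature_dim, code_dim, codebook_size, seed=0):
    """Create a random projection matrix and codebook; neither is ever trained."""
    rng = random.Random(seed)
    projection = [[rng.gauss(0, 1) for _ in range(feature_dim)]
                  for _ in range(code_dim)]
    codebook = [[rng.gauss(0, 1) for _ in range(code_dim)]
                for _ in range(codebook_size)]
    return projection, codebook


def _normalize(vector):
    """Scale a vector to unit L2 norm (zero vectors are returned unchanged)."""
    norm = sum(x * x for x in vector) ** 0.5
    return [x / norm for x in vector] if norm > 0 else list(vector)


def quantize(frame, projection, codebook):
    """Return the index of the codebook entry nearest to the projected frame."""
    projected = _normalize(
        [sum(w * x for w, x in zip(row, frame)) for row in projection]
    )

    def squared_distance(code):
        unit_code = _normalize(code)
        return sum((a - b) ** 2 for a, b in zip(projected, unit_code))

    return min(range(len(codebook)), key=lambda k: squared_distance(codebook[k]))


def masked_targets(frames, mask_positions, projection, codebook):
    """Labels the encoder would be asked to predict at the masked positions."""
    return {t: quantize(frames[t], projection, codebook) for t in mask_positions}
```

Because the quantizer is fixed, no separate representation-learning step is needed to produce the targets, which is one reason the approach scales to very large unlabelled audio collections.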

In the second, optional step, the researchers used multi-objective supervised pre-training to incorporate additional text data and improve the model’s quality and language coverage. Whether to include this step depends on whether text data is available, but USM performs best with it.

In the last stage, the model is fine-tuned on the downstream tasks. Thanks to pre-training, it delivers good quality with only a small amount of supervised data from those tasks.