Using GPUs at scale comes with various challenges because recommendation models combine compute-intensive and memory-intensive components. For instance, GPU training performance for state-of-the-art personalised recommendation models is heavily affected by model architecture configurations, such as the number of dense and sparse features or the dimensions of the neural networks. These models often contain large embedding tables that do not fit into limited GPU memory.

Unlike language models, which are typically trained on GPU systems, the majority of deep learning recommendation models are trained on CPU servers. This is because of the large memory capacity and bandwidth requirements of the embedding tables in these models. Across the industry, the memory capacity needed for embedding tables has grown dramatically, from tens of GBs to TBs. At the same time, memory bandwidth usage has also increased quickly with the growing number of embedding tables and their associated lookups.
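To see how embedding tables reach this scale, a back-of-the-envelope estimate helps: a table's footprint is roughly rows × embedding dimension × bytes per element. The sketch below assumes dense fp32 storage (4 bytes per element, no optimizer state) and uses hypothetical table shapes chosen purely for illustration; they are not figures from the paper.

```python
# Back-of-the-envelope memory footprint of embedding tables.
# Assumption: dense fp32 storage (4 bytes per element), no optimizer state.

BYTES_PER_FP32 = 4


def table_bytes(num_rows: int, dim: int, bytes_per_elem: int = BYTES_PER_FP32) -> int:
    """Memory needed to store one embedding table of shape (num_rows, dim)."""
    return num_rows * dim * bytes_per_elem


def total_gib(tables: list[tuple[int, int]]) -> float:
    """Total footprint of several (num_rows, dim) tables, in GiB."""
    return sum(table_bytes(rows, dim) for rows, dim in tables) / 2**30


if __name__ == "__main__":
    # Hypothetical model: 10 tables, 10 million rows each, 64-dimensional.
    small = [(10_000_000, 64)] * 10
    # Hypothetical larger model: 100 tables, 100 million rows each, 128-dimensional.
    large = [(100_000_000, 128)] * 100
    print(f"small model: {total_gib(small):.1f} GiB")
    print(f"large model: {total_gib(large) / 1024:.1f} TiB")
```

Even the small hypothetical model lands in the tens of GiB, on the order of a single GPU's memory, and scaling up the row counts and table counts quickly pushes the total into the TB range.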

According to reports, the compute capacity for recommendation model training in Facebook's data center fleet quadrupled over the last 18 months. More than 50% of all AI training cycles at Facebook are devoted to training deep learning recommendation models.

Leveraging GPUs At Scale

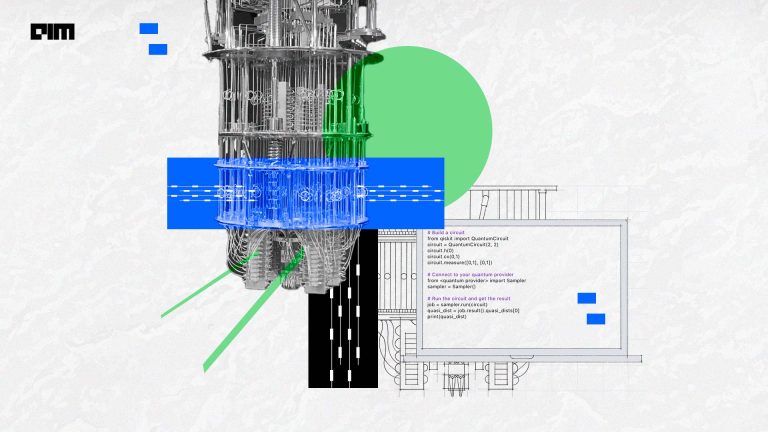

So, how does a large organisation such as Facebook leverage GPUs for recommendation models at scale? To better understand GPU training performance and the underlying training system architectures, the researchers at FAIR investigated the architectural components (shown in red in the figure above) that affect training efficiency.

The rationale behind recommendation model configurations is as follows:

- Features are categorised into two distinct types: dense and sparse. Dense features are scalar inputs, whereas sparse features often encode categorical traits or relevant IDs.

- The computational cost of each dense feature is roughly the same.

- The number of sparse features determines how many embedding tables there will be.

- Hashing is commonly used to limit the size of embedding tables.

(Note: Embeddings make it easier to do machine learning on large inputs like sparse vectors. An embedding captures some of the input’s semantics by placing semantically similar inputs close together in the embedding space, which can then be learned and reused across models.)
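The configuration points above (one embedding table per sparse feature, raw IDs hashed into a fixed number of rows, and a lookup that turns sparse IDs into a dense vector) can be illustrated with a small sketch. It assumes a simple modulo hash, sum pooling over multi-valued features and tiny randomly initialised tables; the class `HashedEmbeddingTable`, the feature names and all sizes are hypothetical, not taken from the paper.

```python
import random


class HashedEmbeddingTable:
    """One embedding table for one sparse feature (hypothetical illustration).

    Raw categorical IDs can be unbounded (e.g. user or item IDs), so they are
    hashed into a fixed number of rows with a modulo. This caps the table at
    num_rows * dim values, at the cost of occasional collisions.
    """

    def __init__(self, num_rows: int, dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.num_rows = num_rows
        self.dim = dim
        self.weights = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(num_rows)]

    def row_index(self, raw_id: int) -> int:
        # The "hashing trick": map an arbitrary ID onto [0, num_rows).
        return raw_id % self.num_rows

    def lookup(self, raw_ids: list[int]) -> list[float]:
        """Sum-pool the embeddings of all IDs present for this feature in one sample."""
        pooled = [0.0] * self.dim
        for raw_id in raw_ids:
            row = self.weights[self.row_index(raw_id)]
            for k in range(self.dim):
                pooled[k] += row[k]
        return pooled


# One table per sparse feature; dense features bypass the tables entirely.
sparse_feature_names = ["user_id", "clicked_item_ids"]
tables = {name: HashedEmbeddingTable(num_rows=1000, dim=8, seed=i)
          for i, name in enumerate(sparse_feature_names)}

sample = {
    "dense": [0.3, 1.2, -0.7],                       # scalar inputs
    "sparse": {"user_id": [987654321],               # single-valued feature
               "clicked_item_ids": [42, 1042, 77]},  # multi-valued feature
}
embedded = [tables[name].lookup(ids) for name, ids in sample["sparse"].items()]
```

In this example, item IDs 42 and 1042 land in the same row; that collision is the price hashing pays for keeping each table at a fixed 1,000 rows regardless of how many distinct IDs appear.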

For their experiments, the researchers used Facebook's 8-GPU training system, Big Basin, and a new prototype platform called Zion.

The researchers observed that training recommendation models exhibits both data parallelism and model parallelism. They wrote that while training deep learning recommendation models on CPUs offers a memory capacity advantage, it leaves unexploited a large degree of parallelism in the training process, which could be unlocked by using accelerators.

Currently, to exploit data parallelism in production, each trainer server holds a copy of the model parameters, reads its own mini-batches from reader servers and performs Elastic-Averaging SGD (EASGD) updates with the central dense parameter server. Within a trainer, Hogwild! is used to parallelise training across threads.
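The elastic-averaging idea can be sketched in a few lines. This is a simplified, single-process simulation of the communication step from the original EASGD formulation: every `tau` local steps, a worker copy and the centre variable are pulled towards each other by a factor `alpha` (which corresponds to learning rate × elastic coefficient in that formulation). The toy quadratic objective, the noise model standing in for mini-batches and all hyperparameters are assumptions for illustration, not Facebook's production setup.

```python
import random

# Toy objective f(x) = 0.5 * ||x - TARGET||^2 (hypothetical).
TARGET = [1.0, -2.0, 0.5]


def noisy_grad(x, rng, noise=0.1):
    """Gradient of the toy objective plus noise, standing in for a mini-batch gradient."""
    return [xi - ti + rng.gauss(0.0, noise) for xi, ti in zip(x, TARGET)]


def elastic_exchange(worker, center, alpha):
    """One EASGD communication step: worker and centre move towards each other."""
    diff = [w - c for w, c in zip(worker, center)]
    new_worker = [w - alpha * d for w, d in zip(worker, diff)]
    new_center = [c + alpha * d for c, d in zip(center, diff)]
    return new_worker, new_center


def easgd(num_workers=4, steps=200, tau=4, lr=0.1, alpha=0.2, seed=0):
    """Each worker runs local SGD and syncs elastically with the centre every tau steps."""
    rng = random.Random(seed)
    center = [0.0] * len(TARGET)  # held by the (simulated) parameter server
    workers = [list(center) for _ in range(num_workers)]
    for step in range(1, steps + 1):
        for i in range(num_workers):
            g = noisy_grad(workers[i], rng)
            workers[i] = [w - lr * gi for w, gi in zip(workers[i], g)]
            if step % tau == 0:
                workers[i], center = elastic_exchange(workers[i], center, alpha)
    return center, workers


center, workers = easgd()
```

Unlike plain parameter averaging, the elastic term lets each worker drift from the centre between exchanges, which reduces how often trainers must talk to the parameter server.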

Much of machine learning is about finding the right parameter values that converge towards reasonable predictions. Introduced a decade ago, Hogwild! is a method that helps find those values efficiently by running SGD in parallel.

Naively parallelising basic SGD doesn't help. Stochastic Gradient Descent (SGD) is inherently sequential, which limits its scalability and makes it difficult to parallelise. Earlier parallel approaches could not avoid memory locking, which was needed to stop processes from overwriting each other's updates but degraded performance. Hogwild! drops the locks: processors get equal access to shared memory and can update individual components of it at will, an approach that works well when each update touches only a small part of the parameters.
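A minimal sketch of the lock-free pattern, assuming a toy sparse linear-regression problem: several threads share one parameter list and update it with no lock at all, and each sample touches only three of twenty coordinates, so collisions are rare. The data generator and hyperparameters are hypothetical. Note that CPython's global interpreter lock means these threads interleave rather than run truly in parallel, so this shows the access pattern, not the speed-up.

```python
import random
import threading


def make_sparse_data(n_samples, true_w, nnz=3, seed=0):
    """Noise-free linear regression data where each sample touches only `nnz` features."""
    rng = random.Random(seed)
    n_features = len(true_w)
    data = []
    for _ in range(n_samples):
        idx = rng.sample(range(n_features), nnz)
        x = {j: rng.uniform(-1.0, 1.0) for j in idx}
        y = sum(true_w[j] * v for j, v in x.items())
        data.append((x, y))
    return data


def hogwild_sgd(data, n_features, n_threads=4, epochs=20, lr=0.1):
    """Lock-free parallel SGD in the style of Hogwild!.

    All threads read and write the same list `w` without any lock. Because each
    sample only touches a few coordinates, threads rarely overwrite each other,
    and an occasional lost update is simply tolerated.
    """
    w = [0.0] * n_features  # shared parameters: deliberately unprotected

    def worker(tid):
        rng = random.Random(tid)
        shard = data[tid::n_threads]  # slicing gives each thread its own list to shuffle
        for _ in range(epochs):
            rng.shuffle(shard)
            for x, y in shard:
                err = sum(w[j] * v for j, v in x.items()) - y
                for j, v in x.items():
                    w[j] -= lr * err * v  # update only the coordinates this sample uses

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return w


true_w = [0.5 * (j % 5) - 1.0 for j in range(20)]
data = make_sparse_data(2000, true_w)
w = hogwild_sgd(data, n_features=len(true_w))
```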

According to the researchers, an alternative is to train recommendation models on Facebook's Big Basin GPU servers, which were initially designed for non-recommendation AI workloads. The Big Basin architecture features 8 NVIDIA Tesla GPUs, each delivering 15.7 teraflops of single-precision floating-point arithmetic. Zion, by contrast, offers much larger memory bandwidth and CPU compute capacity, with ∼2 TB of system memory and ∼1 TB/s of memory bandwidth.

Embedding tables can be distributed among GPUs using different partitioning strategies, such as table-wise or row-wise partitioning. For GPU servers like Big Basin, storing the embedding tables on the GPUs allows all model operations to run on the GPUs, minimising CPU usage and CPU-GPU copy operations. “Storing the embedding tables on the system memory of the CPUs of the GPU server would be a good option for servers with large system memory,” explained the researchers. They also describe a hybrid alternative in which some embedding tables are stored on the GPUs and the rest in system memory, which helps when the embedding tables do not fit in GPU memory.
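These placement options can be made concrete with a small planner. This is a hypothetical greedy heuristic, not the partitioning algorithm used in the paper: whole tables are assigned (table-wise) to the GPU with the most free memory, and any table that fits on no GPU spills to host system memory, giving a hybrid layout. A second helper shows row-wise partitioning, which splits one table's rows across GPUs. All table sizes and the GPU capacity are made-up numbers.

```python
def plan_table_wise(table_sizes_gb: dict[str, float], num_gpus: int,
                    gpu_capacity_gb: float) -> dict[str, str]:
    """Greedy table-wise placement with spill-over to host memory (hypothetical heuristic).

    Largest tables are placed first, each on the GPU with the most free memory.
    If a table does not fit there, it fits on no GPU, so it stays in system memory.
    """
    free = [gpu_capacity_gb] * num_gpus
    placement: dict[str, str] = {}
    for name, size in sorted(table_sizes_gb.items(), key=lambda kv: kv[1], reverse=True):
        gpu = max(range(num_gpus), key=lambda g: free[g])
        if size <= free[gpu]:
            free[gpu] -= size
            placement[name] = f"gpu{gpu}"
        else:
            placement[name] = "host"
    return placement


def row_wise_shards(num_rows: int, num_gpus: int) -> list[range]:
    """Split one table's rows into contiguous, near-equal shards, one per GPU."""
    base, extra = divmod(num_rows, num_gpus)
    shards, start = [], 0
    for g in range(num_gpus):
        end = start + base + (1 if g < extra else 0)
        shards.append(range(start, end))
        start = end
    return shards


if __name__ == "__main__":
    example_tables = {"t0": 20.0, "t1": 12.0, "t2": 10.0, "t3": 6.0, "t4": 40.0}
    print(plan_table_wise(example_tables, num_gpus=2, gpu_capacity_gb=16.0))
    print(row_wise_shards(10, 3))
```

Tables left on the host avoid the GPU capacity limit but need CPU-side lookups and CPU-GPU copies on every iteration; row-wise sharding instead lets a single oversized table span several GPUs at the cost of gathering rows across devices.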

Though building recommendation models is still challenging, the researchers offer a fresh perspective by isolating the components that play a crucial role in training efficiency:

- different levels of CPU and memory capacity,

- memory and network bandwidth requirements,

- dense and sparse features,

- batch sizes,

- embedding table hash sizes, and

- MLP (neural network) dimensions.
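A rough cost model shows how these knobs pull on different resources: the number of sparse features, lookups per feature and embedding dimension drive embedding memory traffic, hash sizes drive memory capacity, and MLP dimensions drive arithmetic. The sketch below is a hypothetical back-of-the-envelope estimate (fp32 storage, forward pass only, a single MLP over the dense features, one multiply-add counted as two FLOPs); the `RecModelConfig` fields and the example values are assumptions, not the performance model used in the paper.

```python
from dataclasses import dataclass

BYTES = 4  # fp32


@dataclass
class RecModelConfig:
    """Hypothetical knobs of a recommendation model with embedding tables and an MLP."""
    batch_size: int
    num_dense: int          # number of dense (scalar) features = MLP input width
    num_sparse: int         # number of sparse features = number of embedding tables
    hash_size: int          # rows per embedding table after hashing
    emb_dim: int            # embedding dimension
    pooling_factor: float   # average IDs looked up per sparse feature per sample
    mlp_dims: list[int]     # output widths of the MLP layers


def embedding_capacity_bytes(cfg: RecModelConfig) -> int:
    """Memory needed to hold all embedding tables."""
    return cfg.num_sparse * cfg.hash_size * cfg.emb_dim * BYTES


def embedding_read_bytes_per_batch(cfg: RecModelConfig) -> float:
    """Bytes read from the tables per batch: every looked-up row is read once."""
    return cfg.batch_size * cfg.num_sparse * cfg.pooling_factor * cfg.emb_dim * BYTES


def mlp_flops_per_batch(cfg: RecModelConfig) -> int:
    """Forward FLOPs of the MLP: 2 * d_in * d_out per layer per sample."""
    dims = [cfg.num_dense] + cfg.mlp_dims
    per_sample = sum(2 * d_in * d_out for d_in, d_out in zip(dims, dims[1:]))
    return cfg.batch_size * per_sample


if __name__ == "__main__":
    cfg = RecModelConfig(batch_size=1024, num_dense=13, num_sparse=26, hash_size=1_000_000,
                         emb_dim=64, pooling_factor=1.0, mlp_dims=[512, 256, 64])
    print(f"table capacity: {embedding_capacity_bytes(cfg) / 1e9:.2f} GB")
    print(f"embedding reads per batch: {embedding_read_bytes_per_batch(cfg) / 1e6:.2f} MB")
    print(f"MLP GFLOPs per batch: {mlp_flops_per_batch(cfg) / 1e9:.3f}")
```

In this model, raising the hash size grows only capacity, more sparse features or a higher pooling factor grow bandwidth demand, and wider MLP layers grow compute; the batch size scales both traffic and FLOPs, which is why no single hardware system is best for every configuration.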

Key Takeaways

- Ever-increasing sizes of deep learning recommendation models, particularly embedding tables, introduce significant system design challenges.

- The most efficient choice of hardware system depends on model parameters such as the number of dense and sparse features, embedding table sizes, feature interaction types, and neural network dimensions.

- Embedding table placement on different hardware systems (CPU vs GPU) requires different strategies.

- The researchers introduce Zion, a next-generation platform for training recommendation models at scale.

With this work, the researchers explore the training efficiency of Facebook's production-scale deep learning recommendation models and offer insights that can guide the design of training infrastructure.
