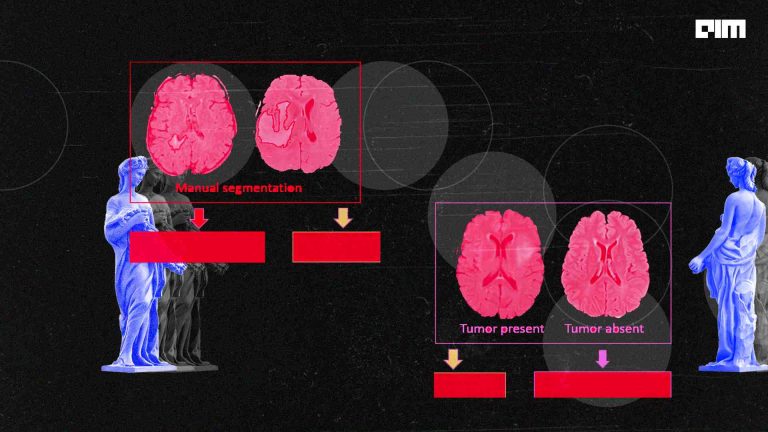

Semantic segmentation is the process of labelling every pixel of an image with one class label. This challenging labelling task answers two questions at once: what is in the image, and where is it located? In this post, let's explore PaddleSeg, an end-to-end image segmentation development toolkit.
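To make "one label per pixel" concrete, here is a minimal NumPy sketch. It assumes a model that outputs one score map per class. The random logits are only a stand-in for real model output, and the 21 classes match the Pascal VOC setup used later in this post.

```python
import numpy as np

# Hypothetical network output: one score map per class, shape (num_classes, H, W)
rng = np.random.default_rng(0)
logits = rng.normal(size=(21, 4, 6))

# Semantic segmentation assigns every pixel the single class with the highest score
label_map = logits.argmax(axis=0)   # shape (H, W), integer class ids in [0, 21)

print(label_map.shape)  # (4, 6)
```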

PaddleSeg

PaddleSeg is an image segmentation framework built on Baidu's PaddlePaddle (PArallel Distributed Deep LEarning). It provides high-performance, efficient, state-of-the-art (SOTA) segmentation models optimized for multi-node and multi-GPU production systems. Its modular design makes it easy to experiment with and build complex models through configuration files and API calls. To get a feel for the library, let's build an Asymmetric Non-local Neural Network (ANN).

Non-local Neural Networks

Convolutional neural networks have long dominated semantic segmentation, but because each convolution only sees a local neighbourhood, they struggle to capture non-local (long-range) dependencies, which limits their performance. Non-local filtering alleviates this problem: it broadens the receptive field of each neuron so that the model can capture non-local dependency patterns. It also reduces the need for very deep models, and stacking non-local blocks supports multi-hop dependency modelling. Following is the architecture of the non-local block.

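This looks similar to a self-attention layer; in fact, the non-local block is a more general form of self-attention. The non-local filtering operation is expressed mathematically as

$$y_i = \frac{1}{\mathcal{C}(x)} \sum_{\forall j} f(x_i, x_j)\, g(x_j)$$

where i indexes an output position, j runs over all positions, f computes a pairwise affinity between two positions, g computes a representation of the input at position j, and $\mathcal{C}(x)$ is a normalization factor.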

Several functions can be used for f and g. If f is the dot product of the embedded features $\theta(x_i) = W_\theta x_i$ and $\phi(x_j) = W_\phi x_j$, normalized with a softmax, the block becomes a self-attention layer:

$$y = \mathrm{softmax}\left(x^\top W_\theta^\top W_\phi\, x\right) g(x)$$
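A minimal NumPy sketch of this softmax dot-product form, under a few assumptions: plain matrices stand in for the 1×1 convolutions a real network would use, features are flattened to one row per pixel, and an output projection w_z is added back onto the input as the residual connection. All function and parameter names here are illustrative.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def non_local_block(x, w_theta, w_phi, w_g, w_z):
    """Softmax dot-product non-local block on a flattened feature map.

    x:       (N, C) features, one row per pixel (N = H * W)
    w_theta: (C, C') query projection, w_phi: (C, C') key projection
    w_g:     (C, C') value projection, w_z: (C', C) output projection
    """
    theta = x @ w_theta              # (N, C') embedded queries
    phi = x @ w_phi                  # (N, C') embedded keys
    g = x @ w_g                      # (N, C') values g(x)
    attn = softmax(theta @ phi.T)    # (N, N) pairwise affinities, each row sums to 1
    y = attn @ g                     # (N, C') weighted sum of values for every pixel
    return y @ w_z + x               # residual connection back onto the input

rng = np.random.default_rng(0)
H, W, C, Cp = 4, 5, 8, 4             # tiny feature map with C' = 4 embedding channels
x = rng.normal(size=(H * W, C))      # N = 20 pixels
w_theta, w_phi, w_g = (rng.normal(size=(C, Cp)) for _ in range(3))
w_z = rng.normal(size=(Cp, C))
z = non_local_block(x, w_theta, w_phi, w_g, w_z)
print(z.shape)  # (20, 8)
```

Note that attn has one entry per pair of pixels, which is where the cost discussed next comes from.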

Other choices of f include the plain Gaussian kernel (without embeddings), the dot product without softmax, and concatenation. The block's output is added to its input through a residual connection, so it can be inserted into existing architectures without breaking their original structure. All of these benefits come at a cost, though: computational complexity that is quadratic in the number of pixels.
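To put "quadratic" into numbers, here is a back-of-the-envelope calculation. It assumes a single float32 affinity matrix for one 128 × 256 feature map and one block; batch size and intermediate buffers would multiply this.

```python
# Size of the N x N affinity matrix a non-local block materializes
H, W = 128, 256                 # example feature-map size
N = H * W                       # number of pixels = 32768
entries = N * N                 # one affinity value per pair of pixels
bytes_fp32 = entries * 4        # 4 bytes per float32 value
print(N, entries, bytes_fp32 / 2**30)   # 32768 1073741824 4.0
```

That is 4 GiB for the affinity matrix alone.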

Asymmetric Non-local Neural Network

ANN is an image segmentation model that uses asymmetric versions of the non-local block to reduce the model's complexity.

The two most prominent components of the ANN architecture are the Asymmetric Fusion Non-local Block (AFNB) and the Asymmetric Pyramid Non-local Block (APNB). Let's take a closer look at each of them.

Asymmetric Pyramid Non-Local Block

From a computational standpoint, the non-local block boils down to the following matrix multiplications.

Here N = H × W is the number of pixels and C is the length of each pixel's representation. The underlined multiplications are quadratic in N, and since N is large for images, the non-local block is memory- and compute-intensive. By sampling the matrices and performing the multiplications on the samples instead of on all pixels, we can reduce the complexity substantially while still capturing more context than a routine convolution.
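The asymmetric idea can be sketched in NumPy. Every pixel still acts as a query, but the keys and values come from only S sampled positions. The affinity matrix is therefore N × S instead of N × N, and the cost drops from O(N²C) to O(NSC). The strided subsampling below is a hypothetical stand-in sampler chosen for simplicity; it is not the strategy ANN uses.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sampled_attention(queries, keys, values):
    """queries: (N, C'); keys, values: (S, C') with S much smaller than N."""
    attn = softmax(queries @ keys.T)   # (N, S) affinities instead of (N, N)
    return attn @ values               # (N, C'): every pixel still gets an output

rng = np.random.default_rng(0)
H, W, Cp = 32, 64, 16
feat = rng.normal(size=(H, W, Cp))
queries = feat.reshape(-1, Cp)                  # all N = 2048 pixels act as queries
sampled = feat[::8, ::8].reshape(-1, Cp)        # hypothetical sampler: every 8th pixel, S = 32
out = sampled_attention(queries, sampled, sampled)
print(queries.shape, sampled.shape, out.shape)  # (2048, 16) (32, 16) (2048, 16)
```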

Keys and values are sampled when computing attention. In the original implementation, the authors reuse the same parameters for keys and values, making them essentially the same. The problem then comes down to choosing a robust sampling strategy: one that reduces the dimensions as much as possible while preserving performance. Pyramid pooling strikes a good balance in this trade-off.
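Pyramid pooling used as a sampler can be sketched in NumPy as follows. The sketch assumes adaptive average pooling to 1×1, 3×3, 6×6 and 8×8 grids; these sizes are an assumption here. Each grid cell becomes one key/value sample, so the number of samples S stays fixed no matter how large the feature map is.

```python
import numpy as np

def pyramid_pool(feat, sizes=(1, 3, 6, 8)):
    """Adaptive average pooling of an (H, W, C) map to each s x s grid; returns (S, C), S = sum(s*s)."""
    H, W, C = feat.shape
    cells = []
    for s in sizes:
        for i in range(s):
            h0, h1 = (i * H) // s, -(-((i + 1) * H) // s)    # floor(i*H/s), ceil((i+1)*H/s)
            for j in range(s):
                w0, w1 = (j * W) // s, -(-((j + 1) * W) // s)
                cells.append(feat[h0:h1, w0:w1].mean(axis=(0, 1)))   # one averaged sample per cell
    return np.stack(cells)

rng = np.random.default_rng(0)
feat = rng.normal(size=(32, 64, 16))
samples = pyramid_pool(feat)
print(samples.shape)   # (110, 16): 1 + 9 + 36 + 64 cells, independent of H and W
```

The 1×1 cell is simply the global average of the feature map.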

Pooling is a parameter-free operation, so this strategy adds no learnable parameters to the block. For an input of size 128 × 256, it reduces the number of computations by up to 300 times. Putting all of this together, the architecture of the APNB block is as follows.
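Where does "up to 300 times" come from? Here is a quick check, assuming pooling output sizes of 1, 3, 6 and 8. The affinity matrix shrinks from N × N to N × S, so the number of multiplications falls by a factor of N / S.

```python
sizes = (1, 3, 6, 8)                 # assumed pyramid pooling output sizes
S = sum(s * s for s in sizes)        # sampled key/value points: 1 + 9 + 36 + 64 = 110
N = 128 * 256                        # pixels in a 128 x 256 input = 32768
print(S, N, round(N / S, 1))         # 110 32768 297.9
```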

Asymmetric Fusion Non-Local Block

AFNB is a variation of APNB. It aims to improve segmentation performance by fusing features from different levels of the network. It achieves this fusion simply by taking the queries from high-level feature maps while extracting the keys and values from low-level feature maps.

Here $X_h$ is the input from the high-level feature maps and $X_l$ is the input from the low-level feature maps. The final output $Y_F$ is calculated using the following equations:

Pyramid sampling is applied in the same way as in APNB, but there is no parameter sharing here. The final architecture of AFNB is as follows.
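A simplified NumPy sketch of the AFNB attention step looks like this. Several assumptions apply. Plain matrices stand in for 1×1 convolutions, with no batch norm or activations. The pooling sizes 1, 3, 6 and 8 are assumed. How the result is merged back with $X_h$ in the full model is left out. Keys and values get separate weight matrices, reflecting the absence of parameter sharing.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def pyramid_pool(feat, sizes=(1, 3, 6, 8)):
    """Adaptive average pooling of an (H, W, C) map at several grid sizes -> (S, C) samples."""
    H, W, C = feat.shape
    cells = []
    for s in sizes:
        for i in range(s):
            h0, h1 = (i * H) // s, -(-((i + 1) * H) // s)
            for j in range(s):
                w0, w1 = (j * W) // s, -(-((j + 1) * W) // s)
                cells.append(feat[h0:h1, w0:w1].mean(axis=(0, 1)))
    return np.stack(cells)

def afnb_attention(x_high, x_low, w_q, w_k, w_v, w_out):
    """Queries from the high-level map; keys and values from the pooled low-level map.

    x_high: (H, W, C_h), x_low: (H', W', C_l); w_q: (C_h, D);
    w_k, w_v: (C_l, D) separate (unshared) weights; w_out: (D, C_out).
    """
    H, W, C_h = x_high.shape
    queries = x_high.reshape(-1, C_h) @ w_q          # (N, D): one query per high-level pixel
    sampled = pyramid_pool(x_low)                    # (S, C_l): S = 110 for the default sizes
    keys, values = sampled @ w_k, sampled @ w_v      # (S, D) each
    fused = softmax(queries @ keys.T) @ values       # (N, S) affinities -> (N, D)
    return (fused @ w_out).reshape(H, W, -1)         # back to a spatial map

rng = np.random.default_rng(0)
x_high, x_low = rng.normal(size=(16, 32, 64)), rng.normal(size=(16, 32, 24))
D = 8
out = afnb_attention(x_high, x_low, rng.normal(size=(64, D)), rng.normal(size=(24, D)),
                     rng.normal(size=(24, D)), rng.normal(size=(D, 64)))
print(out.shape)  # (16, 32, 64)
```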

PaddleSeg Usage

Installation

PaddleSeg is built on Baidu's deep learning framework, PaddlePaddle, so we first need to install PaddlePaddle:

```
!python -m pip install paddlepaddle-gpu -f https://paddlepaddle.org.cn/whl/mkl/stable.html
```

To install PaddleSeg, we can use pip or clone the repository directly.

```
!pip install paddleseg
```

Data Loading

Let's train the ANN model on the Pascal VOC dataset, whose images are annotated with 21 classes (20 object classes plus background). PaddleSeg handles downloading and decompressing the images and labels.

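```python
from paddleseg.datasets import PascalVOC
import paddleseg.transforms as T

# Resize every image/label pair to 200x200 and normalize the pixel values
transforms = [T.Resize(target_size=(200, 200)), T.Normalize()]

# PaddleSeg downloads and extracts the dataset if it is not found locally
train_data = PascalVOC(dataset_root='data/pascalvoc', transforms=transforms, mode='train')
validation_data = PascalVOC(dataset_root='data/pascalvoc', transforms=transforms, mode='val')
```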
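T.Normalize() is called without arguments above. Assuming its defaults are a mean and a standard deviation of 0.5 per channel, it maps pixel values from [0, 255] to [-1, 1]. The NumPy sketch below shows that arithmetic. It is an illustration under that assumption, not PaddleSeg's code.

```python
import numpy as np

def normalize(im, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)):
    """Scale uint8 HWC pixels to [0, 1], then standardize each channel (assumed defaults)."""
    im = im.astype(np.float32) / 255.0
    return (im - np.array(mean, dtype=np.float32)) / np.array(std, dtype=np.float32)

pixels = np.array([[[0, 0, 0], [255, 255, 255]]], dtype=np.uint8)   # one black, one white pixel
print(normalize(pixels))   # black -> -1.0, white -> 1.0 in every channel
```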

Model Setup

Building a model is very straightforward.

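```python
from paddleseg.models import ANN
from paddleseg.models.backbones import ResNet101_vd

backbone = ResNet101_vd()
# 21 output classes for Pascal VOC; pretrained=None means no pretrained ANN weights are loaded
model = ANN(num_classes=21, backbone=backbone, pretrained=None)
```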

Next, we select an optimizer and the loss functions.

```python
from paddle import optimizer as optim
from paddleseg.models.losses import CrossEntropyLoss

# One loss is for the main output, the other for the auxiliary logits
losses = {'types': [CrossEntropyLoss(), CrossEntropyLoss()], 'coef': [1, 1]}
```
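The losses dictionary pairs each model output with a loss and a coefficient. Conceptually, the training objective is the coefficient-weighted sum of those losses. Here is a NumPy sketch of that idea, assuming plain per-pixel cross-entropy and 255 as the ignore label (a common Pascal VOC convention). It is not PaddleSeg's implementation, and both helper names are hypothetical.

```python
import numpy as np

def pixel_cross_entropy(logits, labels, ignore_index=255):
    """Mean per-pixel cross-entropy. logits: (C, H, W); labels: (H, W) integer class ids."""
    shifted = logits - logits.max(axis=0, keepdims=True)                 # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    valid = labels != ignore_index                                       # skip "ignore" pixels
    rows, cols = np.nonzero(valid)
    return -log_probs[labels[valid], rows, cols].mean()

def combined_loss(logits_list, labels, coefs):
    """Weighted sum with one loss term per model output (main + auxiliary)."""
    return sum(c * pixel_cross_entropy(l, labels) for c, l in zip(coefs, logits_list))

labels = np.array([[0, 1], [2, 255]])      # 255 marks a pixel to ignore
uniform = np.zeros((21, 2, 2))             # equal scores for all 21 classes
print(combined_loss([uniform, uniform], labels, coefs=[1, 1]))   # 2 * ln(21), about 6.089
```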

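```python
# Momentum SGD with a learning rate that decays polynomially from 0.01 to 0 over 1000 steps
optimizer = optim.Momentum(
    optim.lr.PolynomialDecay(0.01, power=0.9, decay_steps=1000, end_lr=0),
    parameters=model.parameters(),
    momentum=0.9,
    weight_decay=4.0e-5)
```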
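The PolynomialDecay schedule lowers the learning rate from 0.01 towards end_lr over decay_steps iterations. Below is a pure-Python sketch of the standard non-cycling polynomial decay formula, using the values above. It is assumed to match Paddle's definition when cycle is off.

```python
def polynomial_decay(step, base_lr=0.01, decay_steps=1000, end_lr=0.0, power=0.9):
    """Learning rate after `step` iterations (no cycling)."""
    step = min(step, decay_steps)                       # the rate stays at end_lr after decay_steps
    return (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr

for step in (0, 500, 1000):
    print(step, round(polynomial_decay(step), 5))       # 0.01, then 0.00536, then 0.0
```

With power=0.9, the rate falls slightly faster than linearly early on and reaches end_lr exactly at decay_steps.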

Training

Training can be done using a single function call.

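```python
from paddleseg.core import train

train(
    model=model,
    train_dataset=train_data,
    val_dataset=validation_data,
    optimizer=optimizer,
    save_dir='output',        # checkpoints and logs are written here
    iters=1000,               # total number of training iterations
    batch_size=4,
    save_interval=200,        # evaluate and save a checkpoint every 200 iterations
    log_iters=10,             # log training metrics every 10 iterations
    losses=losses,
    use_vdl=True)             # log metrics to VisualDL
```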
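Note that iters counts training iterations (batches), not epochs. Here is a quick calculation of how many passes over the training data these settings amount to. It assumes that mode='train' loads the standard Pascal VOC 2012 segmentation training split of 1,464 images.

```python
iters, batch_size = 1000, 4
train_images = 1464                        # assumed size of the VOC 2012 segmentation train split
samples_seen = iters * batch_size          # 4000 images processed in total
print(samples_seen, round(samples_seen / train_images, 2))   # 4000 2.73
```

That is under three epochs, so this configuration is a quick demonstration rather than a full training run.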

The train function also accepts further arguments for finer control over training.

Inference

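```python
import os

from paddleseg.core import predict
from paddleseg.models import ANN
from paddleseg.models.backbones import ResNet101_vd
import paddleseg.transforms as T

# Rebuild the same architecture that was trained (ResNet101_vd backbone) before loading its weights
model = ANN(num_classes=21, backbone=ResNet101_vd())

image_dir = 'data/pascalvoc/VOC2012/JPEGImages'
predict(
    model,
    model_path='/content/model.pdparams',   # trained weights saved by train()
    transforms=T.Compose(transforms),       # predict expects a composed transform, not a plain list
    image_list=[os.path.join(image_dir, f) for f in os.listdir(image_dir)],
    image_dir=image_dir,
    save_dir='output/results')
```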
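predict saves its results under save_dir. One common way to turn a predicted label map into a colour image is the standard PASCAL VOC palette, which spreads the bits of each class id over the R, G and B channels. The NumPy sketch below illustrates that palette. It is not PaddleSeg's own visualization code.

```python
import numpy as np

def voc_colormap(n=256):
    """Standard PASCAL VOC palette: interleave the bits of each class id into R, G and B."""
    cmap = np.zeros((n, 3), dtype=np.uint8)
    for i in range(n):
        r = g = b = 0
        c = i
        for j in range(8):
            r |= ((c >> 0) & 1) << (7 - j)
            g |= ((c >> 1) & 1) << (7 - j)
            b |= ((c >> 2) & 1) << (7 - j)
            c >>= 3
        cmap[i] = (r, g, b)
    return cmap

label_map = np.array([[0, 1], [15, 0]])    # background, aeroplane, person (VOC class ids)
color_mask = voc_colormap()[label_map]     # (H, W, 3) RGB image
print(color_mask[0, 1].tolist(), color_mask[1, 0].tolist())   # [128, 0, 0] [192, 128, 128]
```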