In machine learning, once the data has been acquired, we ask what it is trying to tell us and how its features can be turned into a simpler form that fits the model. Feature engineering is one of the most critical phases in building a good machine learning model. Creating the right features and selecting the ones that give the best results is a challenging task, especially for large datasets. It requires domain knowledge and continuous experimentation, and it can be memory-intensive and time-consuming.

AutoFeat is a Python library that automates feature engineering and feature selection and wraps them in scikit-learn-style models such as AutoFeatRegressor and AutoFeatClassifier. Because it generates and evaluates a large number of candidate features, it can require substantial memory and computing power.

In this article, I’ll discuss how to use AutoFeat, the steps it performs, and its implementation on real-world datasets.

Properties

- Works like scikit-learn models, with methods such as fit(), fit_transform(), predict(), and score().

- Handles categorical features with one-hot encoding.

- Currently supports only supervised learning (regression and classification).

- Provides a FeatureSelector class for selecting suitable features.

- Accepts the physical units of features, so that physically meaningful features can be computed from them.

- Uses the Buckingham Pi theorem to compute dimensionless quantities.

- Works only with tabular data.
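One-hot encoding, mentioned in the list above, turns a categorical column into one 0/1 column per category. The following is a minimal pure-Python sketch of the idea; the helper name one_hot is hypothetical, and this is not AutoFeat's internal code (AutoFeat encodes the columns you pass as categorical_cols for you).

```python
def one_hot(values):
    """Encode a list of category labels as 0/1 columns, one per distinct category."""
    categories = sorted(set(values))  # fixed, reproducible column order
    return {f"is_{c}": [1 if v == c else 0 for v in values] for c in categories}


encoded = one_hot(["red", "green", "red", "blue"])
for name, column in encoded.items():
    print(name, column)
```

Each row has exactly one 1 across the new columns, so no ordering between categories is implied.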

Advantages

- Simple to understand

- Easy to use

- Good for non-statisticians

Disadvantages

- May miss some important features

- Features need to be scaled before using AutoFeat

- Cannot handle missing values
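Because AutoFeat cannot handle missing values and the features should be scaled beforehand, some preprocessing is needed before calling it. The following is a minimal pure-Python sketch, assuming that mean imputation followed by z-score standardization suits your data; prepare_column is a hypothetical helper, and in practice scikit-learn's SimpleImputer and StandardScaler do the same job.

```python
import math


def prepare_column(values):
    """Fill missing entries (None) with the column mean, then standardize to mean 0, std 1."""
    present = [v for v in values if v is not None]
    mean = sum(present) / len(present)
    filled = [mean if v is None else v for v in values]  # mean imputation keeps the mean unchanged
    std = math.sqrt(sum((v - mean) ** 2 for v in filled) / len(filled))
    if std == 0:
        return [0.0 for _ in filled]  # constant column: nothing to scale
    return [(v - mean) / std for v in filled]


print(prepare_column([1.0, None, 3.0, 5.0]))
```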

Steps in AutoFeat

Feature Engineering – The input features are first passed through non-linear transformations (logarithm, exponential, reciprocal, square root, etc.). The transformed and original features are then combined to create more complex features, and the non-linear transformations are applied again. This could be repeated as many times as desired, but the feature space grows very quickly with every iteration, so in practice it is usually stopped after two or three iterations, by which point much of the information in the features has been captured.
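To make the growth of the feature space concrete, here is a toy pure-Python sketch of the transform-then-combine loop. It assumes a small set of transformations and uses only pairwise products as combinations. AutoFeat's actual implementation works on SymPy expressions, supports more operators, and simplifies the results, so this only illustrates the idea.

```python
import math

# (name template, function, condition under which the function is defined)
TRANSFORMS = [
    ("log({})", math.log, lambda x: x > 0),
    ("sqrt({})", math.sqrt, lambda x: x >= 0),
    ("1/{}", lambda x: 1 / x, lambda x: x != 0),
    ("{}^2", lambda x: x ** 2, lambda x: True),
]


def transform_step(features):
    """Keep every feature and add each transformation that is defined for all of its values."""
    new = dict(features)
    for name, values in features.items():
        for template, func, defined in TRANSFORMS:
            if all(defined(v) for v in values):
                new[template.format(name)] = [func(v) for v in values]
    return new


def combine_step(features):
    """Keep every feature and add the product of every pair of features."""
    new = dict(features)
    names = list(features)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            new[f"{a}*{b}"] = [x * y for x, y in zip(features[a], features[b])]
    return new


data = {"x1": [2.0, 3.0, 4.0], "x2": [5.0, 6.0, 7.0]}
step1 = transform_step(data)   # non-linear transformations
step2 = combine_step(step1)    # combinations of transformed and original features
step3 = transform_step(step2)  # transformations applied again
print(len(data), len(step1), len(step2), len(step3))
```

Starting from only two features, one transformation step gives 10 features and one combination step gives 55, which is why stopping after two or three iterations is the usual choice.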

New features are generated with the Python library SymPy, which simplifies the resulting expressions so that redundant features can be removed. Physical quantities are handled with the Python library Pint, which is also used to compute dimensionless features with the Buckingham Pi theorem.
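The Buckingham Pi theorem says that a physically meaningful relationship between n variables built from k independent base dimensions can be rewritten in terms of n − k dimensionless products of those variables. The brute-force sketch below, in plain Python, assumes units are given as exponents of the base dimensions L, M, and T, and searches for products with exponents in {-1, 0, 1} that are dimensionless. The unit table and helper names are hypothetical, and the sketch is only an illustration; Pint's pi_theorem function solves this problem properly.

```python
from itertools import product

# Units expressed as exponents of base dimensions (length L, mass M, time T)
UNITS = {
    "velocity": {"L": 1, "T": -1},
    "length": {"L": 1},
    "time": {"T": 1},
    "mass": {"M": 1},
}


def dimension_of(powers):
    """Base-dimension exponents of the product of feature ** power."""
    total = {}
    for feature, power in powers.items():
        for dim, exponent in UNITS[feature].items():
            total[dim] = total.get(dim, 0) + exponent * power
    return {d: e for d, e in total.items() if e != 0}  # empty dict means dimensionless


def dimensionless_groups(max_power=1):
    """Return every non-trivial product of the features, with small integer powers, that is dimensionless."""
    names = list(UNITS)
    groups = []
    for powers in product(range(-max_power, max_power + 1), repeat=len(names)):
        if any(powers) and not dimension_of(dict(zip(names, powers))):
            groups.append({n: p for n, p in zip(names, powers) if p != 0})
    return groups


groups = dimensionless_groups()
for group in groups:
    print(group)
```

With four variables and three base dimensions, the theorem predicts 4 − 3 = 1 independent group. The search finds velocity · time / length together with its reciprocal.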

Feature Selection – From the large set of generated features, the best ones have to be picked. There are two types of feature selection approaches: univariate (one feature at a time) and multivariate (several features at a time). Multivariate selection is preferred, because univariate selection can add redundant features and miss important ones. For this purpose, AutoFeat integrates the FeatureSelector class. The LassoLarsCV model is used to choose features based on its sparse weights. A ‘noise filtering’ approach is then applied: the model is trained on the candidate features together with added noise features, and only the features whose coefficients exceed those of the noise features are kept.
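The following small pure-Python example illustrates the univariate pitfall described above. It uses the absolute Pearson correlation with the target as the univariate score, which is an assumption made for illustration and not the criterion AutoFeat uses. The target is exactly x1 + x2, yet ranking features one at a time picks x1 and its duplicate and misses x2 entirely.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
features = {"x1": x1, "x1_copy": list(x1), "x2": x2}  # x1_copy is fully redundant
y = [a + b for a, b in zip(x1, x2)]  # the target depends on both x1 and x2

# Univariate selection: score each feature on its own and keep the top two
scores = {name: abs(pearson(col, y)) for name, col in features.items()}
top2 = sorted(scores, key=scores.get, reverse=True)[:2]
print(scores)
print(top2)  # ['x1', 'x1_copy']: the redundant copy is chosen and x2 is missed
```

A multivariate method that fits all features jointly would see that x1_copy adds nothing once x1 is present, and that x2 explains exactly the part of y that x1 cannot.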

Implementation

Installation – pip install autofeat

AutoFeat in Regression

For regression, the AutoFeatRegressor class is used.

Parameters:

- categorical_cols=None, list of categorical columns that will be one-hot encoded.

- feateng_cols=None, list of columns that will be used for feature engineering.

- units=None, dictionary of column units; by default all columns are dimensionless, otherwise each unit is converted to a Pint unit.

- feateng_steps=2, number of feature engineering iterations.

- featsel_runs=5, number of times feature selection is performed, each time on a fraction of the data.

- max_gb=None, maximum memory (in GB) to use during feature engineering.

- transformations=("1/", "exp", "log", "abs", "sqrt", "^2", "^3"), list of transformations to apply.

- apply_pi_theorem=True, whether to apply the Buckingham Pi theorem.

- always_return_numpy=False, whether to always return a numpy.ndarray instead of a pandas.DataFrame.

- n_jobs=1, number of parallel jobs to run.

- verbose=0, verbosity level.
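The max_gb parameter matters because the number of candidate features can become very large during feature engineering. The following back-of-the-envelope sketch assumes features are stored as a dense matrix of 64-bit floats. The helper feature_matrix_gb is hypothetical and ignores library overhead, but it shows how quickly memory adds up.

```python
def feature_matrix_gb(n_rows, n_features, bytes_per_value=8):
    """Approximate size in GB of a dense matrix of 64-bit floats (ignores pandas/numpy overhead)."""
    return n_rows * n_features * bytes_per_value / 1024 ** 3


# 100,000 samples with a growing number of candidate features
for n_features in (100, 10_000, 1_000_000):
    print(n_features, round(feature_matrix_gb(100_000, n_features), 3))
```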

The dataset used for the demonstration is the Boston housing price dataset from the scikit-learn library.

The performance metric used for regression is R^2 (the coefficient of determination), which model.score() returns.
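R^2 compares the model's squared errors with those of a baseline that always predicts the mean. The following is a minimal sketch of the formula in plain Python; r_squared is a hypothetical helper that computes the same quantity as scikit-learn's r2_score.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean) ** 2 for t in y_true)               # total sum of squares
    return 1 - ss_res / ss_tot


print(r_squared([3.0, 5.0, 7.0], [3.0, 5.0, 7.0]))  # 1.0: perfect predictions
print(r_squared([3.0, 5.0, 7.0], [5.0, 5.0, 5.0]))  # 0.0: always predicting the mean
print(r_squared([3.0, 5.0, 7.0], [2.5, 5.0, 7.5]))  # 0.9375
```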

import pandas as pd

from AutoFeat import AutoFeatRegressor

from sklearn.model_selection import train_test_split

from sklearn.datasets import load_boston

import matplotlib.pyplot as plt

data = load_boston()

X = data.data

y= data.target

X_train, X_test, y_train, y_test = train_test_split(X, y,test_size=.3,random_state =0)

model = AutoFeatRegressor()

df = model.fit_transform(X, y)

pred = model.predict(X_test)

print("Final R^2: %.4f" % model.score(df, y))

OUTPUT - Final R^2: 0.9074

Originally X.shape = (506,13) and after transformation df.shape = (506,32)

The column names show the newly formed features.

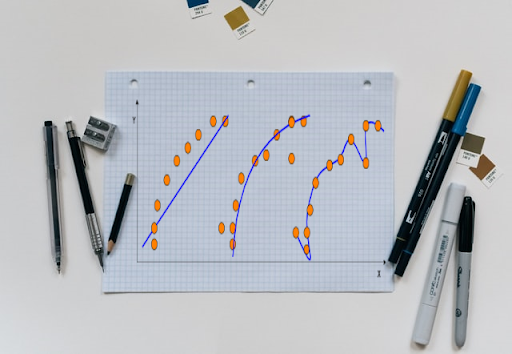

plt.figure() plt.scatter(model.predict(df), y, s=2)

AutoFeat in Classification

For classification, the AutoFeatClassifier class is used; it takes the same parameters as AutoFeatRegressor. The demonstration uses the wine classification dataset from the scikit-learn library, and the performance metric is accuracy, which model.score() returns.
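Accuracy is simply the fraction of predictions that match the true labels. The following is a minimal sketch in plain Python (accuracy is a hypothetical helper that computes the same quantity as scikit-learn's accuracy_score). It also shows that on the 178-sample wine dataset, a score of 0.9944 corresponds to 177 correct predictions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


print(accuracy([0, 1, 2, 2], [0, 1, 1, 2]))                 # 0.75
print(round(accuracy([1] * 177 + [0], [1] * 178), 4))     # 0.9944: one mistake in 178 samples
```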

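```python
from autofeat import AutoFeatClassifier
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split

# Wine data: 178 samples, 13 features, 3 classes
X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# Fit on the training split only, then evaluate on the held-out test split
model = AutoFeatClassifier()
df = model.fit_transform(X_train, y_train)
df_test = model.transform(X_test)
y_pred = model.predict(df_test)
print("Test accuracy: %.4f" % model.score(df_test, y_test))
```

Fitting and scoring the model on all 178 samples instead (model.fit_transform(X, y) followed by model.score(df, y), i.e. an in-sample evaluation) gave:

OUTPUT - Final Accuracy: 0.9944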

AutoFeatModel

Instead of choosing AutoFeatRegressor or AutoFeatClassifier, you can use the AutoFeatModel class and pass the type of problem through its problem_type argument. By default, it is set to regression.

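```python
from autofeat import AutoFeatModel

# problem_type defaults to "regression"; "classification" is the alternative.
# X and y are the arrays loaded in the previous example.
model = AutoFeatModel(problem_type="regression")
df = model.fit_transform(X, y)
print("Final R^2: %.4f" % model.score(df, y))  # in-sample score on the data used for fitting
```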

OUTPUT - Final R^2: 0.8908

Feature Selector

FeatureSelector class provides automatic feature selection. The selected features are returned as a dataframe.

Parameters

- problem_type="regression", the type of problem; it can also be set to "classification".

- featsel_runs=5, number of feature selection runs to perform.

- keep=None, list of features that are always kept.

- n_jobs=1, number of parallel jobs to be run.

- verbose=0, verbosity level.

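```python
import pandas as pd
from autofeat import FeatureSelector
from sklearn.datasets import load_wine

X, y = load_wine(return_X_y=True)
# verbose=1 prints the progress log shown below; problem_type keeps its default, "regression"
fsel = FeatureSelector(verbose=1)
new_X = fsel.fit_transform(pd.DataFrame(X), pd.DataFrame(y))
```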

```
[featsel] Scaling data...done.
[featsel] Feature selection run 1/5
[featsel] Feature selection run 2/5
[featsel] Feature selection run 3/5
[featsel] Feature selection run 4/5
[featsel] Feature selection run 5/5
[featsel] 11 features after 5 feature selection runs
[featsel] 10 features after correlation filtering
[featsel] 8 features after noise filtering
```

In the final noise filtering step, the prediction model is trained with additional noise features, and only the features whose coefficients are higher than those of the noise features are kept.
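The following simplified pure-Python sketch shows the noise-filtering idea. It assumes Gaussian noise columns and uses ordinary least squares, solved with Gauss-Jordan elimination on the normal equations, in place of the model AutoFeat actually trains. The helper names least_squares and noise_filter are hypothetical. A feature survives only if the magnitude of its coefficient beats the largest noise coefficient.

```python
import random


def least_squares(columns, y):
    """Solve the normal equations (X^T X) w = X^T y with Gauss-Jordan elimination."""
    k = len(columns)
    # Augmented matrix [X^T X | X^T y]
    a = [
        [sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)]
        + [sum(p * t for p, t in zip(columns[i], y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))  # partial pivoting
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def noise_filter(features, y, n_noise=5, seed=0):
    """Keep features whose |coefficient| exceeds the largest |coefficient| of random noise features."""
    rng = random.Random(seed)
    names = list(features)
    noise = [[rng.gauss(0, 1) for _ in range(len(y))] for _ in range(n_noise)]
    coefs = least_squares([features[name] for name in names] + noise, y)
    threshold = max(abs(c) for c in coefs[len(names):])
    return [name for name, c in zip(names, coefs) if abs(c) > threshold]


rng = random.Random(42)
n = 200
features = {f"x{i}": [rng.gauss(0, 1) for _ in range(n)] for i in range(4)}
# Only x0 and x1 influence the target; x2 and x3 are irrelevant
y = [3 * a - 2 * b + rng.gauss(0, 0.5) for a, b in zip(features["x0"], features["x1"])]
print(noise_filter(features, y))  # includes 'x0' and 'x1'
```

The data is zero-mean by construction, so the sketch fits without an intercept. Irrelevant features can occasionally slip past a handful of noise columns by chance, which is one reason AutoFeat repeats feature selection over several runs.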

Conclusion

As we progress towards AutoML, ready-to-use libraries like this can prove very handy for business decision-making in production. Although AutoFeat is not state-of-the-art and has certain limitations, it does a fairly good job.

The complete code for the above implementation is available in AIM’s GitHub repository. Please visit this link to find the notebook for this code.