In the current landscape of artificial intelligence, Facebook AI Research (FAIR) is one of the leading contributors of open-source tools, libraries and architectures, such as Flashlight, Opacus, Detectron2 and fastText. fastText, an open-source library for text classification and word representation, is one of these contributions.

Introduction

fastText is an open-source library for efficient text classification and word representation, and we can think of it as an extension of conventional text classification methods. Conventional methods convert words or texts into vectors of numeric values so that a machine learning algorithm can work with the text. fastText goes a step further and can also break each word into several sub-words (character n-grams).

Text classification is the process of assigning a piece of information (such as an email, post, message or tweet) to one or more labels. Labels can be review scores, spam versus non-spam, or the categories a document belongs to.

Word representations are used to improve the performance of text classifiers. A popular idea in current machine learning is to represent each word as a vector; such vectors can capture properties of a language or document, such as analogies between words or sentiment.
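To make the idea of word vectors concrete, here is a minimal sketch that compares words by the cosine similarity of their vectors. The three 3-dimensional vectors are made-up toy values for illustration, not vectors learned by fastText (whose vectors have 100 dimensions by default).

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy vectors, made up for illustration (not learned by fastText).
toy_vectors = {
    "bake": [0.9, 0.1, 0.3],
    "roast": [0.8, 0.2, 0.35],
    "dishwasher": [0.1, 0.9, 0.0],
}

print(cosine_similarity(toy_vectors["bake"], toy_vectors["roast"]))
print(cosine_similarity(toy_vectors["bake"], toy_vectors["dishwasher"]))
```

With these toy values, bake is much closer to roast (roughly 0.99) than to dishwasher (roughly 0.21), which is the kind of relationship trained word vectors are meant to capture.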

This post is a tutorial that introduces the fastText library using Google Colab as our IDE and shows what the whole pipeline looks like. We will build a text classification model: first we install the fastText library, then we download the practice dataset suggested by fastText, train a model and check its predictions, and finally preprocess the data and tune the model to improve its results.


Let's start with installing fastText.

The following code is based on the official fastText repository and documentation.

Installing fastText is easy on UNIX-like (Linux) operating systems. In Google Colab, we will build the fastText command-line binary, which the ./fasttext commands later in this tutorial rely on.

To build it, put the following commands in a cell and run it.

Input:

!git clone https://github.com/facebookresearch/fastText.git

%cd fastText

!make

!cp fasttext ../

%cd ..

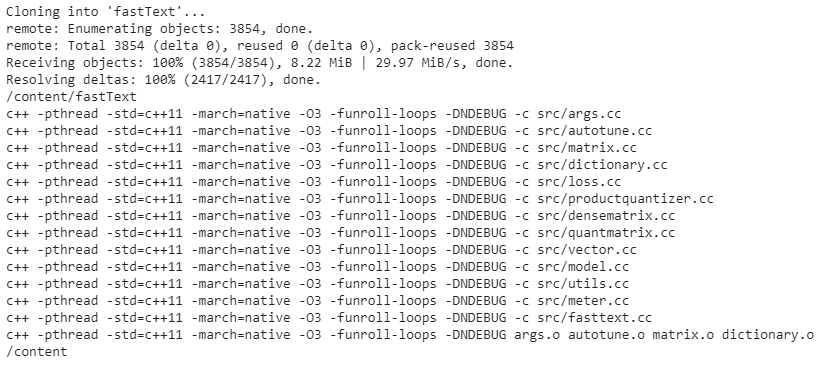

Output:

fastText also provides Python bindings, which we can install directly using pip.

Input :

!pip install fasttext

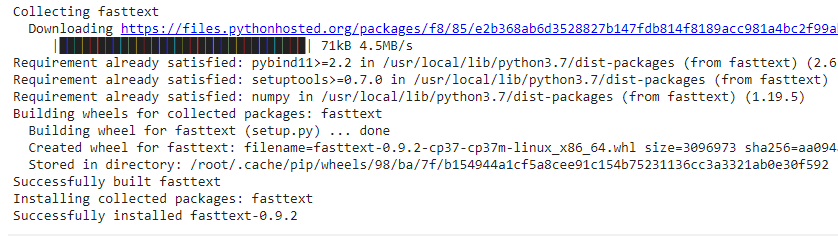

Output :

Gathering the data

After installing fastText, we will gather the data. But before that, let's take a look at the dataset.

The dataset consists of thousands of questions asked on Cooking StackExchange, each tagged with one or more labels, and it is already in fastText format. This format requires each line to contain the labels, each starting with the __label__ prefix, followed by the text to classify.

For example:

__label__apple An apple a day keeps the doctor away.
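A line in this format can be split into its labels and its text with a few lines of Python. This is a minimal sketch, assuming fastText's default __label__ prefix and whitespace-separated tokens; parse_fasttext_line is a hypothetical helper, not part of the fastText API. It treats every token that starts with the prefix as a label, which also covers lines with several labels.

```python
# fastText's default label prefix (configurable with the -label option).
LABEL_PREFIX = "__label__"


def parse_fasttext_line(line, prefix=LABEL_PREFIX):
    """Split one fastText-format line into (labels, text).

    Hypothetical helper: every whitespace-separated token that starts with
    the prefix is treated as a label; the remaining tokens form the text.
    """
    labels, words = [], []
    for token in line.split():
        if token.startswith(prefix):
            labels.append(token[len(prefix):])  # strip the prefix
        else:
            words.append(token)
    return labels, " ".join(words)


print(parse_fasttext_line("__label__apple An apple a day keeps the doctor away."))
print(parse_fasttext_line("__label__baking __label__bread How long should dough rise?"))
```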

In the following steps, we download the dataset and look at some information about it.

Input :

!wget https://dl.fbaipublicfiles.com/fasttext/data/cooking.stackexchange.tar.gz && tar xvzf cooking.stackexchange.tar.gz

Output:

Input:

!head cooking.stackexchange.txt

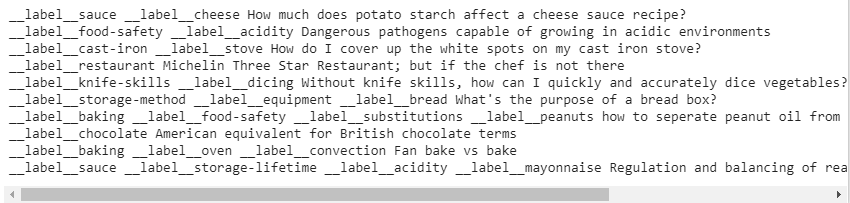

Output:

Input:

!wc cooking.stackexchange.txt

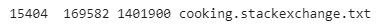

Output:

The outputs above show the format of the dataset and the number of lines in it, which is 15404.

In the following step, we split the dataset into training and validation data.

Input:

!head -n 12404 cooking.stackexchange.txt > cooking.train

!wc cooking.train

!tail -n 3000 cooking.stackexchange.txt > cooking.valid

!wc cooking.valid

Output:

Here we have put the first 12404 lines of cooking.stackexchange.txt into cooking.train and the last 3000 lines into cooking.valid.
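The same split can be done in Python, for example outside Colab. This minimal sketch keeps the last n_valid lines for validation and the rest for training; with 15404 lines and n_valid=3000 it matches the head/tail commands. split_lines and split_file are hypothetical helper names, and, like the shell commands, the sketch does not shuffle the data.

```python
from pathlib import Path


def split_lines(lines, n_valid):
    """Return (train, valid): the last n_valid lines go to validation."""
    if not 0 < n_valid < len(lines):
        raise ValueError("n_valid must be between 1 and len(lines) - 1")
    cut = len(lines) - n_valid
    return lines[:cut], lines[cut:]


def split_file(source, train_path, valid_path, n_valid=3000):
    """Hypothetical helper mirroring the head/tail commands (no shuffling)."""
    lines = Path(source).read_text(encoding="utf-8").splitlines(keepends=True)
    train, valid = split_lines(lines, n_valid)
    Path(train_path).write_text("".join(train), encoding="utf-8")
    Path(valid_path).write_text("".join(valid), encoding="utf-8")
    return len(train), len(valid)


# Usage in Colab, after downloading the data:
# split_file("cooking.stackexchange.txt", "cooking.train", "cooking.valid")
```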

Training a fastText model

Next, we are ready to train our model with cooking.train data using the following command.

Input :

!./fasttext supervised -input ./cooking.train -output ./cooking_model1

Or

import fasttext

model = fasttext.train_supervised(input='cooking.train')

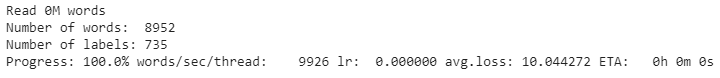

Output:

Training takes very little time. The output shows details about the model; in the code above, the input argument specifies the training data, which here is cooking.train.

Now we can check how well our classifier has learned:

Input :

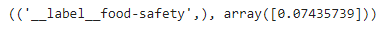

model.predict("Which baking pan is best for bake amulet ?")

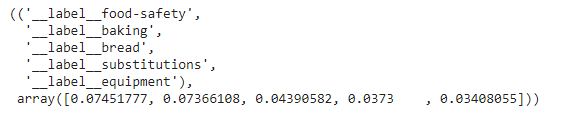

Output:

In the output, we can see that the model predicts the label baking, which is correct. Let's try another question:

Input :

model.predict("Why not put glasses in the dishwasher?")

Output:

Here we can see that the predicted label is wrong: the model fails on a simple example. To measure its performance properly, we test it on our validation data (cooking.valid).

Input:

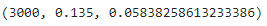

model.test("cooking.valid",k=1)

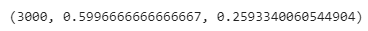

Output:

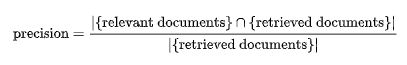

The results are not very good. The output contains three values: the number of examples in the validation set (3000), the precision at k and the recall at k. Precision is defined as:

$$\text{precision} = \frac{|\{\text{relevant items}\} \cap \{\text{retrieved items}\}|}{|\{\text{retrieved items}\}|}$$

For a text search over documents, precision is the number of correct results divided by the number of all returned results.

Recall, the third value, is defined as:

$$\text{recall} = \frac{|\{\text{relevant items}\} \cap \{\text{retrieved items}\}|}{|\{\text{relevant items}\}|}$$

In the same search example, recall is the number of correct results divided by the number of results that should have been returned. Both precision and recall range from 0 to 1.
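To make precision and recall concrete for classification, the sketch below computes them at k in the way the three values from model.test are usually read: for each question, the top k predicted labels are compared with the true labels, and the counts are summed over the whole validation set. It is a minimal illustration under that assumption, not fastText's own code, and the example labels are made up.

```python
def precision_recall_at_k(examples, k):
    """Return (number of examples, precision@k, recall@k).

    examples: list of (true_labels, ranked_predicted_labels) pairs.
    """
    true_positives = 0  # predicted labels that are actually correct
    n_predicted = 0     # all labels the model returned
    n_relevant = 0      # all labels that should have been returned
    for true_labels, ranked in examples:
        top_k = ranked[:k]
        true_positives += len(set(top_k) & set(true_labels))
        n_predicted += len(top_k)
        n_relevant += len(true_labels)
    precision = true_positives / n_predicted if n_predicted else 0.0
    recall = true_positives / n_relevant if n_relevant else 0.0
    return len(examples), precision, recall


# Made-up toy data: true labels and the model's ranked predictions.
toy_examples = [
    ({"baking", "bread"}, ["baking", "oven", "bread"]),
    ({"knives"}, ["equipment", "cleaning", "knives"]),
]
print(precision_recall_at_k(toy_examples, k=1))
print(precision_recall_at_k(toy_examples, k=3))
```

In this toy example, raising k from 1 to 3 leaves precision at 0.5 but raises recall from 1/3 to 1.0, since more of the true labels appear among the predictions.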

Since these results are not satisfactory, let's also evaluate the model on its top 3 predicted labels for each question (k=3), which shows how often the right label is at least near the top.

Input:

model.test("cooking.valid",k=3)

Output:

Input:

model.predict("Why not put knives in the dishwasher?", k=5)

Output:

Here we can see that only one of the five predicted labels is correct, and the value reported for it is only 0.035.

Next, we will discuss improving the above model.

Making the fastText model better

Before changing any model parameters, let's see which parameters we can set. Running the supervised command without any arguments prints the list of available arguments.

Input :

!./fasttext supervised

Output:

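To improve our model, we will first preprocess the data: some words and labels contain uppercase letters, and some contain punctuation. The commands below separate punctuation marks from words with spaces, convert all text to lowercase, and then split the preprocessed file into training and validation sets again.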

Input:

!cat cooking.stackexchange.txt | sed -e "s/\([.\!?,'/()]\)/ \1 /g" | tr "[:upper:]" "[:lower:]" > cooking.preprocessed.txt

!head -n 12404 cooking.preprocessed.txt > cooking.train

!tail -n 3000 cooking.preprocessed.txt > cooking.valid
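For readers who prefer Python, the following sketch applies approximately the same cleaning as the sed and tr pipeline: it puts spaces around the punctuation characters . ! ? , ' / ( ) and lowercases the line. It is an approximation rather than an exact equivalent; for example, Python's str.lower also lowercases non-ASCII letters, which tr may not do depending on the locale. preprocess and preprocess_file are hypothetical helper names.

```python
import re

# Same punctuation characters as the sed expression: . ! ? , ' / ( )
PUNCTUATION = re.compile(r"([.!?,'/()])")


def preprocess(line):
    """Surround punctuation with spaces, then lowercase (approximates sed + tr)."""
    return PUNCTUATION.sub(r" \1 ", line).lower()


def preprocess_file(source, target):
    """Hypothetical helper: write a cleaned copy of a fastText-format file."""
    with open(source, encoding="utf-8") as src, open(target, "w", encoding="utf-8") as dst:
        for line in src:
            dst.write(preprocess(line))  # the trailing newline is preserved


print(repr(preprocess("__label__Sauce What's this?")))
```

The extra spaces this introduces are harmless, because fastText splits text on whitespace.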

After cleaning and splitting the data, we train the model again.

Input :

!./fasttext supervised -input ./cooking.train -output ./model

Or

model = fasttext.train_supervised(input="cooking.train")

Output:

After cleaning the data, we can observe that the number of words goes down to 8952, and the number of labels increases by 1.

Let's evaluate the model again on the validation data.

Input:

model.test("cooking.valid")

Output:

(3000, 0.16433333333333333, 0.07106818509442121)

Compared with the previous result, precision has started to go up.

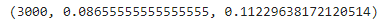

Now we will increase the number of epochs. By default, fastText passes over the training data only 5 times (epoch=5); here we raise it to 25.

Input :

model = fasttext.train_supervised(input="cooking.train", epoch=25)

Let’s check the new model.

Input:

model.test("cooking.valid")

Output:

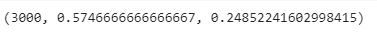

After testing the model, we can see that it is much better. However, there are more ways to improve it. One is to increase the learning rate, which is set with the lr argument (the default for supervised training is 0.1). Good values for the learning rate are in the range 0.1 to 1.0.

Input:

model = fasttext.train_supervised(input="cooking.train", lr=1.0)

Let's check the results of the new model.

Input:

model.test("cooking.valid")

Output:

This time, both precision and recall have clearly improved. Next, we will combine the higher number of epochs and the higher learning rate in one training run and check the results.

Input :

model = fasttext.train_supervised(input="cooking.train", lr=1.0, epoch=25)

Let's test the model.

Input :

model.test("cooking.valid")

Output:

(3000, 0.5843333333333334, 0.25270289750612657)

By changing the number of epochs and the learning rate, we have improved the model's precision from 16% to 58.4%. Next, we will take one more step to improve the model's performance by using word bigrams instead of only unigrams. Before doing this, let's go through what a word n-gram is.

Word n-gram: a word n-gram is a sequence of n consecutive words. For example, 'apple' is a unigram, 'eating apple' is a bigram and 'eating two apples' is a trigram (3-gram). When we train with the argument wordNgrams=2, fastText adds the word bigrams of each text as extra features, so the model takes some local word order into account. The same idea also works inside a single word: fastText can split a word into character n-grams (controlled by the minn and maxn arguments). For example, the character bigrams (n = 2) of the word banana are:

- <b

- ba

- an

- na

- an

- na

- a>

Here < and > mark the start and end of the word. This technique is useful because related words share sub-words. For example, the word ban is split into <b, ba, an and n>, and three of these bigrams (<b, ba and an) also occur in banana, so the two words share part of their representation. This also lets fastText build a vector for a word it never saw during training from that word's character n-grams.
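Both kinds of n-grams can be sketched in a few lines of Python. This is a simplified illustration: word_ngrams and char_ngrams are hypothetical helpers, and real fastText extracts character n-grams of every length between minn and maxn and hashes them, rather than using a single n.

```python
def word_ngrams(words, n):
    """Sequences of n consecutive words, e.g. bigrams for n = 2."""
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


def char_ngrams(word, n):
    """Character n-grams of a word, with < and > marking its start and end."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


print(word_ngrams("eating two apples".split(), 2))
print(char_ngrams("banana", 2))
# Sub-words shared by "ban" and "banana":
print(set(char_ngrams("ban", 2)) & set(char_ngrams("banana", 2)))
```

For banana, char_ngrams returns <b, ba, an, na, an, na, a>, and intersecting the bigram sets of ban and banana gives the three shared sub-words <b, ba and an.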

Let’s try to improve the model performance using word bigrams.

Input :

model = fasttext.train_supervised(input="cooking.train", lr=1.0, epoch=25, wordNgrams=2)

Output:

Here we can see that precision has improved again, by about one percentage point, so the model's precision is now almost 60%.

So far, we have used the regular softmax loss. That works well here because the dataset is small, and we have seen that training takes only a few seconds. For very large datasets, with millions or billions of examples, and especially with many labels, the suggested choice is hierarchical softmax.

Hierarchical softmax is a loss function that approximates the regular softmax with much less computation: instead of scoring every label for each example, it organizes the labels in a binary tree and computes a label's probability along the path from the root to that label.
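One way to see where the speed-up comes from: with a regular softmax, every prediction scores all K labels, while hierarchical softmax makes only one binary decision per tree level on the path to a label. fastText builds this tree from label frequencies in a Huffman-like way, so frequent labels sit close to the root. The sketch below (huffman_depths is a hypothetical helper, not fastText code) computes each label's depth, that is, the number of binary decisions needed, in a Huffman tree built from made-up label counts.

```python
import heapq
import itertools


def huffman_depths(label_counts):
    """Depth of each label in a Huffman tree built from label frequencies.

    The depth is the number of binary decisions hierarchical softmax makes
    to reach that label. Hypothetical helper for illustration only.
    """
    if not label_counts:
        return {}
    tie = itertools.count()  # tie-breaker so heapq never compares the dicts
    heap = [(count, next(tie), {label: 0}) for label, count in label_counts.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees; their labels move one level down.
        count1, _, depths1 = heapq.heappop(heap)
        count2, _, depths2 = heapq.heappop(heap)
        merged = {label: depth + 1 for label, depth in {**depths1, **depths2}.items()}
        heapq.heappush(heap, (count1 + count2, next(tie), merged))
    return heap[0][2]


# Made-up label counts: "baking" is much more frequent than the others.
print(huffman_depths({"baking": 5, "bread": 1, "knives": 1, "eggs": 1}))
```

Here the frequent label baking needs a single binary decision, instead of scoring all four labels as a full softmax would. With 1024 equally frequent labels, every label sits at depth 10, i.e. log2(1024) decisions instead of 1024 scores.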

Let’s put the hierarchical softmax as a loss function to train the model and test the model.

Input:

model = fasttext.train_supervised(input="cooking.train", lr=0.5, epoch=25, wordNgrams=2, loss='hs')

Testing the model :

Input:

model.test("cooking.valid")

Output:

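As the result shows, precision has dropped by almost 3 percentage points. A likely reason is the small size of the dataset: hierarchical softmax mainly pays off in speed on much larger data, and its approximation can cost some accuracy. Note also that this run uses a lower learning rate (lr=0.5) than the previous one.

Next, we set the number of hash buckets used for word and character n-gram features (bucket=200000, down from the default of 2000000) and the dimension of the word vectors (dim=50, down from the default of 100). Finally, we change the loss function to ova, which stands for one-vs-all, and keep the learning rate at 0.5.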
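The difference between the softmax and one-vs-all losses shows up in how scores become probabilities. Softmax normalizes the scores of all labels so that they sum to 1, so labels compete with each other; one-vs-all applies an independent sigmoid to each label's score, so several labels can be probable at the same time, which suits questions with multiple tags. The sketch below uses made-up scores for three labels and is an illustration, not fastText's internal code.

```python
import math


def softmax(scores):
    """Normalize scores into probabilities that sum to 1 (labels compete)."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def one_vs_all(scores):
    """Independent sigmoid per label: each label is its own yes/no classifier."""
    return [1.0 / (1.0 + math.exp(-s)) for s in scores]


# Made-up scores for three labels, e.g. baking, bread and knives.
toy_scores = [2.0, 2.0, -3.0]
print(softmax(toy_scores))
print(one_vs_all(toy_scores))
```

With these scores, no label passes a 0.5 threshold under softmax (the two top labels get about 0.498 each), while two labels pass it under one-vs-all (about 0.88 each).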

Input :

model = fasttext.train_supervised(input="cooking.train", lr=0.5, epoch=25, wordNgrams=2, bucket=200000, dim=50, loss='ova')
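The bucket argument relates to the hashing trick: instead of keeping a vocabulary entry for every word n-gram (and character n-gram), fastText hashes each n-gram into one of bucket rows of its input matrix, so a smaller bucket value gives a smaller model at the cost of more collisions between unrelated n-grams. The sketch below hashes an n-gram string with 32-bit FNV-1a, the hash fastText uses for its dictionary, and maps it to a bucket index. It is a simplification: fastText combines per-word hashes when forming word n-grams, handles non-ASCII bytes slightly differently, and fnv1a_32 and bucket_index are hypothetical helper names.

```python
def fnv1a_32(text):
    """32-bit FNV-1a hash over the UTF-8 bytes of text."""
    h = 2166136261  # FNV offset basis
    for byte in text.encode("utf-8"):
        h ^= byte
        h = (h * 16777619) & 0xFFFFFFFF  # FNV prime, kept to 32 bits
    return h


def bucket_index(ngram, bucket=200000):
    """Map an n-gram to one of `bucket` rows (simplified hashing trick)."""
    return fnv1a_32(ngram) % bucket


# Distinct n-grams can collide in the same row; fewer buckets, more collisions.
print(bucket_index("banana bread"))
print(bucket_index("banana bread", bucket=2000000))
```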

Let’s test the model on cooking.valid data.

Input:

model.test("cooking.valid")

Output :

Here again, precision reaches about 60%, which shows how much tuning the relevant parameters step by step helps.

Now let's look at the model's predictions. With k=-1 and threshold=0.5, we ask the model to return as many labels as it can whose probability is above 0.5.
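The effect of k and threshold can be illustrated with a small helper that ranks labels by probability, keeps the top k (or all of them when k is -1) and drops those below the threshold. This is a sketch of the selection logic described here, under the assumption that a label whose probability equals the threshold is kept; select_labels is a hypothetical name, and the probabilities are made up, not output from the model.

```python
def select_labels(label_probs, k=-1, threshold=0.0):
    """Rank labels by probability, keep the top k (all if k == -1),
    then drop those below the threshold. Hypothetical helper."""
    ranked = sorted(label_probs.items(), key=lambda item: item[1], reverse=True)
    if k != -1:
        ranked = ranked[:k]
    return [(label, p) for label, p in ranked if p >= threshold]


# Made-up probabilities, not real model output.
toy_probs = {"__label__baking": 0.92, "__label__bread": 0.71, "__label__equipment": 0.12}
print(select_labels(toy_probs, k=-1, threshold=0.5))
print(select_labels(toy_probs, k=1))
```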

Input :

model.predict("Which baking dish is best to bake a banana bread ?", k=-1, threshold=0.5)

Output:

As the final result shows, the model now predicts all the labels correctly, whereas the first model failed even on simple questions. Building this model is easy and takes little time. We discussed how to use fastText and how different parameters, such as the number of epochs, the learning rate, word n-grams, the dimension of the word vectors, the bucket size and the loss function, affect its results.

References

All the information written in this article is gathered from: