The explosive growth in data volume and complexity has increased the need for semantic queries. A semantic query can be interpreted as correlation-aware retrieval that tolerates approximate results. The true value of data depends heavily on how efficiently semantic search can be carried out on it in real time, and that value drops significantly as the data ages and deteriorates.

The word ‘semantic’ is a linguistic term: it means something related to meaning in language or logic. In natural language, semantic analysis relates the structure and occurrence of words, phrases, clauses, and paragraphs to the meaning of the written text. The challenge in a technologically advanced world is to make machines understand language and logic the way human beings do.

Semantic matching relies on rules that have to be defined for the system. These rules mirror the way we think about language, and we ask machines to imitate them. For example, ‘This is the white car’ is a simple sentence that humans readily understand as describing a car whose color is white. Machines have no such built-in understanding and need the structure spelled out. In linguistic terms, such a sentence has a definite structure: Subject-Predicate-Object, or S-P-O for short. Here ‘car’ is the subject, ‘is’ is the predicate, and ‘white’ is the object.
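
As a rough illustration, the sentence above can be written down as an S-P-O triple. The `Triple` type and the `describe` helper are hypothetical names made up for this sketch; they are not part of any library used later in the article.

```python
from collections import namedtuple

# Hypothetical container for one Subject-Predicate-Object statement.
Triple = namedtuple("Triple", ["subject", "predicate", "obj"])

# 'This is the white car' reduced to its S-P-O structure.
white_car = Triple(subject="car", predicate="is", obj="white")


def describe(triple):
    """Render a triple as a short, machine-readable statement."""
    return "{} --{}--> {}".format(triple.subject, triple.predicate, triple.obj)


print(describe(white_car))
```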

When dealing with large volumes of text, it is impractical to perform semantic matching by scanning the whole input manually or with traditional NLP techniques. We therefore turn to approximate similarity matching, which trades a little accuracy for much faster nearest-neighbor lookups.
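
To see what is being approximated, here is a minimal sketch of exact nearest-neighbor search by cosine similarity. It scans every stored vector for each query, so its cost grows linearly with the size of the corpus, which is the cost an approximate index tries to avoid. The function names and the toy vectors are assumptions made for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def exact_nearest(query, vectors, k):
    """Return the indices of the k most similar vectors by scanning all of them."""
    ranked = sorted(
        range(len(vectors)),
        key=lambda i: cosine_similarity(query, vectors[i]),
        reverse=True,
    )
    return ranked[:k]


# Toy 2-D "embeddings"; real sentence embeddings have many more dimensions.
vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
print(exact_nearest([1.0, 0.0], vectors, k=2))
```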

This article discusses one such approach: serving semantic search results from an Approximate Nearest Neighbor (ANN) index built over extracted embeddings. The code below follows the official reference implementation.

Code implementation of Semantic Search

Apache Beam is used to generate the embeddings from a TensorFlow Hub model, and the Annoy library is used to build the nearest-neighbor index. Annoy (Approximate Nearest Neighbors Oh Yeah) is a C++ library with Python bindings that searches for points in space that are close to a given query point. It also creates large read-only, file-based data structures that are mapped into memory, so many processes can share the same data.
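
Annoy's documentation describes building each tree by repeatedly splitting the points with a random hyperplane that is equidistant from two sampled points. The sketch below shows a single such split in plain Python. It is a simplified illustration of the idea under that assumption, not Annoy's actual implementation, and the function name is made up for this example.

```python
import random


def split_by_random_hyperplane(points, rng):
    """Split points by the hyperplane equidistant from two randomly sampled points."""
    a, b = rng.sample(points, 2)
    # The hyperplane passes through the midpoint of a and b, with normal b - a.
    normal = [bi - ai for ai, bi in zip(a, b)]
    midpoint = [(ai + bi) / 2.0 for ai, bi in zip(a, b)]
    left, right = [], []
    for p in points:
        # The sign of the projection onto the normal says which side p falls on.
        side = sum(n * (pi - mi) for n, pi, mi in zip(normal, p, midpoint))
        (right if side > 0 else left).append(p)
    return left, right


rng = random.Random(0)
points = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(20)]
left, right = split_by_random_hyperplane(points, rng)
print(len(left), len(right))
```

Applying the same split recursively to each side until the groups are small yields one tree; a query then only needs to inspect the points that land in the same leaves, which is where the speed-up over a full scan comes from.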

Install & import all dependencies:

!pip install apache_beam
!pip install 'scikit_learn~=0.23.0'  # For gaussian_random_matrix.
!pip install annoy

import os
import sys
import pickle
from collections import namedtuple
from datetime import datetime

import numpy as np
import apache_beam as beam
from apache_beam.transforms import util
import tensorflow as tf
import tensorflow_hub as hub
import annoy
from sklearn.random_projection import gaussian_random_matrix

Prepare the data:

The A Million News Headlines dataset is used here. It contains news headlines published over 15 years by the Australian Broadcasting Corp. and serves as a historical record of noteworthy events from 2003 to 2017. The dataset has two columns: the publication date and the headline text. We drop the first column because it is not needed.

!wget 'https://dataverse.harvard.edu/api/access/datafile/3450625?format=tab&gbrecs=true' -O raw.tsv

!wc -l raw.tsv
!rm -r corpus
!mkdir corpus

# Keep only the headline text (second column) and drop the surrounding quotes.
with open('corpus/text.txt', 'w') as out_file:
    with open('raw.tsv', 'r') as in_file:
        for line in in_file:
            headline = line.split('\t')[1].strip().strip('"')
            out_file.write(headline + "\n")

!tail corpus/text.txt

Output:

Generate Embeddings for the data:

A Neural Network Language Model (NNLM) is used to generate embeddings for the headlines. These embeddings are later used to compare headlines by their sentence-level semantic meaning.

Embedding Extraction method:

embed = None  # Loaded lazily so the model is fetched only once per worker.

def generate_embeddings(text, model_url, random_projection_matrix=None):
    global embed
    if embed is None:
        embed = hub.load(model_url)
    embedding = embed(text).numpy()
    # Optionally reduce the dimensionality of the embeddings.
    if random_projection_matrix is not None:
        embedding = embedding.dot(random_projection_matrix)
    return text, embedding

def to_tf_example(entries):
    # entries is the (texts, embeddings) pair returned by generate_embeddings.
    examples = []
    text_list, embedding_list = entries
    for i in range(len(text_list)):
        text = text_list[i]
        embedding = embedding_list[i]
        features = {
            'text': tf.train.Feature(
                bytes_list=tf.train.BytesList(value=[text.encode('utf-8')])),
            'embedding': tf.train.Feature(
                float_list=tf.train.FloatList(value=embedding.tolist()))
        }
        # Serialize each record separately and collect it in the output list.
        example = tf.train.Example(
            features=tf.train.Features(
                feature=features)).SerializeToString(deterministic=True)
        examples.append(example)
    return examples

Beam Pipeline:

def run_hub2emb(args):
    options = beam.options.pipeline_options.PipelineOptions(**args)
    args = namedtuple("options", args.keys())(*args.values())

    with beam.Pipeline(args.runner, options=options) as pipeline:
        (pipeline
         | 'Read sentences from files' >> beam.io.ReadFromText(
             file_pattern=args.data_dir)
         | 'Batch elements' >> util.BatchElements(
             min_batch_size=args.batch_size, max_batch_size=args.batch_size)
         | 'Generate embeddings' >> beam.Map(
             generate_embeddings, args.model_url, args.random_projection_matrix)
         | 'Encode to tf example' >> beam.FlatMap(to_tf_example)
         | 'Write to TFRecords files' >> beam.io.WriteToTFRecord(
             file_path_prefix='{}/emb'.format(args.output_dir),
             file_name_suffix='.tfrecords'))
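
The shape of the data flowing through the pipeline can be hard to see from the Beam code alone: headlines are grouped into batches, each batch is embedded in one call, and the result is flattened back into one record per headline. The plain-Python sketch below mimics that flow. `batch_elements`, `fake_embed`, and `flatten` are hypothetical stand-ins for `BatchElements`, `generate_embeddings`, and `to_tf_example`, and the "embedding" is just the text length; Beam's own batching may also produce smaller batches at bundle boundaries.

```python
def batch_elements(items, batch_size):
    """Group a stream of items into lists of at most batch_size elements."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def fake_embed(batch):
    """Stand-in for generate_embeddings: returns (texts, one vector per text)."""
    return batch, [[float(len(text))] for text in batch]


def flatten(entries):
    """Stand-in for to_tf_example: turns one batch into one record per text."""
    texts, embeddings = entries
    return list(zip(texts, embeddings))


headlines = ["a", "bb", "ccc", "dddd", "eeeee"]
records = [
    record
    for batch in batch_elements(headlines, 2)   # like BatchElements
    for record in flatten(fake_embed(batch))    # like Map followed by FlatMap
]
print(records)
```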

Generate Random projection weight matrix:

def generate_random_projection_weights(original, projected):
    random_projection_matrix = None
    # Transpose so the matrix has shape (original, projected).
    random_projection_matrix = gaussian_random_matrix(
        n_components=projected, n_features=original).T
    print("A Gaussian random weight matrix shape of {}".format(random_projection_matrix.shape))
    print('Storing projection matrix...')
    with open('random_projection_matrix', 'wb') as handle:
        pickle.dump(random_projection_matrix,
                    handle, protocol=pickle.HIGHEST_PROTOCOL)
    return random_projection_matrix
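
The idea behind the random projection is that multiplying by a suitably scaled Gaussian matrix shrinks the dimensionality while roughly preserving vector lengths and distances. The sketch below illustrates this with the standard library only. It assumes entries drawn from a normal distribution with variance 1/projected_dim, which is how scikit-learn documents its Gaussian random projection; the helper names are made up for this example.

```python
import math
import random


def gaussian_projection_matrix(original_dim, projected_dim, rng):
    """Return an original_dim x projected_dim matrix with N(0, 1/projected_dim) entries."""
    std = 1.0 / math.sqrt(projected_dim)
    return [[rng.gauss(0.0, std) for _ in range(projected_dim)]
            for _ in range(original_dim)]


def project(vector, matrix):
    """Multiply a row vector by the projection matrix."""
    projected_dim = len(matrix[0])
    return [sum(vector[i] * matrix[i][j] for i in range(len(vector)))
            for j in range(projected_dim)]


def norm(vector):
    return math.sqrt(sum(x * x for x in vector))


rng = random.Random(42)
matrix = gaussian_projection_matrix(128, 64, rng)   # 128 -> 64 dimensions
vector = [rng.gauss(0.0, 1.0) for _ in range(128)]
projected = project(vector, matrix)

# The two norms should be of similar size, though not identical.
print(len(projected), norm(vector), norm(projected))
```

Halving the dimensionality this way makes the index smaller and faster to build and query, at the price of some distortion in the distances.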

Set the parameters:

model_url = 'https://tfhub.dev/google/nnlm-en-dim128/2'
projected_dim = 64

Run the pipeline:

import tempfile

dir_output = tempfile.mkdtemp()
# Embed an empty string once to discover the model's output dimension.
dim_original = hub.load(model_url)(['']).shape[1]
random_projection_matrix = None

if projected_dim:
    random_projection_matrix = generate_random_projection_weights(
        dim_original, projected_dim)

args = {
    'job_name': 'hub2emb-{}'.format(datetime.utcnow().strftime('%y%m%d-%H%M%S')),
    'runner': 'DirectRunner',
    'batch_size': 1024,
    'data_dir': 'corpus/*.txt',
    'output_dir': dir_output,
    'model_url': model_url,
    'random_projection_matrix': random_projection_matrix,
}

print("Pipeline args are set.")
args

# Run the Beam pipeline, which writes the embeddings as TFRecord files to dir_output.
run_hub2emb(args)

Build the ANN index for Embeddings:

def build_index(embedding_files_pattern, index_filename, vector_length,
                metric='angular', num_trees=100):

    def _parse_example(example):
        # Assumed to mirror the two features written by to_tf_example.
        feature_description = {
            'text': tf.io.FixedLenFeature([], tf.string),
            'embedding': tf.io.FixedLenFeature([vector_length], tf.float32),
        }
        return tf.io.parse_single_example(example, feature_description)

    annoy_index = annoy.AnnoyIndex(vector_length, metric=metric)
    # Maps the integer item id stored in Annoy back to the headline text.
    mapping = {}

    embed_files = tf.io.gfile.glob(embedding_files_pattern)
    num_files = len(embed_files)
    print('Found {} embedding file(s).'.format(num_files))

    item_counter = 0
    for i, embed_file in enumerate(embed_files):
        print('Loading embeddings in file {} of {}...'.format(i+1, num_files))
        dataset = tf.data.TFRecordDataset(embed_file)
        for record in dataset.map(_parse_example):
            text = record['text'].numpy().decode("utf-8")
            embedding = record['embedding'].numpy()
            mapping[item_counter] = text
            annoy_index.add_item(item_counter, embedding)
            item_counter += 1
            if item_counter % 100000 == 0:
                print('{} items loaded to the index'.format(item_counter))

    print('A total of {} items added to the index'.format(item_counter))

    print('Building the index with {} trees...'.format(num_trees))
    annoy_index.build(n_trees=num_trees)
    print('Index is successfully built.')

    print('Saving index to disk...')
    annoy_index.save(index_filename)
    print('Index is saved to disk.')
    print("Index file size: {} GB".format(
        round(os.path.getsize(index_filename) / float(1024 ** 3), 2)))
    annoy_index.unload()

    print('Saving mapping to disk...')
    with open(index_filename + '.mapping', 'wb') as handle:
        pickle.dump(mapping, handle, protocol=pickle.HIGHEST_PROTOCOL)
    print('Mapping is saved to disk.')
    print("Mapping file size: {} MB".format(
        round(os.path.getsize(index_filename + '.mapping') / float(1024 ** 2), 2)))

# Build the index from the TFRecord files written by the pipeline.
# The file pattern and the 'index' filename are assumed values.
embedding_files = '{}/emb-*.tfrecords'.format(dir_output)
embedding_dimension = projected_dim
index_filename = 'index'
build_index(embedding_files, index_filename, embedding_dimension)

With the index built, we can now use it to find the news headlines that are semantically similar to an input query.

Load the index and mapping file:

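# embedding_dimension and the metric must match the values used to build the index.
index = annoy.AnnoyIndex(embedding_dimension, metric='angular')
index.load(index_filename, prefault=True)
print('Annoy index is loaded.')

with open(index_filename + '.mapping', 'rb') as handle:
    mapping = pickle.load(handle)
print('Mapping file is loaded.')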

Similarity matching method:

def find_similar_items(embeddings, num_matches=5):
    # Look up the ids of the nearest neighbors, then map them back to headlines.
    ids = index.get_nns_by_vector(
        embeddings, num_matches, search_k=-1, include_distances=False)
    items = [mapping[i] for i in ids]
    return items
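
The index above is built with the `angular` metric. Annoy's documentation defines this as the Euclidean distance between the normalized vectors, which equals sqrt(2 * (1 - cos(u, v))). The sketch below computes that quantity directly so the numbers returned with `include_distances=True` are easier to interpret. The function name is an assumption made for this example and is not part of Annoy.

```python
import math


def angular_distance(u, v):
    """Euclidean distance between the unit-normalized vectors: sqrt(2 * (1 - cos))."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cosine = dot / (norm_u * norm_v)
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    cosine = max(-1.0, min(1.0, cosine))
    return math.sqrt(2.0 * (1.0 - cosine))


print(angular_distance([1.0, 0.0], [2.0, 0.0]))    # same direction
print(angular_distance([1.0, 0.0], [0.0, 3.0]))    # orthogonal
print(angular_distance([1.0, 0.0], [-1.0, 0.0]))   # opposite direction
```

The distance ranges from 0 for vectors pointing the same way to 2 for vectors pointing in opposite directions, and it ignores vector length, which suits embeddings where direction carries the meaning.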

Extract embedding from given query:

print("Loading the TF-Hub model...")
%time embed_fn = hub.load(model_url)
print("TF-Hub model is loaded.")

random_projection_matrix = None
if os.path.exists('random_projection_matrix'):
    print("Loading random projection matrix...")
    with open('random_projection_matrix', 'rb') as handle:
        random_projection_matrix = pickle.load(handle)
    print('Random projection matrix is loaded.')

def extract_embeddings(query):
    # Embed the query and apply the same projection used for the corpus.
    query_embedding = embed_fn([query])[0].numpy()
    if random_projection_matrix is not None:
        query_embedding = query_embedding.dot(random_projection_matrix)
    return query_embedding

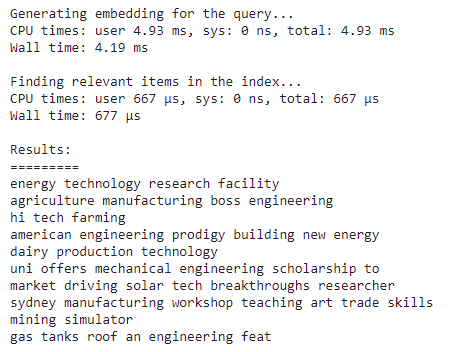

Now the test part:

Set the query variable below to any query you like. Semantic matching is carried out on it, and the ten most relevant headlines are shown.

query = "engineering"

print("Generating embedding for the query...")
%time query_embedding = extract_embeddings(query)

print("Finding relevant items in the index...")
%time items = find_similar_items(query_embedding, 10)

print("Results:")
print("=========")
for item in items:
    print(item)

Output:

Conclusion

This article has shown how semantic search over an ANN index can be very effective for automation-related tasks. The results closely match the query: we queried ‘engineering’, and all the returned headlines refer to engineering.