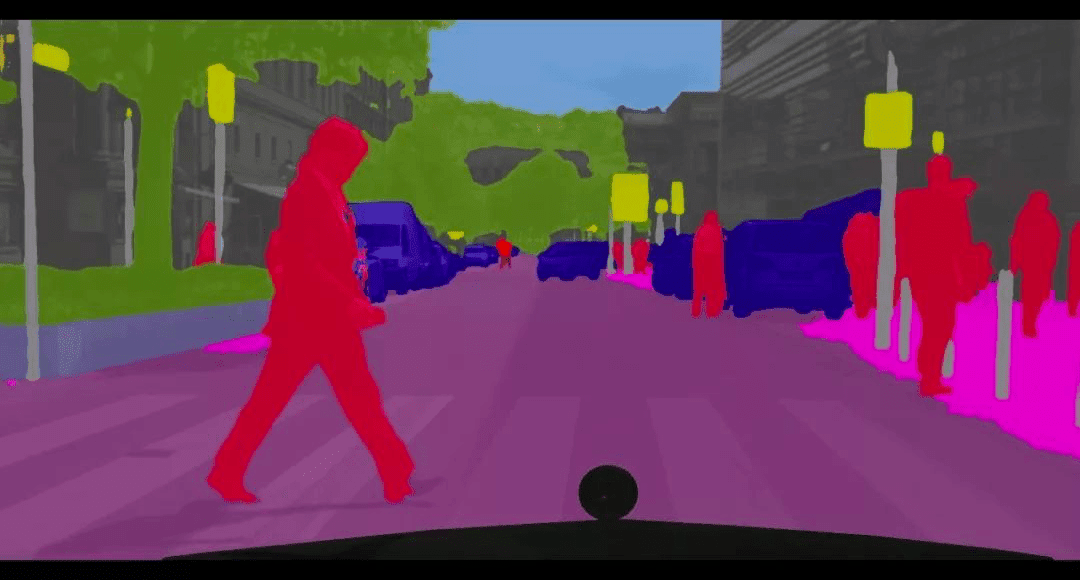

Previously, training deep learning networks for semantic segmentation use cases required large amounts of data(labelled), that was most of the user’s problem that they don’t have a large dataset and still want to get good accuracy from their deep learning models. To solve this issue researchers like Lukas Hoyer, Dengxin Dai, Yuhua Chen, Adrian Koring, Suman Saha, and Luc Van Gool purposed a new way for semi-supervised semantic segmentation, that can be enhanced by monocular depth estimation from the unlabeled dataset(images), their paper named “Three Ways to Improve Semantic Segmentation with Self-Supervised Depth Estimation”(Read here) specifically purposed three methods to really make difference in semantic segmentation outputs.

Three key insights of the paper were:

- They transferred the knowledge from learned features during self-supervised depth estimation.

- Implemented a strong data augmentation technique by integrating images with labels using input image scene structure.

- Utilized depth feature diversity to select the most reliable sets to be labelled for semantic segmentation.

The model is validated on the cityscape dataset, where it achieved significant performance gains, and SOTA results for semantic segmentation and they also open-sourced the technique on GitHub here.

1. Unsupervised Data Selection for Annotation

Firstly, This method uses Self-supervised Depth Estimation(SDE) learned on the K (unlabeled data) sequences to select NA images out of the N images for data annotation (see Algorithm 1 below)

It automatically selects the most useful set to be annotated in order to maximize the gain. The selection is driven by two criteria: diversity and uncertainty. Both of them are conducted by a novel use of SDE in this context.

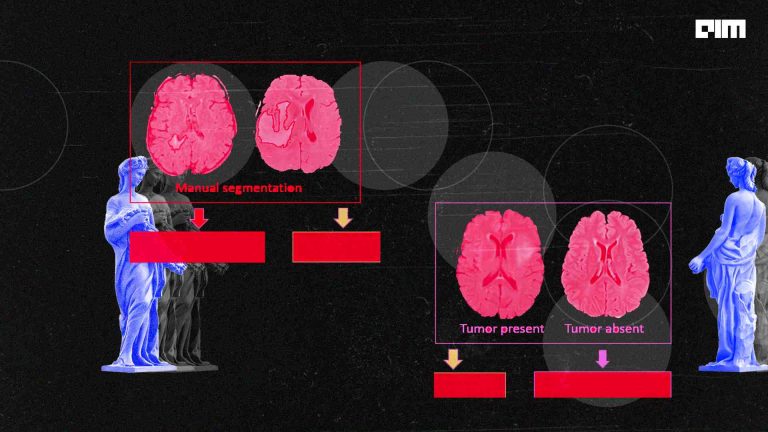

To improve the appearance of semantic segmentation First, researchers learned that if they take depth estimation as an auxiliary task for semantic segmentation and present both transfer learning and multi-task learning, it will precisely improve the performance of the semantic segmentation model.

2. DepthMix Data Augmentation

DepthMix creates virtual training data sets from pairs of labelled images. It blends images as well as their labels according to the structure of the scenes obtained from SDE, DepthMix explicitly considers the geometric structure of the scenes and generates lesser artefacts (see below figure)

3. Semi-Supervised Semantic Segmentation

It trains a semantic segmentation model by utilizing the annotated image dataset, the unlabeled image dataset, and K unlabeled image sequences. It first sees, how to exploit SDE on the image sequences to improve our semantic segmentation. We then show how to improve the performance.

Below is the architecture of semantic segmentation with SDE as an auxiliary task. See that the structure also allows data with a single picture or without segmentation ground truth. That means the SDE or the cross-entropy loss are not enabled.

Network Architecture

The network is trained on a shared ResNet101 encoder and one decoder for segmentation and one for Self-Supervised Depth Estimation(SDE). The decoder consists of an ASPP block to aggregate features from multiple scales and for SDE, the upsampling pieces have a disparity for output at the individual system. For efficient multi-task lore, researchers additionally follow PAD-Net architecture.

Implementation

The source code for the above discussed is open-source here. Let’s see how to reproduce the result and what kind of framework the researcher has used.

Clone and Setup Environment

!git clone https://github.com/lhoyer/improving_segmentation_with_selfsupervised_depth.git

%cd improving_segmentation_with_selfsupervised_depth

!pip install -r requirements.txt -f https://download.pytorch.org/whl/torch_stable.html

Download Dataset and Setup directories

For reproducing the research paper results you have to download the Cityscapes dataset from here:https://www.cityscapes-dataset.com/downloads/, more specifically we need these three files:

- gtFine_trainvaltest.zip

- leftImg8bit_trainvaltest.zip

- leftImg8bit_sequence_trainvaltest.zip

And arrange the dataset as follows:

Note: to download the dataset you must have a work email and after that, you need to wait for a couple of days for approval by the cityscapes dataset website admin.

Train

To train in the two phases of the pretraining (first 300,0000 iterations with frozen encoder and 50,000 iterations with ImageNet feature loss), you can run the below command:

!python train.py --machine ws --config configs/cityscapes_monodepth_highres_dec5_crop.yml

!python train.py --machine ws --config configs/cityscapes_monodepth_highres_dec6_crop.yml

Running Experiments

!python run_experiments.py --machine ws --exp EXP_ID

To learn more about Framework structure you can follow resources here

Conclusion

In this demonstration, we have learned how self-supervised depth estimation (SDE) can be used to improve semantic segmentation, in both semis and fully supervised configuration. We saw the three effective strategies capable of leveraging the knowledge learned from SDE to get State of the art semantic segmentation result on images and video. To learn more about the approach you can follow these resources: