Sense2vec is a neural network model that generates vector space representations of words from large corpora. It is an extension of the well-known word2vec algorithm. Sense2vec creates embeddings for “senses” rather than for word tokens. A sense is a word combined with a label, i.e. information that represents the context in which the word is used. This label can be a POS tag, polarity, entity name, dependency tag, etc.

Word Sense Disambiguation

Despite their ability to capture complex semantic and syntactic relationships among words, neural word representations obtained with word2vec fail to encode context, which is essential for disambiguating word senses. Sense2vec aims to solve this problem by generating contextually keyed word vectors (i.e. one vector for each sense of a word).

Example:

“Apple” gets two vectors:

Apple|PROPN (proper noun), when it is used as the name of the company

Apple|NOUN, when it is used as the name of a fruit

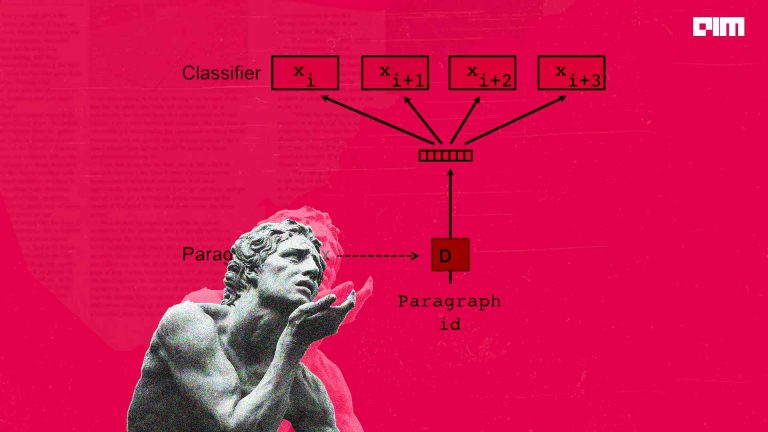

Architecture

Before training the sense2vec model, the words must be annotated, either manually or automatically. Each word is assigned one or more labels, and each unique word-label pair is a sense. We can then train a skip-gram or CBOW model to learn an embedding for each of these senses.
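The annotation step can be pictured with a small sketch. It assumes the labels are already available as (word, label) pairs; the sentence, the helper names `to_senses` and `skipgram_pairs`, and the window size are all invented for illustration and are not part of the sense2vec package. It only shows how sense tokens and skip-gram training pairs might be derived, not the actual training.

```python
# Hypothetical pre-annotated sentence: (word, label) pairs.
tagged = [("Apple", "PROPN"), ("sells", "VERB"), ("phones", "NOUN")]


def to_senses(tagged_tokens):
    """Join each word with its label to form a sense token."""
    return [f"{word}|{label}" for word, label in tagged_tokens]


def skipgram_pairs(senses, window=1):
    """Build (center, context) pairs over sense tokens, as a skip-gram model would see them."""
    pairs = []
    for i, center in enumerate(senses):
        lo = max(0, i - window)
        hi = min(len(senses), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, senses[j]))
    return pairs


senses = to_senses(tagged)
pairs = skipgram_pairs(senses, window=1)
print(senses)
print(pairs)
```

Because the label is part of the token, the same surface word with two different labels ends up as two separate vocabulary entries, each with its own embedding.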

Example

Package

Explosion.ai implemented this model in Python and packaged it as a library (github.com/explosion/sense2vec).

The sense2vec model from this package integrates seamlessly with spaCy. Let’s play with this model.

The package can be installed with pip in a single command:

!pip install sense2vec

Getting started with this package is extremely easy. Standalone usage is as follows:

from sense2vec import Sense2Vec

# Loading pretrained model

s2v = Sense2Vec().from_disk("s2v_reddit_2015_md/s2v_old")

We can get the embedding of a sense, i.e. a word along with its label, by using token + '|' + label as the key.

query = "apple|NOUN"

vector = s2v[query]  # vector is a dense embedding of size 128

The token can be a unigram or a phrase. Examples:

'at_least_two_other_people|NOUN', 'Robert_Baratheon___|PERSON', 'Good_Will_Hunting|WORK_OF_ART', 'a_week_or_so_ago|DATE',
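Judging from the examples above, phrase keys join words with underscores and append the label after a `|`. The helpers below are a minimal sketch of that format; `make_sense_key` and `split_sense_key` are hypothetical names written for this post and are not calls into the sense2vec package, which has its own key handling.

```python
def make_sense_key(phrase, label):
    """Build a key such as 'Good_Will_Hunting|WORK_OF_ART' from a phrase and a label."""
    return phrase.strip().replace(" ", "_") + "|" + label


def split_sense_key(key):
    """Split a key back into (phrase, label); the label is whatever follows the last '|'."""
    phrase, label = key.rsplit("|", 1)
    return phrase.replace("_", " "), label


print(make_sense_key("Good Will Hunting", "WORK_OF_ART"))
print(split_sense_key("a_week_or_so_ago|DATE"))
```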

This pre-trained model supports the following POS tags and entity names as labels:

| Label | Description |
| --- | --- |
| ADJ | adjective |
| ADP | adposition |
| ADV | adverb |
| AUX | auxiliary |
| CONJ | conjunction |
| DET | determiner |
| INTJ | interjection |
| NOUN | noun |
| NUM | numeral |
| PART | particle |
| PRON | pronoun |
| PROPN | proper noun |
| PUNCT | punctuation |
| SCONJ | subordinating conjunction |
| SYM | symbol |
| VERB | verb |
| PERSON | People, including fictional. |
| NORP | Nationalities or religious or political groups. |
| FACILITY | Buildings, airports, highways, bridges, etc. |
| ORG | Companies, agencies, institutions, etc. |
| GPE | Countries, cities, states. |
| LOC | Non-GPE locations, mountain ranges, bodies of water. |
| PRODUCT | Objects, vehicles, foods, etc. (Not services.) |
| EVENT | Named hurricanes, battles, wars, sports events, etc. |
| WORK_OF_ART | Titles of books, songs, etc. |
| LANGUAGE | Any named language. |

import numpy as np

x = s2v['king|NOUN'] - s2v['man|NOUN'] + s2v['woman|NOUN']
y = s2v['queen|NOUN']

def cosine_similarity(x, y):
    # Dot product divided by the product of the two vector norms
    return np.dot(x, y) / (np.linalg.norm(x) * np.linalg.norm(y))

cosine_similarity(x, y)  # result 0.76

The difference between king and man, when added to woman, is very close to queen. These vectors capture the semantic information well. This is not surprising, as even word2vec models these relationships. Let’s look at the most similar senses for polysemous words.

| s2v.most_similar('apple|NOUN') | s2v.most_similar('Apple|ORG') |
| --- | --- |
| [('blackberry|NOUN', 0.8481), ('apple|ADJ', 0.7543), ('banana|NOUN', 0.751), ('grape|NOUN', 0.7432), ('apple|VERB', 0.7349), ('gingerbread|NOUN', 0.733), ('jelly_bean|NOUN', 0.7278), ('pear|NOUN', 0.7213), ('pomegranate|NOUN', 0.7205), ('ice_cream_sandwich|NOUN', 0.7161)] | [('BlackBerry|ORG', 0.9017), ('>Apple|NOUN', 0.8947), ('even_Apple|ORG', 0.8858), ('Blackberry|PERSON', 0.884), ('_Apple|ORG', 0.8812), ('Blackberry|ORG', 0.8776), ('Apple|PERSON', 0.8745), ('Android|ORG', 0.8659), ('OEMs|NOUN', 0.8608), ('Samsung|ORG', 0.8572)] |
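Conceptually, a most-similar query ranks the other senses by cosine similarity to the query vector. The sketch below illustrates that idea with a brute-force search over a tiny table; the 3-dimensional vectors are invented for illustration, and the `most_similar` function here is a stand-in written for this post, not the package's implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy vectors, made up for this example (real sense2vec vectors are much larger).
toy = {
    "apple|NOUN": [1.0, 0.1, 0.0],
    "banana|NOUN": [0.9, 0.2, 0.0],
    "Apple|ORG": [0.0, 0.1, 1.0],
    "Samsung|ORG": [0.1, 0.0, 0.9],
}


def most_similar(key, table, n=1):
    """Return the n senses closest to `key` by cosine similarity, excluding `key` itself."""
    query = table[key]
    scored = [(other, cosine(query, vec)) for other, vec in table.items() if other != key]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:n]


print(most_similar("apple|NOUN", toy))
print(most_similar("Apple|ORG", toy))
```

With these toy values, the fruit sense lands next to another fruit and the company sense next to another company, which mirrors the separation seen in the table above.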

The pre-trained embeddings capture the context very well. However, it is the user’s responsibility to provide a label along with the token in order to select the right vector.

The sense2vec package can infer these labels when it is given a spaCy document object. The following is an example of this kind of usage.
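When no label is at hand, one possible fallback is to try a set of candidate labels and keep the most frequent key that exists. The sketch below assumes a frequency table is available; the counts, the candidate label list, and the `best_sense` helper are all hypothetical and only illustrate the idea. Note that this picks the most common sense and ignores the sentence the word appears in.

```python
# Hypothetical frequency table: sense key -> corpus count (numbers are invented).
toy_freqs = {"duck|NOUN": 900, "duck|VERB": 150, "Apple|ORG": 500}


def best_sense(word, freqs, labels=("NOUN", "VERB", "ADJ", "ORG", "PERSON")):
    """Return the most frequent existing key for `word`, or None if no candidate exists."""
    candidates = [f"{word}|{label}" for label in labels if f"{word}|{label}" in freqs]
    if not candidates:
        return None
    return max(candidates, key=lambda key: freqs[key])


print(best_sense("duck", toy_freqs))
print(best_sense("Apple", toy_freqs))
print(best_sense("zebra", toy_freqs))
```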

import spacy

nlp = spacy.load("en_core_web_sm")

s2v = nlp.add_pipe("sense2vec")

s2v.from_disk("s2v_old/")

Sense2vec can be added as a component to the spaCy pipeline. We can initialize the model with random values and train it, or we can load a pre-trained model and update it according to our needs.

doc = nlp('Power resides where men believe it resides. It’s a trick, a shadow on the wall.')

That’s it: we can now get embeddings, similar phrases, etc. for all the supported phrases in this document.

The spaCy pipeline runs a POS tagger and a named entity recognizer before the sense2vec component. The sense2vec component uses the results of these components to create word senses.

for token in doc:
    try:
        print(token, token.pos_, '\n', token._.s2v_most_similar(3))
    except ValueError:
        # A token/POS-tag combination that is not in the keyed vectors raises an error, so we catch it
        pass

Comparison with word2vec

Let’s compare these embeddings with word2vec embeddings in the context of classification.

A simple neural network was trained and validated on a toy dataset. Here are the results.

Sense2vec embeddings performed a little better than word2vec vectors. We should keep in mind that the labels used by sense2vec here were inferred using spaCy’s taggers. Manual annotations may improve these representations considerably.

Conclusion

Sense2vec is a simple yet powerful variation of word2vec. It improves the performance of tasks like syntactic dependency parsing while significantly reducing the computational overhead of calculating representations of word senses.

Code snippets in this post can be found at https://colab.research.google.com/drive/1xW3lcE5o_6jQ0L-TdUQ2tinZCnXZ2ITL?usp=sharing