Many tasks today rely on text classification, such as hate speech detection and sentiment classification. This article focuses on text classification and sentiment analysis on a combination of three datasets: Amazon reviews, IMDb movie reviews, and Yelp reviews. Before getting to the code, let's cover the basics of text classification and convolutional neural networks.

Introduction to Text Classification

Text classification is the process of assigning labels to pieces of text, either binary labels such as 0 and 1 or labels from another predefined set. In sentiment analysis, these labels tell us the sentiment of the text.

Human language is, at its core, a combination of words. When a person speaks, those words carry a sentiment that another person can easily understand: we can readily tell whether a sentence expresses anger or some other mood. Text classification aims to give a machine this kind of ability, so that it can assign such labels to text automatically.

To train a text classifier, we need data that has already been labeled. The datasets used in this article come with labels: every sentence is labeled 0 or 1 according to its sentiment (0 for negative, 1 for positive). Using the three datasets combined, we will build a model that learns to classify sentences by their sentiment.

The model takes a sentence as input, analyzes it, and assigns it a label.

To build some intuition, let's start with a simple logistic regression model.

In this article, I am using Google Colab.

First, we import the data using pandas.

Input:

import pandas as pd

filepath_dire = {'yelp': '/content/drive/MyDrive/Yugesh/TextCNN/yelp_labelled.txt',
                 'amazon': '/content/drive/MyDrive/Yugesh/TextCNN/amazon_cells_labelled.txt',
                 'imdb': '/content/drive/MyDrive/Yugesh/TextCNN/imdb_labelled.txt'}

data_list = []
for source, filepath in filepath_dire.items():
    data = pd.read_csv(filepath, names=['sentence', 'label'], sep='\t')
    data['source'] = source  # Add another column filled with the source name
    data_list.append(data)

data = pd.concat(data_list)
print(data)

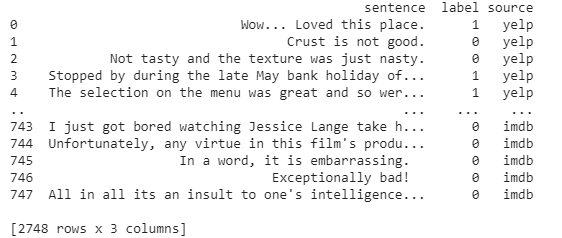

Output:

All three datasets appear to have been imported correctly. Before we can build a model, though, we need to do some preprocessing.

Consider how the model works internally: it computes a label from the text through mathematical operations. That means the model's input has to be numeric, in the form of vectors or matrices, rather than raw text.

There are many ways to turn words into numbers. One of them is to count how many times each word occurs in each sentence, using a vocabulary built from all the words in the dataset. (In NLP, a collection of texts such as our dataset is called a corpus, and this count-based representation is known as the bag-of-words model.)

Building that vocabulary means giving every word its own index number, so that each word corresponds to one fixed position in the count vectors.

Both steps are easy to perform with the CountVectorizer class from the scikit-learn library. Let's look at an example.

Input:

example = ['analytics india magazine is good magazine ', 'analytics india magazine provides good information']

Next, we vectorize the sentences using CountVectorizer.

Input:

from sklearn.feature_extraction.text import CountVectorizer

examplevectorizer = CountVectorizer()
examplevectorizer.fit(example)
examplevectorizer.vocabulary_

Output:

{'analytics': 0,
 'good': 1,
 'india': 2,
 'information': 3,
 'is': 4,
 'magazine': 5,
 'provides': 6}

This mapping is the vocabulary: each word has its own index, which corresponds to one position (dimension) in the feature vectors that CountVectorizer produces.

Using this vocabulary, each sentence can be transformed into a feature vector of word counts, where position i holds the number of times the word with index i occurs in that sentence. This lets us count word frequencies across the dataset.

Input:

examplevectorizer.transform(example).toarray()

Output:

array([[1, 1, 1, 0, 1, 2, 0], [1, 1, 1, 1, 0, 1, 1]])
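To make the mechanics concrete, here is a minimal from-scratch sketch of what CountVectorizer does with its default settings on this example: lowercase the text, extract words with the same default token pattern (words of two or more characters), sort the vocabulary alphabetically, and count. It is a simplification for illustration, and the helper names are hypothetical.

```python
import re

# CountVectorizer's default token pattern: runs of 2+ word characters
TOKEN_PATTERN = r"(?u)\b\w\w+\b"

def build_vocabulary(sentences):
    """Map each distinct word to an index, in alphabetical order."""
    words = set()
    for sentence in sentences:
        words.update(re.findall(TOKEN_PATTERN, sentence.lower()))
    return {word: index for index, word in enumerate(sorted(words))}

def count_vectors(sentences, vocabulary):
    """Return one list of word counts per sentence; unknown words are ignored."""
    vectors = []
    for sentence in sentences:
        counts = [0] * len(vocabulary)
        for word in re.findall(TOKEN_PATTERN, sentence.lower()):
            if word in vocabulary:
                counts[vocabulary[word]] += 1
        vectors.append(counts)
    return vectors

example = ['analytics india magazine is good magazine ',
           'analytics india magazine provides good information']

vocabulary = build_vocabulary(example)
print(vocabulary)
print(count_vectors(example, vocabulary))
# [[1, 1, 1, 0, 1, 2, 0], [1, 1, 1, 1, 0, 1, 1]]
```

The result matches the vocabulary and the count array that CountVectorizer produced above.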

Now let's apply this to our dataset.

First, we split the data into training and test sets.

Input:

from sklearn.model_selection import train_test_split

review = data['sentence'].values
label = data['label'].values

review_train, review_test, label_train, label_test = train_test_split(
    review, label, test_size=0.25, random_state=1000)

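Next, we vectorize the split data. The vectorizer is fit on the training sentences only, so the vocabulary contains no information from the test set.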

Input:

from sklearn.feature_extraction.text import CountVectorizer

review_vectorizer = CountVectorizer()
review_vectorizer.fit(review_train)

Xlr_train = review_vectorizer.transform(review_train)
Xlr_test = review_vectorizer.transform(review_test)
Xlr_train

Output:

The result is a sparse matrix with one row (feature vector) per training sentence and one column per word in the vocabulary, so the number of columns equals the size of the vocabulary.
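A small self-contained sketch of what these matrices look like, using hypothetical toy sentences in place of review_train and review_test: the training matrix has one row per sentence and one column per vocabulary word, and a word that occurs only in the test data (here "terrible") is silently ignored, because the vocabulary was learned from the training data alone.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical toy data standing in for review_train / review_test
toy_train = ["great food and great service", "the food was bad", "service was slow"]
toy_test = ["terrible food"]

vectorizer = CountVectorizer()
vectorizer.fit(toy_train)                  # vocabulary comes from training data only

X_train = vectorizer.transform(toy_train)  # SciPy sparse matrix
X_test = vectorizer.transform(toy_test)

print(X_train.shape)      # (3, 8): 3 sentences, 8 vocabulary words
print(X_test.toarray())   # only "food" is counted; "terrible" is unknown
```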

The data is now ready, so let's build a logistic regression model and fit it to the training data.

Input:

from sklearn.linear_model import LogisticRegression

LRmodel = LogisticRegression()
LRmodel.fit(Xlr_train, label_train)

score = LRmodel.score(Xlr_test, label_test)
print("Accuracy:", score)

Output:

Accuracy: 0.8195050946142649
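As an optional variation (not the approach used above), the vectorizer and the classifier can be chained with scikit-learn's make_pipeline, so a single fit call fits CountVectorizer on the training text and then trains LogisticRegression on the resulting counts. The toy sentences and labels below are hypothetical.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Hypothetical toy data: 1 = positive, 0 = negative
texts = ["loved it", "great phone", "really great",
         "hated it", "awful service", "really awful"]
labels = [1, 1, 1, 0, 0, 0]

# fit() runs CountVectorizer.fit_transform, then LogisticRegression.fit
model = make_pipeline(CountVectorizer(), LogisticRegression())
model.fit(texts, labels)

# predict()/score() reuse the already-fitted vectorizer before classifying
predictions = model.predict(["great", "awful"])
print(predictions)                                   # [1 0]
print("Training accuracy:", model.score(texts, labels))
```

Keeping both steps in one object makes it harder to accidentally refit the vectorizer on test data.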

That is text classification at a very basic level. There are many other methods; TextCNN is one that uses a neural network, specifically a convolutional neural network. Let's first look at CNNs, and then we will use one for text classification.

Introduction to CNN

Convolutional neural networks (CNNs) are among the most successful types of machine learning models; for example, they perform very well in image classification and other computer vision tasks.

A CNN is a kind of neural network distinguished by its convolutional layers. For image classification, a convolutional layer slides small filters across the pixel matrix, so every region of the image is examined. Because it processes data this way, a CNN is well suited to data in matrix form.

A convolutional layer contains multiple filters, each of which learns to detect a feature such as an edge, a corner, or a texture; this is what makes convolution such a useful modeling tool. The layer slides these filters across the image matrix, so it can detect those features wherever they appear. When layers are stacked, each layer builds on the features detected by the previous one, so deeper layers can detect increasingly complex features. To capture this growing variety of features, deeper layers often use more filters.
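To illustrate how a single filter detects a pattern as it slides, here is a minimal NumPy sketch of a 2D convolution (strictly a cross-correlation, which is what deep learning convolution layers compute) with a hand-made vertical-edge filter. In a real CNN the filter values are learned; the image and filter here are hypothetical.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: no padding, stride 1."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the kernel with the patch underneath it and sum
            output[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return output

# Toy 4x4 "image": dark left half (0), bright right half (1)
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)

# Responds to a dark-to-bright transition from left to right
vertical_edge = np.array([[-1, 1],
                          [-1, 1]], dtype=float)

print(conv2d(image, vertical_edge))
# [[0. 2. 0.]
#  [0. 2. 0.]
#  [0. 2. 0.]]
```

The filter responds only in the middle column, exactly where the dark and bright regions meet.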

Text, in contrast, can be treated as sequential data, like a time series: a one-dimensional sequence of words. So we need a one-dimensional convolutional layer, which slides along the sequence. The idea of the model stays almost the same; only the type of data and the dimensionality of the convolution change. To build a TextCNN, we need a word embedding layer and a one-dimensional convolutional network.
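Here is a minimal NumPy sketch of a one-dimensional convolution over a sentence, assuming each word has already been turned into an embedding vector. Each filter spans kernel_size consecutive words and the full embedding dimension, and it slides along the sequence only. The sizes mirror the model built later in this article (100 tokens, 200-dimensional embeddings, 128 filters of width 5, no padding), but the random values are placeholders for learned weights.

```python
import numpy as np

def conv1d_relu(sequence, filters, bias):
    """'Valid' 1D convolution followed by ReLU.

    sequence: (seq_len, embedding_dim), one embedding vector per word
    filters:  (kernel_size, embedding_dim, num_filters)
    bias:     (num_filters,)
    returns:  (seq_len - kernel_size + 1, num_filters)
    """
    kernel_size = filters.shape[0]
    steps = sequence.shape[0] - kernel_size + 1
    output = np.empty((steps, filters.shape[2]))
    for t in range(steps):
        window = sequence[t:t + kernel_size]  # kernel_size consecutive words
        # Sum over the window and embedding axes, for every filter at once
        output[t] = np.tensordot(window, filters, axes=([0, 1], [0, 1])) + bias
    return np.maximum(output, 0.0)            # ReLU activation

rng = np.random.default_rng(0)
seq_len, embedding_dim, kernel_size, num_filters = 100, 200, 5, 128
sentence = rng.normal(size=(seq_len, embedding_dim))
filters = rng.normal(size=(kernel_size, embedding_dim, num_filters))
bias = np.zeros(num_filters)

features = conv1d_relu(sentence, filters, bias)
print(features.shape)              # (96, 128): 100 - 5 + 1 positions
print(filters.size + bias.size)    # 128128 weights and biases
```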

Word Embedding

Word embeddings represent each word as a dense vector, unlike the sparse count vectors we produced with CountVectorizer. This is a different way to preprocess the data. An embedding can map semantically similar words to nearby vectors. It does not understand text as human language; instead, it captures the structure of how words are used in the corpus. The aim is to map words into a geometric space called the embedding space.
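Mechanically, an embedding layer is a lookup table: a matrix with one row per word index, where looking up a word means selecting its row. A minimal NumPy sketch, with a hypothetical tiny vocabulary and random values standing in for the weights that training would learn:

```python
import numpy as np

vocab_size, embedding_dim = 10, 3   # hypothetical sizes; index 0 reserved for padding
rng = np.random.default_rng(42)
# In a trained model these values are learned; random numbers stand in here
embedding_matrix = rng.normal(size=(vocab_size, embedding_dim))

token_ids = np.array([3, 1, 5, 0])       # a hypothetical padded sentence of word indices
embedded = embedding_matrix[token_ids]   # lookup: one dense row per token

print(embedded.shape)   # (4, 3): 4 tokens, each a dense 3-dimensional vector

# For comparison, a count vector needs one dimension per vocabulary word,
# and most of its entries are zero (padding index 0 excluded here)
count_vector = np.bincount(token_ids[token_ids > 0], minlength=vocab_size)
print(count_vector)     # [0 1 0 1 0 1 0 0 0 0]
```

The embedding width stays at embedding_dim no matter how large the vocabulary grows, while a count vector grows with the vocabulary.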

If the embedding captures the relationships between words well, the distance between two word vectors reflects how closely related the words are.
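Closeness in an embedding space is commonly measured with cosine similarity. The sketch below uses hand-picked, hypothetical 3-dimensional vectors (real embeddings are learned and much larger) just to show the computation:

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical toy embeddings, chosen by hand for illustration
embeddings = {
    "good":  np.array([0.9, 0.8, 0.1]),
    "great": np.array([0.8, 0.9, 0.2]),
    "bad":   np.array([-0.7, -0.8, 0.1]),
}

print(cosine_similarity(embeddings["good"], embeddings["great"]))  # close to 1
print(cosine_similarity(embeddings["good"], embeddings["bad"]))    # negative
```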

Keras provides utilities for text preprocessing and sequence preprocessing that we can use to prepare our data for the TextCNN model.

First, we convert each sentence into a sequence of integer word indices; the model's embedding layer will later map these indices to embedding vectors.

Input:

from keras.preprocessing.text import Tokenizer

tokenizer = Tokenizer(num_words=5000)
tokenizer.fit_on_texts(review_train)

Xcnn_train = tokenizer.texts_to_sequences(review_train)
Xcnn_test = tokenizer.texts_to_sequences(review_test)

vocab_size = len(tokenizer.word_index) + 1

print(review_train[1])
print(Xcnn_train[1])

Output:

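Here we can see that the Tokenizer indexes words by frequency: the most common word gets index 1, and less frequent words get progressively higher indices, so rare words end up with the largest index values. Because we passed num_words=5000, texts_to_sequences() keeps only words whose index is below 5000. Finally, index 0 is reserved (it is used for padding) and is never assigned to a word, which is why vocab_size is len(tokenizer.word_index) + 1. Note that these are still integer indices; the embedding layer in the model will map each index to a dense vector.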
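The indexing scheme can be sketched in plain Python. This is a simplified, hypothetical re-implementation for illustration, not Keras's code: it lowercases and splits on whitespace (the real Tokenizer also strips punctuation by default), ranks words by frequency starting at index 1, and, like the num_words argument, drops words whose index is num_words or higher when converting texts.

```python
from collections import Counter

def build_word_index(texts):
    """Index words by frequency: 1 = most frequent word, 2 = next, and so on.

    Ties keep first-appearance order; 0 is never assigned (reserved for padding).
    """
    counts = Counter(word for text in texts for word in text.lower().split())
    ranked = sorted(counts.items(), key=lambda item: -item[1])  # stable sort keeps tie order
    return {word: index for index, (word, _) in enumerate(ranked, start=1)}

def to_sequences(texts, word_index, num_words=None):
    """Replace words with their indices, skipping unknown words and, when
    num_words is given, any word whose index is num_words or higher."""
    sequences = []
    for text in texts:
        sequence = []
        for word in text.lower().split():
            index = word_index.get(word)
            if index is None or (num_words is not None and index >= num_words):
                continue
            sequence.append(index)
        sequences.append(sequence)
    return sequences

texts = ["the food was good", "the service was slow", "the food"]
word_index = build_word_index(texts)
vocab_size = len(word_index) + 1   # + 1 for the reserved index 0

print(word_index)
# {'the': 1, 'food': 2, 'was': 3, 'good': 4, 'service': 5, 'slow': 6}
print(to_sequences(texts, word_index))
# [[1, 2, 3, 4], [1, 5, 3, 6], [1, 2]]
print(to_sequences(texts, word_index, num_words=4))
# [[1, 2, 3], [1, 3], [1, 2]]
```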

One problem is that the sequences have different lengths, while the model expects every input to have the same length. To solve this, we use pad_sequences(), which pads each sequence with zeros (and truncates sequences that are too long) to the length set by the maxlen parameter.
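A minimal plain-Python sketch of the same idea, written to mirror the settings used in this article: padding='post' appends zeros at the end, and, as with pad_sequences's default truncating='pre', sequences longer than maxlen lose tokens from the beginning. The function name pad_post is hypothetical.

```python
def pad_post(sequences, maxlen, value=0):
    """Return equal-length lists: `value` appended at the end ('post' padding);
    sequences longer than maxlen keep only their last maxlen items ('pre' truncation)."""
    if maxlen < 1:
        raise ValueError("maxlen must be a positive integer")
    padded = []
    for seq in sequences:
        kept = list(seq)[-maxlen:]                  # drop extra tokens from the front
        padded.append(kept + [value] * (maxlen - len(kept)))
    return padded

sequences = [[1, 5, 3, 6], [1, 2], [4, 2, 7, 8, 9, 3]]
print(pad_post(sequences, maxlen=5))
# [[1, 5, 3, 6, 0], [1, 2, 0, 0, 0], [2, 7, 8, 9, 3]]
```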

Input:

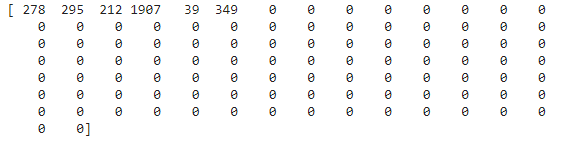

from keras.preprocessing.sequence import pad_sequences

maxlen = 100

Xcnn_train = pad_sequences(Xcnn_train, padding='post', maxlen=maxlen)
Xcnn_test = pad_sequences(Xcnn_test, padding='post', maxlen=maxlen)

print(Xcnn_train[0, :])

Output:

After padding, shorter sequences have zeros appended at the end, so every sequence has exactly maxlen = 100 entries and the data can be fed to a deep learning model. The relationships between words are not captured yet; the embedding layer will learn them during training. Next, we build and fit the TextCNN model.

First, we import Sequential and layers from Keras.

Input:

from keras.models import Sequential
from keras import layers

Next, we build the model by stacking layers.

embedding_dim = 200

textcnnmodel = Sequential()
# Map each word index to a dense 200-dimensional vector
textcnnmodel.add(layers.Embedding(vocab_size, embedding_dim, input_length=maxlen))
# 128 filters, each spanning 5 consecutive words
textcnnmodel.add(layers.Conv1D(128, 5, activation='relu'))
# Keep the largest value each filter produces across the sentence
textcnnmodel.add(layers.GlobalMaxPooling1D())
textcnnmodel.add(layers.Dense(10, activation='relu'))
# Single sigmoid unit: probability of label 1 (positive sentiment)
textcnnmodel.add(layers.Dense(1, activation='sigmoid'))

textcnnmodel.compile(optimizer='adam',
                     loss='binary_crossentropy',
                     metrics=['accuracy'])
textcnnmodel.summary()

Output:

Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_1 (Embedding)      (None, 100, 200)          920600
_________________________________________________________________
conv1d_1 (Conv1D)            (None, 96, 128)           128128
_________________________________________________________________
global_max_pooling1d_1 (Glob (None, 128)               0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                1290
_________________________________________________________________
dense_3 (Dense)              (None, 1)                 11
=================================================================
Total params: 1,050,029
Trainable params: 1,050,029
Non-trainable params: 0
_________________________________________________________________
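The numbers in this summary can be checked by hand. A short sketch of the arithmetic, assuming the standard parameter formulas for these layer types; note that the vocabulary size of 4603 is inferred from the embedding layer's parameter count (920600 / 200) rather than printed in the article.

```python
embedding_dim, maxlen = 200, 100
vocab_size = 920600 // embedding_dim       # inferred from the summary: 4603
kernel_size, num_filters = 5, 128

embedding_params = vocab_size * embedding_dim                   # one vector per word index
conv_params = (kernel_size * embedding_dim + 1) * num_filters   # weights + 1 bias per filter
conv_output_len = maxlen - kernel_size + 1                      # no padding: 100 - 5 + 1
pooling_params = 0                                              # max pooling has no weights
dense_hidden_params = num_filters * 10 + 10                     # 128 inputs -> 10 units
dense_output_params = 10 * 1 + 1                                # 10 inputs -> 1 unit
total = (embedding_params + conv_params + pooling_params
         + dense_hidden_params + dense_output_params)

print(vocab_size, conv_output_len, total)  # 4603 96 1050029
```

Almost 88% of the parameters sit in the embedding layer, which helps explain why the model can memorize a small training set.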

Let's fit the model and check its accuracy.

Input:

textcnnmodel.fit(Xcnn_train, label_train,
                 epochs=10,
                 verbose=False,
                 validation_data=(Xcnn_test, label_test),
                 batch_size=10)

loss, accuracy = textcnnmodel.evaluate(Xcnn_train, label_train, verbose=False)
print("Training Accuracy: {:.4f}".format(accuracy))

loss, accuracy = textcnnmodel.evaluate(Xcnn_test, label_test, verbose=False)
print("Testing Accuracy: {:.4f}".format(accuracy))

Output:

Training Accuracy: 1.0000
Testing Accuracy: 0.8040

Here we can see that the model overfits the training data (100% training accuracy), but its test accuracy of about 80% is still decent, although slightly below the logistic regression baseline's 82%. There are many ways to improve the model. In this article, we did not clean the data, and CNNs generally need a large amount of training data, so the model may perform better with more samples. Even with the overfitting, it gives quite good results.

In many cases, we will need to work with large, complex datasets on which a simple classification model may not perform well. For example, in complex datasets where the vocabulary, and with it the size of the count matrices, grows very large, this model is a good option because it learns relationships between words.