3D dense face alignment(3DDFA) is a trending technique for many face tasks, For example, object recognition, animation, tracking, image restoration, and many more. For now, most of the studies in 3DDFA are divided into two categories:

- 3D Morphable Model(3DMM) parameter regression,

- and Dense vertices regression.

Now Existing method of 3D dense face alignment only focuses on accuracy, which tends to limit the scope of their practical applications. In previous implementations of 3DDFA, there was a major problem with accuracy and output inference but the new 3DdFA_V2(version 2nd) came up with a new regression framework that makes a reliable balance between accuracy, speed, and stability. 3DDFA_V2 is published by Jianzhu Guo, Xiangyu Zhu, Yang Yang, Fan Yang, Zhen Lei, and Stan Z. Li in the research paper called Towards Fast, Accurate and Stable

3D Dense Face Alignment The new backbone of this framework is very lightweight and its source code is open-sourced on GitHub here. The repository is owned by Jianzhu Guo. The research has been accepted by ECCV 2020

3DDFA_V2 (3D Dense Face Alignment- version 2)

It is an improved version of previous implementations of 3DDFA now named 3DDFA_V2, it achieved promising speed, accuracy, and stability also incorporating the fast face detector FaceBoxes instead of Dlib. It introduced the new meta-joint optimization strategy to dynamically regress a small set of 3DMM parameters, also to further improve the stability of the model on videos authors virtual synthesis method to convert one image to a short-video which integrates in-plane and out-of-plane face moving.

- It runs over 50fps(19.2ms) on a single CPU

- Can reach up to 130fps(7.2ms) on multiple CPU(i5-8259U) core.

- It is 24x times faster than PRNet

- It is a more dynamically optimized technique leveraging the 3DMM parameter through a novel meta-optimization strategy.

Architecture

3DDFA_V2 architecture consists of four parts:

- Lightweight backbone MobileNet architecture for 3DMM parameter predictions

- Meta joint optimization of fWPDC and VDC.

- Landmark regression regularization

- Short-video synthesis for better training.

To decrease the computation burden the landmarks regression branch is discarded during inference.

Implementation

To see the outputs we will be using Google Colab demonstration, first, we will clone the 3DDFA_V2 repo, and then we will set up the environment. Let’s jump straight to the code:

Cloning and setup up the environment

%cd /content !git clone https://github.com/cleardusk/3DDFA_V2.git %cd 3DDFA_V2 !sh ./build.sh

Importing modules

import cv2 import yaml from FaceBoxes import FaceBoxes from TDDFA import TDDFA from utils.render import render from utils.depth import depth from utils.pncc import pncc from utils.uv import uv_tex from utils.pose import viz_pose from utils.serialization import ser_to_ply, ser_to_obj from utils.functions import draw_landmarks, get_suffix import matplotlib.pyplot as plt from skimage import io

Load 3DDFA configurations

It will enable the ONNX environment to speed up the process, default backbone of the architecture is MobileNet_V1 with input size 120×120, and the default pretrained weight/mb1_120x120.pth, there is also another wider factor is available as this project provide two mobilenet models to choose from.

| Model | Input | #Params | #Macs | Inference (TF) |

| MobileNet | 120×120 | 3.27M | 183.5M | ~6.2ms |

| MobileNet x0.5 | 120×120 | 0.85M | 49.5M | ~2.9ms |

cfg = yaml.load(open('configs/mb1_120x120.yml'), Loader=yaml.SafeLoader)

onnx_flag = True # True to use ONNX to speed up

if onnx_flag:

!pip install onnxruntime

import os

os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

os.environ['OMP_NUM_THREADS'] = '4'

from FaceBoxes.FaceBoxes_ONNX import FaceBoxes_ONNX

from TDDFA_ONNX import TDDFA_ONNX

face_boxes = FaceBoxes_ONNX()

tddfa = TDDFA_ONNX(**cfg)

else:

face_boxes = FaceBoxes()

tddfa = TDDFA(gpu_mode=False, **cfg)

Testing

Let;s first take any image you want

img_url = 'https://photovideocreative.com/wordpress/wp-content/uploads/2017/12/Angles-de-prise-de-vue-horizontal-contreplong%C3%A9-et-plong%C3%A9.jpg' img = io.imread(img_url) plt.imshow(img) img = img[..., ::-1] # RGB -> BGR

Detecting faces using FaceBoxes

The FaceBoxes module is modified from FaceBoxes.PyTorch. There are some previous work on 3DDFA or reconstruction are available like: 3DDFA, face3d, PRNet.

boxes = face_boxes(img)

print(f'Detect {len(boxes)} faces')

print(boxes)

#Regressing 3DMM params, reconstruction and visualization

param_lst, roi_box_lst = tddfa(img, boxes)

Reconstructing vertices and visualizing sparse landmarks using 3DDFA

dense_flag = False ver_lst = tddfa.recon_vers(param_lst, roi_box_lst, dense_flag=dense_flag) draw_landmarks(img, ver_lst, dense_flag=dense_flag)

Reconstructing vertices and visualizing dense landmarks

dense_flag = True ver_lst = tddfa.recon_vers(param_lst, roi_box_lst, dense_flag=dense_flag) draw_landmarks(img, ver_lst, dense_flag=dense_flag)

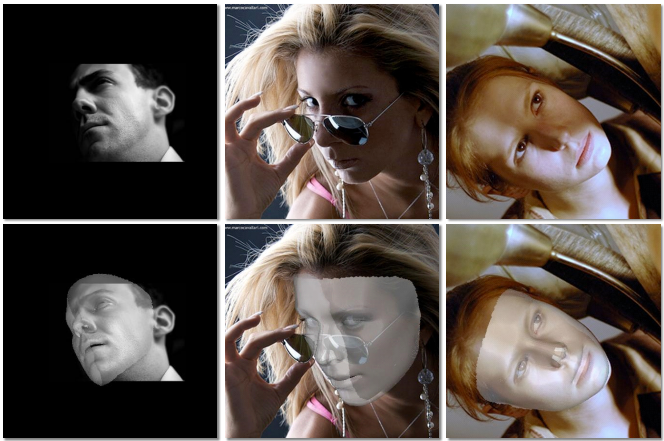

Reconstructing vertices and render

ver_lst = tddfa.recon_vers(param_lst, roi_box_lst, dense_flag=dense_flag) render(img, ver_lst, tddfa.tri, alpha=0.6, show_flag=True);

Reconstructing vertices and render pncc

ver_lst = tddfa.recon_vers(param_lst, roi_box_lst, dense_flag=dense_flag) pncc(img, ver_lst, tddfa.tri, show_flag=True);

Running it on Video

python3 demo_video.py -f examples/inputs/videos/214.avi --onnx

Conclusion

This new approach for more stable, fast, and accurate 3D Dense Face alignment is really a new way of training and inference face data. In Colab the code takes nearly seconds to run. just because of lightweight mobinet architecture, surprisingly the latency of onnxruntime was also much smaller on CPU, for more you can follow the below resources:

- Official GitHub Repository

- Official Research Paper

- Running on windows discussion

- Google Colab demo