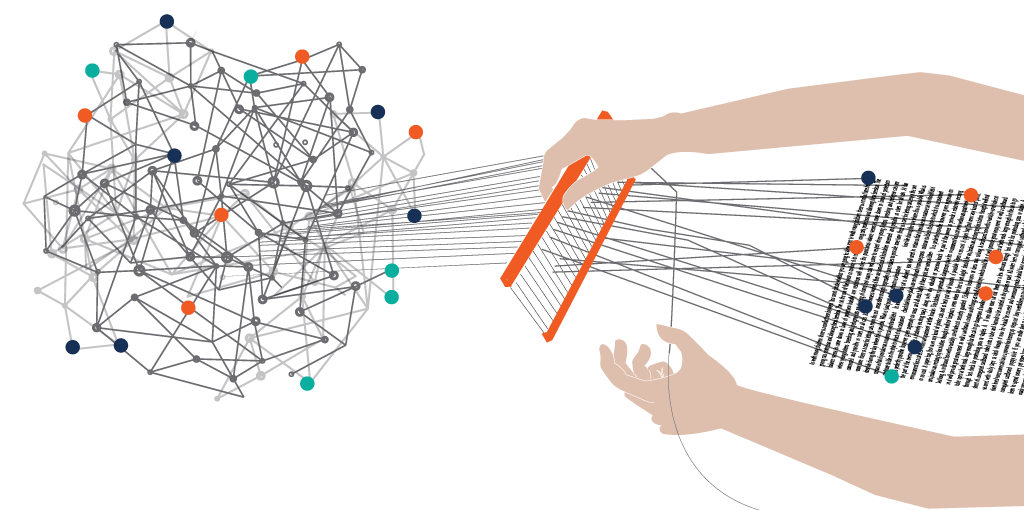

Did you know that you can google almost anything today and be fairly sure to find something related to it on the internet? That is thanks to the huge amount of text data freely available to us. You may well be tempted to use all this data for your machine learning models. The problem is that machines don’t recognize and understand text the way we do. So how do we work around this?

The trick lies in the world of Natural Language Processing (NLP). Unstructured text data needs to be cleaned before we can proceed to the modelling stage, and the first step is tokenization: splitting a phrase or a paragraph into smaller units such as words or sentences.

In this article, we will start with the first step of data pre-processing, i.e., tokenization, and implement different methods in Python to tokenize text data.

Tokenize Words Using NLTK

Let’s start with word tokenization using the NLTK library. Its word_tokenize() function splits a string into a list of tokens; unlike a plain whitespace split, it also separates punctuation marks from the words they are attached to.

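#Tokenize words
import nltk
from nltk.tokenize import word_tokenize

nltk.download('punkt')  # tokenizer models; newer NLTK releases may need 'punkt_tab' instead
text = "Machine learning is a method of data analysis that automates analytical model building"
word_tokenize(text)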
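To see why a dedicated tokenizer is useful, the sketch below compares a plain whitespace split with a rough, standard-library approximation of punctuation-aware tokenization. The helper `simple_word_tokenize()` and its pattern are hypothetical illustrations, not NLTK’s actual Treebank-based algorithm.

```python
import re

# Hypothetical helper approximating punctuation-aware tokenization:
# \w+ matches a run of letters, digits or underscores; [^\w\s] matches a single
# character that is neither a word character nor whitespace (i.e. punctuation).
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")

def simple_word_tokenize(text):
    """Return word and punctuation tokens in the order they appear."""
    return TOKEN_PATTERN.findall(text)

text = "Machine learning, in short, automates model building."
print(text.split())                # punctuation stays attached: 'learning,' 'building.'
print(simple_word_tokenize(text))  # punctuation becomes separate tokens
```

Contractions show where this sketch falls short of NLTK: here "don’t" becomes "don", "’", "t", whereas word_tokenize() returns "do" and "n’t".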

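Next, we tokenize the text into sentences instead of words. sent_tokenize() relies on NLTK’s pre-trained Punkt model, which detects sentence boundaries such as full stops (.) while trying to ignore full stops that belong to abbreviations.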

#Tokenize Sentence
from nltk.tokenize import sent_tokenize
text = "Machine learning is a method of data analysis that automates analytical model building. It is a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns and make decisions with minimal human intervention."
sent_tokenize(text)
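Splitting at every full stop breaks on abbreviations such as "Dr." or "e.g.". Punkt learns which tokens are abbreviations from unannotated text; the sketch below fakes that knowledge with a small hand-written set (the hypothetical `ABBREVIATIONS` constant and `split_sentences()` helper) purely to show why it matters.

```python
# Hypothetical, hand-picked abbreviation list; Punkt learns these from data instead.
ABBREVIATIONS = frozenset({"dr.", "mr.", "mrs.", "e.g.", "i.e.", "etc."})

def split_sentences(text, abbreviations=frozenset()):
    """End a sentence after a token ending in . ! or ?, unless it is a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if token[-1] in ".!?" and token.lower() not in abbreviations:
            sentences.append(" ".join(current))
            current = []
    if current:  # keep trailing text that has no terminal punctuation
        sentences.append(" ".join(current))
    return sentences

text = "Dr. Smith builds models. He uses data, e.g. text, to train them."
print(split_sentences(text))                 # naive: breaks after "Dr." and "e.g."
print(split_sentences(text, ABBREVIATIONS))  # abbreviation-aware
```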

#Tokenize sentences in different languages
import nltk
from nltk.tokenize import sent_tokenize

nltk.download('punkt')  # newer NLTK releases may need nltk.download('punkt_tab')
text = 'Hola amigo. Me llamo Ankit.'
# Use the pre-trained Spanish Punkt model instead of the default English one
sent_tokenize(text, language='spanish')

Regular Expression

A regular expression (regex) is a sequence of characters that defines a search pattern, used to match or find strings. We will use NLTK’s RegexpTokenizer and Python’s built-in re library to tokenize the words and sentences of a paragraph.

from nltk.tokenize import RegexpTokenizer

# Match runs of word characters and apostrophes (raw string avoids escape warnings)
tokenizer = RegexpTokenizer(r"[\w']+")
text = "Machine learning is a method of data analysis that automates analytical model building"
tokenizer.tokenize(text)
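With its default `gaps=False`, RegexpTokenizer returns the substrings that match the pattern, which is essentially what `re.findall()` does; with `gaps=True` the pattern describes the separators instead, much like `re.split()`. The standard-library sketch below mimics both modes; `regexp_tokenize()` and `gap_tokenize()` are hypothetical helpers, not NLTK functions.

```python
import re

# [\w']+ : one or more word characters or apostrophes, so "doesn't" stays whole.
WORD_PATTERN = re.compile(r"[\w']+")

def regexp_tokenize(text):
    """Match mode: return every substring that matches the pattern."""
    return WORD_PATTERN.findall(text)

def gap_tokenize(text, separator=r"\s+"):
    """Gap mode: the pattern describes the separators; empty pieces are dropped."""
    return [piece for piece in re.split(separator, text) if piece]

text = "Machine learning doesn't need hand-written rules!"
print(regexp_tokenize(text))  # hyphen and '!' are not in the pattern, so they vanish
print(gap_tokenize(text))     # only whitespace separates tokens
```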

#Split Sentences
import re

text = """Machine learning is a method of data analysis that automates analytical model building. It is a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns and make decisions with minimal human intervention."""
# Split wherever ., ! or ? is followed by a space (the punctuation mark is consumed)
sentences = re.compile('[.!?] ').split(text)
sentences
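Splitting on `'[.!?] '` consumes the punctuation, so every sentence except the last loses its final mark, and a newline after a full stop is not treated as a boundary. One common alternative, sketched below with a hypothetical `regex_sentences()` helper, uses the lookbehind `(?<=[.!?])` so the split happens after the punctuation without removing it, and `\s+` so any run of whitespace counts. Like the original pattern, it still knows nothing about abbreviations.

```python
import re

# Split at whitespace that follows ., ! or ?; the lookbehind keeps the punctuation.
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")

def regex_sentences(text):
    """Return sentences with their terminal punctuation intact."""
    return [s for s in SENTENCE_BOUNDARY.split(text.strip()) if s]

text = "Machine learning automates model building. Is it AI?\nYes!"
print(re.compile('[.!?] ').split(text))  # original pattern
print(regex_sentences(text))             # lookbehind version
```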

Split()

Python’s built-in split() method breaks a string into a list of substrings at the specified separator. When no separator is given, it splits on whitespace.

text = """Machine learning is a method of data analysis that automates analytical model building"""
# Splits at whitespace
text.split()
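Calling split() with no argument and split(' ') behave differently: the former collapses runs of whitespace (including tabs and newlines) and ignores leading and trailing whitespace, while the latter splits at every single space and can produce empty strings. The optional `maxsplit` argument limits the number of splits. A quick comparison:

```python
text = "  Machine   learning\tis\nfun "

# No argument: split on any run of whitespace, ignoring leading/trailing whitespace.
print(text.split())
# Explicit ' ': split at every single space; consecutive spaces yield empty strings.
print(text.split(" "))
# maxsplit=1: at most one split; the remainder stays in the last element.
print("Machine learning is fun".split(maxsplit=1))
```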

#Split Sentence
text = """Machine learning is a method of data analysis that automates analytical model building.It is a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns and make decisions with minimal human intervention."""
# Splits at every full stop
text.split('.')
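Because the text ends with a full stop, text.split('.') returns a trailing empty string, and the pieces lose their full stops. A small cleanup step, sketched below as a hypothetical `split_on_full_stop()` helper, strips whitespace, drops empty pieces and restores the full stop:

```python
def split_on_full_stop(text):
    """Split at '.', trim whitespace, drop empty pieces and re-append the full stop."""
    return [piece.strip() + "." for piece in text.split(".") if piece.strip()]

text = "Machine learning automates model building.It is a branch of AI."
print(text.split("."))            # note the trailing empty string
print(split_on_full_stop(text))
```

It still splits inside abbreviations ("e.g.") and decimal numbers ("3.5"), which is why a dedicated sentence tokenizer is usually preferable.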

spaCy

spaCy is an open-source NLP library that, among other things, tokenizes text into words and sentences. We will load en_core_web_sm, its small English pipeline (install it first with python -m spacy download en_core_web_sm).

import spacy

sp = spacy.load('en_core_web_sm')
sentence = sp('Machine learning is a method of data analysis that automates analytical model building.')
print(sentence)

# Collect each Token object produced by the tokenizer
L = []
for word in sentence:
    L.append(word)
L
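According to spaCy’s documentation, its tokenizer first splits text on whitespace and then, for each substring, checks tokenizer exceptions (special cases such as "don’t" → "do", "n’t") and repeatedly splits off prefixes and suffixes such as brackets, quotes and punctuation. The toy sketch below imitates that idea with tiny, hypothetical `PREFIXES`, `SUFFIXES` and `SPECIAL_CASES` tables; spaCy’s real rules are far more extensive and also handle infixes.

```python
# Hypothetical, minimal rule tables; spaCy ships much larger language-specific ones.
PREFIXES = set("(\"'[")
SUFFIXES = set(")\".,!?;:']")
SPECIAL_CASES = {"don't": ["do", "n't"], "can't": ["ca", "n't"]}

def toy_tokenize(text):
    """Whitespace split, then peel off prefixes/suffixes and expand special cases."""
    tokens = []
    for chunk in text.split():
        prefix_tokens, suffix_tokens = [], []
        while chunk and chunk not in SPECIAL_CASES:
            if chunk[0] in PREFIXES:
                prefix_tokens.append(chunk[0])
                chunk = chunk[1:]
            elif chunk[-1] in SUFFIXES:
                suffix_tokens.insert(0, chunk[-1])  # keep suffixes in original order
                chunk = chunk[:-1]
            else:
                break
        tokens.extend(prefix_tokens)
        if chunk in SPECIAL_CASES:
            tokens.extend(SPECIAL_CASES[chunk])
        elif chunk:
            tokens.append(chunk)
        tokens.extend(suffix_tokens)
    return tokens

print(toy_tokenize("(Hello, don't go!)"))
```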

#Split Sentences
sentence = sp('Machine learning is a method of data analysis that automates analytical model building. It is a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns and make decisions with minimal human intervention.')
print(sentence)
x = []
for sent in sentence.sents:
    x.append(sent.text)
x

Gensim

The last library we will cover in this article is Gensim, an open-source Python library for topic modelling and similarity retrieval on large corpora.

from gensim.utils import tokenize
text = """Artificial intelligence, the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings."""
list(tokenize(text))
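Gensim’s tokenize() returns a generator of alphabetic tokens. As far as we know, it matches runs of word characters that are not digits, so punctuation and numbers are dropped and hyphenated words such as "computer-controlled" are split in two; its `lowercase` option is off by default. The stdlib sketch below approximates that matching rule with a hypothetical `alphabetic_tokens()` helper; it is an illustration, not Gensim’s code.

```python
import re

# Runs of word characters that are not digits (letters and underscores only).
ALPHABETIC = re.compile(r"(?:(?!\d)\w)+")

def alphabetic_tokens(text, lowercase=False):
    """Lazily yield alphabetic tokens, optionally lowercased."""
    for match in ALPHABETIC.finditer(text):
        token = match.group()
        yield token.lower() if lowercase else token

text = "A computer-controlled robot with 2 arms."
print(list(alphabetic_tokens(text)))
print(list(alphabetic_tokens(text, lowercase=True)))
```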

#Split Sentence
# gensim.summarization was removed in Gensim 4.0; this import requires Gensim 3.x
from gensim.summarization.textcleaner import split_sentences
text = """Artificial intelligence, the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience."""
split1 = split_sentences(text)
split1

Final Thoughts

In this article, we implemented different methods of tokenizing a given text. Before starting the model-building process, the data needs to be cleaned, and tokenization is a crucial step in that cleaning/pre-processing stage. We can extend this work by exploring other pre-processing techniques such as part-of-speech tagging and stop word removal. The complete code of the above implementation is available in AIM’s GitHub repository. Please visit this link to find the notebook of this code.