Twitter has become a major source of news because it hosts one of the largest streams of public conversation in the world. Breaking news that has not yet reached news channels or websites may already be trending on Twitter. Any information, whether constructive or destructive, can spread quickly across the Twitter network without passing through editorial review or regulation.

Because of this, Twitter has also become popular with data analysts who want to gather trending information and analyze it. In this article, we will learn how to download and analyze Twitter data: how to fetch tweets that match a keyword of interest, how to clean, analyze and visualize them, and finally how to convert them into a data frame and save it to a CSV file.

Here, we take a hands-on approach to downloading and analyzing Twitter data. We start by importing all the required libraries. Make sure to install the ‘tweepy’, ‘textblob’ and ‘wordcloud’ libraries using ‘pip install tweepy’, ‘pip install textblob’ and ‘pip install wordcloud’; the code also uses pandas, numpy, matplotlib, nltk and scikit-learn.

```python
# Importing libraries
import json
import re
from collections import Counter

import matplotlib.pyplot as plt
import nltk
import numpy as np
import pandas as pd
import tweepy
from nltk.corpus import stopwords
from nltk.stem.porter import PorterStemmer
from textblob import TextBlob
from wordcloud import WordCloud

nltk.download('stopwords')
```

Downloading the Data from Twitter

To use the ‘tweepy’ API, you need a Twitter Developer account. After creating the account, go to the ‘Get Started’ option and navigate to ‘Create an app’. Once the app is created, note down the following credentials from it: the consumer key, consumer secret, access token and access token secret.

```python
# Authorization and search tweets
# Getting authorization
consumer_key = 'XXXXXXXXXXXXXXX'
consumer_key_secret = 'XXXXXXXXXXXXXXX'
access_token = 'XXXXXXXXXXXXXXX'
access_token_secret = 'XXXXXXXXXXXXXXX'

auth = tweepy.OAuthHandler(consumer_key, consumer_key_secret)
auth.set_access_token(access_token, access_token_secret)
# wait_on_rate_limit=True makes tweepy sleep until the rate limit resets instead of failing
api = tweepy.API(auth, wait_on_rate_limit=True)
```
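Hard-coding keys in a script makes them easy to leak, for example by committing the file. A common alternative is to read them from environment variables. The sketch below uses only the standard library and assumes hypothetical variable names (`TWITTER_CONSUMER_KEY` and so on); rename them to suit your setup.

```python
import os
from typing import Mapping

# Hypothetical environment variable names, not defined by Twitter or tweepy
CREDENTIAL_VARIABLES = {
    'consumer_key': 'TWITTER_CONSUMER_KEY',
    'consumer_key_secret': 'TWITTER_CONSUMER_SECRET',
    'access_token': 'TWITTER_ACCESS_TOKEN',
    'access_token_secret': 'TWITTER_ACCESS_TOKEN_SECRET',
}


def load_twitter_credentials(env: Mapping[str, str] = os.environ) -> dict:
    """Return the four OAuth credentials, failing loudly if any are missing."""
    missing = [var for var in CREDENTIAL_VARIABLES.values() if not env.get(var)]
    if missing:
        raise KeyError('Missing environment variables: ' + ', '.join(missing))
    return {name: env[var] for name, var in CREDENTIAL_VARIABLES.items()}
```

The returned values can then be passed to `tweepy.OAuthHandler` and `auth.set_access_token` in the same way as the hard-coded strings above.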

Next, specify the keyword you are interested in and the maximum number of tweets to download through the tweepy API.

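```python
# Defining the search keyword and number of tweets, then searching tweets
query = 'lockdown'
max_tweets = 2000
# tweepy 4.x names this method api.search_tweets (it was api.search in tweepy 3.x)
searched_tweets = [status for status in tweepy.Cursor(api.search_tweets, q=query).items(max_tweets)]
```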
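`tweepy.Cursor` takes care of pagination: it requests one page of results at a time and yields individual tweets until `items(max_tweets)` reaches its limit. The generator below is a minimal standard-library sketch of that idea, not tweepy's implementation; `FakeSearchPages` is a hypothetical stand-in for the search API that returns one page per call.

```python
from itertools import islice


class FakeSearchPages:
    """Hypothetical stand-in for a search API: returns one page of tweets per call."""

    def __init__(self, page_size=3, total_results=10):
        self.page_size = page_size
        self.total_results = total_results
        self.calls = 0  # number of "requests" made so far

    def __call__(self, page):
        self.calls += 1
        start = page * self.page_size
        stop = min(start + self.page_size, self.total_results)
        return [f'tweet {i}' for i in range(start, stop)]


def iterate_items(fetch_page, max_items):
    """Lazily yield items page by page, stopping after max_items."""
    def all_items():
        page = 0
        while True:
            results = fetch_page(page)
            if not results:  # an empty page means there are no more results
                return
            yield from results
            page += 1
    return islice(all_items(), max_items)
```

Because items are produced lazily, no further pages are requested once the limit is reached, and wrapping the iterator in a list (as the list comprehension above does) collects the results.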

We will now analyze the sentiment of the downloaded tweets and visualize the results.

Sentiment Analysis

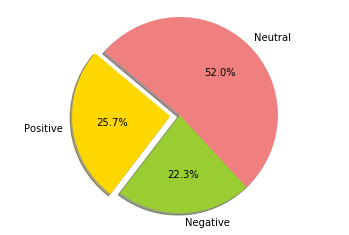

```python
# Sentiment analysis report
# Classifying each tweet as positive, negative or neutral by its polarity score
pos = 0
neg = 0
neu = 0
for tweet in searched_tweets:
    polarity = TextBlob(tweet.text).sentiment.polarity
    if polarity > 0:
        pos += 1
    elif polarity < 0:
        neg += 1
    else:
        neu += 1
print("Total Positive = ", pos)
print("Total Negative = ", neg)
print("Total Neutral = ", neu)

# Plotting sentiments from the computed counts
labels = 'Positive', 'Negative', 'Neutral'
sizes = [pos, neg, neu]
colors = ['gold', 'yellowgreen', 'lightcoral']
explode = (0.1, 0, 0)  # explode the first slice
plt.pie(sizes, explode=explode, labels=labels, colors=colors,
        autopct='%1.1f%%', shadow=True, startangle=140)
plt.axis('equal')
plt.show()
```
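The counting rule used here (polarity above zero is positive, below zero negative, exactly zero neutral) can be isolated into a small helper that is easy to test. This sketch works on plain polarity numbers, so it runs without TextBlob; in the article's pipeline the inputs would be `TextBlob(tweet.text).sentiment.polarity` values. The function names are hypothetical.

```python
from collections import Counter

LABELS = ('Positive', 'Negative', 'Neutral')


def label_polarity(polarity):
    """Map a polarity score in [-1.0, 1.0] to a sentiment label."""
    if polarity > 0:
        return 'Positive'
    if polarity < 0:
        return 'Negative'
    return 'Neutral'


def sentiment_shares(polarities):
    """Return label counts and percentages, both in LABELS order."""
    counts = Counter(label_polarity(p) for p in polarities)
    total = sum(counts.values())
    sizes = [counts[label] for label in LABELS]
    percents = [100 * size / total if total else 0.0 for size in sizes]
    return sizes, percents
```

The `sizes` list can be passed straight to `plt.pie`, and the percentages should match the labels that `autopct='%1.1f%%'` draws on each slice.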

Next, we create a data frame from the downloaded tweet data and save it to a CSV file on the local system, so that the data can be reused in other experiments.

Creating the Data Frame and Saving It to a CSV File

```python
# Creating a DataFrame of tweets
# Saving the raw JSON of every tweet to a text file
my_list_of_dicts = []
for each_json_tweet in searched_tweets:
    my_list_of_dicts.append(each_json_tweet._json)

with open('tweet_json_Data.txt', 'w', encoding='utf-8') as file:
    file.write(json.dumps(my_list_of_dicts, indent=4))

# Reloading the JSON and keeping only the fields we need
my_demo_list = []
with open('tweet_json_Data.txt', encoding='utf-8') as json_file:
    all_data = json.load(json_file)
    for each_dictionary in all_data:
        tweet_id = each_dictionary['id']
        text = each_dictionary['text']
        favorite_count = each_dictionary['favorite_count']
        retweet_count = each_dictionary['retweet_count']
        created_at = each_dictionary['created_at']
        my_demo_list.append({'tweet_id': str(tweet_id),
                             'text': str(text),
                             'favorite_count': int(favorite_count),
                             'retweet_count': int(retweet_count),
                             'created_at': created_at,
                             })

tweet_dataset = pd.DataFrame(my_demo_list, columns=['tweet_id', 'text', 'favorite_count',
                                                    'retweet_count', 'created_at'])

# Writing the tweet dataset to a CSV file for future reference
tweet_dataset.to_csv('tweet_data.csv')
```
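The `created_at` field kept above is a plain string in the Twitter v1.1 format, for example `'Wed Oct 10 20:19:24 +0000 2018'`. Converting it to a real timestamp makes time-based analysis (tweets per hour, per day) easier. A minimal standard-library sketch follows; the helper name is hypothetical.

```python
from datetime import datetime

# Layout of the v1.1 'created_at' string, e.g. 'Wed Oct 10 20:19:24 +0000 2018'
TWITTER_TIME_FORMAT = '%a %b %d %H:%M:%S %z %Y'


def parse_created_at(value):
    """Convert a created_at string into a timezone-aware datetime."""
    return datetime.strptime(value, TWITTER_TIME_FORMAT)
```

With pandas, the same format string can be applied to a whole column: `pd.to_datetime(tweet_dataset['created_at'], format='%a %b %d %H:%M:%S %z %Y')`.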

Cleaning Tweet Texts using NLP Operations

Now that the tweet dataset is ready, we will inspect it and clean the text in the following steps.

```python
tweet_dataset.shape
tweet_dataset.head()
```

```python
# Cleaning data
# Removing @ handles
def remove_pattern(input_txt, pattern):
    r = re.findall(pattern, input_txt)
    for i in r:
        # escape the match so it is removed literally
        input_txt = re.sub(re.escape(i), '', input_txt)
    return input_txt

tweet_dataset['text'] = np.vectorize(remove_pattern)(tweet_dataset['text'], r"@[\w]*")
tweet_dataset.head()
```
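`remove_pattern` finds every handle and then deletes each one with a separate `re.sub` call. The same result can be obtained in a single pass by substituting the pattern directly. A minimal sketch, with a hypothetical helper name:

```python
import re

# One or more word characters after '@', e.g. '@bob_99'
HANDLE_PATTERN = re.compile(r'@\w+')


def remove_handles(text):
    """Delete every @handle from a tweet in one pass."""
    return HANDLE_PATTERN.sub('', text)
```

On a pandas column this corresponds to `tweet_dataset['text'].str.replace(r'@\w+', '', regex=True)`. Unlike `@[\w]*`, this pattern leaves a lone `@` in place; the later step that keeps only letters and digits removes it anyway.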

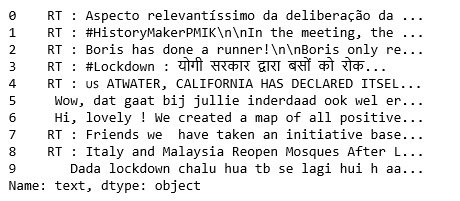

```python
tweet_dataset['text'].head()
```

With the @ handles removed, we now perform further NLP operations on the tweet texts: keeping only letters and digits, converting everything to lowercase, tokenization, stop-word removal and stemming. Because tweets also contain retweet markers ("RT") and hyperlinks, we remove those as well.

```python
# Cleaning tweets
ps = PorterStemmer()
stop_words = set(stopwords.words('english'))  # build the set once, not per word
corpus = []
for text in tweet_dataset['text']:
    tweet = re.sub(r'https?://\S+', ' ', text)  # remove hyperlinks
    tweet = re.sub('[^a-zA-Z0-9]', ' ', tweet)  # keep only letters and digits
    tweet = tweet.lower()
    tweet = re.sub(r'\brt\b', ' ', tweet)  # remove the standalone retweet marker only
    tweet = tweet.split()
    tweet = [ps.stem(word) for word in tweet if word not in stop_words]
    tweet = ' '.join(tweet)
    corpus.append(tweet)
```
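The retweet marker has to be matched as a whole word: a plain `re.sub('rt', '', 'start')` returns `'sta'`, damaging ordinary words. The sketch below repeats the cleaning steps with the standard library only (stemming is omitted) and drops `rt` only as a standalone token. The function name and the small stop-word set used in the check are hypothetical.

```python
import re


def clean_tweet(text, stop_words=frozenset()):
    """Strip links, keep letters/digits, lowercase, drop 'rt' and stop words."""
    text = re.sub(r'https?://\S+', ' ', text)  # drop hyperlinks before punctuation is removed
    text = re.sub(r'[^a-zA-Z0-9]', ' ', text)  # replace everything else with spaces
    text = text.lower()
    words = [w for w in text.split() if w != 'rt' and w not in stop_words]
    return ' '.join(words)
```

Removing links first matters: once punctuation is replaced by spaces, a URL such as `https://t.co/abc123` falls apart into separate tokens that no longer look like a link.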

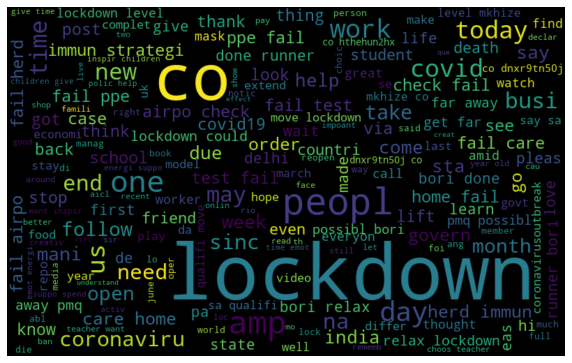

After these NLP operations, we visualize the most frequent words in the tweets with a word cloud and then look at their term frequencies.

Visualizing the Most Frequent Words with a Word Cloud

```python
# Visualization
# Word cloud of the cleaned tweets
all_words = ' '.join(corpus)
wordcloud = WordCloud(width=800, height=500, random_state=21, max_font_size=110).generate(all_words)
plt.figure(figsize=(10, 7))
plt.imshow(wordcloud, interpolation="bilinear")
plt.axis('off')
plt.show()
```
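`WordCloud.generate` tokenizes the text and counts the words itself. Computing the frequencies explicitly shows what the cloud is built from, and the resulting dictionary can be passed to `WordCloud.generate_from_frequencies` instead. In this sketch each count is divided by the largest count, so the most frequent word gets 1.0; the helper name is hypothetical.

```python
from collections import Counter


def relative_frequencies(corpus, top_n=None):
    """Map each word to its count divided by the count of the most frequent word."""
    counts = Counter(word for tweet in corpus for word in tweet.split())
    ranked = counts.most_common(top_n)  # all words when top_n is None
    if not ranked:
        return {}
    max_count = ranked[0][1]
    return {word: count / max_count for word, count in ranked}
```

For example, `WordCloud(width=800, height=500).generate_from_frequencies(relative_frequencies(corpus, top_n=200))` would draw only the 200 most frequent words.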

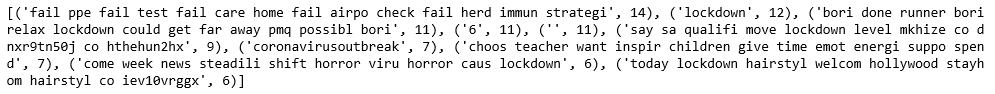

Analyzing the Most Frequent Words by Term Frequency

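```python
# Term frequency - TF-IDF
from sklearn.feature_extraction.text import TfidfVectorizer

tfidf_vectorizer = TfidfVectorizer(max_df=0.90, min_df=2, max_features=1000, stop_words='english')
# Sparse matrix with one row per tweet and one column per retained term
tfidf = tfidf_vectorizer.fit_transform(tweet_dataset['text'])

# Counting the most frequent words across all cleaned tweets
# (each tweet is split into words; counting whole strings would count repeated tweets instead)
word_counts = Counter(word for tweet in corpus for word in tweet.split())
most_occur = word_counts.most_common(10)
print(most_occur)
```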
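The `TfidfVectorizer` above weights each term by how often it appears in a tweet and how rare it is across tweets. With its default settings (`smooth_idf=True`, `norm='l2'`), the weight of term t in tweet d is tf(t, d) × (ln((1 + n) / (1 + df(t))) + 1), where n is the number of tweets and df(t) is the number of tweets containing t; each tweet's vector is then scaled to unit length. The standard-library sketch below reproduces that computation for whitespace-separated text. scikit-learn's own tokenizer differs (for example, it lowercases text and ignores single-character tokens), so exact values may not match; the function name is hypothetical.

```python
import math
from collections import Counter


def tfidf_weights(docs):
    """Return one {term: weight} dict per document, using smoothed idf and L2 norm."""
    tokenized = [doc.split() for doc in docs]
    n_docs = len(tokenized)
    # document frequency: in how many documents each term appears
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # smoothed inverse document frequency
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}
    rows = []
    for tokens in tokenized:
        tf = Counter(tokens)  # raw term counts in this document
        weights = {term: count * idf[term] for term, count in tf.items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        rows.append({t: w / norm for t, w in weights.items()} if norm else weights)
    return rows
```

A term that occurs in every tweet, such as the search keyword itself, gets the minimum idf of 1.0, so rarer terms in the same tweet receive larger weights.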

The output above lists the ten most frequent terms in the tweet data. This is how we can download tweets, clean them, convert them into a data frame, save them to a CSV file and, finally, analyze them.