With the advent of new research in the state of the art models, more powerful algorithms are being developed that focus on low code environments to make Machine learning accessible for everyone. Analogous to transfer learning models for image classification or face recognition in the field of computer vision, BERT, Roberta, XLNet are highly powerful models that solve problems using natural language processing. These models have dominated the world of NLP by making tasks like POS tagging, sentiment analysis, text summarization etc very easy yet effective.

This article is a demonstration of how simple and powerful transfer learning models are in the field of NLP. We will implement a text summarizer using BERT that can summarize large posts like blogs and news articles using just a few lines of code.

Text summarization

Text summarization is the concept of employing a machine to condense a document or a set of documents into brief paragraphs or statements using mathematical methods. NLP broadly classifies text summarization into 2 groups.

- Extractive text summarization: here, the model summarizes long documents and represents them in smaller simpler sentences.

- Abstractive text summarization: the model has to produce a summary based on a topic without prior content provided.

We will understand and implement the first category here.

Extractive text summarization with BERT(BERTSUM)

Unlike abstractive text summarization, extractive text summarization requires the model to “understand” the complete text, pick out the right keywords and assemble these keywords to make sense. There cannot be a loss of information either. So, how does BERT do all of this with such great speed and accuracy?

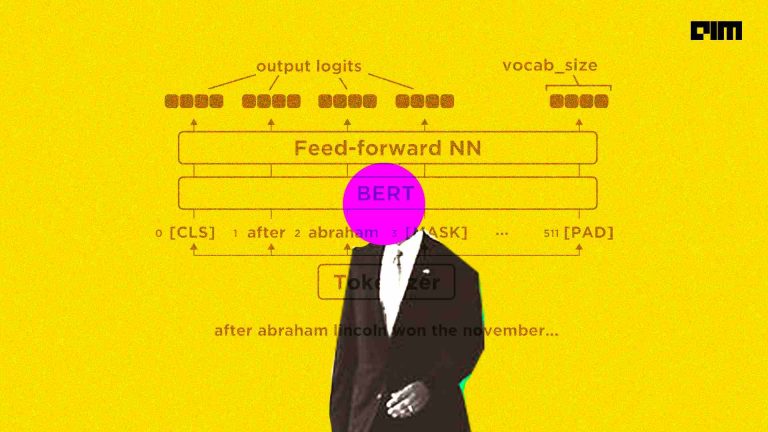

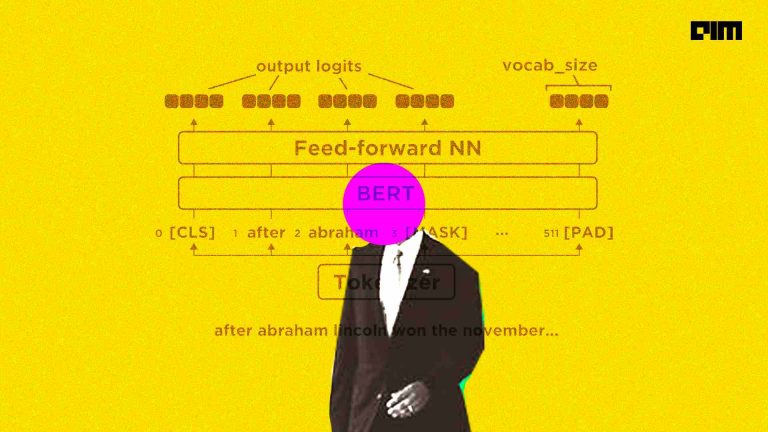

Looking at the image above, you may notice slight differences between the original model and the model used for summarization. The input format of BERTSUM is different when compared to the original model. Here, a [CLS] token is added at the start of each sentence in order to separate multiple sentences and to collect features of the preceding sentence. There is also a difference in segment embeddings. In the case of BERTSUM, each sentence is assigned an embedding of Ea or Eb depending on whether the sentence is even or odd. If the sequence is [s1,s2,s3] then the segment embeddings are [Ea, Eb, Ea]. This way, all sentences are embedded and sent into further layers.

BERTSUM assigns scores to each sentence that represents how much value that sentence adds to the overall document. So, [s1,s2,s3] is assigned [score1, score2, score3]. The sentences with the highest scores are then collected and rearranged to give the overall summary of the article.

Now that we have understood the working of BERTSUM, let us implement them to a custom article.

Loading the custom data

You can select a paragraph or two from any source of your choice. I have selected two paragraphs from the WHO report on coronavirus. To download the article click here. Once you have your data ready, go ahead and install the bert-sum library using the command

pip install bert-extractive-summarizer

After downloading this, let us read our file and summarize it.

get_corona_summary=open('corona.txt','r').read()

BERTSUM has an in-built module called summarizer that takes in our data, accesses it and provided the summary within seconds.

from summarizer import Summarizer model = Summarizer() result = model(get_corona_summary, min_length=20) summary = "".join(result) print(summary)

Thus, our two-paragraph information has been converted into a small brief summary.

Using a Web scaped article

Python makes data loading easy for us by providing a library called newspaper. This library is a web scraper that can extract all textual information from the URL provided. Newspaper can extract and detect languages seamlessly. If no language is specified, Newspaper will attempt to auto-detect a language.

To install this library, use the command

pip install newspaper3k

Once this is done, let us select an article that we need the summary for. I have chosen this article for the purpose of this project. Let us load our dataset.

from newspaper import fulltext import requests article_url="https://analyticsindiamag.com/is-common-sense-common-in-nlp-models/ " article = fulltext(requests.get(article_url).text) print(article)

from summarizer import Summarizer model = Summarizer() result = model(article, min_length=30,max_length=300) summary = "".join(result) print(summary)

Here is a one-paragraph summary of the contents in the article. You can choose to limit the size of the summary as per your needs. Here I have limited it to 300 words.

Conclusion

BERT is undoubtedly a breakthrough in the use of Machine Learning for Natural Language Processing. The fact that it’s approachable and allows fast fine-tuning will likely allow a wide range of practical applications in the future. The research in the field of NLP is trying to reach human-level every day. With models like this, there is a wide range of applications where it finds use. The ease in which these methods can be implemented makes it accessible to not only developers but even business analysts.