Nowadays, most deep learning models are highly optimized for a specific type of data. Architectures that work well on text usually cannot be reused as-is for computer vision or audio analysis. This level of specialization leads to models that excel at one task but cannot adapt to others. In contrast to such specialized models, this article discusses Perceiver IO, a general-purpose architecture designed to address a wide range of tasks with a single design. The main points discussed in this article are listed below.

Table of Contents

- What is Perceiver IO?

- Architecture of Perceiver IO

- Implementing Perceiver IO for Text Classification

Let’s start by understanding what Perceiver IO is.

What is Perceiver IO?

Perceiver is a Transformer-based architecture that can handle non-textual data such as images, audio, and video, as well as spatial data. Earlier influential systems such as BERT and GPT-3 are also built on Transformers. Perceiver uses an asymmetric attention mechanism to distill its inputs into a small latent bottleneck, which lets it learn from large amounts of heterogeneous data. On classification benchmarks, Perceiver matches or outperforms specialized models.
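To make the latent bottleneck concrete, here is a minimal single-head cross-attention sketch in NumPy. It uses assumed sizes and function names and is not DeepMind's implementation, which adds multiple heads, layer normalization, and an MLP. A fixed set of N latent vectors produces the queries, and the M input elements produce the keys and values. The output therefore always has N rows, however large M is.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(latents, inputs, w_q, w_k, w_v):
    """Single-head cross-attention: latents (N, D) attend over inputs (M, C)."""
    q = latents @ w_q                         # (N, d) queries come from the latents
    k = inputs @ w_k                          # (M, d) keys come from the inputs
    v = inputs @ w_v                          # (M, d) values come from the inputs
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (N, M): grows linearly with M
    return softmax(scores, axis=-1) @ v       # (N, d): independent of M

rng = np.random.default_rng(0)
N, D, C, d = 8, 16, 3, 16                     # illustrative sizes (assumed)
latents = rng.normal(size=(N, D))
w_q = rng.normal(size=(D, d))
w_k = rng.normal(size=(C, d))
w_v = rng.normal(size=(C, d))

for M in (100, 10_000):
    inputs = rng.normal(size=(M, C))
    out = cross_attend(latents, inputs, w_q, w_k, w_v)
    print(M, out.shape)                       # the latent output stays (8, 16)
```

The score matrix has shape (N, M). For a fixed number of latents, the cost of this step therefore grows linearly with the input size rather than quadratically.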

Perceiver has no modality-specific components. For example, it has no components dedicated to images, text, or audio. It can also handle several associated input streams of different types. It uses a small number of latent units to form an attention bottleneck through which all inputs must pass. One benefit is that this removes the quadratic scaling problem of earlier Transformers, whose self-attention cost grows with the square of the input length. Cross-attending from N latents to M inputs costs on the order of M × N rather than M². Previously, a specialized feature extractor was needed for each modality.
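The scaling difference can be checked with simple arithmetic. The sketch below counts attention scores (query-key dot products) for a 224×224 image treated as 50,176 input elements. The latent count of 512 and the 6 latent self-attention layers are illustrative assumptions, not values from this article.

```python
def attention_score_counts(num_inputs, num_latents, num_latent_layers):
    """Count query-key dot products for one full self-attention layer
    versus one Perceiver-style pass (one cross-attention + latent layers)."""
    full_self_attention = num_inputs ** 2                         # O(M^2) for a single layer
    cross_attention = num_inputs * num_latents                    # O(M*N), done once
    latent_self_attention = num_latent_layers * num_latents ** 2  # O(L*N^2), independent of M
    return full_self_attention, cross_attention + latent_self_attention

M = 224 * 224  # one input element per pixel of a 224x224 image: 50,176 elements
N = 512        # assumed number of latents
L = 6          # assumed number of latent self-attention layers

full, perceiver = attention_score_counts(M, N, L)
print(f"One self-attention layer over pixels: {full:,} scores")
print(f"Cross-attention + {L} latent layers:   {perceiver:,} scores")
```

The Perceiver-style count includes all six latent layers. Even so, it is roughly 92 times smaller than the count for a single full self-attention layer over the pixels.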

Perceiver IO extends this design by querying the model’s latent space in flexible ways, which lets it produce outputs of arbitrary size and semantics. It performs well on tasks with structured output spaces, such as natural language and visual understanding, and in multi-task settings. On the GLUE language benchmark, Perceiver IO matches a Transformer-based BERT baseline without requiring input tokenization, because it operates directly on raw UTF-8 bytes. At the time of publication, it also achieved state-of-the-art performance on Sintel optical flow estimation.

To produce each output, Perceiver IO attends to the latent array using an output query associated with that particular output. For example, to predict optical flow at a single pixel, the query could combine the pixel’s XY coordinates with an optical-flow task embedding. Decoding that query yields a single flow vector. This is a variant of the encoder-decoder architecture used in other work.
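The query construction for optical flow can be sketched as follows. Everything here is a hypothetical toy: random weights, a tiny 4×5 image, raw normalized XY coordinates, and a made-up task embedding vector (the paper uses richer position encodings). The sketch only shows the mechanics. It builds one query per pixel, cross-attends to the latent array, and projects each result to a 2D flow vector.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
H, W = 4, 5         # assumed tiny image: one output query per pixel
N, D = 8, 16        # assumed latent array size after encoding
TASK_DIM = 6        # assumed size of the task embedding

latents = rng.normal(size=(N, D))               # stand-in for the encoder's output
task_embedding = rng.normal(size=(TASK_DIM,))   # hypothetical "optical flow" task vector

# One query per pixel: normalized (x, y) coordinates + the shared task embedding
ys, xs = np.meshgrid(np.linspace(-1, 1, H), np.linspace(-1, 1, W), indexing="ij")
coords = np.stack([xs, ys], axis=-1).reshape(H * W, 2)            # (H*W, 2)
queries = np.concatenate([coords, np.tile(task_embedding, (H * W, 1))], axis=1)

# Random projections standing in for learned weights
w_q = rng.normal(size=(queries.shape[1], D))
w_k = rng.normal(size=(D, D))
w_v = rng.normal(size=(D, D))
w_out = rng.normal(size=(D, 2))

# Queries attend to the latents (keys/values), then map to (dx, dy)
scores = (queries @ w_q) @ (latents @ w_k).T / np.sqrt(D)   # (H*W, N)
decoded = softmax(scores) @ (latents @ w_v)                 # (H*W, D)
flow = (decoded @ w_out).reshape(H, W, 2)                   # one flow vector per pixel
print(flow.shape)
```

The number of outputs is set by the number of queries, not by the size of the latent array. The same latents could therefore be decoded at a different resolution simply by building a different set of queries.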

Architecture of Perceiver IO

The Perceiver IO model builds on the Perceiver architecture, which achieves cross-domain generality by assuming a simple 2D byte array as input. This array is a set of elements, each described by a feature vector. The elements could be pixels or patches in vision, characters or words in language, or some form of learned or unlearned embedding. The model then uses Transformer-style attention to encode information about the input array into a smaller number of latent feature vectors. It processes these latents iteratively and finally aggregates them down to a category label.
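The following NumPy sketch shows how different inputs can be flattened into the (num_elements, num_channels) array that Perceiver expects. The helper names, the 4×4 patch size, and the random byte-embedding table are illustrative assumptions.

```python
import numpy as np

def pixels_to_array(image):
    """(H, W, C) image -> (H*W, C): one element per pixel, C features each."""
    h, w, c = image.shape
    return image.reshape(h * w, c)

def patches_to_array(image, patch):
    """(H, W, C) image -> (num_patches, patch*patch*C): one element per square patch."""
    h, w, c = image.shape
    hp, wp = h // patch, w // patch
    cropped = image[: hp * patch, : wp * patch]   # drop incomplete edge patches
    blocks = cropped.reshape(hp, patch, wp, patch, c).transpose(0, 2, 1, 3, 4)
    return blocks.reshape(hp * wp, patch * patch * c)

def text_to_array(text, byte_embeddings):
    """Text -> (num_bytes, D): one element per UTF-8 byte, looked up in a (256, D) table."""
    byte_ids = np.frombuffer(text.encode("utf-8"), dtype=np.uint8)
    return byte_embeddings[byte_ids]

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
print(pixels_to_array(image).shape)        # (1024, 3)
print(patches_to_array(image, 4).shape)    # (64, 48)
print(text_to_array("Perceiver", rng.normal(size=(256, 8))).shape)  # (9, 8)
```

Whatever the modality, the model only ever sees a 2D array. What changes is how many elements there are and how many features each one carries.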

Hugging Face Transformers’ PerceiverModel class serves as the foundation for all Perceiver variants. When initializing a PerceiverModel, three additional components can optionally be specified: a preprocessor, a decoder, and a postprocessor.

An optional preprocessor first converts the inputs into an array the encoder can consume. These inputs may be of any modality, or a mix of modalities. The preprocessed inputs are then used in a cross-attention operation with the latent variables of the Perceiver encoder.

Perceiver IO is a domain-agnostic architecture that maps arbitrary input arrays to arbitrary output arrays. Most of the computation takes place in a latent space that is typically smaller than the inputs and outputs. This keeps the model computationally tractable even when the inputs and outputs are very large.

In this design, the latent variables produce the queries (Q), while the preprocessed inputs produce the keys and values (KV). The Perceiver encoder then updates the latent embeddings with a (repeatable) block of self-attention layers. Finally, the encoder outputs a tensor of shape (batch_size, num_latents, d_latents) containing the latents’ final hidden states. An optional decoder can then turn these final hidden states into something more useful, such as classification logits. This is done with a cross-attention operation in which trainable embeddings produce the queries (Q) and the latents produce the keys and values (KV).
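The composition described above can be tried out with Hugging Face Transformers. The sketch below is modeled on the usage example in the Transformers documentation for PerceiverModel, and exact module paths may differ between library versions. It combines a text preprocessor with a classification decoder. The tiny configuration values are assumptions chosen so the sketch runs quickly on a CPU, and the model is randomly initialized rather than pretrained.

```python
import torch
from transformers import PerceiverConfig, PerceiverModel
from transformers.models.perceiver.modeling_perceiver import (
    PerceiverClassificationDecoder,
    PerceiverTextPreprocessor,
)

# Deliberately tiny sizes (assumed), far smaller than deepmind/language-perceiver
config = PerceiverConfig(
    num_latents=8,
    d_latents=32,
    d_model=16,
    num_blocks=1,
    num_self_attends_per_block=2,
    num_self_attention_heads=4,
    num_cross_attention_heads=4,
    vocab_size=262,
    max_position_embeddings=64,
    num_labels=2,
)

# Preprocessor: token embeddings + position embeddings -> (batch, seq_len, d_model)
preprocessor = PerceiverTextPreprocessor(config)

# Decoder: one trainable query cross-attends to the latents -> (batch, num_labels)
decoder = PerceiverClassificationDecoder(
    config,
    num_channels=config.d_latents,
    trainable_position_encoding_kwargs=dict(num_channels=config.d_latents, index_dims=1),
    use_query_residual=True,
)

model = PerceiverModel(config, input_preprocessor=preprocessor, decoder=decoder)
model.eval()

input_ids = torch.randint(0, config.vocab_size, (2, 20))  # random IDs, batch of 2
with torch.no_grad():
    outputs = model(inputs=input_ids)

print(outputs.last_hidden_state.shape)  # latents: (batch_size, num_latents, d_latents)
print(outputs.logits.shape)             # decoder output: (batch_size, num_labels)
```

The encoder output has shape (batch_size, num_latents, d_latents), which matches the description above. The decoder’s single trainable query then reduces it to one logit per class.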

Implementing Perceiver IO for Text Classification

In this section, we will see how Perceiver IO can be used for text classification. First, let’s install Hugging Face’s transformers and datasets libraries.

```
!pip install -q git+https://github.com/huggingface/transformers.git
!pip install -q datasets
```

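Next, we load the data with the datasets library. We use the IMDb movie reviews dataset, but only a small subset of it: the first and last 100 training reviews and the first and last 5 test reviews. The IMDb splits are ordered by label, so taking examples from both ends yields both negative and positive reviews. After loading the dataset, we also build label mappings that will come in handy during inference.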

from datasets import load_dataset

# load the dataset

train_ds, test_ds = load_dataset("imdb", split=['train[:100]+train[-100:]', 'test[:5]+test[-5:]'])

# making the dataset handy

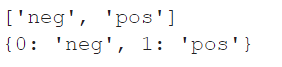

labels = train_ds.features['label'].names

print(labels)

id2label = {idx:label for idx, label in enumerate(labels)}

label2id = {label:idx for idx, label in enumerate(labels)}

print(id2label)

This prints `['neg', 'pos']` followed by `{0: 'neg', 1: 'pos'}`.

In this step, we tokenize both the train and test datasets with PerceiverTokenizer, padding and truncating every review to the tokenizer’s maximum length.

```python
# Tokenization
from transformers import PerceiverTokenizer

tokenizer = PerceiverTokenizer.from_pretrained("deepmind/language-perceiver")

train_ds = train_ds.map(lambda examples: tokenizer(examples['text'], padding="max_length", truncation=True),
                        batched=True)
test_ds = test_ds.map(lambda examples: tokenizer(examples['text'], padding="max_length", truncation=True),
                      batched=True)
```
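PerceiverTokenizer works on raw UTF-8 bytes rather than a learned subword vocabulary. The pure-Python function below is a hypothetical re-implementation of that scheme, written only to show what the tokenizer produces. The special-token IDs ([PAD]=0, [CLS]=4, [SEP]=5, six special tokens in total) are our assumption about the checkpoint’s vocabulary, so compare against `tokenizer(...)` before relying on them.

```python
# Hypothetical sketch of PerceiverTokenizer's byte-level scheme.
# Assumed special-token IDs: [PAD]=0, [BOS]=1, [EOS]=2, [MASK]=3, [CLS]=4, [SEP]=5.
NUM_SPECIAL_TOKENS = 6
PAD_ID, CLS_ID, SEP_ID = 0, 4, 5

def byte_tokenize(text, max_length):
    """Return (input_ids, attention_mask), truncated/padded to max_length."""
    # Each UTF-8 byte becomes one token, shifted past the special-token IDs
    byte_ids = [b + NUM_SPECIAL_TOKENS for b in text.encode("utf-8")]
    # Truncate so that [CLS] and [SEP] still fit
    byte_ids = byte_ids[: max_length - 2]
    input_ids = [CLS_ID] + byte_ids + [SEP_ID]
    # Real tokens get mask 1, padding positions get mask 0
    num_pad = max_length - len(input_ids)
    attention_mask = [1] * len(input_ids) + [0] * num_pad
    input_ids = input_ids + [PAD_ID] * num_pad
    return input_ids, attention_mask

print(byte_tokenize("hi", max_length=6))
```

Under these assumptions, `byte_tokenize("hi", max_length=6)` gives input IDs `[4, 110, 111, 5, 0, 0]` and attention mask `[1, 1, 1, 1, 0, 0]`. A non-ASCII character such as "é" occupies two byte positions. This is one reason byte-level sequences are longer than subword-tokenized ones for the same text.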

We will use PyTorch for training, so we set the dataset format to PyTorch tensors and wrap both splits in DataLoaders.

```python
# Make the datasets compatible with PyTorch
from torch.utils.data import DataLoader

train_ds.set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])
test_ds.set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])

train_dataloader = DataLoader(train_ds, batch_size=4, shuffle=True)
test_dataloader = DataLoader(test_ds, batch_size=4)
```

Next, we will define and train the model.

```python
import torch
from torch.optim import AdamW  # transformers.AdamW is deprecated; use PyTorch's optimizer
from tqdm.notebook import tqdm
from sklearn.metrics import accuracy_score
from transformers import PerceiverForSequenceClassification

# Define the model
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = PerceiverForSequenceClassification.from_pretrained("deepmind/language-perceiver",
                                                           num_labels=2,
                                                           id2label=id2label,
                                                           label2id=label2id)
model.to(device)

# Train the model
optimizer = AdamW(model.parameters(), lr=5e-5)
model.train()

for epoch in range(20):  # loop over the dataset multiple times
    print("Epoch:", epoch)
    for batch in tqdm(train_dataloader):
        # get the inputs
        inputs = batch["input_ids"].to(device)
        attention_mask = batch["attention_mask"].to(device)
        labels = batch["label"].to(device)

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = model(inputs=inputs, attention_mask=attention_mask, labels=labels)
        loss = outputs.loss
        loss.backward()
        optimizer.step()

        # evaluate on the current training batch
        predictions = outputs.logits.argmax(-1).cpu().detach().numpy()
        accuracy = accuracy_score(y_true=batch["label"].numpy(), y_pred=predictions)
        print(f"Loss: {loss.item()}, Accuracy: {accuracy}")
```
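The training loop reports accuracy only on each training batch, and `test_dataloader` is never used. A small helper like the one below measures accuracy on a held-out DataLoader without updating the weights. It is our own addition, not part of the original walkthrough. It assumes the batch keys used in this walkthrough (`input_ids`, `attention_mask`, `label`) and a model whose output has a `logits` attribute, as PerceiverForSequenceClassification’s does.

```python
import torch

def evaluate(model, dataloader, device):
    """Return the accuracy of `model` over all batches in `dataloader` (no weight updates)."""
    was_training = model.training
    model.eval()  # disable dropout so predictions are deterministic
    correct, total = 0, 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for batch in dataloader:
            inputs = batch["input_ids"].to(device)
            attention_mask = batch["attention_mask"].to(device)
            labels = batch["label"].to(device)
            logits = model(inputs=inputs, attention_mask=attention_mask).logits
            correct += (logits.argmax(-1) == labels).sum().item()
            total += labels.size(0)
    model.train(was_training)  # restore whatever mode the model was in before
    return correct / total
```

After training, `evaluate(model, test_dataloader, device)` returns the fraction of the 10 test reviews that are classified correctly.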

Now, let’s run inference on a new review.

```python
text = "I loved this epic movie, the multiverse concept is mind-blowing and a bit confusing."

input_ids = tokenizer(text, return_tensors="pt").input_ids

# Forward pass in evaluation mode (dropout off) without tracking gradients
model.eval()
with torch.no_grad():
    outputs = model(inputs=input_ids.to(device))

logits = outputs.logits
predicted_class_idx = logits.argmax(-1).item()
print("Predicted:", model.config.id2label[predicted_class_idx])
```


Final Words

Perceiver IO is an architecture that can handle general-purpose inputs and outputs while scaling linearly with both input and output size. According to the Perceiver IO paper, it achieves strong results across a wide range of settings. We have only applied it to text here, but it can also be used for audio, video, and image data. This makes it a promising candidate for a general-purpose neural network architecture.