Batch normalization is a widely used technique that normalizes the inputs to each layer, has a mild regularizing effect, stabilizes backpropagation, and helps most models converge better. In this article, we discuss the batch normalization technique in detail. We will see how effective it is by comparing two models, one without batch normalization and one with it, on an image classification task.

Topics we cover in this article

- How Does Batch Normalization work

- Use of Batch Normalization

- Defining and Training a Model without Batch Normalization

- Defining and Training a Model with Batch Normalization

How Does Batch Normalization work?

Batch normalization is a layer that we add between the layers of a neural network. It takes the output of the previous layer and normalizes it before passing it to the next layer, which stabilizes training. It also keeps the distribution of each layer's inputs roughly constant as training proceeds.

The problem it addresses is known as internal covariate shift. While a neural network is training, the parameters of the earlier layers keep changing, so the distribution of the inputs to each later layer keeps changing too, and the model trains more slowly. To keep this distribution stable, batch normalization normalizes the outputs of a layer over each mini-batch to zero mean and unit standard deviation (μ=0, σ=1). With this technique the model typically trains faster, and it often reaches higher accuracy than the same model without batch normalization.
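As a rough illustration of the normalization step described above, here is a minimal pure-Python sketch for a single feature across one mini-batch. The function name `batch_norm_forward` and the sample values are assumptions made for this example. The `gamma`, `beta`, and `epsilon` arguments mirror the scale, center, and epsilon parameters of the Keras layer, which operates on whole tensors rather than on a list.

```python
import math


def batch_norm_forward(values, gamma=1.0, beta=0.0, epsilon=1e-3):
    """Normalize one feature across a mini-batch, then scale and shift it."""
    n = len(values)
    mean = sum(values) / n
    # Biased (population) variance of the mini-batch
    variance = sum((v - mean) ** 2 for v in values) / n
    # epsilon keeps the division stable when the variance is close to zero
    normalized = [(v - mean) / math.sqrt(variance + epsilon) for v in values]
    # gamma and beta are learned during training; here they are fixed defaults
    return [gamma * x_hat + beta for x_hat in normalized]


# Made-up activations of one neuron for a mini-batch of four samples
activations = [2.0, 4.0, 6.0, 8.0]
outputs = batch_norm_forward(activations)

out_mean = sum(outputs) / len(outputs)
out_var = sum((o - out_mean) ** 2 for o in outputs) / len(outputs)
print(f"mean={out_mean:.4f}, variance={out_var:.4f}")
```

The output mean is approximately 0 and the variance is slightly below 1, because `epsilon` is added to the variance before taking the square root.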

When to use Batch Normalization?

We can use Batch Normalization in Convolutional Neural Networks, Recurrent Neural Networks, and Artificial Neural Networks. In practice, we add Batch Normalization either before or after the activation function of a layer. The original formulation places it before the activation, but many practitioners report good results when placing it after the activation layer.
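The two placements can be sketched in Keras as follows. This is only an illustration under stated assumptions: the helper names `block_bn_before_activation` and `block_bn_after_activation`, the filter count, and the 28×28×1 input shape are chosen for this example and are not part of the models built later in the article.

```python
from tensorflow.keras import layers, models


def block_bn_before_activation(filters):
    """Conv -> BatchNorm -> ReLU: normalization is applied before the activation."""
    return [
        # The bias is dropped because the BatchNormalization beta offset plays the same role
        layers.Conv2D(filters, kernel_size=(3, 3), use_bias=False),
        layers.BatchNormalization(),
        layers.Activation("relu"),
    ]


def block_bn_after_activation(filters):
    """Conv + ReLU -> BatchNorm: normalization is applied after the activation."""
    return [
        layers.Conv2D(filters, kernel_size=(3, 3), activation="relu"),
        layers.BatchNormalization(),
    ]


# Assumed input shape for illustration: 28x28 grayscale images
bn_before = models.Sequential([layers.Input(shape=(28, 28, 1)), *block_bn_before_activation(32)])
bn_after = models.Sequential([layers.Input(shape=(28, 28, 1)), *block_bn_after_activation(32)])

print([type(layer).__name__ for layer in bn_before.layers])
print([type(layer).__name__ for layer in bn_after.layers])
```

Which ordering works better tends to depend on the model and the data, so it is worth trying both.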

Batch Normalization lets us use higher learning rates, which speeds up training. Because the mean and variance are recomputed for every mini-batch, the activations stay in a well-scaled range, which makes learning easier and often improves the accuracy of the model.

Batch Normalization also acts as a regularizer, although it does not work the same way as L1, L2, or dropout regularization. By reducing internal covariate shift and the instability in the distributions of layer activations in deeper networks, it can reduce overfitting and help the model generalize to unseen data. So Batch Normalization can also serve as a regularization technique.

Implementation of Batch Normalization in Keras

The BatchNormalization layer comes with default parameters. We can change the value of any parameter to customize the layer.

    keras.layers.BatchNormalization(
        axis=-1,
        momentum=0.99,
        epsilon=0.001,
        center=True,
        scale=True,
        beta_initializer='zeros',
        gamma_initializer='ones',
        moving_mean_initializer='zeros',
        moving_variance_initializer='ones',
        beta_regularizer=None,
        gamma_regularizer=None,
        beta_constraint=None,
        gamma_constraint=None
    )
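To give a feel for what the `momentum` parameter does, the sketch below applies the moving-average update that the Keras documentation describes for the layer's moving mean during training. The helper name `update_moving_average` and the batch means are assumptions made for this illustration; at inference time the layer normalizes with these moving statistics instead of the statistics of the current batch.

```python
def update_moving_average(moving_value, batch_value, momentum=0.99):
    """One training-step update of a moving statistic (moving mean or moving variance)."""
    return momentum * moving_value + (1.0 - momentum) * batch_value


moving_mean = 0.0  # matches moving_mean_initializer='zeros'
batch_means = [2.0, 2.0, 2.0]  # made-up batch means, for illustration only

for step, batch_mean in enumerate(batch_means, start=1):
    moving_mean = update_moving_average(moving_mean, batch_mean)
    print(f"step {step}: moving_mean={moving_mean:.6f}")
```

With the default momentum of 0.99 the moving mean approaches the batch mean slowly, so short training runs may benefit from a lower momentum.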

In the code snippet below, we import the required libraries.

    # Import everything from tensorflow.keras to avoid mixing it with the standalone keras package
    from tensorflow.keras.datasets import mnist
    import tensorflow
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Flatten
    from tensorflow.keras.layers import Conv2D, MaxPooling2D
    from tensorflow.keras.layers import BatchNormalization

In the code snippet below, we set the hyperparameters.

    # Model configuration
    batch_size = 250
    no_epochs = 25
    no_classes = 10
    validation_split = 0.2
    verbosity = 1

Data Pre-Processing

Now we will work on defining a deep learning model for classifying the MNIST dataset. Later, we will add Batch Normalization between the layers of this model. The MNIST dataset used here contains images of handwritten digits in 10 classes.

We load the data, which comes already split into train and test sets, and preprocess it.

    # Load MNIST dataset
    (input_train, target_train), (input_test, target_test) = mnist.load_data()

    # Shape of the input sets
    input_train_shape = input_train.shape
    input_test_shape = input_test.shape

    # Keras layer input shape
    input_shape = (input_train_shape[1], input_train_shape[2], 1)

    # Reshape the data to include a channels dimension
    input_train = input_train.reshape(input_train_shape[0], input_train_shape[1], input_train_shape[2], 1)
    input_test = input_test.reshape(input_test_shape[0], input_test_shape[1], input_test_shape[2], 1)

    # Parse numbers as floats
    input_train = input_train.astype('float32')
    input_test = input_test.astype('float32')

    # Scale pixel values to the range [0, 1]
    input_train = input_train / 255
    input_test = input_test / 255

Building a Model Without Batch Normalization

Here we are going to define the deep learning model without adding the batch normalization between the layers.

    model = Sequential()
    model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=input_shape))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Flatten())
    model.add(Dense(256, activation='relu'))
    model.add(Dense(no_classes, activation='softmax'))

    # Compile the model
    model.compile(loss=tensorflow.keras.losses.sparse_categorical_crossentropy,
                  optimizer=tensorflow.keras.optimizers.Adam(),
                  metrics=['accuracy'])

    # Fit data to model
    history = model.fit(input_train, target_train,
                        batch_size=batch_size,
                        epochs=no_epochs,
                        verbose=verbosity,
                        validation_split=validation_split)

    # Generate generalization metrics
    score = model.evaluate(input_test, target_test, verbose=0)
    print(f'Test loss: {score[0]} / Test accuracy: {score[1]}')

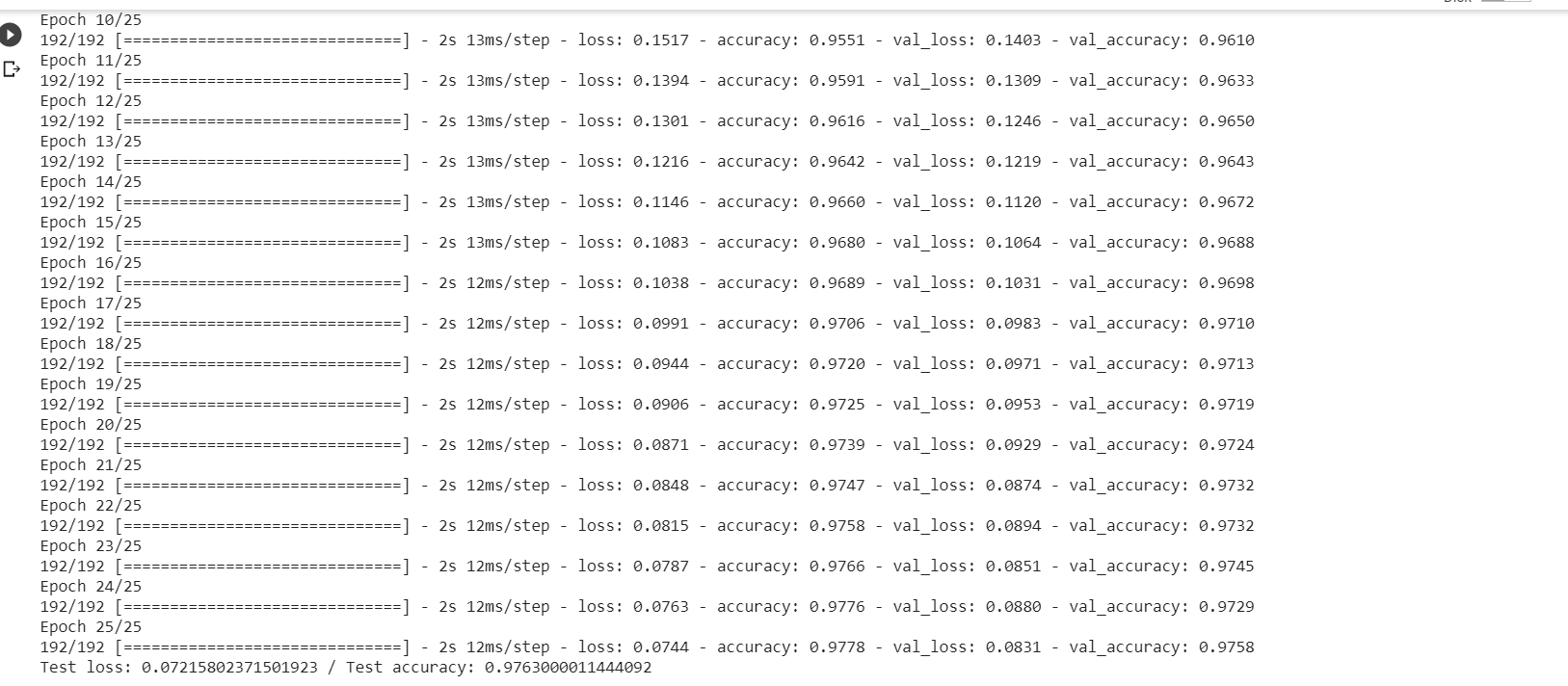

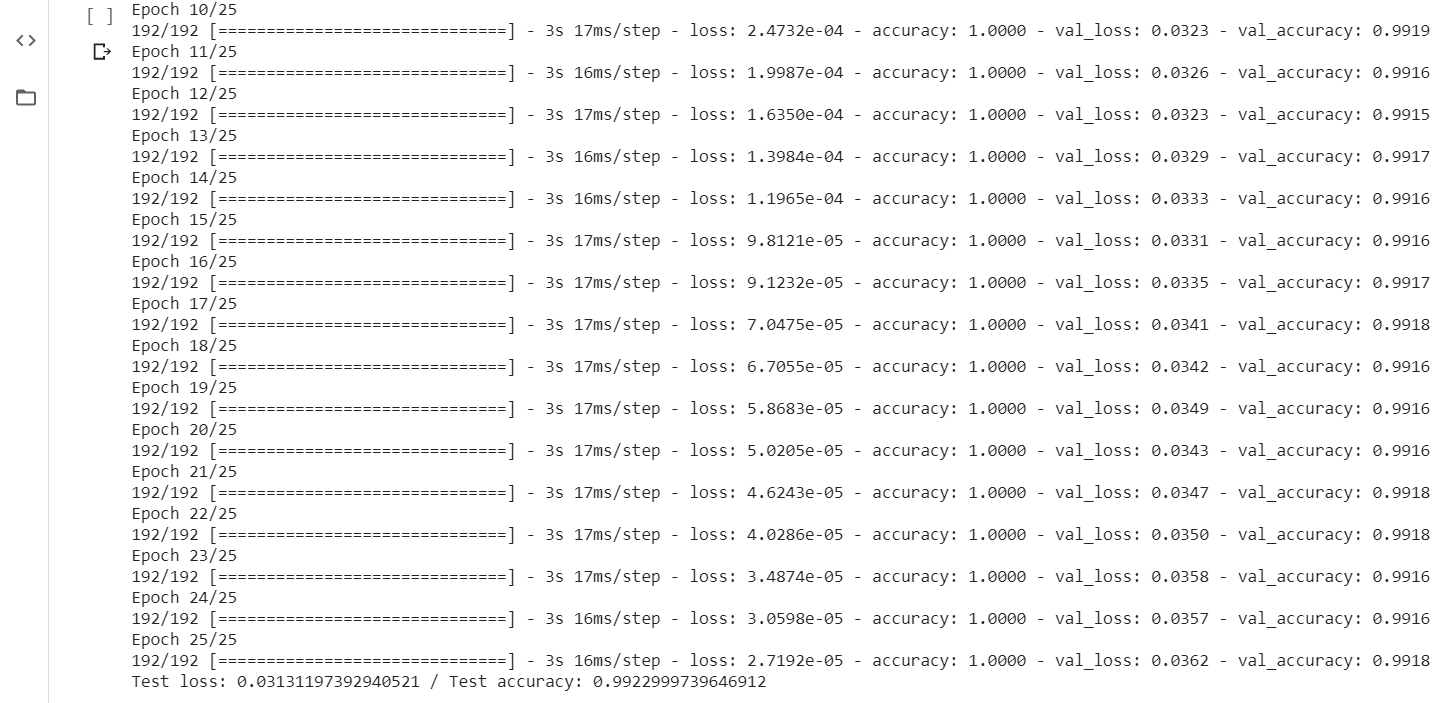

As the training output above shows, the model achieved an accuracy of 0.97 during training when batch normalization is not added.

Building a Model With Batch Normalization

Now we will define the same model again, this time adding batch normalization between the layers.

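    model = Sequential()
    model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=input_shape))
    model.add(BatchNormalization())
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(BatchNormalization())
    model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))
    model.add(BatchNormalization())
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(BatchNormalization())
    model.add(Flatten())
    model.add(Dense(256, activation='relu'))
    model.add(BatchNormalization())
    model.add(Dense(no_classes, activation='softmax'))

    # Compile, fit, and evaluate with the same settings as the model without batch normalization
    model.compile(loss=tensorflow.keras.losses.sparse_categorical_crossentropy,
                  optimizer=tensorflow.keras.optimizers.Adam(),
                  metrics=['accuracy'])
    history = model.fit(input_train, target_train,
                        batch_size=batch_size,
                        epochs=no_epochs,
                        verbose=verbosity,
                        validation_split=validation_split)
    score = model.evaluate(input_test, target_test, verbose=0)
    print(f'Test loss: {score[0]} / Test accuracy: {score[1]}')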

In the training epochs for this model, the batch normalization technique brings the accuracy to 0.992. Compared with the previous model without batch normalization, the accuracy has increased by about 2 percentage points.

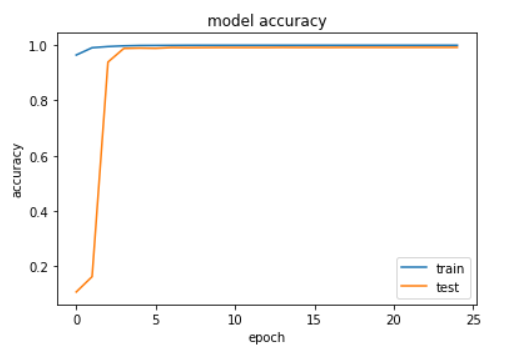
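The size of this improvement can be checked with a few lines of arithmetic. The snippet below uses only the two accuracies reported in this article; the variable names are chosen for illustration.

```python
acc_without_bn = 0.97   # accuracy reported for the model without batch normalization
acc_with_bn = 0.992     # accuracy reported for the model with batch normalization

# Absolute gain in percentage points
point_gain = (acc_with_bn - acc_without_bn) * 100

# Relative reduction of the error rate (1 - accuracy)
error_without_bn = 1 - acc_without_bn
error_with_bn = 1 - acc_with_bn
relative_error_reduction = (error_without_bn - error_with_bn) / error_without_bn

print(f"Gain: {point_gain:.1f} percentage points")
print(f"Error rate reduced by {relative_error_reduction:.0%}")
```

Seen this way, a gain of roughly 2 percentage points corresponds to removing most of the remaining errors, which is why a small absolute difference at this accuracy level can still matter.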

Now, we will compare the accuracy plots of both training runs.

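    import matplotlib.pyplot as plt

    # Run this after each training run; history holds the result of the most recent model.fit call
    plt.plot(history.history['accuracy'])
    plt.plot(history.history['val_accuracy'])
    plt.title('model accuracy')
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    # The second curve comes from validation_split, so it is validation data rather than the test set
    plt.legend(['train', 'validation'], loc='lower right')
    plt.show()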

Accuracy plots: without Batch Normalization and with Batch Normalization.

As we can see in the above training plots, the accuracy is better when we add batch normalization. The convergence is also better compared to the model without batch normalization.
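One way to make the convergence comparison more concrete is to check at which epoch each run first reaches a given validation accuracy. The sketch below is a hypothetical helper, not part of the original experiment. The two example curves are placeholder numbers used only to show how the function behaves; in practice you would pass `history.history['val_accuracy']` from each training run.

```python
def first_epoch_reaching(accuracies, threshold):
    """Return the 1-based epoch at which accuracy first reaches the threshold, or None."""
    for epoch, accuracy in enumerate(accuracies, start=1):
        if accuracy >= threshold:
            return epoch
    return None


# Placeholder curves for illustration only; these are NOT results from this article
example_without_bn = [0.90, 0.94, 0.96, 0.97]
example_with_bn = [0.95, 0.98, 0.99, 0.99]

threshold = 0.96
print("without BN:", first_epoch_reaching(example_without_bn, threshold))
print("with BN:", first_epoch_reaching(example_with_bn, threshold))
```

A lower epoch number for the same threshold indicates faster convergence, and `None` means the run never reached that accuracy.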

Conclusion

By analyzing the training performance and accuracy plots of both approaches, without and with batch normalization, we can conclude that adding batch normalization between the layers of the network improves the accuracy of the model and helps reduce overfitting as well. So batch normalization should always be considered when working with deep learning models.