A loss function is a way of evaluating how well an algorithm models your dataset. Many people are confused about the difference between a loss function and a cost function. Following the common convention, we use the term loss function for the error on a single training example and cost function for the error averaged over the entire training dataset. We train a model by minimizing this error with optimization techniques such as Gradient Descent.
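As a minimal sketch of how an optimizer reduces the loss, the snippet below fits a single weight `w` in the model `y_hat = w * x` with plain gradient descent on a mean squared error objective. The toy data, learning rate, and number of steps are illustrative assumptions, not values from this article: the per-example squared errors are averaged into one dataset-level number, and each step moves `w` against the gradient of that average.

```python
import numpy as np

# Hypothetical toy data: y is exactly 2 * x, so the best weight is 2.0
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 6.0, 8.0])

w = 0.0               # single model parameter; the model is y_hat = w * x
learning_rate = 0.01  # assumed step size

for step in range(200):
    y_hat = w * x
    per_example = (y_hat - y) ** 2        # one squared error per training example
    dataset_level = per_example.mean()    # averaged over the whole dataset
    grad = np.mean(2 * (y_hat - y) * x)   # derivative of dataset_level with respect to w
    w -= learning_rate * grad             # step against the gradient

print(round(w, 4))  # prints 2.0
```

Because the toy targets are exactly `2 * x`, `w` converges to 2.0; with a learning rate that is too large, the same loop would diverge instead of converging.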

Loss functions fall into two broad categories:

- Regression loss

- Classification loss

So how do we choose which loss function to use? It depends on the model's output tensor. In regression problems the output is continuous, whereas in classification problems the output is usually a set of class probabilities (or class scores). We choose the loss function to match the output variable, and some models combine several loss functions when they produce more than one kind of output.

In this article, we will discuss the following loss functions.

- Mean Square Error

- Mean Absolute Error

- Cross-entropy

- Hinge loss

With the implementations shown below, these loss functions can be used to measure how far a model's predictions are from the true values, provided the loss matches the type of output the model produces.

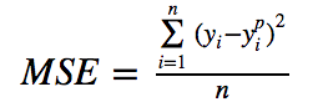

Mean Square Error (MSE) Loss

MSE is one of the most popular loss functions for regression problems. It measures the average of the squared errors: for each example it computes the difference between the actual value and the predicted value, squares it, and then takes the mean over all examples. It is easy to understand and implement.

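Because the errors are squared, large errors are penalized much more heavily than small ones. A large MSE indicates that the predictions are, on average, far from the actual values; a small MSE indicates that they are close to them.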
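Written as a formula, with $y_i$ the actual value, $\hat{y}_i$ the predicted value, and $n$ the number of examples, MSE is as follows. Plugging in the arrays from the Python implementation that follows (treating `var1` as the actual values and `var2` as the predictions) gives 9:

```latex
\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2
= \frac{(1-4)^2 + (2-5)^2 + (3-6)^2}{3}
= \frac{9 + 9 + 9}{3} = 9
```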

Implementation in Python

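```python
import numpy as np

var1 = np.array([1, 2, 3])  # actual values
var2 = np.array([4, 5, 6])  # predicted values
sub = np.subtract(var1, var2)  # element-wise differences: [-3, -3, -3]
square_var = np.square(sub)    # squared differences: [9, 9, 9]
mse = square_var.mean()        # average of the squared differences
print(mse)  # Output: 9.0
```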

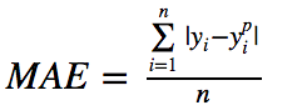

Mean Absolute Error (MAE) Loss

MAE is also a regression loss. It calculates the average of the absolute differences between the actual and predicted values. MAE is a common choice when the data contains outliers, that is, data points that lie unusually far above or below the rest of the data. Because the errors are not squared, outliers have a much smaller effect on MAE than on MSE, although they still contribute to the loss.
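The corresponding formula uses the same notation ($y_i$ actual, $\hat{y}_i$ predicted, $n$ examples). The absolute value is applied to each difference before averaging, so positive and negative errors cannot cancel each other out; the second part uses the values from the Python implementation that follows:

```latex
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|
= \frac{|1-4| + |2-5| + |3-6| + |4-7|}{4} = \frac{12}{4} = 3
```

For comparison, a single error of 10 adds 10 to the MAE sum but 100 to the MSE sum, which is why MAE is less sensitive to outliers.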

Implementation in Python

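```python
import numpy as np

Y_true = [1, 2, 3, 4]
Y_pred = [4, 5, 6, 7]
# Take the absolute value of each difference first, then average;
# averaging first and taking abs() afterwards would let positive and negative errors cancel.
MAE = np.abs(np.subtract(Y_true, Y_pred)).mean()
print(MAE)  # Output: 3.0
```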

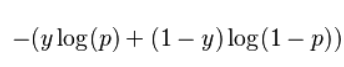
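To see the outlier behaviour described above in numbers, here is a small comparison sketch. The helper names `mse` and `mae` and the data are hypothetical: the predictions miss every target by 1, and then one target is replaced by an outlier while the predictions stay the same.

```python
import numpy as np

def mse(y_true, y_pred):
    # Mean of the squared differences
    return np.mean(np.square(np.subtract(y_true, y_pred)))

def mae(y_true, y_pred):
    # Mean of the absolute differences
    return np.mean(np.abs(np.subtract(y_true, y_pred)))

# Hypothetical data: every prediction is off by exactly 1
y_true = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y_pred = np.array([2.0, 3.0, 4.0, 5.0, 6.0])

# Same targets, but the last one is replaced by an outlier
y_true_outlier = np.array([1.0, 2.0, 3.0, 4.0, 50.0])

print(mse(y_true, y_pred), mae(y_true, y_pred))                   # both 1.0
print(mse(y_true_outlier, y_pred), mae(y_true_outlier, y_pred))   # 388.0 vs 9.6
```

The single outlier (an error of 44) raises MSE from 1.0 to 388.0 but MAE only from 1.0 to 9.6: MAE is affected, just far less than MSE.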

Cross-entropy Loss

Cross-entropy is the most widely used loss function for classification problems. It measures the performance of a classification model whose output is a probability between 0 and 1. The loss is close to 0 when the model assigns a probability near 1 to the correct class, and it grows without bound as that probability approaches 0. In a multi-class classification problem, we compute a separate term for each class and sum them. When the output is a probability distribution over classes, cross-entropy is typically paired with a softmax activation in the output layer.

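For binary classification, the cross-entropy is $L = -\left[y\log(p) + (1-y)\log(1-p)\right]$, where $y \in \{0, 1\}$ is the true label and $p$ is the predicted probability that $y = 1$. This is the negative log-likelihood of the label: if $y = 0$, the term $y\log(p)$ becomes 0, and if $y = 1$, the term $(1-y)\log(1-p)$ becomes 0, so only one term contributes for each example.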
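The binary form of cross-entropy can be sketched in NumPy as follows. The helper name `binary_cross_entropy` and the clipping constant `eps` are our own assumptions: clipping keeps `log()` finite when a prediction is exactly 0 or 1. This sketch uses the natural logarithm; using `log2` instead only changes the unit from nats to bits.

```python
import numpy as np

def binary_cross_entropy(y, p, eps=1e-12):
    # y is the true label (0 or 1); p is the predicted probability that y = 1
    p = np.clip(p, eps, 1 - eps)  # avoid log(0)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

print(binary_cross_entropy(1, 0.9))  # confident and correct: small loss (about 0.105)
print(binary_cross_entropy(1, 0.1))  # confident and wrong: large loss (about 2.303)
print(binary_cross_entropy(0, 0.1))  # y = 0, so only the log(1 - p) term remains
```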

Cross-entropy is used in multi-class classification problems such as image classification and object detection. For example, the ImageNet classification dataset has 1000 classes, so for each image the loss is computed over 1000 predicted class probabilities.
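A minimal NumPy sketch of cross-entropy paired with a softmax output layer. The function names, logits, and class index are illustrative assumptions rather than any library's API. Targets are one-hot encoded, so the per-class sum reduces to the negative log of the probability assigned to the true class. The last part mimics a 1000-class, ImageNet-sized output where the model has no preference among classes; its loss is ln(1000) ≈ 6.908 (natural log), a handy baseline when checking the starting loss of a freshly initialized classifier.

```python
import numpy as np

def softmax(logits):
    # Subtracting the max leaves the result unchanged but avoids overflow in exp()
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / exps.sum()

def categorical_cross_entropy(one_hot_target, probs):
    # One term per class; with a one-hot target only the true class's term is non-zero
    per_class = -one_hot_target * np.log(probs)
    return per_class.sum()

# Hypothetical logits from the last layer of a 3-class model
probs = softmax(np.array([2.0, 1.0, 0.1]))                           # roughly [0.66, 0.24, 0.10]
loss_true_0 = categorical_cross_entropy(np.array([1, 0, 0]), probs)  # about 0.42
loss_true_2 = categorical_cross_entropy(np.array([0, 0, 1]), probs)  # about 2.32

# ImageNet-sized output: 1000 classes, all logits equal, so softmax is uniform
num_classes = 1000
uniform = softmax(np.zeros(num_classes))
target = np.zeros(num_classes)
target[42] = 1  # hypothetical true class index
loss_uniform = categorical_cross_entropy(target, uniform)  # ln(1000), about 6.908

print(loss_true_0, loss_true_2, loss_uniform)
```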

Implementation in Python

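```python
from math import log2

p = [0.10, 0.40, 0.50]  # true (target) distribution
q = [0.80, 0.15, 0.05]  # predicted distribution

def cross_entropy(p, q):
    # H(p, q) = -sum_i p_i * log2(q_i); base-2 logarithm gives the result in bits
    return -sum(p[i] * log2(q[i]) for i in range(len(p)))

print(cross_entropy(p, q))  # Output: about 3.288
```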

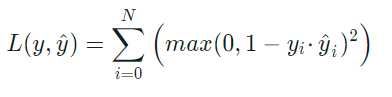

Hinge Loss

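Hinge loss is used for binary classification problems. It evaluates how well a decision boundary separates the data: it penalizes points that are misclassified and also points that are correctly classified but lie too close to the boundary. Hinge loss is best known from support vector machines (SVMs). It expects labels of -1 and +1 and operates on a real-valued score (for example, the raw output of a linear SVM or of a network's last layer) rather than on a probability. For example, hinge loss can be used to train a classifier that decides whether an email is spam or not.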
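In formula form, with the label $y \in \{-1, +1\}$ and the model's raw score $\hat{y}$ (the `y` and `yHat` of the implementation that follows), hinge loss is zero only when the prediction has the correct sign with a margin of at least 1. Three worked cases for a positive example ($y = +1$):

```latex
L(y, \hat{y}) = \max\left(0,\; 1 - y\,\hat{y}\right), \qquad y \in \{-1, +1\}

\begin{aligned}
\hat{y} = 2:   &\quad \max(0,\; 1 - 2)   = 0   &&\text{(correct, outside the margin)} \\
\hat{y} = 0.5: &\quad \max(0,\; 1 - 0.5) = 0.5 &&\text{(correct, inside the margin)} \\
\hat{y} = -1:  &\quad \max(0,\; 1 + 1)   = 2   &&\text{(misclassified)}
\end{aligned}
```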

Implementation in Python

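```python
import numpy as np

def Hinge(yHat, y):
    # np.maximum compares element-wise; np.max(0, x) would treat x as an axis argument
    return np.maximum(0, 1 - yHat * y)

print(Hinge(1, 1))  # Output: 0
```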
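The same idea extends to a batch of predictions, since `np.maximum` works element-wise. In this sketch the helper name `hinge_loss` and the spam-classifier scores are hypothetical; labels are +1 for spam and -1 for not spam, and the batch loss is taken as the mean of the per-example losses.

```python
import numpy as np

def hinge_loss(y_true, scores):
    # y_true holds labels in {-1, +1}; scores are raw real-valued model outputs
    margins = y_true * scores           # positive when the predicted sign is correct
    return np.maximum(0, 1 - margins)   # zero once the margin reaches 1

# Hypothetical spam classifier outputs: +1 = spam, -1 = not spam
y_true = np.array([1, 1, -1, -1])
scores = np.array([2.0, 0.5, -3.0, 0.5])

losses = hinge_loss(y_true, scores)  # per-example losses: 0, 0.5, 0, 1.5
print(losses, losses.mean())         # mean loss is 0.5
```

The first and third emails are classified correctly with a comfortable margin and cost nothing; the second is correct but inside the margin; the fourth is on the wrong side of the boundary and receives the largest penalty.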

Conclusion

In this article, we discussed several types of loss function and saw that the choice of loss function depends on the model's output tensor. Choosing the right loss function is important because it is how we evaluate the model, and it provides the error that optimization algorithms such as gradient descent minimize during training. Gradient descent drives this error toward a minimum, which is guaranteed to be the global minimum only for convex problems such as linear regression with MSE. Using the implementations shown above, these loss functions can measure the error of any model whose output matches the loss: MSE and MAE for continuous predictions, and cross-entropy and hinge loss for classification.