After learning how to build different predictive models, it is time to understand how to use them in real time to make predictions. Deploying a model in production is the real test of its ability to generalize. For example, if you have built a model that predicts whether a customer will default, you only find out how good it is once it is deployed and starts predicting on new incoming data.

Model deployment means placing a trained model in a production environment or on a server, where it takes input from users and returns output in real time. Suppose you have to build a model that predicts whether to approve a loan for a customer. The model is trained on features such as salary, dependents, loan amount and several others; in production it makes a prediction when you supply values for these same fields. The input must contain every feature the model was trained on, otherwise the model cannot make a prediction.

This article demonstrates how to deploy a model in real time using Flask and a REST API, through which we can make predictions for incoming data. We will build a classification model on the iris dataset and deploy it to make real-time class predictions.

What you will learn from this article?

- Working of model deployment.

- Different modes of model deployment.

- Model serialization and pickling.

- Real-time prediction.

1. Working of Model Deployment

After we have built our predictive model, we deploy it in production. We give it a record containing the features on which it was trained, and it returns a prediction as output. The model is placed on a server where it can receive multiple requests for predictions. Once deployed, it can take inputs and return outputs for as many requests as are made to it.

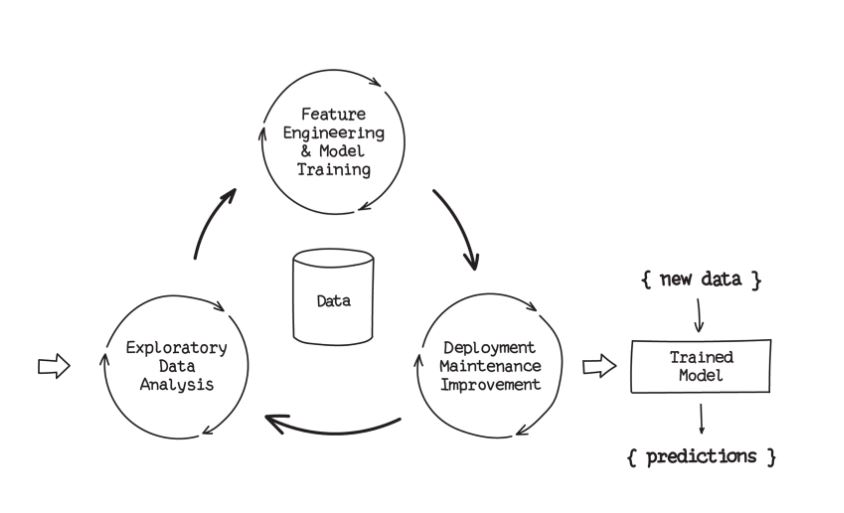

Steps from building a model to deploying it:

We first understand the data and perform exploratory data analysis on it. After that, we do feature engineering and feature selection. Next comes model building, followed by model tuning. Then we evaluate the model and check its performance. Once the model is approved for deployment, we deploy it and it computes predictions in real time.

2. Different modes of Model Deployment

There are two main modes of model deployment: Batch Mode and Real-Time Mode. Let us understand what each mode means. Consider a case where a bank has deployed a model that predicts loan approval for its customers. The model runs several times a day at fixed times. All incoming customer data is kept waiting, and each time the model runs, predictions are computed for the data collected so far. Requests that arrive after a run are held until the next one. This is called Batch Mode, where the model makes predictions in batches.

Consider a second case where a model is built to predict a customer's credit score. The customer fills in all the fields required as features, and as soon as they hit the submit button they receive their credit score. This is called Real-Time Mode, where predictions are made in real time. This mode depends on infrastructure that can handle the load and complete processing and prediction within seconds.
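
The difference between the two modes can be sketched in a few lines of Python. This is only an illustration: predict_default is a hypothetical stand-in for a trained model, and the feature names and the threshold rule are assumptions, not a real scoring system.

```python
from collections import deque

def predict_default(record):
    # Hypothetical stand-in for a trained model: flags a loan as risky (1)
    # when the requested amount exceeds five times the salary.
    return 1 if record["loan_amount"] > 5 * record["salary"] else 0

# Batch mode: requests wait in a queue until the next scheduled run.
pending = deque()

def submit_for_batch(record):
    pending.append(record)

def run_batch():
    # Score everything that has accumulated since the last run.
    results = []
    while pending:
        results.append(predict_default(pending.popleft()))
    return results

# Real-time mode: each request is scored as soon as it arrives.
def handle_request(record):
    return predict_default(record)

submit_for_batch({"salary": 50_000, "loan_amount": 100_000})
submit_for_batch({"salary": 20_000, "loan_amount": 150_000})
batch_results = run_batch()  # both waiting records are scored in one run
realtime_result = handle_request({"salary": 30_000, "loan_amount": 60_000})
print(batch_results, realtime_result)
```

In a real batch deployment, run_batch would typically be triggered by a scheduler at the fixed times, while handle_request would sit behind a web endpoint.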

3. Model serialization and pickling

To deploy the model in production we first need to save it. While we build the model on our local system it can make predictions, but as soon as the Python session ends, the trained model held in memory is lost. So it is important to save the model to avoid repeating all the steps. This is called pickling, or serialization, in Python. Saving the model is important for both modes of model deployment. To save the model we will use the pickle module, which lets you save and load Python objects.
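
As a minimal sketch of what pickling does, the example below serializes a small object to bytes and restores it. The "model" here is a hypothetical dictionary of parameters for a toy linear scorer, chosen only to keep the example free of external libraries; a scikit-learn estimator is pickled the same way.

```python
import pickle

# Hypothetical "model": the learned parameters of a tiny linear scorer.
model = {"weights": [0.4, -0.2, 0.1], "bias": 0.5}

def predict(params, features):
    # Weighted sum of the features plus the bias term.
    return sum(w * x for w, x in zip(params["weights"], features)) + params["bias"]

# Serialize the in-memory object to bytes
# (pickle.dump writes the same bytes to an open file instead).
payload = pickle.dumps(model)

# Later, possibly in another Python process, rebuild the object from the bytes.
restored = pickle.loads(payload)

same_params = restored == model
same_prediction = predict(restored, [1.0, 2.0, 3.0]) == predict(model, [1.0, 2.0, 3.0])
print(same_params, same_prediction)
```

Only load pickle files from sources you trust, because unpickling can execute arbitrary code.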

Classification model

First, we need to import all the required libraries. We will directly use a data set that is already present in the sklearn library for building the model. Use the below code to import the libraries and load the data.

    import sklearn
    import sklearn.datasets
    import sklearn.ensemble
    import sklearn.model_selection
    import pickle
    import os

    # Load the iris dataset bundled with scikit-learn
    iris = sklearn.datasets.load_iris()

After loading the dataset, we split it into training and testing sets in an 80:20 ratio. We then initialize our model, a random forest classifier, and fit it on the training data. Use the code below to do the same.

    # Split the data 80:20 into training and testing sets
    training, testing, training_labels, testing_labels = sklearn.model_selection.train_test_split(
        iris.data, iris.target, train_size=0.80
    )

    # Train a random forest with 500 trees
    rfcl = sklearn.ensemble.RandomForestClassifier(n_estimators=500)
    rfcl.fit(training, training_labels)

After this, we save the model we have built using the pickle library. We first specify the directory where we want to save the model and give the file a name. Then we save it using the dump function, load it back, and print its accuracy on the test set. Use the code below to save the model.

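    # Directory where the model file will be written
    os.chdir('/Users/rsdwi/OneDrive/Desktop')
    filename = 'iris_model'

    # Serialize the trained model to disk
    with open(filename, 'wb') as f:
        pickle.dump(rfcl, f)

    # Load it back and check its accuracy on the held-out test set
    with open(filename, 'rb') as f:
        load_model = pickle.load(f)
    result = load_model.score(testing, testing_labels)
    print(result)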

4. Real-time Prediction

Let us understand how to make real-time predictions by exposing the model through an API using the Flask framework. We will run the model on a server as a REST API that receives incoming prediction requests and returns the output for each one.

We will now quickly write the server script. We first import all the required libraries. If the flask package is not installed, install it (for example with pip install flask) before executing the code below.

Server-side Script

    import numpy as np
    from flask import Flask, request, jsonify
    import pickle
    import os

We then define the path where the model is located and load it. After loading the model, we create the Flask application and define a route so that the URL for accessing the application ends with /api. We set the method to POST. Two common HTTP methods for communicating over the web are GET and POST; we use POST to receive the request data and send the prediction back in the response. Use the code below to do the same.

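    # Load the pickled model from the directory where it was saved
    os.chdir('/Users/rsdwi/OneDrive/Desktop')
    filename = 'iris_model'
    with open(filename, 'rb') as f:
        load_model = pickle.load(f)

    app = Flask(__name__)

    # Route for prediction requests; it decorates the predict() function defined next
    @app.route('/api', methods=['POST'])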

After defining the Flask application, we define the function that gets the data from the user and makes the prediction. First, we read the JSON data sent by the user and store the features on which the model was trained in predict_request. Then we make a prediction using the model we loaded earlier, store it in the pred variable, and finally return the value of pred.

    def predict():
        # Parse the JSON body of the POST request
        data = request.get_json(force=True)
        predict_request = [[data['sepal_length'], data['sepal_width'],
                            data['petal_length'], data['petal_width']]]
        # Use a separate name: assigning to "request" here would shadow Flask's request object
        features = np.array(predict_request)
        print(features)
        prediction = load_model.predict(features)
        pred = prediction[0]
        print(pred)
        return jsonify(int(pred))
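
Because the prediction function reads each feature by key, a payload with a missing or misspelled key raises a KeyError inside the handler. One possible way to fail with a clearer message is to validate the payload first. The sketch below is an assumption layered on top of the article's code: build_feature_row and FEATURE_NAMES are hypothetical names, not part of Flask or scikit-learn.

```python
# Feature names in the order the model was trained on (iris measurements).
FEATURE_NAMES = ["sepal_length", "sepal_width", "petal_length", "petal_width"]

def build_feature_row(payload):
    """Hypothetical helper: turn a JSON payload into one row of model input."""
    missing = [name for name in FEATURE_NAMES if name not in payload]
    if missing:
        raise ValueError("missing features: " + ", ".join(missing))
    # Convert to float so numeric strings such as "5.1" are also accepted.
    return [[float(payload[name]) for name in FEATURE_NAMES]]

row = build_feature_row(
    {"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4, "petal_width": 0.2}
)

# A misspelled key is reported by name instead of surfacing as a KeyError.
try:
    build_feature_row({"sepal_lenth": 5.1, "sepal_width": 3.5})
    error_message = None
except ValueError as exc:
    error_message = str(exc)
print(row, error_message)
```

Inside the Flask handler, the ValueError could be caught and turned into a 400 response so the client knows which fields to fix.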

We then define the main block, which starts the app and sets the port it runs on. Use the code below to do the same.

    if __name__ == '__main__':
        # Start the Flask development server on port 9000
        app.run(port=9000, debug=True)
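
Before starting the server, the endpoint can be exercised in-process with Flask's test client. The following is a minimal sketch that assumes Flask is installed; StubModel is a hypothetical stand-in for the pickled classifier so that the example runs without the model file.

```python
from flask import Flask, request, jsonify

class StubModel:
    """Hypothetical stand-in for the pickled classifier; always predicts class 0."""
    def predict(self, rows):
        return [0 for _ in rows]

app = Flask(__name__)
model = StubModel()

@app.route('/api', methods=['POST'])
def predict():
    data = request.get_json(force=True)
    row = [[data['sepal_length'], data['sepal_width'],
            data['petal_length'], data['petal_width']]]
    return jsonify(int(model.predict(row)[0]))

# The test client sends requests to the app in-process, without opening a port.
client = app.test_client()
response = client.post('/api', json={
    'sepal_length': 5.1, 'sepal_width': 3.5,
    'petal_length': 1.4, 'petal_width': 0.2,
})
print(response.status_code, response.get_json())
```

Swapping StubModel for the model loaded with pickle gives the same check against the real classifier.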

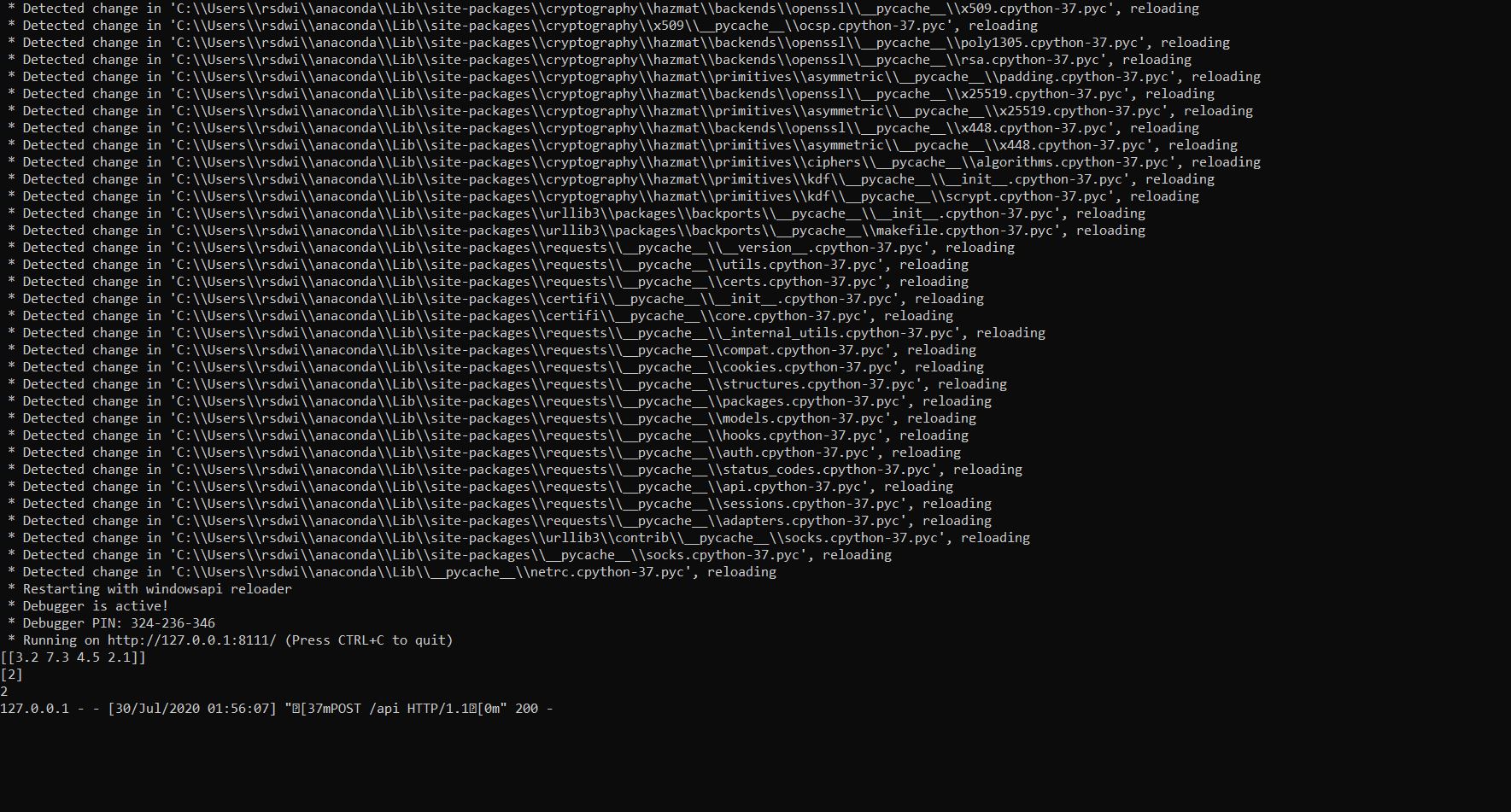

Now let us write the client-side Python script. We first import the required libraries and set the URL where the server script is running. We then create a dictionary holding the feature values supplied by the user, serialize it to JSON, and store it in the data variable. The data is sent to the server at that URL. The server makes the prediction and sends it back to the client as JSON, which is printed at the client end. You can run the server and client Python scripts to compute predictions.

Client-side Script

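    import requests
    import json

    url = "http://localhost:9000/api"

    # Feature values to classify, serialized as JSON
    data = json.dumps({'sepal_length': 3.2, 'sepal_width': 7.3,
                       'petal_length': 4.5, 'petal_width': 2.1})

    # POST the payload to the server and print the predicted class
    r = requests.post(url, data)
    print(r.json())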

First, we need to run the server script and then the client script to check the predictions. The client sends a request containing the data for prediction to the server. The server makes the prediction and sends it back to the client in the response.
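
The server returns the prediction as a bare integer class index. On the client side this can be mapped back to a species name. The sketch below assumes the class order of scikit-learn's iris dataset (0, 1, 2 for setosa, versicolor, virginica); describe_prediction is a hypothetical helper name.

```python
# Class order used by scikit-learn's load_iris(): indices 0, 1, 2.
IRIS_CLASS_NAMES = ["setosa", "versicolor", "virginica"]

def describe_prediction(class_index):
    """Hypothetical helper: map the integer returned by the API to a species name."""
    if not isinstance(class_index, int) or not 0 <= class_index < len(IRIS_CLASS_NAMES):
        raise ValueError(f"unexpected class index: {class_index!r}")
    return IRIS_CLASS_NAMES[class_index]

label = describe_prediction(2)
print(label)
```

In the client script, the value of r.json() would be passed to this helper.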

Conclusion

To conclude, you should now have a fair idea of how to deploy a model and make predictions in real time. The three main things to keep are the pickle file of the model, the server-side script, and the client-side script. You can also compute predictions from different machines by using the URL where the server is running. There are many other platforms where you can deploy your models, such as AWS and Microsoft Azure. You can explore them as well and assess how your model performs. You can check out the article “My First Kaggle Problem with CNN Model – To Count Fingers And Distinguish Between Left And Right Hand?”, save the model built there, deploy it and start predicting.