Millions of news articles are published on the internet every day, and the figure multiplies if we include tweets from Twitter. The internet has become one of the biggest channels for spreading fake news, so we need a mechanism that identifies fake news published online and warns readers accordingly. Researchers have proposed methods that identify fake news by analyzing the text of news articles with machine learning techniques. Here, we also discuss machine learning techniques that can identify fake news accurately.

In this article, we will train machine learning classifiers to predict whether a given news article is real or fake. We will use three popular classification algorithms: Logistic Regression, the Support Vector Classifier and Naive Bayes. After evaluating the performance of all three, we will conclude which of them performs best on this task.

The Data Set

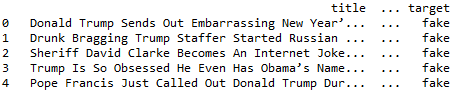

The dataset used in this article is the publicly available Fake and real news dataset from Kaggle. It consists of two CSV files, one containing true news and one containing fake news, and each file has title, text, subject and date columns. The true and fake CSV files contain 21,417 true news articles and 23,481 fake news articles respectively. To train the classifiers, we will add a target column to both datasets that labels each article as true or fake.
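As a quick sanity check on these counts, the arithmetic below (our own illustration, not part of the original workflow) computes the total number of articles and the accuracy of a trivial classifier that always predicts the larger class. It assumes the test split keeps roughly the same class ratio as the full dataset. Any useful model should beat this baseline by a wide margin.

```python
# Class counts reported for the Kaggle "Fake and real news" dataset
n_true = 21417
n_fake = 23481
total = n_true + n_fake

# A trivial classifier that always predicts the majority class ("fake")
# is right on every fake article and wrong on every true one.
majority_baseline = max(n_true, n_fake) / total

print(f"Total articles: {total}")
print(f"Majority-class baseline accuracy: {majority_baseline:.1%}")
```

With these counts the baseline is about 52%, which puts the accuracies reported later into perspective.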

First, we will import all the required libraries.

```python
# Importing libraries
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.feature_extraction.text import TfidfTransformer
from sklearn.metrics import accuracy_score, confusion_matrix, classification_report
from sklearn.linear_model import LogisticRegression
from sklearn.svm import LinearSVC
from sklearn.naive_bayes import MultinomialNB
```

After importing the libraries, we read the two CSV files into pandas DataFrames.

```python
# Reading the CSV files
true = pd.read_csv("True.csv")
fake = pd.read_csv("Fake.csv")
```

Next, we add a target column to both datasets, labelling every row of the fake dataset as 'fake' and every row of the true dataset as 'true'. We then combine the two into a single news dataset.

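```python
# Labelling fake and true news (the DataFrame is named `true`, as read above)
fake['target'] = 'fake'
true['target'] = 'true'

# Combining both into one news dataset with a fresh 0..n-1 index
news = pd.concat([fake, true]).reset_index(drop=True)
news.head()
```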
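The reason for `reset_index(drop=True)` is that `pd.concat` keeps each input's original row labels, so the combined frame would contain duplicate index values. The sketch below shows this on two tiny hypothetical DataFrames that stand in for the real `fake` and `true` data.

```python
import pandas as pd

# Hypothetical toy stand-ins for the real fake/true DataFrames
fake = pd.DataFrame({"title": ["A", "B"], "text": ["fake one", "fake two"]})
true = pd.DataFrame({"title": ["C"], "text": ["real one"]})

fake["target"] = "fake"
true["target"] = "true"

# concat keeps the original indices, so label 0 appears twice
stacked = pd.concat([fake, true])

# reset_index(drop=True) replaces them with 0..n-1 and discards the old labels
news = stacked.reset_index(drop=True)

print(list(stacked.index))
print(list(news.index))
print(news["target"].value_counts())
```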

After building the combined dataset, we split it into training and test sets. We keep 20% of the data for testing the classifiers; this proportion can be changed through the test_size parameter.
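Because the two classes are only slightly imbalanced, a plain random split already keeps their ratio close in both sets. If you want the fake/true ratio to be exactly the same in the training and test sets, `train_test_split` accepts a `stratify` argument. This is an optional variation that the original code does not use, shown here on a hypothetical toy DataFrame.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data standing in for the real `news` DataFrame:
# 60 "fake" rows and 40 "true" rows
toy = pd.DataFrame({
    "text": [f"article {i}" for i in range(100)],
    "target": ["fake"] * 60 + ["true"] * 40,
})

# stratify= preserves the 60/40 class ratio in both the train and test sets
x_train, x_test, y_train, y_test = train_test_split(
    toy["text"], toy["target"], test_size=0.2, random_state=1, stratify=toy["target"]
)

print(y_train.value_counts().to_dict())
print(y_test.value_counts().to_dict())
```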

```python
# Train-test split: 80% training data, 20% test data
x_train, x_test, y_train, y_test = train_test_split(news['text'], news.target, test_size=0.2, random_state=1)
```

In the next step, we classify the news texts as fake or true, using each of the three algorithms in turn. For every model, the news text is first converted into a matrix of token counts with CountVectorizer, and these counts are then reweighted with TfidfTransformer (term frequency-inverse document frequency). The resulting TF-IDF features are the model's inputs, and the target column defined above is its output. To chain the count vectorizer, the TF-IDF transformer and the classification model together, we use a pipeline. A machine learning pipeline automates the workflow: it applies a sequence of transformations to the data and then fits a final estimator, so the whole chain can be trained, tested and evaluated as a single model.
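To see what the first two pipeline stages produce, the sketch below applies them to three hypothetical toy documents, once step by step and once as a two-stage `Pipeline`. It also compares the result with scikit-learn's `TfidfVectorizer`, which is documented as equivalent to `CountVectorizer` followed by `TfidfTransformer`; the article itself uses the two separate steps.

```python
import numpy as np
from sklearn.pipeline import Pipeline
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer, TfidfVectorizer

# Hypothetical toy documents
docs = [
    "the senate passed the bill",
    "shocking secret the media hides",
    "the bill was signed into law",
]

# Step 1: raw token counts, a sparse (documents x vocabulary) matrix
counts = CountVectorizer().fit_transform(docs)

# Step 2: reweight the counts with TF-IDF
tfidf_manual = TfidfTransformer().fit_transform(counts)

# The same two steps chained in a Pipeline (the last step is a transformer here)
features = Pipeline([('vect', CountVectorizer()), ('tfidf', TfidfTransformer())])
tfidf_pipe = features.fit_transform(docs)

# TfidfVectorizer combines both steps in a single transformer
tfidf_single = TfidfVectorizer().fit_transform(docs)

print(counts.shape)
print(np.allclose(tfidf_manual.toarray(), tfidf_pipe.toarray()))
print(np.allclose(tfidf_manual.toarray(), tfidf_single.toarray()))
```

Appending a classifier as a third step, as the article does, lets `fit` and `predict` run the whole chain on raw text.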

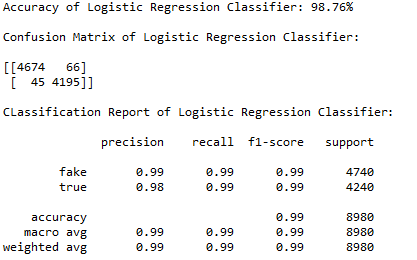

In the first step, we classify the news text using the Logistic Regression model and evaluate its performance using evaluation metrics: accuracy, the confusion matrix and the classification report.

```python
# Logistic regression classification
# Chain: token counts -> TF-IDF weights -> classifier
pipe1 = Pipeline([('vect', CountVectorizer()),
                  ('tfidf', TfidfTransformer()),
                  ('model', LogisticRegression())])
model_lr = pipe1.fit(x_train, y_train)
lr_pred = model_lr.predict(x_test)

print("Accuracy of Logistic Regression Classifier: {}%".format(round(accuracy_score(y_test, lr_pred) * 100, 2)))
print("\nConfusion Matrix of Logistic Regression Classifier:\n")
print(confusion_matrix(y_test, lr_pred))
print("\nClassification Report of Logistic Regression Classifier:\n")
print(classification_report(y_test, lr_pred))
```
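Because the vectorizer and TF-IDF steps live inside the pipeline, a fitted model can score raw, unseen headlines directly, and logistic regression can also report class probabilities through `predict_proba`. The sketch below trains the same pipeline on a few hypothetical toy texts rather than the Kaggle data; in practice you would call `model_lr.predict_proba(...)` on the model trained above.

```python
from sklearn.pipeline import Pipeline
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.linear_model import LogisticRegression

# Hypothetical toy training data
texts = [
    "senate passes budget bill after long debate",
    "president signs trade agreement with allies",
    "shocking secret cure doctors do not want you to know",
    "you will not believe this miracle trick",
]
labels = ["true", "true", "fake", "fake"]

pipe = Pipeline([('vect', CountVectorizer()),
                 ('tfidf', TfidfTransformer()),
                 ('model', LogisticRegression())])
pipe.fit(texts, labels)

# Raw strings go straight in; vectorizing happens inside predict/predict_proba
new_texts = ["miracle cure doctors hide", "senate debate on trade bill"]
predictions = pipe.predict(new_texts)
probabilities = pipe.predict_proba(new_texts)  # columns follow pipe.classes_

print(pipe.classes_)
print(predictions)
print(probabilities.round(3))
```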

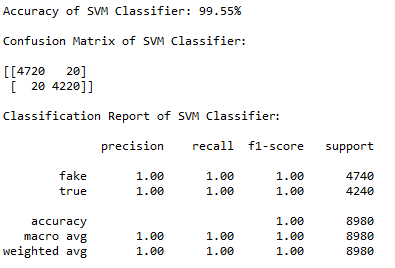

Next, we classify the news text using the Support Vector Classifier model and evaluate its performance using the same evaluation metrics.

```python
# Support Vector classification
# Chain: token counts -> TF-IDF weights -> linear SVM
pipe2 = Pipeline([('vect', CountVectorizer()),
                  ('tfidf', TfidfTransformer()),
                  ('model', LinearSVC())])
model_svc = pipe2.fit(x_train, y_train)
svc_pred = model_svc.predict(x_test)

print("Accuracy of SVM Classifier: {}%".format(round(accuracy_score(y_test, svc_pred) * 100, 2)))
print("\nConfusion Matrix of SVM Classifier:\n")
print(confusion_matrix(y_test, svc_pred))
print("\nClassification Report of SVM Classifier:\n")
print(classification_report(y_test, svc_pred))
```
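Unlike logistic regression, `LinearSVC` does not provide `predict_proba`. It does expose `decision_function`, a signed score whose sign gives the class: positive means `classes_[1]` ('true' here) and negative means `classes_[0]` ('fake'). A larger magnitude means the text lies further from the separating hyperplane. The sketch below uses the same hypothetical toy texts as before, not the article's data.

```python
from sklearn.pipeline import Pipeline
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.svm import LinearSVC

# Hypothetical toy training data
texts = [
    "senate passes budget bill after long debate",
    "president signs trade agreement with allies",
    "shocking secret cure doctors do not want you to know",
    "you will not believe this miracle trick",
]
labels = ["true", "true", "fake", "fake"]

pipe = Pipeline([('vect', CountVectorizer()),
                 ('tfidf', TfidfTransformer()),
                 ('model', LinearSVC())])
pipe.fit(texts, labels)

new_texts = ["miracle cure doctors hide", "senate debate on trade bill"]
scores = pipe.decision_function(new_texts)  # > 0 means classes_[1], < 0 means classes_[0]
predictions = pipe.predict(new_texts)

for text, score, label in zip(new_texts, scores, predictions):
    print(f"{score:+.3f}  {label:<5} {text}")
```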

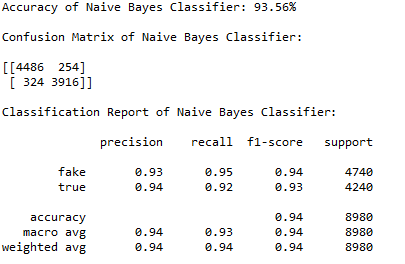

Finally, we classify the news text using the Naive Bayes Classifier model and evaluate its performance using the same evaluation metrics.

```python
# Naive-Bayes classification
# Chain: token counts -> TF-IDF weights -> multinomial Naive Bayes
pipe3 = Pipeline([('vect', CountVectorizer()),
                  ('tfidf', TfidfTransformer()),
                  ('model', MultinomialNB())])
model_nb = pipe3.fit(x_train, y_train)
nb_pred = model_nb.predict(x_test)

print("Accuracy of Naive Bayes Classifier: {}%".format(round(accuracy_score(y_test, nb_pred) * 100, 2)))
print("\nConfusion Matrix of Naive Bayes Classifier:\n")
print(confusion_matrix(y_test, nb_pred))
print("\nClassification Report of Naive Bayes Classifier:\n")
print(classification_report(y_test, nb_pred))
```
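The three code blocks differ only in the final estimator, so the same comparison can also be written as one loop. This is a refactoring sketch, not the article's code. It runs on hypothetical toy train and test lists; to reproduce the article's comparison you would pass in `x_train`, `x_test`, `y_train` and `y_test` from the split above.

```python
from sklearn.pipeline import Pipeline
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.linear_model import LogisticRegression
from sklearn.svm import LinearSVC
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy data standing in for the real train/test split
x_train = [
    "senate passes budget bill after long debate",
    "president signs trade agreement with allies",
    "shocking secret cure doctors do not want you to know",
    "you will not believe this miracle trick",
]
y_train = ["true", "true", "fake", "fake"]
x_test = ["miracle cure doctors hide", "senate debate on trade bill"]
y_test = ["fake", "true"]

models = {
    "Logistic Regression": LogisticRegression(),
    "SVM": LinearSVC(),
    "Naive Bayes": MultinomialNB(),
}

results = {}
for name, model in models.items():
    # Same feature steps for every model; only the final estimator changes
    pipe = Pipeline([('vect', CountVectorizer()),
                     ('tfidf', TfidfTransformer()),
                     ('model', model)])
    pipe.fit(x_train, y_train)
    pred = pipe.predict(x_test)
    results[name] = accuracy_score(y_test, pred)
    print(f"Accuracy of {name} Classifier: {round(results[name] * 100, 2)}%")
    print(confusion_matrix(y_test, pred))
```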

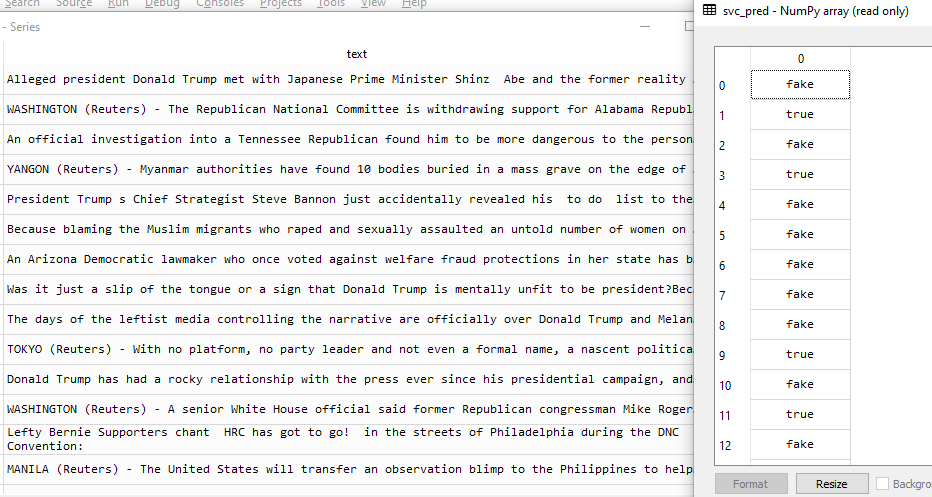

Comparing the accuracy scores, confusion matrices and classification reports of the three models, we can conclude that the Support Vector Classifier outperformed both the Logistic Regression model and the Multinomial Naive Bayes model on this task. The Support Vector Classifier reached close to 100% accuracy in classifying the fake news texts in the test set. The image below shows a snapshot of the labels predicted by the Support Vector Classifier for the news texts.
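One simple way to produce such a snapshot is to place the test texts, their actual labels and the predicted labels side by side in a DataFrame. The sketch below uses small hypothetical stand-ins for `x_test`, `y_test` and `svc_pred`; with the real variables you would drop the three stand-in lines. Because `train_test_split` returns pandas Series that keep their original index, each row can be traced back to `news`.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-ins for x_test, y_test and svc_pred from the code above
x_test = pd.Series(["miracle cure doctors hide", "senate debate on trade bill", "secret trick"],
                   index=[17, 4, 42])
y_test = pd.Series(["fake", "true", "fake"], index=[17, 4, 42])
svc_pred = np.array(["fake", "true", "true"])

# The Series supply the index; the prediction array is aligned by position
snapshot = pd.DataFrame({"text": x_test, "actual": y_test, "predicted": svc_pred})
snapshot["correct"] = snapshot["actual"] == snapshot["predicted"]

print(snapshot.head())
print(snapshot[~snapshot["correct"]])  # only the misclassified rows
```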