This article introduces PyKale, a Python library built on PyTorch that leverages knowledge from multiple sources for interpretable and accurate predictions in machine learning. The library pursues three objectives of green machine learning:

- Reduce repetition and redundancy in machine learning libraries

- Reuse existing resources

- Recycle learning models across areas

To meet these objectives, PyKale provides the following:

- For the first objective: the library refactors machine learning models, standardizes their workflow, and enforces a consistent coding style.

- For the second objective: the library reuses an existing pipeline based on multilinear PCA across different data, including gait video data, the CIFAR image dataset, and a cardiac MRI reports dataset. It also relies on existing libraries for functionality that is already available.

- For the third objective: the library identifies the functionality that models have in common and recycles models from one application to another.

The library has a pipeline-based API that unifies the workflow into a few standard steps, which makes the models more flexible. The API covers the following steps of a machine learning workflow:

- Load data

- Preprocess data

- Embed

- Predict

- Evaluate

- Interpret
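The six steps above can be sketched as plain functions chained together. This is only an illustration of the pipeline idea: every function name and the toy data below are hypothetical and are not part of the PyKale API.

```python
from typing import Dict, List, Tuple

Sample = Tuple[List[float], int]  # (features, label)


def load_data() -> List[Sample]:
    # Toy dataset standing in for images, graphs, text, or video.
    return [([0.1, 0.2], 0), ([0.9, 0.8], 1), ([0.2, 0.1], 0), ([0.7, 0.9], 1)]


def preprocess(samples: List[Sample]) -> List[Sample]:
    # Clip every feature to the range [0, 1].
    return [([min(1.0, max(0.0, v)) for v in x], y) for x, y in samples]


def embed(samples: List[Sample]) -> List[Tuple[float, int]]:
    # Reduce each feature vector to one number: its mean.
    return [(sum(x) / len(x), y) for x, y in samples]


def predict(embedded: List[Tuple[float, int]], threshold: float = 0.5) -> List[int]:
    # Predict class 1 when the embedding exceeds the threshold.
    return [int(z > threshold) for z, _ in embedded]


def evaluate(predictions: List[int], embedded: List[Tuple[float, int]]) -> float:
    # Fraction of predictions that match the true labels.
    labels = [y for _, y in embedded]
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def interpret(threshold: float = 0.5) -> Dict[str, object]:
    # Describe the decision rule in human-readable form.
    return {"rule": "mean feature > threshold", "threshold": threshold}


def run_pipeline() -> Tuple[float, Dict[str, object]]:
    embedded = embed(preprocess(load_data()))
    predictions = predict(embedded)
    return evaluate(predictions, embedded), interpret()


accuracy, explanation = run_pipeline()
print(accuracy, explanation)
```

Because each stage only depends on the output of the previous one, a single stage (for example the embedding) can be swapped without touching the rest, which is the flexibility a pipeline-based API aims for.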

PyKale supports graph, image, text, and video data, which can be loaded with PyTorch DataLoaders, and it supports CNN, GCN, and transformer modules. It also supports domain adaptation, a branch of transfer learning. In short, the library focuses on multimodal learning and transfer learning.

Next, we will walk through a PyKale demonstration of domain adaptation on digits datasets with PyTorch Lightning, run on Google Colab, a hosted notebook platform. In domain adaptation, we take a model trained on one (source) dataset, use a digits dataset as the target, and adapt the model to that target dataset.
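To see why adaptation is needed at all, here is a minimal standard-library sketch of domain shift. It deliberately uses a much simpler stand-in technique (centering each domain's features on its own mean) rather than the adversarial method used in the PyKale example, and all names and numbers are invented for illustration.

```python
from statistics import mean


def fit_threshold(xs, ys):
    # Place the decision threshold midway between the two class means.
    m0 = mean(x for x, y in zip(xs, ys) if y == 0)
    m1 = mean(x for x, y in zip(xs, ys) if y == 1)
    return (m0 + m1) / 2


def accuracy(xs, ys, threshold):
    # Fraction of samples for which "x > threshold" matches the label.
    return mean(int((x > threshold) == bool(y)) for x, y in zip(xs, ys))


def center(xs):
    # Subtract the domain's own mean: a very crude form of feature alignment.
    mu = mean(xs)
    return [x - mu for x in xs]


# Source domain: class 0 lies around 1.0, class 1 around 3.0.
source_x = [0.8, 1.0, 1.2, 2.8, 3.0, 3.2]
source_y = [0, 0, 0, 1, 1, 1]

# Target domain: same classes, but every feature is shifted by +5.
target_x = [x + 5.0 for x in source_x]
target_y = list(source_y)  # used only for evaluation, never for fitting

# Without adaptation: the source threshold is applied directly to the target.
acc_plain = accuracy(target_x, target_y, fit_threshold(source_x, source_y))

# With adaptation: both domains are centered before fitting and predicting.
threshold_c = fit_threshold(center(source_x), source_y)
acc_adapted = accuracy(center(target_x), target_y, threshold_c)

print(acc_plain, acc_adapted)
```

The classifier fitted on the source data labels every shifted target sample as class 1, while aligning the two feature distributions first restores the correct predictions. Centering only works here because the shift is a pure offset and the classes are balanced; real image domains need learned alignment such as the approach in the example that follows.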

Code Implementation: PyKale

Installing the packages.

Input:

!pip install git+https://github.com/pykale/pykale.git#egg=pykale[extras]

Cloning the pykale repository.

Input:

!git clone https://github.com/pykale/pykale.git

This domain adaptation demo is built on main.py from the digits_dann_lightn example.

Changing into the digits_dann_lightn directory.

Input:

%cd pykale/examples/digits_dann_lightn

Importing the required modules:

Input:

import logging
import os

import numpy as np
import pytorch_lightning as pl
import torchvision
from torch.utils.data import DataLoader
from torch.utils.data import SequentialSampler

# get_model comes from model.py in the example directory entered above
from model import get_model
from kale.utils.csv_logger import setup_logger

The PyKale example provides default configuration values for domain adaptation, which can be reused for similar problems. The tutorial also ships a .yaml file whose settings override these defaults.

Input:

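from config import get_cfg_defaults

gpus = None  # no GPU requested; change this when one is available

# Start from the defaults, then apply the tutorial overrides
PyKale_cfg = get_cfg_defaults()
path = "./configs/tutorial.yaml"
PyKale_cfg.merge_from_file(path)
PyKale_cfg.freeze()  # make the configuration read-only
print(PyKale_cfg)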

The output shows that the dataset has 1024 samples, which are later split into training, validation, and test sets.
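The defaults-plus-overrides pattern used for the configuration can be sketched with the standard library alone. This is an assumption-laden illustration, not the configuration code of the example: the `merge` and `freeze` helpers are hypothetical, and the values in `DEFAULTS` are made up, with only the key names echoing those used in this tutorial.

```python
import copy
from types import MappingProxyType

# Hypothetical defaults: key names echo the tutorial, values are invented.
DEFAULTS = {
    "DATASET": {"SOURCE": "mnist", "TARGET": "usps", "VAL_SPLIT_RATIO": 0.1},
    "SOLVER": {"SEED": 2020, "MAX_EPOCHS": 100},
}


def merge(defaults, overrides):
    """Return a new dict: defaults updated recursively with overrides."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if key not in merged:
            # Rejecting unknown keys catches typos in the override file early.
            raise KeyError(f"unknown config key: {key}")
        if isinstance(value, dict) and isinstance(merged[key], dict):
            merged[key] = merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def freeze(cfg):
    """Wrap every level in a read-only view so later writes raise TypeError."""
    return MappingProxyType(
        {k: freeze(v) if isinstance(v, dict) else v for k, v in cfg.items()}
    )


# Stand-in for the parsed contents of a tutorial YAML file.
overrides = {"SOLVER": {"MAX_EPOCHS": 2}}

cfg = freeze(merge(DEFAULTS, overrides))
print(cfg["SOLVER"]["MAX_EPOCHS"], cfg["SOLVER"]["SEED"])
```

Freezing the merged configuration means an accidental assignment later in a notebook fails loudly instead of silently changing an experiment setting.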

Selecting the source and target data with DigitDataset.get_source_target, and restricting both to a subset of the classes: 1, 3, and 8.
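Before the actual call, the two ideas involved (keeping only some classes, then holding out a validation fraction) can be illustrated in plain Python. The helper `subset_and_split` and the toy samples are hypothetical and only mimic the roles of the `class_ids` and `val_split_ratio` arguments; they say nothing about how PyKale implements them.

```python
import random


def subset_and_split(samples, class_ids, val_ratio, seed=0):
    """Keep samples whose label is in class_ids, then hold out a fraction."""
    kept = [(x, y) for x, y in samples if y in class_ids]
    rng = random.Random(seed)  # local generator, so the split is repeatable
    rng.shuffle(kept)
    n_val = round(len(kept) * val_ratio)
    return kept[n_val:], kept[:n_val]


# Toy "digits": 100 samples with labels cycling through 0..9.
samples = [(f"img_{i}", i % 10) for i in range(100)]

train, val = subset_and_split(samples, class_ids={1, 3, 8}, val_ratio=0.1)
print(len(train), len(val))
```

Working with three classes instead of ten keeps the tutorial fast while still exercising the full training loop.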

Input:

from kale.loaddata.digits_access import DigitDataset
from kale.loaddata.multi_domain import MultiDomainDatasets

# Build the source and target dataset accessors named in the configuration
source, target, num_channels = DigitDataset.get_source_target(
    DigitDataset(PyKale_cfg.DATASET.SOURCE.upper()),
    DigitDataset(PyKale_cfg.DATASET.TARGET.upper()),
    PyKale_cfg.DATASET.ROOT,
)

# Combine both domains and keep only digits 1, 3 and 8
dataset = MultiDomainDatasets(
    source,
    target,
    config_weight_type=PyKale_cfg.DATASET.WEIGHT_TYPE,
    config_size_type=PyKale_cfg.DATASET.SIZE_TYPE,
    val_split_ratio=PyKale_cfg.DATASET.VAL_SPLIT_RATIO,
    class_ids=[1, 3, 8],
)

Setting seeds for the generation of pseudo-random numbers.
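Why seeding matters can be seen in a small standard-library sketch. The function `noisy_run` is a hypothetical stand-in for a training run whose result depends on random draws; it is not part of PyKale.

```python
import random


def noisy_run(seed):
    """Stand-in for a training run whose outcome depends on random draws."""
    rng = random.Random(seed)
    return [round(rng.random(), 6) for _ in range(3)]


first = noisy_run(2020)
second = noisy_run(2020)
other = noisy_run(2021)

print(first == second)  # compares two runs that share a seed
print(first == other)   # compares two runs with different seeds
```

Two runs that share a seed draw identical numbers, so their results can be reproduced and compared. Libraries such as NumPy and PyTorch keep their own random generators, which is why a helper that seeds them together is convenient.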

Input:

from kale.utils.seed import set_seed

seed = PyKale_cfg.SOLVER.SEED
set_seed(seed)

Setting up the model from the configuration and dataset defined earlier.

Input:

from model import get_model

%time model, train_params = get_model(PyKale_cfg, dataset, num_channels)

Defining the object to process the training further. Under the object store, the parameters define how the model will get trained.

Input:

import pytorch_lightning as pl

adap_trainer = pl.Trainer(

progress_bar_refresh_rate=PyKale_cfg.OUTPUT.PB_FRESH, # in steps

min_epochs=PyKale_cfg.SOLVER.MIN_EPOCHS,

max_epochs=PyKale_cfg.SOLVER.MAX_EPOCHS,

callbacks=[checkpoint_callback],

logger=False,

gpus=gpus)Output:

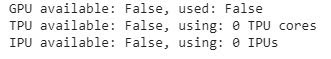

In this step, we can see the reports of the GPU and TPU availability. If you are running this on your local machine, you can set GPU and TPU settings to speed up the training. We can also save the logs of your outputs during or after training using setup_logger.

Input:

from kale.utils.csv_logger import setup_logger

logger, results, checkpoint_callback, test_csv_file = setup_logger(

train_params, PyKale_cfg.OUTPUT.DIR, PyKale_cfg.DAN.METHOD, seed

)Optimizing model parameters using the adap_trainer.

Input:

%time adap_trainer.fit(model)

# Record the validation metrics collected during training
results.update(
    is_validation=True,
    method_name=PyKale_cfg.DAN.METHOD,
    seed=seed,
    metric_values=adap_trainer.callback_metrics,
)

Testing the model on the test data, which was not used during training.

Input:

%time adap_trainer.test()

# Record the test metrics
results.update(
    is_validation=False,
    method_name=PyKale_cfg.DAN.METHOD,
    seed=seed,
    metric_values=adap_trainer.callback_metrics,
)

Printing the results of the model performance.
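The bookkeeping behind such a results object can be pictured with a small recorder that stores metrics per method and split and averages them over runs. The class `ResultsTable`, its `summary` method, and the numbers below are hypothetical; they are an assumption about the general idea, not a description of PyKale's implementation or of its output format.

```python
from collections import defaultdict
from statistics import mean


class ResultsTable:
    """Hypothetical stand-in for a results recorder; not PyKale's class."""

    def __init__(self):
        # Maps (method, split) to the list of metric dicts recorded so far.
        self._rows = defaultdict(list)

    def update(self, is_validation, method_name, seed, metric_values):
        split = "validation" if is_validation else "test"
        self._rows[(method_name, split)].append({"seed": seed, **metric_values})

    def summary(self, method_name):
        """Average each metric over all recorded runs, per split."""
        out = {}
        for (method, split), rows in self._rows.items():
            if method != method_name:
                continue
            metrics = [k for k in rows[0] if k != "seed"]
            out[split] = {m: mean(r[m] for r in rows) for m in metrics}
        return out


table = ResultsTable()
# Made-up accuracy values for two seeds.
table.update(True, "DANN", seed=1, metric_values={"acc": 0.5})
table.update(True, "DANN", seed=2, metric_values={"acc": 1.0})
table.update(False, "DANN", seed=1, metric_values={"acc": 0.75})
print(table.summary("DANN"))
```

Keeping the seed next to each recorded row makes it possible to trace an unusual score back to the run that produced it.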

Input:

results.print_scores(PyKale_cfg.DAN.METHOD)

This prints the scores our model obtained. The article has shown how to use PyKale for domain adaptation with the method selected by DAN.METHOD in the configuration file. This post is only a general introduction to modeling with PyKale. The library supports many other tasks, such as image classification, face detection, video preprocessing, and object detection.