LightAutoML (LAMA) is an open-source Python framework developed by the AutoML group of Sberbank AI Lab. It focuses on automated machine learning and provides end-to-end solutions for ML tasks. It is a lightweight and efficient framework for binary classification, multiclass classification and regression on tabular and text data. Apart from the predefined pipelines, it lets you build easy-to-use custom pipelines from predefined blocks, which include hyperparameter tuning, data processing, advanced feature selection, automatic time utilization, automatic report creation and a graphical profiling system for finding bottlenecks and data bugs.

Currently implemented pipelines are:

- BlackBox Preset

- WhiteBox Preset (Interpretable Models)

- NLP Preset

Motivation

The motivation for building LAMA comes from several demanding tasks in the model-building process:

- When the data contains many different data types, preprocessing becomes difficult, so advanced preprocessing is required.

- Developers and data scientists need to be able to change pipeline steps according to their requirements.

- Huge amounts of data: existing open-source solutions can be very slow to train on them.

- Typical model building (for example, the same task for different client segments): knowledge about the best pipeline for one segment can be reused in other models.

- Regular interpretability requirements for several model types (IRB).

Advantages

The following are the merits of using LAMA:

- Up to 10 times faster implementation.

- A way of transferring state-of-the-art solutions to industrial developers and data scientists.

- Higher quality of the implemented models.

- LAMA makes it possible to build models that would not be economically efficient to implement manually.

- Automatic validation, model benchmarking and fast hypothesis checking.

- Train-test gap elimination: no more need to spend ages building models.

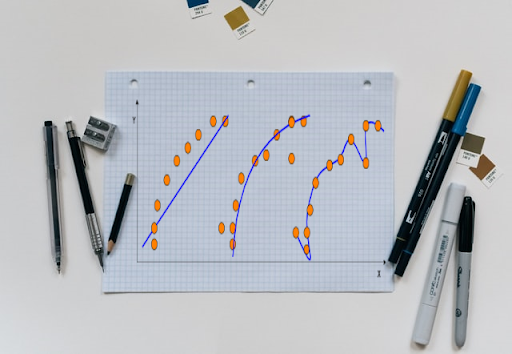

An example of building an ML pipeline is shown below:

Requirements

Install the LAMA framework from PyPI via pip:

```
!pip install lightautoml
!pip install albumentations==0.4.6
```

Demo – Create your own pipeline

This demo shows how to create your own pipeline from specified blocks: pipelines for feature generation and feature selection, ML algorithms, hyperparameter optimization etc.

- This is the basic step: importing all the libraries, defining the parameters, reading the dataset, etc.

```python
# Standard python libraries
import os
import time
import logging

# Installed libraries
import numpy as np
import pandas as pd
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
import torch

# Imports from our package
import lightautoml
from lightautoml.automl.base import AutoML
from lightautoml.ml_algo.boost_lgbm import BoostLGBM
from lightautoml.ml_algo.linear_sklearn import LinearLBFGS  # used in the multiclass demo
from lightautoml.ml_algo.tuning.optuna import OptunaTuner
from lightautoml.pipelines.features.lgb_pipeline import LGBSimpleFeatures, LGBAdvancedPipeline
from lightautoml.pipelines.features.linear_pipeline import LinearFeatures  # used in the multiclass demo
from lightautoml.pipelines.ml.base import MLPipeline
from lightautoml.pipelines.selection.importance_based import ImportanceCutoffSelector, ModelBasedImportanceEstimator
from lightautoml.reader.base import PandasToPandasReader
from lightautoml.tasks import Task
from lightautoml.utils.profiler import Profiler
from lightautoml.utils.timer import PipelineTimer  # used in the multiclass demo
from lightautoml.automl.blend import WeightedBlender
from lightautoml.dataset.roles import DatetimeRole
from lightautoml.automl.presets.tabular_presets import TabularAutoML, TabularUtilizedAutoML
from lightautoml.report import ReportDeco

# Show the logging.info messages used throughout the demos in the notebook output
logging.basicConfig(format='[%(asctime)s] (%(levelname)s): %(message)s', level=logging.INFO)
```

Define all the parameters

```python
# Define all the parameters
N_THREADS = 8  # number of threads for LightGBM and linear models
N_FOLDS = 5  # number of CV folds for AutoML
RANDOM_STATE = 42  # fixed random state for reproducibility
TEST_SIZE = 0.2  # test set share for the metric check
TARGET_NAME = 'TARGET'  # target column name
```

Initialize the profiler for the report

```python
# Change profiling decorator settings.
# By default, profiling decorators are turned off for speed and memory reduction.
# To see a profiling report after using LAMA, turn the decorators on as shown below.

# Create a Profiler object to get the profiling report
p = Profiler()
p.change_deco_settings({'enabled': True})
```

Load the dataset

```python
%%time
# Load the dataset (%%time must be the first line of a notebook cell)
data = pd.read_csv('https://raw.githubusercontent.com/sberbank-ai-lab/LightAutoML/master/example_data/test_data_files/sampled_app_train.csv')
data.head()
```

Some feature engineering steps are shown below.

```python
%%time
# (Optional step)
# This cell shows some user feature preparations that make the task more difficult.

# Create date columns from the day offsets to make the existing features more understandable.
# pd.to_timedelta(..., unit='D') also works on pandas >= 2, where astype('timedelta64[D]') fails.
data['BIRTH_DATE'] = (np.datetime64('2018-01-01') + pd.to_timedelta(data['DAYS_BIRTH'], unit='D')).astype(str)
data['EMP_DATE'] = (np.datetime64('2018-01-01') + pd.to_timedelta(np.clip(data['DAYS_EMPLOYED'], None, 0), unit='D')).astype(str)

# Create three new columns: a constant, an all-NaN column and a report date
data['constant'] = 1
data['allnan'] = np.nan
data['report_dt'] = np.datetime64('2018-01-01')

# Drop the 'DAYS_BIRTH' and 'DAYS_EMPLOYED' columns from the dataset
data.drop(['DAYS_BIRTH', 'DAYS_EMPLOYED'], axis=1, inplace=True)
```

Split the data into train and test sets.

```python
%%time
# (Optional step) Data splitting for train-test.
# This block can be omitted if you only train a model or already have separate train and test files.
train_data, test_data = train_test_split(data,
                                         test_size=TEST_SIZE,
                                         stratify=data[TARGET_NAME],
                                         random_state=RANDOM_STATE)
logging.info('Data split. Parts sizes: train_data = {}, test_data = {}'
             .format(train_data.shape, test_data.shape))
```

- In this step we are going to implement the pipeline shown below.

Create a binary classification task and a reader that converts the pandas DataFrame to AutoML’s dataset.

```python
%%time
# Step 1. Create Task and PandasReader

# We are going to do binary classification on the given dataset
task = Task('binary')

# PandasToPandasReader converts a pd.DataFrame to AutoML's PandasDataset
reader = PandasToPandasReader(task, cv=N_FOLDS, random_state=RANDOM_STATE)
```

The next step is to create a feature selector. For this, we create a LightGBM model with default parameters, initialize a simple feature pipeline and a model-based importance estimator, and then keep the features whose importance passes a threshold.
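The cutoff idea behind ImportanceCutoffSelector can be illustrated without LightAutoML. The sketch below is a minimal, hypothetical stand-in. `select_by_importance` is our own helper, and the importance values are made up rather than taken from a fitted LightGBM model. Treating "passes the threshold" as strictly greater than the cutoff is our assumption about how cutoff=0 behaves.

```python
# Hypothetical sketch of importance-threshold feature selection (not LightAutoML code).
# In the article the importances come from a fitted LightGBM model; here they are made up.


def select_by_importance(importances, cutoff=0.0):
    """Return the features whose importance is strictly greater than `cutoff`,
    ordered from most to least important."""
    kept = [name for name, score in importances.items() if score > cutoff]
    return sorted(kept, key=lambda name: importances[name], reverse=True)


# Made-up importances for some of the columns engineered earlier in this article.
# A tree model cannot split on 'constant' or 'allnan', so they get zero importance.
importances = {
    "BIRTH_DATE": 85.5,
    "EMP_DATE": 120.0,
    "constant": 0.0,
    "allnan": 0.0,
}

selected = select_by_importance(importances, cutoff=0)
print(selected)  # ['EMP_DATE', 'BIRTH_DATE']
```

With cutoff=0, uninformative columns such as constant and allnan are the ones that drop out.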

```python
%%time
# Create feature selector (if necessary).
# Feature importances of a LightGBM model are used to choose the best features.
# Note: LightGBM is not uniformly the best algorithm, so relying on a single model here
# may become a bottleneck for the performance of the AutoML model.

# Create a LightGBM model with the default parameters below
model0 = BoostLGBM(
    default_params={'learning_rate': 0.05, 'num_leaves': 64, 'seed': 42, 'num_threads': N_THREADS}
)

# Simple feature pipeline for tree-based models. Simple, but fine for feature selection:
# numeric features stay as is, datetimes are transformed to numeric,
# categoricals are label-encoded. Maps input to output features exactly one-to-one.
pipe0 = LGBSimpleFeatures()

# Estimates feature importances from the model's own feature importances
mbie = ModelBasedImportanceEstimator()

# Selector based on an importance threshold.
# Data passed to .fit must be suitable for fitting ml_algo, or a preprocessing pipeline must be defined.
selector = ImportanceCutoffSelector(pipe0, model0, mbie, cutoff=0)
```

Now, create a 1st level ML pipeline for AutoML:

- Simple features for gradient boosting built on selected features (using the step above)

- 2 different models:

  - LightGBM with params tuning (using OptunaTuner)

  - LightGBM with heuristic params
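The tuned model is paired with OptunaTuner(n_trials=20, timeout=30). Per the code comment, it stops after 20 iterations or after 30 seconds, whichever comes first. The stopping rule can be sketched in plain Python under stated assumptions. Plain random search stands in for Optuna's sampler, and `tune`, `sample_params`, the search space and the toy objective are all hypothetical. The clock is injectable so that the time limit can be checked without waiting.

```python
import random
import time


def tune(objective, sample_params, n_trials=20, timeout=30.0, clock=time.monotonic, seed=42):
    """Random-search stand-in for a tuner: run trials until `n_trials` are done
    or `timeout` seconds have elapsed, whichever comes first.
    Returns (best_params, best_score, trials_run); higher scores are better."""
    rng = random.Random(seed)
    start = clock()
    best_params, best_score = None, float("-inf")
    trials_run = 0
    while trials_run < n_trials and clock() - start < timeout:
        params = sample_params(rng)
        score = objective(params)
        trials_run += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, trials_run


def sample_params(rng):
    # Hypothetical search space over two LightGBM-style parameters.
    return {"learning_rate": rng.uniform(0.01, 0.1), "num_leaves": rng.choice([16, 32, 64, 128])}


def objective(params):
    # Toy score peaking at learning_rate=0.05, num_leaves=64 (a real tuner would use a CV metric).
    return -abs(params["learning_rate"] - 0.05) - abs(params["num_leaves"] - 64) / 1000


best, score, n = tune(objective, sample_params)
print(n, best)
```

With a real clock, the 20 toy trials finish instantly, so the trial limit is hit first. With a slow clock, the timeout ends the search early instead.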

```python
%%time
# Create 1st level ML pipeline for AutoML

# Simple feature pipeline
pipe = LGBSimpleFeatures()

# OptunaTuner for hyperparameter optimization
params_tuner1 = OptunaTuner(n_trials=20, timeout=30)  # stop after 20 iterations or after 30 seconds

# LGBM model that will be tuned with OptunaTuner
model1 = BoostLGBM(
    default_params={'learning_rate': 0.05, 'num_leaves': 128, 'seed': 1, 'num_threads': N_THREADS}
)

# Simple LGBM model with heuristic parameters
model2 = BoostLGBM(
    default_params={'learning_rate': 0.025, 'num_leaves': 64, 'seed': 2, 'num_threads': N_THREADS}
)

# Put the tuned and the heuristic model together into the 1st level pipeline
pipeline_lvl1 = MLPipeline([
    (model1, params_tuner1),
    model2
], pre_selection=selector, features_pipeline=pipe, post_selection=None)
```

Next, create a 2nd level pipeline, as shown in the figure above:

- Simple features as well, but this time built on the out-of-fold (OOF) predictions of the 1st level algorithms.

- Only one LGBM model, without params tuning.

- No feature selection at this stage, because we want to use all OOF predictions here.
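Out-of-fold predictions are what make the second level safe to train: every row's prediction comes from a model that never saw that row. Below is a minimal plain-Python sketch. It assumes round-robin fold assignment (row i goes to fold i % n_folds) and a toy "model" that just predicts the mean target of its training rows. `out_of_fold_predictions` and the helper names are hypothetical, not LightAutoML functions.

```python
def kfold_assignment(n_rows, n_folds):
    """Assign row i to fold i % n_folds (no shuffling, to keep the example readable)."""
    return [i % n_folds for i in range(n_rows)]


def out_of_fold_predictions(y, n_folds, fit, predict):
    """Predict each row with a model fitted on the other folds only."""
    folds = kfold_assignment(len(y), n_folds)
    oof = [None] * len(y)
    for k in range(n_folds):
        model = fit([y[i] for i in range(len(y)) if folds[i] != k])
        for i in range(len(y)):
            if folds[i] == k:
                oof[i] = predict(model, i)
    return oof


def fit_mean(train_y):
    # Toy model: remember the mean target of the training rows.
    return sum(train_y) / len(train_y)


def predict_mean(model, row_index):
    # The toy model predicts the same value for every row.
    return model


y = [0, 1, 0, 1, 1, 0, 1, 0, 0, 1]
oof = out_of_fold_predictions(y, n_folds=5, fit=fit_mean, predict=predict_mean)
print(oof)  # [0.625, 0.375, 0.625, 0.5, 0.375, 0.625, 0.375, 0.625, 0.5, 0.375]
```

In a stacked setup like the one described above, each first-level model contributes one such OOF column, and the second-level model is trained on those columns.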

```python
%%time
# Create 2nd level ML pipeline for AutoML

# Another simple feature pipeline
pipe1 = LGBSimpleFeatures()

# Another LGBM model, with fixed (non-tuned) parameters
model = BoostLGBM(
    default_params={'learning_rate': 0.05, 'num_leaves': 64, 'max_bin': 1024, 'seed': 3, 'num_threads': N_THREADS},
    freeze_defaults=True
)

# Combine the model and its feature pipeline into the 2nd level pipeline
pipeline_lvl2 = MLPipeline([model], pre_selection=None, features_pipeline=pipe1, post_selection=None)
```

Finally, create an AutoML pipeline from the pipelines above. The AutoML pipeline contains:

- Reader for data preparation

- First level ML pipeline (as built in step 3.1)

- Second level ML pipeline (as built in step 3.2)

- skip_conn=False, which here means that the initial features are not passed to the second level pipeline
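What skip_conn changes can be shown with a small hypothetical helper, `second_level_inputs`, which builds the rows the second level would see. The column x1 and the OOF values are made up, and the model names reuse model1 and model2 from the first level. This illustrates the concept only; it is not how LightAutoML stores its datasets.

```python
def second_level_inputs(original_rows, oof_columns, skip_conn):
    """Build the input rows for the second level.

    oof_columns maps each first-level model name to its list of OOF predictions.
    skip_conn=False: only the OOF predictions are passed on.
    skip_conn=True: the original features are passed on alongside them.
    """
    rows = []
    for i, original in enumerate(original_rows):
        new_row = {name: preds[i] for name, preds in oof_columns.items()}
        if skip_conn:
            new_row = {**original, **new_row}
        rows.append(new_row)
    return rows


original_rows = [{"x1": 1.0}, {"x1": 2.5}]
oof_columns = {"model1": [0.12, 0.80], "model2": [0.10, 0.75]}

print(second_level_inputs(original_rows, oof_columns, skip_conn=False))
# [{'model1': 0.12, 'model2': 0.1}, {'model1': 0.8, 'model2': 0.75}]
print(second_level_inputs(original_rows, oof_columns, skip_conn=True))
# [{'x1': 1.0, 'model1': 0.12, 'model2': 0.1}, {'x1': 2.5, 'model1': 0.8, 'model2': 0.75}]
```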

```python
%%time
automl = AutoML(reader, [
    [pipeline_lvl1],
    [pipeline_lvl2],
], skip_conn=False)
```

Now, fit the model on train data with the target column “TARGET” and get OOF predictions.

Note: training might take 2-3 minutes.

```python
%%time
# Train AutoML on the loaded data:
# fit the model on train data with "TARGET" as the target column and get OOF predictions
oof_pred = automl.fit_predict(train_data, roles={'target': TARGET_NAME})
logging.info('oof_pred:\n{}\nShape = {}'.format(oof_pred, oof_pred.shape))
```

After this, we analyze the feature selection performance of the AutoML model. The code snippet for this is available here. Next, we predict on the test data and calculate the scores.

# Predict to test data and check scores

#

%%time

test_pred = automl.predict(test_data)

logging.info('Prediction for test data:\n{}\nShape = {}'

.format(test_pred, test_pred.shape))

logging.info('Check scores...')

logging.info('OOF score: {}'.format(roc_auc_score(train_data[TARGET_NAME].values, oof_pred.data[:, 0])))

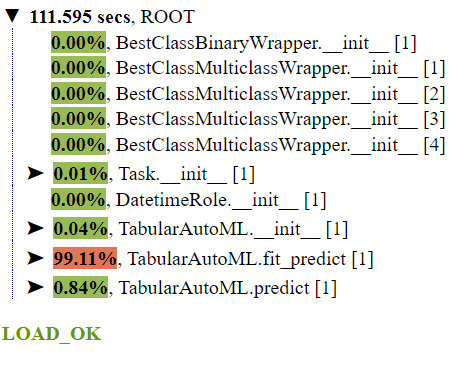

logging.info('TEST score: {}'.format(roc_auc_score(test_data[TARGET_NAME].values, test_pred.data[:, 0])))Since, we have turned on the profile decorator in Step1, we will now build a report called profiling AutoML. The arrows shown in the report are interactive so you can go as deep as you want.

You can check the full demo here.

Demo – AutoML pipeline Preset

This demo shows how to use LightAutoML presets (both standalone and time-utilized variants) for solving ML tasks on tabular data. With presets you can solve binary classification, multiclass classification and regression tasks. Let’s dig into the code!

- This step is the same as Step 1 of the “Create your own pipeline” demo. The code snippet is available here.

- We are going to implement the following pipeline in this step.

This step consists of creating a task, setting up common rules, creating an AutoML instance, fitting the model on the data, predicting on test data and then checking scores.

Create the binary classification task.

```python
%%time
# Create a task for binary classification
task = Task('binary')
```

Set up common rules as explained below.
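Some context on the DatetimeRole used in the rules: LightAutoML's documentation describes a base date as the date used to calculate differences with other dates (for example, age = report_dt - birth_date). The sketch below illustrates that idea in plain Python. It is hypothetical and is not LAMA's implementation. `days_between`, `datediff_features` and the `_diff` column suffix are our own names, and the row is made up.

```python
from datetime import date


def days_between(base, other):
    """Days from `other` to `base` (positive when `other` is earlier)."""
    return (base - other).days


def datediff_features(row, base_col, date_cols):
    """Turn each date column into its day difference from the base date column."""
    base = date.fromisoformat(row[base_col])
    return {f"{col}_diff": days_between(base, date.fromisoformat(row[col])) for col in date_cols}


# Made-up row shaped like the columns engineered earlier in this article.
row = {"report_dt": "2018-01-01", "BIRTH_DATE": "1990-01-01", "EMP_DATE": "2017-01-01"}
print(datediff_features(row, "report_dt", ["BIRTH_DATE", "EMP_DATE"]))
# {'BIRTH_DATE_diff': 10227, 'EMP_DATE_diff': 365}
```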

```python
%%time
# Set up common rules: mark up variables to determine their place in the pipeline.
# AutoML can do this automatically for all variables except the target variable.
roles = {
    'target': TARGET_NAME,
    DatetimeRole(base_date=True, seasonality=(), base_feats=False): 'report_dt',
}
```

Create a TabularAutoML instance, then fit it and predict on the training data with the fit_predict method.

```python
%%time
# Create an AutoML instance; the only required parameter is the task
TIMEOUT = 300  # time in seconds for the AutoML run
automl = TabularAutoML(task=task,
                       timeout=TIMEOUT,
                       general_params={'nested_cv': False, 'use_algos': [['linear_l2', 'lgb', 'lgb_tuned']]},
                       reader_params={'cv': N_FOLDS, 'random_state': RANDOM_STATE},
                       tuning_params={'max_tuning_iter': 20, 'max_tuning_time': 30},
                       lgb_params={'default_params': {'num_threads': N_THREADS}})
oof_pred = automl.fit_predict(train_data, roles=roles)
logging.info('oof_pred:\n{}\nShape = {}'.format(oof_pred, oof_pred.shape))
```

The next step is to predict on the test data and check the scores. The code is the same as above and is available here. After this, we create an AutoML profiling report; the code snippet is available here. Download the .html file and check the report. A snapshot of the report is shown below.

Now we repeat the steps above, but this time we create an AutoML instance with time utilization, then fit it and predict on the training data. The steps are the same as without time utilization. Finally, predict on the test data and check the scores.
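The idea behind time utilization can be sketched as "keep launching runs while the remaining budget looks big enough for another one, then combine them". This is a simplified, hypothetical illustration. `utilize_time_budget` is our own helper, each run differs only by its seed, and runs are combined by plain averaging. Estimating the next run's duration from the slowest run so far is also our assumption. TabularUtilizedAutoML's actual strategy may differ. A fake clock makes the example deterministic.

```python
import time


def utilize_time_budget(run_once, timeout, clock=time.monotonic, max_runs=100):
    """Call run_once(seed) repeatedly while another run is expected to fit
    into the remaining time, then average the predictions of all runs."""
    start = clock()
    results, slowest = [], 0.0
    for seed in range(max_runs):
        elapsed = clock() - start
        if results and elapsed + slowest > timeout:
            break  # the next run would probably overshoot the budget
        run_start = clock()
        results.append(run_once(seed))
        slowest = max(slowest, clock() - run_start)
    averaged = [sum(values) / len(results) for values in zip(*results)]
    return averaged, len(results)


class FakeClock:
    """Deterministic clock for the example; `now` is advanced manually."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


clock = FakeClock()


def fake_run(seed):
    clock.now += 100.0  # pretend every run takes 100 seconds
    return [float(seed), 2.0 * seed]  # made-up predictions for two rows


preds, n_runs = utilize_time_budget(fake_run, timeout=300, clock=clock)
print(n_runs, preds)  # 3 [1.0, 2.0]
```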

# Create AutoML with time utilization

# Below we are going to create specific AutoML preset for TIMEOUT utilization (try to spend it as much as possible):

%%time

automl = TabularUtilizedAutoML(task = task,

timeout = TIMEOUT,

general_params = {'nested_cv': False, 'use_algos': [['linear_l2', 'lgb', 'lgb_tuned']]},

reader_params = {'cv': N_FOLDS, 'random_state': RANDOM_STATE},

tuning_params = {'max_tuning_iter': 20, 'max_tuning_time': 30},

lgb_params = {'default_params': {'num_threads': N_THREADS}})

oof_pred = automl.fit_predict(train_data, roles = roles)

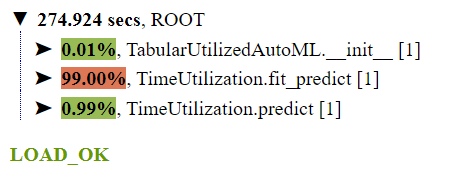

logging.info('oof_pred:\n{}\nShape = {}'.format(oof_pred, oof_pred.shape))The generated profile report is shown below.

You can check the full demo here.

Demo – Multiclass Classification

This demo shows the AutoML pipeline for multiclass classification. The steps are as follows:

- This step is the same as Step 1 of the “Create your own pipeline” demo, except that we add fake multiclass labels before splitting the data into training and testing sets.

```python
# Create a fake multiclass target: roughly half of the rows get the label 2
data[TARGET_NAME] = np.where(np.random.rand(data.shape[0]) > .5, 2, data[TARGET_NAME].values)
data[TARGET_NAME].value_counts()
```

- In this step we discuss and implement the whole AutoML multiclass classification pipeline shown below. It is the same as in the AutoML pipeline preset demo.

First, create a timer so that the model doesn’t exceed the time limit.
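PipelineTimer(600, mode=2) gives the whole pipeline a 600-second budget, and get_task_timer hands each model its own timer drawn from it. We do not reproduce PipelineTimer's internals, including what mode=2 selects. The sketch below is a hypothetical BudgetTimer showing one simple strict policy: each task may use an equal share of whatever time is left when it starts. An overrunning task therefore shrinks later budgets instead of breaking the overall limit.

```python
import time


class BudgetTimer:
    """Hypothetical strict budget shared by a fixed number of tasks."""

    def __init__(self, total_seconds, n_tasks, clock=time.monotonic):
        self.total = total_seconds
        self.tasks_left = n_tasks
        self.clock = clock
        self.start = clock()

    def remaining(self):
        """Seconds left in the overall budget (never negative)."""
        return max(0.0, self.total - (self.clock() - self.start))

    def next_task_budget(self):
        """Give the next task an equal share of the remaining time."""
        if self.tasks_left == 0:
            return 0.0
        share = self.remaining() / self.tasks_left
        self.tasks_left -= 1
        return share


now = [0.0]  # fake clock value, advanced by hand below
budget = BudgetTimer(600, n_tasks=3, clock=lambda: now[0])
print(budget.next_task_budget())  # 200.0
now[0] = 300.0  # the first task overran its 200 s share by 100 s
print(budget.next_task_budget())  # 150.0
now[0] = 500.0
print(budget.next_task_budget())  # 100.0
```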

```python
%%time
# Create a timer for the pipeline.
# Here we use a strict timer for the AutoML pipeline, which helps not to go outside the limit:
timer = PipelineTimer(600, mode=2)
```

Next, create a feature selector.

```python
%%time
# Create feature selector
timer_gbm = timer.get_task_timer('gbm')  # get a task timer from the pipeline timer

# Simple feature pipeline
feat_sel_0 = LGBSimpleFeatures()
# Model instance with its own timer
mod_sel_0 = BoostLGBM(timer=timer_gbm)
# Feature importance estimator
imp_sel_0 = ModelBasedImportanceEstimator()
# Select important features based on the threshold
selector_0 = ImportanceCutoffSelector(feat_sel_0, mod_sel_0, imp_sel_0, cutoff=0)
```

After this, we create the LGBM pipelines. They are the same as above, with one small change: each model gets a timer.
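LGBAdvancedPipeline can create categorical intersections, that is, new categorical columns that combine the values of two existing ones. Our reading is that top_intersections limits how many of the most important categorical columns (ranked with feats_imp) take part. Treat that, and everything else here, as an assumption. The sketch is a hypothetical plain-Python illustration in which `add_intersections`, the cat_a/cat_b/cat_c columns and the importances are all made up.

```python
from itertools import combinations


def add_intersections(rows, importances, top_k=4):
    """Add one new column per pair of the `top_k` most important categorical
    columns; each new value joins the two original values with '__'."""
    top = sorted(importances, key=importances.get, reverse=True)[:top_k]
    pairs = list(combinations(top, 2))
    result = []
    for row in rows:
        new_row = dict(row)
        for a, b in pairs:
            new_row[f"{a}__{b}"] = f"{row[a]}__{row[b]}"
        result.append(new_row)
    return result


rows = [{"cat_a": "x", "cat_b": "y", "cat_c": "z"}]
importances = {"cat_a": 3.0, "cat_b": 2.0, "cat_c": 1.0}  # made-up importances
print(add_intersections(rows, importances, top_k=2))
# [{'cat_a': 'x', 'cat_b': 'y', 'cat_c': 'z', 'cat_a__cat_b': 'x__y'}]
```

A tree model can then split on a combined value such as x__y directly, instead of needing two consecutive splits.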

```python
%%time
# LGBAdvancedPipeline creates an advanced feature pipeline for tree-based models.
# It includes:
#   - different handling of categories and numbers according to role params;
#   - date handling: extracting seasons and creating date differences;
#   - creating categorical intersections.
feats_gbm_0 = LGBAdvancedPipeline(top_intersections=4,
                                  output_categories=True,
                                  feats_imp=imp_sel_0)

timer_gbm_0 = timer.get_task_timer('gbm')
timer_gbm_1 = timer.get_task_timer('gbm')

# Two LGBM models: one tuned with OptunaTuner, one with default parameters
gbm_0 = BoostLGBM(timer=timer_gbm_0)
gbm_1 = BoostLGBM(timer=timer_gbm_1)
tuner_0 = OptunaTuner(n_trials=20, timeout=30, fit_on_holdout=True)

gbm_lvl0 = MLPipeline([
    (gbm_0, tuner_0),
    gbm_1
],
    pre_selection=selector_0,
    features_pipeline=feats_gbm_0,
    post_selection=None
)
```

Create a linear pipeline for AutoML. This is also the same as above.

```python
# LinearFeatures creates a feature pipeline for linear models and neural nets.
# https://lightautoml.readthedocs.io/en/latest/pythonapi/lightautoml.pipelines.features.linear_pipeline.html?highlight=LinearFeatures#lightautoml.pipelines.features.linear_pipeline.LinearFeatures
feats_reg_0 = LinearFeatures(output_categories=True,
                             sparse_ohe='auto')

timer_reg = timer.get_task_timer('reg')
# LBFGS L2 regression based on torch
reg_0 = LinearLBFGS(timer=timer_reg)

# Combine the linear model and its feature pipeline into one pipeline
reg_lvl0 = MLPipeline([
    reg_0
],
    pre_selection=None,
    features_pipeline=feats_reg_0,
    post_selection=None
)
```

Now create a multiclass classification task and a reader that converts the pandas DataFrame to AutoML’s dataset.

```python
%%time
# Create multiclass task and reader
task = Task('multiclass', metric='crossentropy')
reader = PandasToPandasReader(task=task, samples=None, max_nan_rate=1, max_constant_rate=1,
                              advanced_roles=True, drop_score_co=-1, n_jobs=4)
```

Combine the predictions of the two pipelines above (LGBM and linear) with a weighted blender.
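A weighted blender looks for weights for the first-level models and combines their predictions into one vector per row. The sketch below shows the idea for two models in plain Python, under stated assumptions. The weight is picked by a simple grid search that minimizes multiclass crossentropy, the metric of the task above. WeightedBlender's own optimization procedure is likely different. `blend`, `crossentropy`, `best_weight` and the probabilities are hypothetical.

```python
import math


def blend(preds_a, preds_b, weight_a):
    """Row-wise weighted average of two models' class-probability vectors."""
    return [[weight_a * pa + (1 - weight_a) * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(preds_a, preds_b)]


def crossentropy(y_true, probs, eps=1e-15):
    """Mean negative log-probability assigned to the true class."""
    return -sum(math.log(max(p[y], eps)) for y, p in zip(y_true, probs)) / len(y_true)


def best_weight(preds_a, preds_b, y_true, steps=10):
    """Try weight_a in {0, 1/steps, ..., 1} and keep the one with the lowest crossentropy."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda w: crossentropy(y_true, blend(preds_a, preds_b, w)))


y_true = [0, 1, 2]
gbm_preds = [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]]  # made-up, fairly confident
reg_preds = [[1 / 3, 1 / 3, 1 / 3]] * 3  # made-up, uninformative
w = best_weight(gbm_preds, reg_preds, y_true)
print(w)  # 1.0
```

With these toy numbers, the uninformative model ends up with weight 0, so the blend reduces to the better model.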

```python
%%time
# Create blender for 2nd level.
# To combine predictions from different models into one vector we use WeightedBlender:
blender = WeightedBlender()
```

Create an AutoML instance, pass the pipelines above to it and train the model.

```python
%%time
# Create AutoML pipeline
automl = AutoML(reader=reader, levels=[
    [gbm_lvl0, reg_lvl0]
], timer=timer, blender=blender, skip_conn=False)

# Train the model and get OOF predictions
oof_pred = automl.fit_predict(train_data, roles={'target': TARGET_NAME})
```

Lastly, predict on the test data and check the scores. Calculate the AUC score for each class and build a report with the Profiler, as shown above.
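Calculating an AUC for each class of a multiclass task usually means one-vs-rest. Class k is treated as positive and all other classes as negative, and the binary ROC AUC is computed on the predicted probability of class k. The plain-Python sketch below uses the pairwise definition of AUC: the share of positive/negative pairs ranked correctly, with ties counted as half. `binary_auc`, `per_class_auc` and the probabilities are hypothetical. With scikit-learn, calling roc_auc_score on each class's binarized labels and probability column should give the same numbers.

```python
def binary_auc(labels, scores):
    """ROC AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for s, label in zip(scores, labels) if label == 1]
    neg = [s for s, label in zip(scores, labels) if label == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def per_class_auc(y_true, probs, n_classes):
    """One-vs-rest AUC for each class k, using the predicted probability of k."""
    return [binary_auc([1 if y == k else 0 for y in y_true], [p[k] for p in probs])
            for k in range(n_classes)]


# Made-up labels and predicted class probabilities for four rows.
y_true = [0, 1, 2, 0]
probs = [[0.7, 0.2, 0.1],
         [0.2, 0.6, 0.2],
         [0.1, 0.3, 0.6],
         [0.3, 0.65, 0.05]]
print(per_class_auc(y_true, probs, n_classes=3))  # [1.0, 0.6666666666666666, 1.0]
```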

You can check the full demo here.

Conclusion

In this article, we have discussed LightAutoML (LAMA) and its demos for creating a custom pipeline, using the AutoML presets and multiclass classification.

LAMA is an open-source framework for developers, data scientists and data analysts. It works with datasets in which each row is an instance of data with its features and target variable. Support for multi-table datasets and sequences is under development. In addition, interpretable models can be created automatically with the separate AutoWoE library.

Resources and Tutorials used above: