CompilerGym is a Python toolkit from Facebook that provides reinforcement learning environments for compiler optimization problems. The motivation behind the framework is that the decisions a compiler makes are critical for performance and must be tuned to the software being compiled. Applying AI to compiler optimization is a growing field. However, compilers are complex and dynamic, so it is not easy to experiment with them. CompilerGym's key idea is to let AI researchers experiment with compiler optimization methods without getting into the details of the compiler, and to help compiler developers explore new AI optimization problems.

Vision of CompilerGym

The vision is to make program optimization easy, without requiring users to write a single line of C/C++. The goals of CompilerGym are:

- Create an “ImageNet for compilers”: an open-source OpenAI Gym environment for experimenting with compiler optimizations using real-world software and datasets.

- Provide a common platform for comparing different compiler optimization techniques.

- Give users control over all the decisions that the compiler makes (see the Roadmap for details).

- Make CompilerGym ready for easy deployment, so that it can make production compilation more efficient.

Key Concepts in CompilerGym

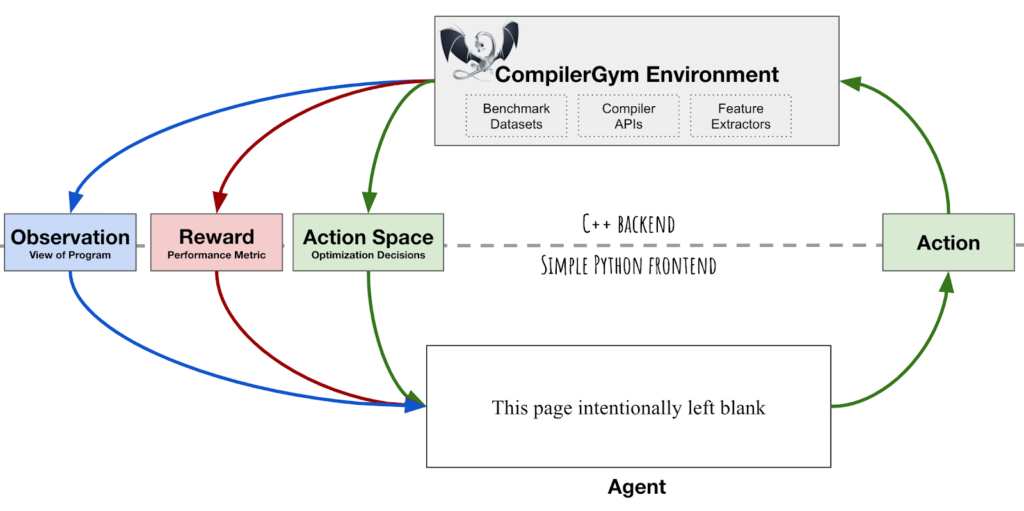

CompilerGym frames compiler optimization problems as reinforcement learning environments. It uses the OpenAI Gym interface for the agent-environment loop shown in the figure above, where:

- Environment: defines a compiler optimization task. The environment contains an instance of the compiler and the program being compiled. Each time the agent interacts with the environment, the state of the compiler can change.

- Action Space: defines the set of actions that can be taken on the current environment state.

- Observation: provides a view of the current environment state.

- Reward: a metric that indicates how good the previous action was.

A single run of the agent-environment loop (an episode) represents the compilation of one particular program. The goal is to develop an agent that maximizes the cumulative reward it receives from these environments.
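To make the loop concrete, here is a minimal sketch of an agent-environment loop in plain Python. `ToyCompilerEnv`, its pass names, and their effect on code size are hypothetical stand-ins, not CompilerGym's API.

- The observation is the program's instruction count.
- Each action picks a pass.
- The reward is the number of instructions that pass removed.
- A random agent plays one episode.

```python
import random
from typing import Tuple


class ToyCompilerEnv:
    """Hypothetical stand-in for a compiler environment (not the CompilerGym API)."""

    # Hypothetical passes and the fraction of instructions each one removes
    # (a negative fraction means the pass grows the code, e.g. inlining).
    PASSES = [("dce", 0.10), ("inline", -0.05), ("instcombine", 0.08), ("noop", 0.0)]

    def __init__(self, initial_count: int = 1000, max_steps: int = 10):
        self.initial_count = initial_count
        self.max_steps = max_steps

    def reset(self) -> int:
        """Start a new episode on a fresh (unoptimized) program."""
        self.count = self.initial_count
        self.steps = 0
        return self.count  # observation: current instruction count

    def step(self, action: int) -> Tuple[int, float, bool, dict]:
        """Apply one pass; return (observation, reward, done, info) like Gym."""
        name, fraction = self.PASSES[action]
        new_count = max(1, round(self.count * (1 - fraction)))
        reward = float(self.count - new_count)  # instructions removed by this action
        self.count = new_count
        self.steps += 1
        done = self.steps >= self.max_steps
        return self.count, reward, done, {"pass": name}


def run_episode(env: ToyCompilerEnv, seed: int = 0) -> float:
    """Run one episode with a random agent and return the cumulative reward."""
    rng = random.Random(seed)
    env.reset()
    total, done = 0.0, False
    while not done:
        action = rng.randrange(len(env.PASSES))  # the "agent" picks a random action
        observation, reward, done, info = env.step(action)
        total += reward
    return total
```

Because each reward is the change in instruction count, the rewards telescope. The episode's total equals the initial count minus the final count, so maximizing cumulative reward means minimizing final code size in this toy setting.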

Installation

CompilerGym can be installed from PyPI. In a notebook such as Colab, the leading ! runs the command in a shell:

!pip install compiler_gym

For installation from other sources, check the official tutorial.

Structure of CompilerGym Code

import gym

import compiler_gym # imports the CompilerGym environments

env = gym.make("llvm-autophase-ic-v0") # starts a new environment

env.require_dataset("npb-v0") # downloads a set of programs

env.reset() # starts a new compilation session with a random program

env.render() # prints the IR of the program

env.step(env.action_space.sample()) # applies a random optimization, updates state/reward/actions

env.close() # ends the compilation session

Demo – CompilerGym Basics

- Import the compiler_gym library. Importing compiler_gym automatically registers the compiler environments with Gym.

import gym

import compiler_gym

Its version can be checked with:

compiler_gym.__version__

We can list all the environments available in CompilerGym with:

compiler_gym.COMPILER_GYM_ENVS

- Select an environment.

CompilerGym environments are named in one of the following formats:

- <compiler>-<observation>-<reward>-<version>

- <compiler>-<reward>-<version>

- <compiler>-<version>

where,

- <compiler> is the compiler optimization task

- <observation> is the default observation provided

- <reward> is the reward signal

- <version> is the environment version
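The fields are separated by hyphens, and the three formats have different numbers of fields. That means a name can be split back into its parts. The helper below is a hypothetical sketch, not part of CompilerGym, and it assumes that no field itself contains a hyphen.

```python
from typing import Dict, Optional


def parse_env_name(name: str) -> Dict[str, Optional[str]]:
    """Split an environment name into compiler/observation/reward/version.

    Hypothetical helper: the format is chosen by the number of '-'-separated fields.
    """
    parts = name.split("-")
    observation: Optional[str] = None
    reward: Optional[str] = None
    if len(parts) == 4:    # <compiler>-<observation>-<reward>-<version>
        compiler, observation, reward, version = parts
    elif len(parts) == 3:  # <compiler>-<reward>-<version>
        compiler, reward, version = parts
    elif len(parts) == 2:  # <compiler>-<version>
        compiler, version = parts
    else:
        raise ValueError(f"unrecognised environment name: {name!r}")
    return {"compiler": compiler, "observation": observation,
            "reward": reward, "version": version}


print(parse_env_name("llvm-autophase-ic-v0"))
```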

See compiler_gym.views for more details. In this example, we will use the following environment:

- Compiler: LLVM

- Observation Type: Autophase

- Reward Signal: IR Instruction count relative to -Oz

You can create an instance of this environment by using the code below:

env = gym.make("llvm-autophase-ic-v0")

- Installing Benchmarks

In CompilerGym, the input programs are known as benchmarks, and collections of benchmarks are grouped into datasets. You can use a predefined benchmark or create your own.

The benchmarks available to the current environment (if any) can be queried with env.benchmarks:

env.benchmarks

This returns an empty list if no benchmarks are available. You can also install a predefined dataset of programs. In this example, we use the NAS Parallel Benchmarks dataset:

env.require_dataset("npb-v0")

env.benchmarks
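Benchmarks are identified by URIs that name both the dataset and the program, such as benchmark://npb-v0/50 in the example below. Here is a small sketch that splits such a URI into its dataset and program parts. It assumes the form benchmark://<dataset>/<program>, and split_benchmark_uri is a hypothetical helper, not a CompilerGym function.

```python
from typing import Tuple
from urllib.parse import urlparse


def split_benchmark_uri(uri: str) -> Tuple[str, str]:
    """Split a URI like 'benchmark://npb-v0/50' into (dataset, program).

    Hypothetical helper assuming the form benchmark://<dataset>/<program>.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "benchmark" or not parsed.netloc:
        raise ValueError(f"not a benchmark URI: {uri!r}")
    # The dataset name sits where a host name would; the program is the rest of the path.
    return parsed.netloc, parsed.path.lstrip("/")


print(split_benchmark_uri("benchmark://npb-v0/50"))
```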

- The compiler environment

- The CompilerGym environment is very similar to an OpenAI Gym environment. You can view the documentation of any method with the help() function. For example:

help(env.reset)

- Action Space: the action space is described by env.action_space.

- Observation Space: The observation space is described by env.observation_space.

- The upper and lower bounds of the reward signal are described by env.reward_range.

- Before interacting with a CompilerGym environment, call env.reset() to reset the environment state.

- Interaction with the environment: this works the same way as with an OpenAI Gym environment.

To print the Intermediate Representation (IR) of the program in its current state, use:

env.render()

env.step() applies an action. It returns four values: a new observation, a reward, a boolean indicating whether the episode has ended, and a dictionary of additional information.

observation, reward, done, info = env.step(0)

The example below applies random optimizations. Each reward is the reduction in code size (IR instruction count) achieved by that action, scaled relative to the reduction achieved by -Oz. A cumulative reward greater than one means that the sequence of optimizations performed yields better results than LLVM’s default -Oz optimizations. Let’s run 100 random actions and see how close we can get:

env.reset(benchmark="benchmark://npb-v0/50")

episode_reward = 0

for i in range(1, 101):
    observation, reward, done, info = env.step(env.action_space.sample())
    if done:
        break
    episode_reward += reward
    print(f"Step {i}, quality={episode_reward:.2%}")
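Why does a cumulative reward above one mean beating -Oz? Assume each step's reward is the number of instructions it removed, divided by the total reduction -Oz achieves on the same program. The per-step terms then telescope into (initial count - final count) / (initial count - -Oz count).

Below is a simplified sketch under that assumption. The instruction counts are made up for illustration, and the sketch rejects the degenerate case where -Oz removes nothing.

```python
from typing import List


def oz_normalized_rewards(counts: List[int], oz_count: int) -> List[float]:
    """Per-step rewards: instructions removed by each step / reduction achieved by -Oz.

    counts[0] is the unoptimized instruction count; counts[i] is the count after step i.
    """
    scale = counts[0] - oz_count  # total reduction achieved by -Oz
    if scale <= 0:
        raise ValueError("-Oz must reduce the instruction count for this normalization")
    return [(before - after) / scale for before, after in zip(counts, counts[1:])]


# Hypothetical counts: the unoptimized program has 1000 instructions, -Oz reaches 600.
counts = [1000, 900, 850, 950, 560]
rewards = oz_normalized_rewards(counts, oz_count=600)
# The sum telescopes to (1000 - 560) / (1000 - 600) = 1.1, up to float rounding.
print(sum(rewards))
```

Note the third step increases the code size, so its reward is negative. The episode still ends ahead of -Oz because the final count, 560, is below 600.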

The sequence of optimizations applied so far can be reproduced outside Python. env.commandline() returns an equivalent command line for LLVM's opt tool:

env.commandline()
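Conceptually, the recorded actions become pass flags for opt, applied in order. The sketch below is a hypothetical illustration of that idea, not CompilerGym's implementation:

- The pass flags use LLVM's legacy pass-manager syntax. Newer LLVM releases use -passes=... instead.
- The file names are placeholders.
- opt_command_line is an illustrative helper.

```python
import shlex
from typing import List


def opt_command_line(passes: List[str], input_path: str, output_path: str) -> str:
    """Build a shell command that runs LLVM `opt` with the given pass flags in order.

    Hypothetical helper; arguments are shell-quoted so paths with spaces are safe.
    """
    args = ["opt", *passes, input_path, "-o", output_path]
    return " ".join(shlex.quote(arg) for arg in args)


print(opt_command_line(["-mem2reg", "-instcombine"], "input.bc", "output.bc"))
```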

You can also save the program for later use:

env.write_bitcode("/tmp/program.bc")

!file /tmp/program.bc
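The file command recognizes LLVM bitcode from the first bytes of the file. Here is a minimal sketch of the same check in Python. It assumes a raw bitcode file, which starts with the magic bytes 'B', 'C', 0xC0, 0xDE. Bitcode inside the optional wrapper header starts with a different magic number and is not handled here. is_llvm_bitcode is a hypothetical helper.

```python
# Raw LLVM bitcode files begin with these four bytes: 'B', 'C', 0xC0, 0xDE.
BITCODE_MAGIC = b"BC\xc0\xde"


def is_llvm_bitcode(path: str) -> bool:
    """Return True if the file at `path` starts with the raw LLVM bitcode magic number."""
    with open(path, "rb") as f:
        return f.read(len(BITCODE_MAGIC)) == BITCODE_MAGIC
```

For example, after env.write_bitcode("/tmp/program.bc"), calling is_llvm_bitcode("/tmp/program.bc") should return True.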

Finally, always close the environment to shut down its compiler instance:

env.close()
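To make sure env.close() runs even if an episode raises an exception, you can wrap the environment in the standard library's contextlib.closing. It only requires the object to have a close() method. With CompilerGym this would look like `with contextlib.closing(gym.make("llvm-autophase-ic-v0")) as env:`. The runnable sketch below uses a hypothetical DummyEnv in place of a real environment.

```python
import contextlib


class DummyEnv:
    """Hypothetical stand-in for an environment that owns a compiler process."""

    def __init__(self):
        self.closed = False

    def step(self, action):
        raise RuntimeError("something went wrong mid-episode")

    def close(self):
        self.closed = True  # a real environment would shut down its compiler here


env = DummyEnv()
try:
    with contextlib.closing(env):
        env.step(0)
except RuntimeError:
    pass
print(env.closed)  # True: close() ran even though step() raised
```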

Conclusion

In this article, we have covered CompilerGym, a reinforcement learning toolkit for compiler optimization. The basic code structure and usage are shown in the sections above. A Colab notebook for the demo is available at:

The official code and documentation are available at: