
‘What Intel giveth, Microsoft taketh away’ is more than a clever quip: it reflects how growing software complexity cancels out the rapid pace of hardware improvement.

The spotlight may be on Moore’s Law, but Wirth’s Law offers a contrasting view of how technology evolves. The law states that while advanced chips offer extra power and memory, the software written for them by companies like Microsoft keeps growing more complex as it is made to do more. In the process, the software uses up the newly available memory. This is why software applications have not become significantly faster over time, and in some cases have even become slower.

Niklaus Wirth believes that one important contributor to growing software complexity is users’ inability to distinguish between necessary and unnecessary functions in an application, which leads to overly complex designs.

For instance, Windows 11, the successor to Windows 10, offered little to no performance gain in real-world use. Beyond the hoopla around its new look and feel, the upgrade mainly adds support for more advanced hardware, and it demands more of the hardware than its predecessor did. It is as if the software world is playing catch-up with each new hardware release.

Liam Proven, writing for The Register, says that there is a symbiotic relationship between hardware and software. “As a general rule, newer versions of established software products tend to be bigger, which makes them slower and more demanding of hardware resources. That means users will want, or better still need, newer, better-specified hardware to run the software they favour,” he writes.

Integration difficulties

However, Sravan Kundojjala, principal industry analyst at Strategy Analytics, told AIM, “The hardware and software symbiosis is easier said than done. For example, the AI chip landscape has quite a few start-ups but most of them lack software support to take advantage of the platform features.” A good software stack is critical to the effectiveness and success of an AI chip, because AI compute is fundamentally different from conventional compute. Dave Lacey of AI chip company Graphcore gives three reasons why this is the case:

(i) Modern AI and ML technology deals with uncertain information, represented by probability distributions in the model. This requires both detailed precision of fractional numbers and a wide dynamic range of possibilities. From a software perspective, this necessitates the use of various floating-point number techniques and algorithms that manipulate them in a probabilistic way.

(ii) The high-dimensional data, such as images, sentences, video, or abstract concepts, is probabilistic and irregular, making traditional techniques such as buffering, caching and vectorization ineffective.

(iii) Additionally, machine intelligence compute deals with both large amounts of training data and a large number of computing operations per item of data processed, making it a significant processing challenge.
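The trade-off between precision and dynamic range in point (i) can be illustrated with a minimal sketch. It uses only Python's standard `struct` module to round-trip values through 16-bit and 32-bit IEEE 754 formats; the helper name `round_trip` and the sample values are illustrative assumptions, not anything taken from Graphcore's software.

```python
import struct


def round_trip(value, fmt):
    """Store a Python float in the given struct format and read it back.

    fmt is "e" for 16-bit (half) or "f" for 32-bit (single) precision.
    Values too large for the format are reported as infinity here.
    """
    try:
        return struct.unpack(fmt, struct.pack(fmt, value))[0]
    except OverflowError:
        # struct refuses to pack a value beyond the format's range
        return float("inf")


if __name__ == "__main__":
    # Hypothetical sample values: a fraction, a tiny number, a large number
    for value in (0.1, 1e-8, 70000.0):
        half = round_trip(value, "e")
        single = round_trip(value, "f")
        print(f"{value!r}: half={half!r}, single={single!r}")
```

In this sketch the 16-bit format loses the tiny value entirely and cannot hold the large one, while the 32-bit format keeps both at the cost of twice the storage. That tension is one reason AI software stacks have to choose number formats carefully.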

Thus, designing AI hardware and software algorithms together is essential to improve performance and efficiency. Chip companies provide software development kits (SDKs) to developers, allowing them to access and utilise the platform’s features via application programming interfaces (APIs). Qualcomm, for example, offers an SDK that enables original equipment manufacturers (OEMs) to utilise the AI capabilities of its chips. Companies that utilise these SDKs tend to have an advantage in terms of power efficiency and features.

Similarly, Graphcore’s IPU-Machine M2000, which utilises off-chip DDR memory, has no hardware cache or any other mechanism to automatically manage the transfer or buffering of data between the external streaming memory and the on-chip in-processor memory. All of this relies on software control, using the computation graph as a guide.
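To make the idea of software-controlled data movement concrete, here is a toy sketch of planning transfers ahead of time from a known sequence of operations, rather than relying on a hardware cache. It is not Graphcore's actual software or API: the function names `plan_transfers` and `next_use`, the tensor names, and the "capacity counted in tensors" model are all assumptions made for illustration.

```python
def next_use(ops, step, tensor):
    """Index of the next op after `step` that reads `tensor`, or len(ops) if none."""
    for later in range(step + 1, len(ops)):
        if tensor in ops[later][1]:
            return later
    return len(ops)


def plan_transfers(ops, capacity):
    """Plan loads and evictions for ops given as (name, [input tensors]).

    capacity is how many tensors fit on chip (a toy unit, assumed here).
    Because the whole graph is known up front, the planner can evict the
    resident tensor whose next use lies furthest in the future.
    """
    if any(len(set(inputs)) > capacity for _, inputs in ops):
        raise ValueError("an op needs more tensors than fit on chip")
    on_chip = []
    plan = []
    for step, (name, inputs) in enumerate(ops):
        for tensor in inputs:
            if tensor in on_chip:
                continue  # already resident, no transfer needed
            if len(on_chip) >= capacity:
                # Only tensors the current op does not read may be evicted
                candidates = [t for t in on_chip if t not in inputs]
                victim = max(candidates, key=lambda t: next_use(ops, step, t))
                on_chip.remove(victim)
                plan.append(("evict", victim))
            on_chip.append(tensor)
            plan.append(("load", tensor))
        plan.append(("run", name))
    return plan


# Hypothetical four-op graph with room for three tensors on chip
example_ops = [
    ("a", ["x", "w1"]),
    ("b", ["x", "w2"]),
    ("c", ["w1", "w3"]),
    ("d", ["x", "w3"]),
]
example_plan = plan_transfers(example_ops, capacity=3)
```

In this example the planner evicts `w2` rather than `x` before op `c`, because it can see that `x` is read again by op `d`. A hardware cache has no such view of the future, which is the kind of advantage a graph-guided software approach aims for.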

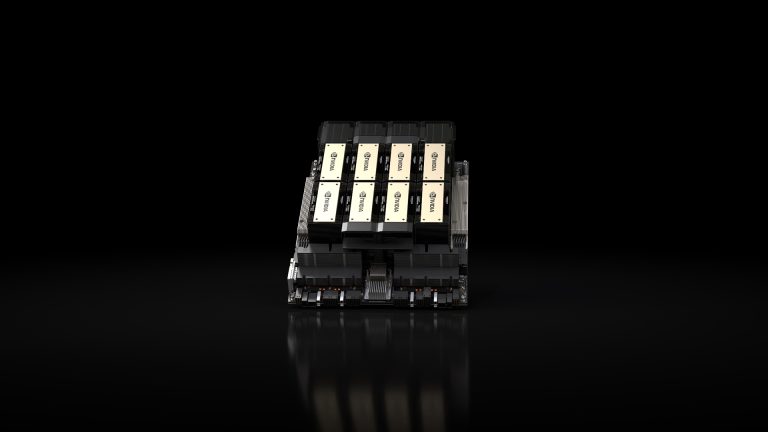

However, as indicated above, this is not easy. Kundojjala said, “Even companies such as AMD and Intel are finding it hard to compete with NVIDIA in AI due to a lack of significant software developer support for their AI chips.” NVIDIA’s dominance through CUDA is well known: it leads the AI chip market with the best GPUs, and CUDA’s proprietary APIs work only on its own hardware.

GPT-3 and Stable Diffusion are both optimised for NVIDIA’s CUDA platform, which makes its dominance difficult to break. As Snir David points out, large businesses may incur additional costs by using non-mainstream solutions. These can include resolving issues related to data delivery, managing code inconsistencies that arise without CUDA-enabled NVIDIA cards, and often settling for inferior hardware.

heading into a world where Google, OpenAI, Meta, and Anthropic all have chatGPT-level models available by API, Nvidia seems like the real winner

— Daniel Fein (@DanielFein7) January 13, 2023

RISC-V to the rescue

However, Kundojjala also mentions that “maintaining software compatibility on a hardware platform often comes at a cost”. While software growth drives purchases of new hardware, mature software becomes a burden for hardware companies because they have to keep supporting legacy features. New architectures like RISC-V, however, offer companies a fresh template that avoids the burden of legacy software support.

As an open-source alternative to Arm and x86, RISC-V is already backed by companies like Google, Apple, Amazon, Intel, Qualcomm, Samsung, and NVIDIA. RISC-V is often likened to Linux in the sense that it is a collaborative effort among engineers to design, establish, and enhance the architecture. RISC-V International establishes the specifications, which can be licensed for free, and chip designers can use them in their processors and systems-on-chip in any way they choose. This gives them the flexibility to draw on generic software solutions from the ecosystem, and the open-source instruction set architecture (ISA) allows for a highly customisable hardware and software ecosystem.

Therefore, while there has historically been an imbalance between hardware and software progress, open-source architectures are helping to narrow the gap a little. Nevertheless, as Kundojjala says, “It looks like on most occasions, the software is the limiter as it requires more collaboration across the industry whereas hardware can be developed by individual companies.”