To create awareness in the machine learning community, especially the NLP community, about the environmental effects of training deep neural networks, Emma Strubell and her team at the University of Massachusetts Amherst estimated the energy required to train a variety of popular off-the-shelf NLP models, which can be converted to approximate carbon emissions and electricity costs.

Whereas a decade ago most NLP models could be trained and developed on a commodity laptop or server, many now require multiple instances of specialized hardware such as GPUs or TPUs.

The authors argue that, regardless of the availability of renewable energy, the high energy demands of training neural networks are still a concern because:

- Energy is not currently derived from carbon-neutral sources in many locations, and

- When renewable energy is available, it is still limited by the equipment we have to produce and store it, and energy spent training a neural network might better be allocated to heating a family’s home.

The authors therefore make the following recommendations to the NLP community:

- Time to retrain and sensitivity to hyperparameters should be reported for NLP machine learning models;

- Academic researchers need equitable access to computational resources; and

- Researchers should prioritize developing efficient models and hardware.

But How Bad Is Training Really?

The comparison between deep networks and five cars became popular overnight. It comes from the paper under discussion, in which the authors present figures indicating that training a deep network can emit as much carbon as five cars do over their lifetimes.

The following questions might have surfaced after the publication of this paper:

How big are the neural networks generally and how many parameters are usually involved in popular training models?

In the paper, the authors wrote that a reasonably sized transformer net of 65M parameters trained for 12 hours on 8 GPUs consumed 27 kWh of energy and emitted 26 lbs of carbon dioxide.

A larger BERT model of 110M parameters trained for 80 hours on 64 GPUs consumed 1507 kWh of energy and emitted 1438 lbs of carbon dioxide.
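The link between the energy and emissions figures quoted above can be checked with a short calculation. The sketch below assumes a conversion factor of 0.954 lbs of CO2 per kWh, a U.S. average; this article does not state the factor, so both the constant and the function name `kwh_to_lbs_co2` are assumptions made for illustration.

```python
# Assumed U.S. average emissions factor (lbs CO2 per kWh); not stated in this article.
LBS_CO2_PER_KWH = 0.954


def kwh_to_lbs_co2(energy_kwh: float, factor: float = LBS_CO2_PER_KWH) -> float:
    """Convert energy consumed (kWh) to estimated CO2 emissions (lbs)."""
    return energy_kwh * factor


# Energy figures quoted in the article.
reported_kwh = {"Transformer (65M)": 27, "BERT (110M)": 1507}

for name, kwh in reported_kwh.items():
    print(f"{name}: {kwh} kWh -> about {kwh_to_lbs_co2(kwh):.0f} lbs CO2")
```

Under that assumed factor, the two results round to the 26 lbs and 1438 lbs quoted above.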

The paper states that the full architecture search ran for a total of 979M training steps and that the base model requires 10 hours to train for 300k steps on one TPUv2 core. This equates to 32,623 TPU hours, or 274,120 hours on 8 P100 GPUs.
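The extrapolation is simple proportional scaling and can be reproduced in a few lines. This sketch uses only the rounded figures quoted above (979M steps in total, 300k steps per 10-hour run); the function name is hypothetical, and because the step count is rounded, the result lands near the reported 32,623 hours but not exactly on it.

```python
def extrapolated_hours(total_steps: float, steps_per_run: float, hours_per_run: float) -> float:
    """Scale the time for one base-model run up to the full search."""
    return total_steps / steps_per_run * hours_per_run


# Figures quoted in the article (rounded).
tpu_hours = extrapolated_hours(979e6, 300e3, 10)
print(f"Estimated TPU hours: {tpu_hours:,.0f}")

# Ratio implied by the two quoted totals (P100 hours vs. TPU hours).
gpu_to_tpu_ratio = 274_120 / 32_623
print(f"Implied P100-to-TPU hour ratio: {gpu_to_tpu_ratio:.2f}")
```

The point of the check is that both totals come from multiplying out a single measured run, not from running the search itself.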

The figures of more than 200K hours are extrapolated rather than measured. In practice, whenever a neural network shows promising results (predictions or classification), its hyperparameters and weights are usually saved and then updated locally. This eliminates the need to train the network from scratch.

Recently, Facebook AI open sourced PyTorch Hub, which allows developers to load and explore pretrained versions of popular models like BERT.

Optimising neural networks, making them lighter and faster, has been a prime motivator behind many papers published in the last couple of years, and there is no reason why there won’t be more such work in the future.

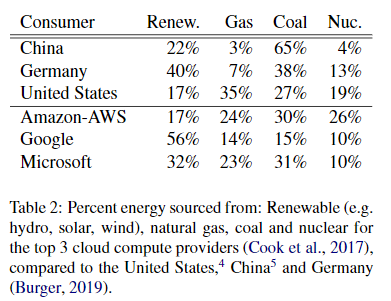

What about renewable sources?

Data centers generate data of their own: server logs, power outage reports for particular systems and much more. Cloud TPUs are designed to run heavy machine learning models. Experts observe that the future of data storage is software defined. Disaggregation and server simulation are already in use, and individual off-the-shelf devices are emulating multiple servers through virtualization.

Tech giants like Google, which have a large presence in the cloud computing sector, manage some of the largest data centres in the world, as do contemporaries like Facebook and Amazon. These companies also spearhead machine learning development around the world, and over the years they have shown their commitment to going 100% renewable. There have also been studies, from the likes of Stanford, that rightfully dispute these claims.

The pursuit of clean energy has no one-stop solution, as think tanks keep producing polar opposite reports. But why burden machine learning developers with issues that are at a stalemate?

There were also other significant doubts like:

- Shouldn’t we concentrate on energy generation rather than energy consumption?

- Is this kind of carbon footprint a result of ill-informed training practices, or does it occur during training in general?

- Who benefits from AI research, and why should the common man suffer from the carbon footprint generated for the interests of a few companies?

The answers to these questions, though important, are beyond the scope of this article.

Did The Researchers Succeed In Getting The Message Out?

This study came to light thanks to a news article titled “Training a single AI model can emit as much carbon as five cars in their lifetimes - Deep learning has a terrible carbon footprint,” published in the reputed MIT Technology Review.

There is no denying that the authors of this paper made their intentions clear by writing that their objective is to create awareness in the machine learning community. But it looks like the mainstream media totally missed their point, because within a year the media’s take on AI went from climate-saving tool to

The carbon footprint of training neural networks did need to be revealed, since data-driven business ideas and applications are at an all-time high and will continue to grow.

But before sensationalising the study and cherry-picking their favourite parts of it, the media could have gone with the researchers’ own theme: awareness.

Though these news outlets have reported other details of the paper, the headlines are what is likely to stay with the public. News travels fast, but bad news sticks.

The internet has made the world small, and catering to the fragile attention spans of the audience has become a common tactic among popular media outlets. This is not what a domain like applied AI, still in its infancy, needs right now.

Regulations will demotivate researchers. In a speculative yet unfortunate future scenario, researchers might have to explain their work to poorly informed lawmakers to win a vote on whether it can continue, or seek approval from an emissions committee before running a neural network. That would be a deterrent to any nascent technology, and AI might not be immune to it. It is a grave injustice to up-and-coming researchers to have their work swamped under a deluge of sensationalism served up for expediency.

Read more about the study here.