Reinforcement Learning (RL), a field of machine learning, is based on the principle of trial and error. In simpler words, an agent learns from its own mistakes and corrects them. The aim is to build a strategy that guides an intelligent agent to take a sequence of actions leading to some ultimate goal. Deep Reinforcement Learning (DRL) is used to make real-time decisions and build strategies in Autonomous Driving (AD), as well as in fields such as sales and management. In this article, we will mainly discuss how RL can be used in transportation to build more intelligent solutions. The following topics will be covered.

Table of contents

- About Reinforcement Learning

- The Deep Reinforcement Learning (DRL)

- DRL in controlling Taxi fleet

- Actor-Critic Algorithm

- Case Study

Let’s understand the working of reinforcement learning first.

About Reinforcement Learning

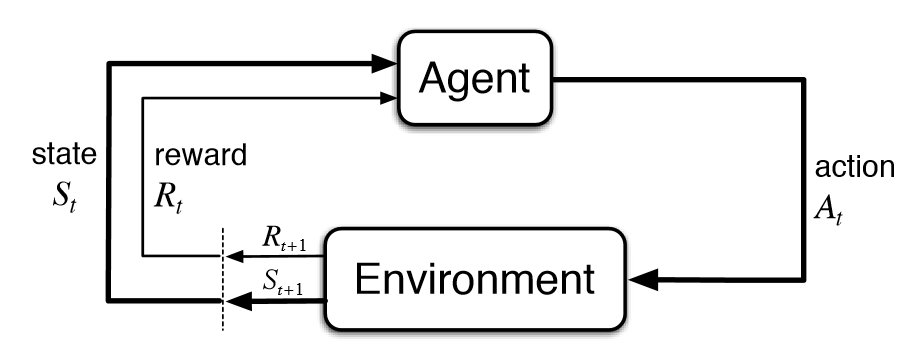

Reinforcement Learning (RL) is a decision-making and strategy-building technique that learns by trial and error, often in real time. It differs from the other two machine learning paradigms, supervised and unsupervised learning, in the following ways:

- Reinforcement learning uses rewards and punishments as signals for positive and negative behaviour, whereas supervised learning is given the correct set of actions up front as labelled input.

- Unsupervised learning finds similarities and differences between data points in order to group them, whereas in reinforcement learning the goal is to find an action model that maximizes the total cumulative reward of the agent.

The basic architecture of Reinforcement Learning consists of six key terms:

- The agent is the learner and decision-maker that is trained to solve the problem.

- The environment is the world, physical or simulated, in which the agent operates.

- The state is the current situation of the agent.

- The reward is the feedback received from the environment after the agent acts.

- The policy is the method that maps states to actions for the agent.

- The value is the future cumulative reward that an agent expects to receive by taking an action in a particular state.
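
The terms above can be tied together in a short sketch. The corridor environment, the random policy and all names below are hypothetical and chosen only for illustration; they are not taken from any particular library.

```python
import random

class CorridorEnv:
    """Hypothetical environment: a corridor of 5 cells with the goal in the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action: 0 = move left, 1 = move right
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        # Reward signal: small penalty per step, positive reward at the goal.
        reward = 1.0 if done else -0.1
        return self.state, reward, done

def random_policy(state, rng):
    """Policy: maps a state to an action (here it ignores the state and acts at random)."""
    return rng.choice([0, 1])

def run_episode(env, policy, rng, max_steps=100):
    """Agent-environment loop: observe state, act, receive reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state, rng)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward

rng = random.Random(0)
episode_return = run_episode(CorridorEnv(), random_policy, rng)
print(f"return of one random episode: {episode_return:.1f}")
```

A learning agent would replace `random_policy` with a policy that is improved over many episodes so that the cumulative reward grows.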

Why was DRL developed?

Classical RL methods that store a value for every state or action become impractical when the state space is very large or continuous. To handle such cases, neural networks were combined with RL, giving a technique known as DRL that can handle complex decision making and strategy building.
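
To get a feeling for how quickly a table-based approach runs out of room, here is a rough count of the possible fleet configurations. It assumes, purely for illustration, that a state is just the number of identical vehicles in each zone; the function name and the fleet sizes are hypothetical.

```python
from math import comb

def fleet_state_count(num_vehicles: int, num_zones: int) -> int:
    """Ways to distribute identical vehicles over zones (stars-and-bars count)."""
    return comb(num_vehicles + num_zones - 1, num_zones - 1)

for vehicles, zones in [(10, 5), (100, 10), (1000, 20)]:
    count = fleet_state_count(vehicles, zones)
    print(f"{vehicles} vehicles, {zones} zones -> {count:.3e} states")
```

Even this simplified state description grows far beyond what a lookup table can hold, which is where a neural network that generalizes across similar states becomes useful.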

The Deep Reinforcement Learning (DRL)

Deep Reinforcement Learning (DRL) is a machine learning technique that combines reinforcement learning with deep neural networks, so that what the agent learns from past experience can be generalized to situations it has not seen before. As it is derived from Reinforcement Learning, the basic principle is the same, but the neural networks serve as function approximators with enough capacity to handle complex, high-dimensional problems.

Because it combines the ability to tackle large, complex problems with a generic and flexible framework for sequential decision-making, deep reinforcement learning has become increasingly popular in autonomous decision-making and operation control. Let’s see how DRL is applied to controlling a taxi fleet.

DRL in controlling Taxi fleet

In the coming decades, ride-sharing companies such as Uber and Ola may aggressively begin to use shared fleets of electric, self-driving cars that can be dispatched to pick up passengers and drop them off at their destinations. As cool as it sounds, such a system would be complex to implement, and one major operational challenge it might encounter is the imbalance of supply and demand. Users’ travel patterns are asymmetric both spatially and temporally, which causes vehicles to cluster in certain regions at certain times of day, so customer demand may not be satisfied in time.

So, to be optimal, the model has to account for parameters such as customer demand and travel times. The objective of the dispatching system is to provide the optimal vehicle dispatch strategy at the lowest possible operational cost, while on the passenger side there are costs associated with the waiting time experienced by all passengers. To solve this problem, the Actor-Critic Algorithm is used.
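
The two cost components mentioned above can be written down as a simple function. This is only a sketch: the per-minute cost weights and the function names are assumptions made for illustration, not values from the study.

```python
def dispatch_cost(waiting_minutes, empty_trip_minutes,
                  wait_cost_per_min=1.0, reposition_cost_per_min=0.5):
    """Total cost = passenger waiting cost + empty-vehicle repositioning cost."""
    waiting_cost = wait_cost_per_min * sum(waiting_minutes)
    repositioning_cost = reposition_cost_per_min * sum(empty_trip_minutes)
    return waiting_cost + repositioning_cost

def reward(waiting_minutes, empty_trip_minutes):
    """RL maximizes reward, so the reward is taken as the negative of the cost."""
    return -dispatch_cost(waiting_minutes, empty_trip_minutes)

# Hypothetical time step: two passengers waited 2 and 4 minutes,
# and one empty vehicle was repositioned on a 10-minute trip.
step_cost = dispatch_cost([2, 4], [10])
print(step_cost)  # 1.0 * 6 + 0.5 * 10 = 11.0
```

Using the negative cost as the reward means that an agent maximizing cumulative reward is also minimizing the combined waiting and repositioning cost.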

Actor-Critic Algorithm

Actor-critic methods combine the advantages of actor-only (policy function only) and critic-only (value function only) methods. Policy gradient methods are reinforcement learning techniques that optimize parametrized policies with respect to the expected return, i.e. the long-term cumulative reward, using gradient descent on the negative return (equivalently, gradient ascent on the return). They avoid many of the problems that affect traditional value-based approaches, such as the complexity arising from continuous states and actions.

The general idea of policy gradient is to generate sample trajectories, i.e. sequences of (state, action, reward) tuples, from the environment using the current policy function. The rewards collected along the different trajectories are then used to update the parametrized policy function so that high-reward trajectories become more likely than low-reward ones.

Policy gradient methods have strong convergence properties, which are naturally inherited from gradient descent methods. However, the sampled rewards usually have very large variance, which makes the vanilla policy gradient method inefficient to learn. Actor-critic methods address this by letting the critic’s value estimate serve as a baseline for the actor’s updates. The schematic flow of this algorithm is shown below, which explains its plan of action in the model.
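
A minimal sketch of this idea is the vanilla policy gradient (REINFORCE) update on a two-action problem where every trajectory is a single step. The reward means, noise level, learning rate and function names are all assumptions chosen for illustration.

```python
import math
import random

def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_bandit(mean_rewards, steps=2000, lr=0.1, seed=0):
    """Vanilla policy gradient with a softmax policy over a few actions."""
    rng = random.Random(seed)
    prefs = [0.0] * len(mean_rewards)  # policy parameters
    for _ in range(steps):
        probs = softmax(prefs)
        # Sample an action from the current policy and observe a noisy reward.
        action = rng.choices(range(len(probs)), weights=probs)[0]
        reward = mean_rewards[action] + rng.gauss(0.0, 0.5)
        # d log pi(action) / d prefs[k] = 1[k == action] - probs[k]
        for k in range(len(prefs)):
            grad_log_pi = (1.0 if k == action else 0.0) - probs[k]
            prefs[k] += lr * reward * grad_log_pi
    return softmax(prefs)

final_probs = reinforce_bandit([0.0, 1.0])
print(final_probs)
```

Each update pushes up the probability of the sampled action in proportion to the reward it received, so over many samples the higher-reward action becomes the more likely one.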
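
To make the variance problem concrete, the sketch below compares policy gradient estimates with and without a baseline subtracted from the reward, which is the role a critic’s value estimate plays in actor-critic methods. For simplicity the baseline here is a fixed number assumed to be known rather than a learned critic, and the reward values are hypothetical.

```python
import random
from statistics import mean, pvariance

def gradient_samples(baseline, n=5000, seed=0):
    """Sample gradient estimates for the preference of action 1 under a fixed policy."""
    rng = random.Random(seed)
    p1 = 0.5  # probability of action 1 under the current policy
    samples = []
    for _ in range(n):
        action = 1 if rng.random() < p1 else 0
        # Hypothetical rewards: both actions pay about 10, action 1 pays 1 more.
        reward = 10.0 + action + rng.gauss(0.0, 1.0)
        grad_log_pi = (1.0 if action == 1 else 0.0) - p1
        samples.append((reward - baseline) * grad_log_pi)
    return samples

plain = gradient_samples(baseline=0.0)
with_baseline = gradient_samples(baseline=10.5)  # 10.5 = expected reward under this policy

print("no baseline:   mean", mean(plain), "variance", pvariance(plain))
print("with baseline: mean", mean(with_baseline), "variance", pvariance(with_baseline))
```

Both estimators point in the same direction on average, but subtracting the baseline removes the large common part of the reward, so the individual samples scatter far less.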

Let’s see the background process of DRL used for dispatching taxis with the help of a case study.

Case study: How DRL can be used in taxi fleet management?

The objective of this case study is to understand the process by which DRL dispatches taxis in a particular region. The model is a fully-connected neural network with a total of 8 hidden layers, 4 each for the actor function and the critic function. Each hidden layer has 128 units, the learning rate is 5 × 10⁻⁵, and the trajectory batch size (samples of sequences of state tuples) is 1024 for each iteration.

Assume that the travel demand is deterministic for this study, i.e. from day to day a fixed number of passengers need to travel between each pair of zones at a given time of day. The optimal dispatching strategy is then obtained by minimizing an objective that consists of the waiting time costs for the passengers and the costs of repositioning empty vehicles; this formulation is known as an integer programming (IP) model.
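
The configuration described above can be summarized in a few lines. The state dimension and number of actions are not given in the article, so `STATE_DIM` and `NUM_ACTIONS` below are hypothetical placeholders, and the learning rate is taken to be 5e-5.

```python
HIDDEN_LAYERS = 4      # hidden layers per network (actor and critic each)
HIDDEN_UNITS = 128     # units per hidden layer
LEARNING_RATE = 5e-5   # assumed reading of the stated learning rate
BATCH_SIZE = 1024      # trajectories per iteration

STATE_DIM = 20         # hypothetical: size of the state vector
NUM_ACTIONS = 5        # hypothetical: number of dispatch actions

def layer_sizes(input_dim, output_dim):
    """Layer widths of a fully-connected network with the hidden layers above."""
    return [input_dim] + [HIDDEN_UNITS] * HIDDEN_LAYERS + [output_dim]

def parameter_count(sizes):
    """Number of weights plus biases in a fully-connected network."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(sizes, sizes[1:]))

actor_sizes = layer_sizes(STATE_DIM, NUM_ACTIONS)  # outputs one score per action
critic_sizes = layer_sizes(STATE_DIM, 1)           # outputs a single state value

print("actor: ", actor_sizes, parameter_count(actor_sizes), "parameters")
print("critic:", critic_sizes, parameter_count(critic_sizes), "parameters")
```

The actor and critic share the same hidden structure and differ only in their output layer: one score per action for the actor, a single value estimate for the critic.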
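
As a toy illustration of such a formulation (not the model used in the study), consider two zones and a single time step, where the only decision is how many empty vehicles to move from zone 0 to zone 1. The instance is small enough to solve by enumerating every integer choice instead of calling an IP solver; the cost weights and the function name are assumptions.

```python
def solve_toy_dispatch(supply, demand, wait_cost=5, reposition_cost=1):
    """Enumerate integer moves from zone 0 to zone 1 and return (best move, best cost)."""
    best = None
    for moved in range(supply[0] + 1):  # integer decision variable
        vehicles = [supply[0] - moved, supply[1] + moved]
        # Passengers left without a vehicle in each zone incur a waiting cost.
        unmet = sum(max(d - v, 0) for d, v in zip(demand, vehicles))
        cost = reposition_cost * moved + wait_cost * unmet
        if best is None or cost < best[1]:
            best = (moved, cost)
    return best

best_move, best_cost = solve_toy_dispatch(supply=[3, 1], demand=[1, 3])
print(best_move, best_cost)  # moving 2 vehicles costs 2 and leaves no unmet demand
```

With realistic numbers of zones, vehicles and time steps, enumeration is no longer feasible, which is why an optimization model is used to obtain the theoretical optimum for comparison.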

Tracking the convergence of the RL model shows that the value it converges to is very close to the optimal value calculated by the theoretical method. Now let’s allow some stochasticity in the travel demand realization to check the robustness of the model. The travel demand distribution was divided into two parts, weekdays and weekends, and on each day one travel demand profile was picked at random for the network.

The DRL learner has no knowledge of this setup and starts learning without any prior information about the network. The same process was followed as in the deterministic scenario above. Although the travel demand is stochastic and unknown, the actor-critic method, which may not reach the theoretical optimum, can still provide satisfactory results.

In this case, the proposed model-free reinforcement learning method (i.e., actor-critic) is an efficient alternative for obtaining reliable, close-to-optimal solutions.
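
The stochastic setup can be sketched as follows. The zone names, the demand numbers and the function name are hypothetical; only the idea of drawing one profile per day from a weekday or weekend pool comes from the description above.

```python
import random

# Hypothetical demand profiles: passengers per (origin, destination) zone pair.
DEMAND_PROFILES = {
    "weekday": [
        {("A", "B"): 12, ("B", "A"): 4},
        {("A", "B"): 10, ("B", "A"): 6},
    ],
    "weekend": [
        {("A", "B"): 5, ("B", "A"): 5},
        {("A", "B"): 3, ("B", "A"): 8},
    ],
}

def sample_daily_demand(day_index, rng):
    """Pick one demand profile at random for the given day (0 = Monday)."""
    day_type = "weekend" if day_index % 7 >= 5 else "weekday"
    return day_type, rng.choice(DEMAND_PROFILES[day_type])

rng = random.Random(42)
for day in range(7):
    day_type, profile = sample_daily_demand(day, rng)
    print(day, day_type, profile)
```

The learner only ever sees the sampled demand of each day, never the pools themselves, so it has to discover a dispatch policy that works well across the whole distribution.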

Final verdict

A deep reinforcement learning approach to the problem of dispatching autonomous vehicles for taxi services has been explained in this article. In particular, it covered a policy-value framework that uses neural networks to approximate both the policy and value functions.