SMOTE is a machine learning technique for dealing with the issues that arise when working with an imbalanced dataset. Imbalanced datasets are common in practice, and most ML algorithms are highly sensitive to them, so techniques like SMOTE are used to improve their performance. In this article, we will discuss how SMOTE can be used to improve the performance of weak learners such as SVM, covering the following points.

Table of contents

- Problems with imbalanced data

- How SMOTE can be used

- Testing SMOTE on a variety of models

Let’s start by looking at the problems with imbalanced data.

Problems with imbalanced data

The classifier in a binary classification problem must assign each sample to one of two classes. When the majority (larger) class has significantly more samples than the minority (smaller) class, the dataset is considered imbalanced. This asymmetry is problematic because both classifier training and the most common metrics for evaluating classification quality can be skewed in favour of the majority class.
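To see how accuracy can be skewed, consider a classifier that always predicts the majority class. The NumPy sketch below borrows the class counts of the mammography dataset used later in this article (10923 vs. 260); it is an illustration only, not part of the paper's experiment.

```python
import numpy as np

# Class counts from the mammography dataset used later in this article:
# 10923 majority (-1) samples and 260 minority (1) samples.
n_majority, n_minority = 10923, 260
y_true = np.concatenate([np.full(n_majority, -1), np.full(n_minority, 1)])

# A "classifier" that ignores its input and always predicts the majority class.
y_pred = np.full_like(y_true, -1)

accuracy = np.mean(y_pred == y_true)

# Balanced accuracy is the mean of the per-class recalls.
recall_majority = np.mean(y_pred[y_true == -1] == -1)
recall_minority = np.mean(y_pred[y_true == 1] == 1)
balanced_accuracy = (recall_majority + recall_minority) / 2

print(f"accuracy:          {accuracy:.4f}")
print(f"balanced accuracy: {balanced_accuracy:.4f}")
```

The trivial classifier reaches an accuracy of about 0.977 without ever identifying a minority sample. Balanced accuracy exposes this with a score of exactly 0.5.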

Numerous metrics, such as the Brier score and the area under the receiver operating characteristic curve (AUC), have been proposed to address the challenges of imbalanced binary classification. Many of them are non-symmetric: they assign a higher loss to misclassifying a minority sample than to misclassifying a majority sample.
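Balanced accuracy, one of the metrics used in the experiment later in this article, makes this asymmetry concrete. The NumPy sketch below reuses the mammography class counts. Starting from perfect predictions, it introduces exactly one mistake of each kind. Plain accuracy penalizes both mistakes equally. Under balanced accuracy, one misclassified minority sample costs about 42 times more than one misclassified majority sample.

```python
import numpy as np

def accuracy(y_true, y_pred):
    return np.mean(y_true == y_pred)

def balanced_accuracy(y_true, y_pred):
    # Mean of per-class recalls: each class gets equal weight regardless of its size.
    classes = np.unique(y_true)
    return np.mean([np.mean(y_pred[y_true == c] == c) for c in classes])

n_majority, n_minority = 10923, 260
y_true = np.concatenate([np.full(n_majority, -1), np.full(n_minority, 1)])

# Start from perfect predictions, then make exactly one mistake of each kind.
miss_minority = y_true.copy()
miss_minority[-1] = -1   # one minority sample predicted as majority
miss_majority = y_true.copy()
miss_majority[0] = 1     # one majority sample predicted as minority

for name, y_pred in [("minority error", miss_minority), ("majority error", miss_majority)]:
    print(f"{name}: accuracy loss = {1 - accuracy(y_true, y_pred):.6f}, "
          f"balanced-accuracy loss = {1 - balanced_accuracy(y_true, y_pred):.6f}")
```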

To counter this problem, various data balancing techniques are used. One of them is SMOTE, short for Synthetic Minority Oversampling Technique. In the next section, we’ll discuss SMOTE and how it can improve the performance of weak learners like SVM.

How SMOTE can be used

To address this disparity, researchers proposed balancing schemes that augment the data before the classifier is trained. The simplest balancing methods are oversampling the minority class by duplicating minority samples and undersampling the majority class.
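Both simple balancing methods fit in a few lines of NumPy. The function names below are illustrative. The sketch assumes a binary problem that is balanced to equal class counts. For real use, imblearn provides ready-made versions as `RandomOverSampler` and `RandomUnderSampler`.

```python
import numpy as np

def random_oversample(X, y, minority_label, rng):
    """Duplicate randomly chosen minority rows until both classes are the same size."""
    minority_idx = np.flatnonzero(y == minority_label)
    majority_idx = np.flatnonzero(y != minority_label)
    extra = rng.choice(minority_idx, size=len(majority_idx) - len(minority_idx), replace=True)
    keep = np.concatenate([np.arange(len(y)), extra])  # originals + exact duplicates
    return X[keep], y[keep]

def random_undersample(X, y, minority_label, rng):
    """Drop randomly chosen majority rows until both classes are the same size."""
    minority_idx = np.flatnonzero(y == minority_label)
    majority_idx = np.flatnonzero(y != minority_label)
    kept_majority = rng.choice(majority_idx, size=len(minority_idx), replace=False)
    keep = np.concatenate([minority_idx, kept_majority])
    return X[keep], y[keep]

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = np.array([1] * 10 + [-1] * 90)

X_over, y_over = random_oversample(X, y, minority_label=1, rng=rng)
X_under, y_under = random_undersample(X, y, minority_label=1, rng=rng)
print(np.unique(y_over, return_counts=True))
print(np.unique(y_under, return_counts=True))
```

The oversampled minority rows are exact copies of existing rows. SMOTE is designed to avoid exactly this.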

The idea of incorporating synthetic minority samples into tabular data was first proposed in SMOTE, where synthetic minority samples are generated by interpolating pairs of original minority points.

SMOTE is a data augmentation algorithm that creates synthetic data points from raw data. SMOTE can be thought of as a more sophisticated version of oversampling or a specific data augmentation algorithm.

SMOTE has the advantage of not creating duplicate data points. Instead, it creates synthetic data points that differ slightly from the original ones, which is why it is often preferred over simple random oversampling.

The SMOTE algorithm works like this:

- You select a random sample from the minority class.

- You find the k nearest neighbours of this sample among the other minority samples.

- You randomly choose one of those neighbours and compute the vector from the current data point to the chosen neighbour.

- The vector is multiplied by a random number between 0 and 1.

- You add the result to the current data point to get the synthetic data point.
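The steps above can be written as a single formula. Here $\mathbf{x}_i$ is the selected minority sample, $\mathbf{x}_{nn}$ is the chosen neighbour, and $\lambda$ is the random number from the fourth step:

$$
\mathbf{x}_{\text{new}} = \mathbf{x}_i + \lambda \left(\mathbf{x}_{nn} - \mathbf{x}_i\right), \qquad \lambda \in [0, 1]
$$

Because $\lambda$ lies between 0 and 1, $\mathbf{x}_{\text{new}}$ always lies on the line segment between $\mathbf{x}_i$ and $\mathbf{x}_{nn}$.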

This operation is essentially the same as moving the data point slightly in the direction of its neighbour. This ensures that your synthetic data point is not an exact replica of an existing data point, while also ensuring that it is not too dissimilar from known observations in your minority class.
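The procedure can be sketched from scratch in NumPy. This is neither imblearn's implementation nor the one in the paper's repository. The function name `smote`, the brute-force neighbour search, and the default `k=5` are assumptions for illustration.

```python
import numpy as np

def smote(X_min, n_synthetic, k=5, rng=None):
    """Minimal SMOTE sketch: create n_synthetic points from minority samples X_min.

    Assumes k < len(X_min). Neighbours are found by brute force over minority samples only.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Pairwise Euclidean distances between minority samples.
    diffs = X_min[:, None, :] - X_min[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)                 # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]   # k nearest minority neighbours

    synthetic = np.empty((n_synthetic, X_min.shape[1]))
    for j in range(n_synthetic):
        i = rng.integers(len(X_min))                # pick a random minority sample
        nn = X_min[rng.choice(neighbours[i])]       # pick one of its k neighbours
        lam = rng.random()                          # random number in [0, 1)
        synthetic[j] = X_min[i] + lam * (nn - X_min[i])  # move part-way towards it
    return synthetic

rng = np.random.default_rng(42)
X_min = rng.normal(size=(20, 2))
X_new = smote(X_min, n_synthetic=50, k=5, rng=rng)
print(X_new.shape)  # (50, 2)
```

In practice, imblearn's `imblearn.over_sampling.SMOTE` class (used through its `fit_resample(X, y)` method) is the usual choice. It also handles class bookkeeping and efficient neighbour search.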

Testing SMOTE on a variety of models

In their research paper To SMOTE, or not to SMOTE?, Yotam Elor et al. studied how effective SMOTE and other oversampling techniques are across a variety of ML algorithms, including both weak and strong learners. In this section, we draw on their research to evaluate SMOTE with a weak learner, SVM. Before moving to the experiment, let’s look at how the metrics, hyperparameters, and methodology were set up.

Performance is assessed with AUC, F1, F2, Brier score, log loss, the Jaccard similarity coefficient, and balanced accuracy. For F1, F2, the Jaccard similarity coefficient, and balanced accuracy, the authors tested two kinds of classifier. Non-consistent classifiers use the default decision threshold of 0.5. Consistent classifiers use a decision threshold optimized on the validation fold. AUC and the Brier score involve no decision threshold, and optimizing log loss is always consistent.
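One simple way to make a classifier "consistent" is to sweep candidate thresholds over the validation scores and keep the one that maximizes the metric. The sketch below does this for F-beta (beta=1 gives F1, beta=2 gives F2). It assumes 0/1 labels and uses a hypothetical toy validation fold. The paper's exact search procedure may differ.

```python
import numpy as np

def f_beta(y_true, y_pred, beta=1.0):
    """F-beta score for binary 0/1 labels (beta=1 -> F1, beta=2 -> F2)."""
    tp = np.sum((y_pred == 1) & (y_true == 1))
    fp = np.sum((y_pred == 1) & (y_true == 0))
    fn = np.sum((y_pred == 0) & (y_true == 1))
    denom = (1 + beta**2) * tp + beta**2 * fn + fp
    return (1 + beta**2) * tp / denom if denom > 0 else 0.0

def best_threshold(y_val, scores_val, beta=1.0):
    """Return the threshold (among the observed scores) that maximizes F-beta on validation."""
    candidates = np.unique(scores_val)
    results = [f_beta(y_val, (scores_val >= t).astype(int), beta) for t in candidates]
    return candidates[int(np.argmax(results))]

# Hypothetical validation fold: predicted minority-class probabilities are all below 0.5.
y_val = np.array([0, 0, 0, 0, 0, 0, 1, 1])
scores = np.array([0.05, 0.10, 0.15, 0.20, 0.25, 0.40, 0.30, 0.45])

default_f1 = f_beta(y_val, (scores >= 0.5).astype(int))
t = best_threshold(y_val, scores)
tuned_f1 = f_beta(y_val, (scores >= t).astype(int))
print(default_f1, t, tuned_f1)  # 0.0 0.3 0.8
```

With the default threshold, no sample is predicted as minority, so F1 is 0. The tuned threshold recovers both minority samples at the cost of one false positive.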

Some hyperparameters (HPs) must be set for every balancing method. The key HP that all balancing techniques share is the desired ratio of positive to negative samples. A common HP selection practice is to use the HP set that produces the best results on held-out data.
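To illustrate what the ratio hyperparameter means in practice, the helper below computes how many synthetic minority samples are needed to reach a given positive-to-negative ratio. The helper name is hypothetical. It assumes the target minority count is truncated to an integer, similar to how imblearn treats a float `sampling_strategy`. The paper's repository may compute it differently. For simplicity, it uses the full mammography counts rather than the training fold.

```python
def n_synthetic_needed(n_pos, n_neg, ratio):
    """Synthetic minority samples needed so that n_pos / n_neg reaches `ratio`.

    Assumption: the target positive count is truncated to an integer, and nothing
    is added when the data already meets the ratio.
    """
    target_pos = int(ratio * n_neg)
    return max(target_pos - n_pos, 0)

# Mammography counts from this article, with the ratio of 0.5 used in the experiment.
print(n_synthetic_needed(n_pos=260, n_neg=10923, ratio=0.5))  # 5201
```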

Each dataset was randomly split, with stratification, into training, validation, and testing folds in the proportions 60%, 20%, and 20%. To evaluate the oversampling methods, the training fold was oversampled with each oversampler. Each classifier was then trained on the augmented training fold, with early stopping on the (non-augmented) validation fold whenever possible.
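A stratified 60/20/20 split can be sketched by shuffling and cutting each class separately, so that every fold keeps roughly the original class proportions. This is an illustrative NumPy version, not the repository's code. The function name and the rounding of the cut points are assumptions.

```python
import numpy as np

def stratified_split(y, fractions=(0.6, 0.2, 0.2), rng=None):
    """Return train/validation/test index arrays that preserve class proportions."""
    rng = np.random.default_rng() if rng is None else rng
    folds = [[] for _ in fractions]
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        # Cumulative cut points for this class, e.g. at 60% and 80% of its samples.
        cuts = np.round(np.cumsum(fractions)[:-1] * len(idx)).astype(int)
        for fold, part in zip(folds, np.split(idx, cuts)):
            fold.extend(part.tolist())
    return [np.array(sorted(f)) for f in folds]

rng = np.random.default_rng(0)
y = np.array([1] * 260 + [-1] * 10923)
train_idx, val_idx, test_idx = stratified_split(y, rng=rng)

# Only the training rows would be passed to the oversampler;
# the validation and test folds stay non-augmented.
print(len(train_idx), len(val_idx), len(test_idx))
```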

To make the classifiers consistent for the metrics that require a decision threshold, the threshold was optimized on the validation fold. Finally, the metrics were computed on the validation and test folds. The experiment was repeated seven times, with different random seeds and data splits, for each combination of dataset, oversampler, classifier, and HP configuration.
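The repetition scheme is a loop over every combination of dataset, oversampler, classifier, and HP configuration, with seven seeds per combination. In the skeleton below, `run_once` is a hypothetical placeholder that returns a dummy number, and the configuration lists are illustrative. A real run would perform the split, oversampling, training, and threshold tuning described above.

```python
import itertools
import numpy as np

def run_once(dataset, oversampler, classifier, hp_config, seed):
    """Hypothetical placeholder for one run (split with `seed`, oversample the training
    fold, train, tune the threshold on validation, score on test).
    It only returns a dummy value so the loop structure can execute."""
    rng = np.random.default_rng(seed)
    return float(rng.random())

datasets = ["mammography"]
oversamplers = ["default", "smote"]
classifiers = ["svm"]
hp_configs = [0]
n_repeats = 7  # seven seeds / data splits per configuration

summary = {}
for config in itertools.product(datasets, oversamplers, classifiers, hp_configs):
    scores = [run_once(*config, seed=seed) for seed in range(n_repeats)]
    summary[config] = (float(np.mean(scores)), float(np.std(scores)))

print(len(summary), "configurations x", n_repeats, "repeats")
```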

Now let’s see in practice how SVM performs on the original and on the balanced version of a dataset built into imblearn. To run this test, clone the repository and install the dependencies from its requirements.txt file:

```
! git clone https://github.com/aws/to-smote-or-not.git
%cd to-smote-or-not/
! pip install -r requirements.txt
```

Next, change the working directory to the src folder, where all the code lives. Then import the experiment function from experiment.py, along with the hyperparameter configurations for the classifiers and oversamplers from their respective .py files.

```
%cd /content/to-smote-or-not/src
from experiment import experiment
# classifier's config map
from classifiers import CLASSIFIER_HPS
# oversamplers config map
from oversamplers import OVERSAMPLER_HPS
```

As mentioned earlier, we use the mammography dataset built into imblearn. It has two classes to predict, -1 and 1, with 10923 and 260 samples respectively. The data is therefore highly imbalanced, with roughly 42 majority samples for every minority sample.

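```
import pandas as pd
import numpy as np
# dataset
from imblearn.datasets import fetch_datasets

# Download only the mammography dataset rather than the whole benchmark collection
data = fetch_datasets(filter_data=("mammography",))["mammography"]
x = pd.DataFrame(data["data"])
# Labels as a column vector (-1 = majority class, 1 = minority class)
y = np.array(data["target"]).reshape((-1, 1))
```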
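Before running the experiment, it is worth confirming the class balance. The helper below is a small NumPy sketch, and the name `class_summary` is hypothetical. It is demonstrated on a stand-in label array with the counts stated above, so it runs without downloading any data. It can be called on the `y` loaded above in the same way.

```python
import numpy as np

def class_summary(y):
    """Return ({label: count}, majority-to-minority ratio) for a label array of any shape."""
    labels, counts = np.unique(np.ravel(y), return_counts=True)
    by_label = {int(label): int(count) for label, count in zip(labels, counts)}
    return by_label, float(counts.max() / counts.min())

# Stand-in labels with the counts stated above; replace with the real `y` after loading.
y_demo = np.array([-1] * 10923 + [1] * 260).reshape((-1, 1))
by_label, ratio = class_summary(y_demo)
print(by_label, round(ratio, 1))  # {-1: 10923, 1: 260} 42.0
```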

Next, we set up the experiment using the experiment function imported earlier. Switching between the original data and the oversampled data requires only a few setting changes. For the original data, set the oversampler type to default and its params to default. The settings shown below are for oversampling with SMOTE.

```
results = experiment(
    x=x,
    y=y,
    # oversampling technique to be chosen
    oversampler={
        "type": "smote",
        "ratio": 0.5,
        "params": OVERSAMPLER_HPS["smote"][0],
    },
    # classifier to be chosen
    classifier={
        "type": "svm",  # ["cat", "dt", "xgb", "lgbm", "svm", "mlp"]
        "params": CLASSIFIER_HPS["svm"][0],
    },
    seed=0,
    normalize=False,
    clean_early_stopping=False,
    consistent=True,
    repeats=1,
)
```

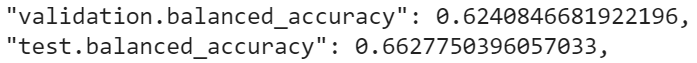

Here is the result of the SVM on the original data.

```
import json
# Default result
print(json.dumps(results, indent=4))
```

And here is the result with SMOTE oversampling at a ratio of 0.5:

Final words

In this article, we discussed the problems caused by imbalanced data and how SMOTE can help mitigate them. To examine whether SMOTE improves the performance of weak learners, we used the experiment code from the research paper To SMOTE, or not to SMOTE? and reviewed the results shown above.