If you have ever had to address a client in their own language through a human translator or an AI application, you will know that neither matches the personal touch a pitch otherwise has. The solution? A deep fake video of yourself speaking that language, which is sure to get the message across.

Accounting giant Ernst & Young (EY) is spicing up its pitches and presentations by testing AI products in the workplace. In the post-COVID era of monotonous meetings, this brings a more fun approach: emails and presentations carry synthetic talking-head video clips of employees' virtual body doubles. The company calls these virtual doubles, its corporate spin on deep fakes, 'ARIs'.

Personalised Business Pitches

EY is partnering with UK tech startup Synthesia to amplify its communication, create engaging videos, and present pitches in various languages. For instance, one partner who does not speak Japanese used Synthesia's translation function to have his AI avatar address a Japanese client in that language. According to EY employee Jared Reeder, the tool supports both creativity and technical assistance.


While the line between deep fakes as an asset and as a threat is thin, the company has made a point of declaring that these clips are synthetic and are not meant to be passed off as real footage or to fool anyone.

Like Synthesia, Bangalore-based startup Rephrase.ai is an AI-powered synthetic media production platform. Its text-to-speech tool helps enterprises create personalised, customisable video content for sales and marketing.

The tool narrates the input text in a natural-sounding voice, and the user can choose the tone of that voice. The system then syncs the narration to the lip movements and facial expressions of a human model.

CEO Ashray Malhotra claims that these videos have doubled clickthrough rates for marketing campaigns. "Instead of sending long, boring emails, sales reps can now send out real human videos, scale and nail their sales quotas. The sales reps do not have to record one video after another; instead, they could create one outreach video and send it out to thousands of customers. Each video would be personalised," he said.

Still Frames Brought to Life

The commercialisation of AI-generated imagery and audio has found various applications. For example, Rosebud.ai's TokkingHeads app brings portraits to life, with heads moving, lips speaking, and eyes shifting.

Hollywood leveraging AI

Deep fakes are popular in the film industry, and their presence is only growing. For instance, Lucasfilm, the Walt Disney-owned production company, recently partnered with deep fake YouTuber Shamook. Last month also brought controversy when it emerged that Morgan Neville's new documentary on Anthony Bourdain contains a few lines of AI-generated dialogue in Bourdain's voice. The documentary, about the chef's life and tragic death, includes three digitally recreated quotes, among them: "… and my life is shit now. You are successful, and I am successful, and I'm wondering: Are you happy?"

The latest use of deep fakes is in Hollywood publicity and marketing: Warner Bros is using the technology in promos for its upcoming film 'Reminiscence'. The tool turns a photo of any face into a short video sequence featuring the film's star. Users can upload a picture of themselves or anyone else and let the ML-powered website create the animation.


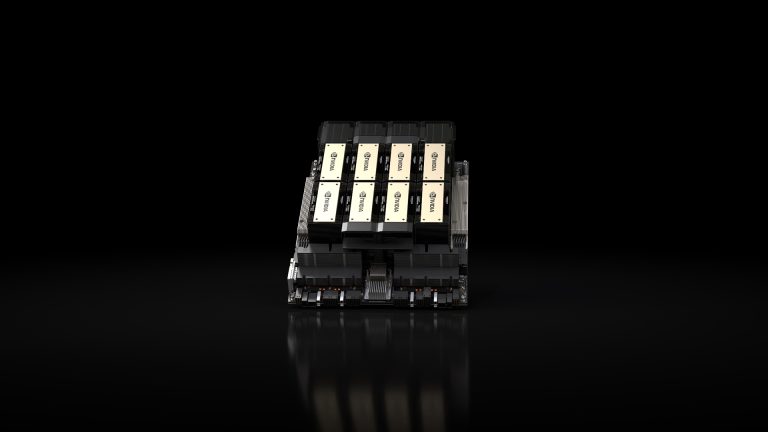

Nvidia’s Video Suspense

Nvidia CEO Jensen Huang has taken the AI community by surprise: it turns out that a deep-faked CGI version of himself delivered parts of his keynote speech. The address took place back in April and showed Huang suddenly disappearing and his kitchen exploding. Although viewers were sceptical and wondered whether it was real, the company only recently revealed the CGI, while announcing its AI tool.

Producing the 13-second clip involved a truck full of DSLR cameras, a full face and body scan to build the 3D model, and an AI tool trained to mimic Huang's gestures and expressions. What makes the feat impressive is how hard it is for viewers to spot the fake parts of the video, which Nvidia attributes to some additional 'AI magic'.

How sustainable is this?

While deep fakes are a booming field, the novelty of synthetic video as a business tool may not last as long as expected. According to organisational psychologist Anita Woolley, while these applications seem fascinating, using them for corporate purposes can make them feel uncanny. The uncanny valley hypothesis holds that humanoid objects such as robots and AI models become unsettling when they appear almost, but not quite, human. Jaime Banks, an associate professor at Texas Tech University, says this feeling of strange familiarity arises when something seems human but is not quite human.