Anomaly detection, also known as outlier detection, is the process of identifying abnormal examples in a dataset. It enhances communication around system behaviour, reduces threats to the software ecosystem, and improves root cause analysis. Across industries, it is mainly used to detect fraud, identify intrusions, clean data, and spot ecosystem disturbances.

Traditionally, one-class classifiers such as the one-class support vector machine (OC-SVM) or support vector data description (SVDD) have been used for anomaly detection. These methods assume that the training data consist only of normal examples and focus on identifying examples that belong to the same distribution as the training data. However, these algorithms do not benefit from the representation learning that makes machine learning powerful. Recently, there have been many developments in learning visual representations from unlabeled data using self-supervised learning methods such as rotation prediction and contrastive learning. According to Google researchers, combining one-class classifiers with deep representation learning is still an under-explored opportunity for detecting anomalous data.

To address this, Google’s AI team has recently introduced a new framework that makes use of the recent advancements in self-supervised learning.

About the Deep One-Class Framework

(Source: Google AI)

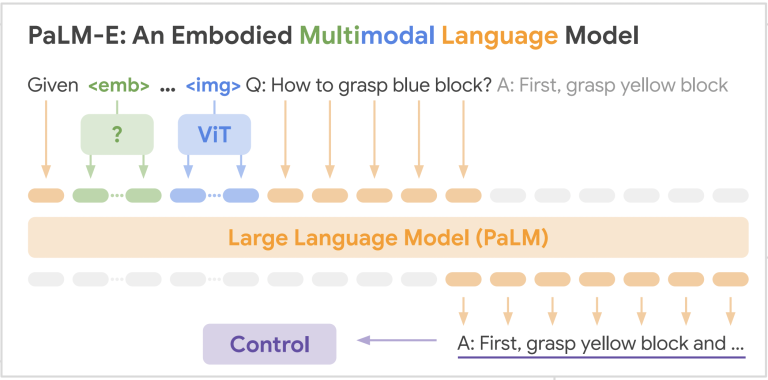

Google researchers presented a two-stage framework. In the first stage, the model learns deep representations with self-supervision. In the second stage, one-class classification algorithms (such as OC-SVM or a kernel density estimator) are applied to the representations learned in the first stage.

Besides being robust to degeneration, the two-stage algorithm enables the building of accurate one-class classifiers. The framework is also not tied to specific representation learning or one-class classification algorithms: different algorithms can be plugged in, which leaves room for more advanced approaches.
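
The two stages described above can be sketched as follows, assuming scikit-learn is available. This is only an illustration: PCA is a stand-in for the self-supervised encoder (it is not what the researchers used), and the synthetic Gaussian data and all variable names are hypothetical.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)

# Synthetic stand-in data (assumption): normal points near the origin,
# anomalies shifted away from it.
x_train = rng.normal(0.0, 1.0, size=(500, 32))   # normal examples only
x_test_normal = rng.normal(0.0, 1.0, size=(100, 32))
x_test_anomaly = rng.normal(4.0, 1.0, size=(100, 32))

# Stage 1 (placeholder): PCA stands in for a self-supervised encoder.
encoder = PCA(n_components=8, random_state=0).fit(x_train)

# Stage 2: fit a one-class classifier on the frozen representations.
detector = OneClassSVM(kernel="rbf", gamma="scale", nu=0.1)
detector.fit(encoder.transform(x_train))

def anomaly_score(x):
    # decision_function is larger for inliers, so negate it to get
    # a score that is larger for anomalies.
    return -detector.decision_function(encoder.transform(x))

normal_scores = anomaly_score(x_test_normal)
anomaly_scores = anomaly_score(x_test_anomaly)
```

Because the second stage only sees feature vectors, the encoder or the detector could be swapped (for example, for a kernel density estimator) without changing the rest of the pipeline.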

Google researchers tested the efficacy of the two-stage algorithm by experimenting with two representative self-supervised representation learning algorithms — rotation prediction and contrastive learning.

Rotation prediction trains the model to predict the angle by which an input image has been rotated. Due to its efficiency in other computer vision applications, it has been widely adopted for one-class classification research. However, the existing approach reuses the built-in rotation prediction classifier, which was used for learning representations, to carry out anomaly detection. This is suboptimal, since those built-in classifiers are not trained for one-class classification.
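
To make the pretext task concrete, here is a minimal sketch of how rotation-prediction training data could be built with NumPy. The function name and the four-angle setup (0, 90, 180, 270 degrees) are assumptions for illustration; the classifier that would be trained on these labels is omitted.

```python
import numpy as np

def make_rotation_batch(images):
    """Return rotated copies of each image and the rotation labels.

    images: array of shape (N, H, W, C) with H == W.
    Label k means the image was rotated by k * 90 degrees.
    """
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(4)]
    labels = [np.full(len(images), k) for k in range(4)]
    return np.concatenate(rotated), np.concatenate(labels)

# Two tiny dummy "images" of size 4x4 with 3 channels.
images = np.arange(2 * 4 * 4 * 3, dtype=np.float32).reshape(2, 4, 4, 3)
x, y = make_rotation_batch(images)
```

A network trained to predict `y` from `x` has to pick up on object orientation and shape, which is what makes the learned features useful downstream.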

In contrastive learning, by contrast, the model learns to pull together representations of transformed versions of the same image and to push apart representations of different images. During training, images are drawn from the dataset, and each is transformed twice using simple augmentations. Contrastive learning converges to a solution where the representations of normal examples are uniformly distributed on a sphere. This is problematic because most one-class algorithms identify outliers by checking how close a test example is to the normal training examples. When the normal examples are spread uniformly, outliers will always appear close to some normal example.
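
The pull-together, push-apart objective can be illustrated with a normalized temperature-scaled cross-entropy (NT-Xent) loss written in NumPy. This is a generic sketch of a contrastive loss, not necessarily the exact loss used by the researchers; the function name and the temperature value are assumptions.

```python
import numpy as np

def nt_xent_loss(z1, z2, temperature=0.5):
    """Contrastive loss for two views z1, z2 of shape (N, D).

    Row i of z1 and row i of z2 are views of the same image (positives);
    every other row in the batch is treated as a negative.
    """
    n = len(z1)
    z = np.concatenate([z1, z2])
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # project onto unit sphere
    sim = z @ z.T / temperature                       # scaled cosine similarity
    np.fill_diagonal(sim, -np.inf)                    # ignore self-similarity

    # Index of the positive partner for each of the 2N rows.
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])

    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), positives].mean()

rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))
aligned_loss = nt_xent_loss(z1, z1 + 0.01 * rng.normal(size=(8, 16)))
random_loss = nt_xent_loss(z1, rng.normal(size=(8, 16)))
```

The loss is lower when the two views of each image agree (`aligned_loss`) than when they are unrelated (`random_loss`). Minimizing it also pushes all non-matching pairs apart, which is what spreads the representations over the sphere.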

The researchers have therefore proposed distribution augmentation (DA) for one-class learning. In this method, the model learns from both the training data and augmented training examples, with the augmented examples treated as distinct from the originals.

In DA, geometric transformations such as rotations or horizontal flips are employed. As a result, the training data is no longer uniformly distributed, because the augmented data occupies part of the space.
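
As a rough sketch of the data side of DA, the snippet below builds the union of the original images and their rotated copies and gives every copy its own instance id, so that a contrastive loss would treat an image and its rotated version as different examples. The function name and the choice of rotations are assumptions; unlike rotation prediction, no rotation label is predicted here.

```python
import numpy as np

def distribution_augment(images, ks=(0, 1, 2, 3)):
    """Return originals plus rotated copies, each as a separate instance.

    images: array of shape (N, H, W, C) with H == W.
    ks: rotations in multiples of 90 degrees (k=0 keeps the original).
    """
    augmented = np.concatenate(
        [np.rot90(images, k=k, axes=(1, 2)) for k in ks]
    )
    # One distinct id per (image, rotation) pair.
    instance_ids = np.arange(len(augmented))
    return augmented, instance_ids

images = np.arange(3 * 4 * 4 * 1, dtype=np.float32).reshape(3, 4, 4, 1)
augmented, instance_ids = distribution_augment(images)
```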

The researchers then evaluated one-class classification performance, measured by the area under the receiver operating characteristic curve (AUC), on datasets commonly used in computer vision: CIFAR-10, CIFAR-100, Fashion MNIST, and Cat vs Dog. Images from one class were treated as inliers, and images from the remaining classes were treated as outliers.
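
The one-class-versus-rest evaluation described above can be sketched with scikit-learn's `roc_auc_score`. The helper name, the toy labels, and the toy anomaly scores are made up for illustration and are not results from the study.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

def one_vs_rest_auc(scores, class_labels, inlier_class):
    """AUC when one class is the inlier and all other classes are outliers.

    scores: anomaly scores, where larger means more anomalous.
    """
    is_outlier = (class_labels != inlier_class).astype(int)
    return roc_auc_score(is_outlier, scores)

# Toy example: class 0 is the inlier class, classes 1 to 3 are outliers.
class_labels = np.array([0, 0, 0, 1, 2, 3])
scores = np.array([0.1, 0.2, 0.6, 0.5, 0.8, 0.9])
auc = one_vs_rest_auc(scores, class_labels, inlier_class=0)
```

In a full evaluation, this would be repeated with each class taking its turn as the inlier class, and the per-class AUCs would be averaged.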

Rotation prediction approaches usually rely on the suboptimal built-in rotation classifier. Replacing that classifier, which is used in the first stage, with a one-class classifier in the second stage of the proposed framework boosts performance remarkably (91.3 AUC, up from 86 AUC). Interestingly, with the classic OC-SVM (one-class support vector machine), the two-stage algorithm outperforms existing works as measured by image-level AUC.

The Google researchers have also proposed a new self-supervised learning algorithm for texture anomaly detection. It follows the same two-stage framework. In the first stage, the model learns deep image representations by being trained to predict whether an image has been altered with CutPaste data augmentation, which randomly cuts a patch from an image and pastes it at a different location in the same image.
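
A minimal NumPy sketch of the cut-and-paste step is shown below. It only covers the rectangular patch copy described above; the function name, the fixed patch size, and the uniform sampling of positions are assumptions, and any further details of the researchers' implementation are not reproduced.

```python
import numpy as np

def cutpaste(image, patch_size=(8, 8), rng=None):
    """Cut a random patch and paste it at a different spot in the same image."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    ph, pw = patch_size
    if ph > h or pw > w or (ph == h and pw == w):
        raise ValueError("patch must be smaller than the image")

    # Source location of the patch.
    src_y = int(rng.integers(0, h - ph + 1))
    src_x = int(rng.integers(0, w - pw + 1))

    # Destination location, resampled until it differs from the source.
    dst_y, dst_x = src_y, src_x
    while (dst_y, dst_x) == (src_y, src_x):
        dst_y = int(rng.integers(0, h - ph + 1))
        dst_x = int(rng.integers(0, w - pw + 1))

    out = image.copy()  # leave the input untouched
    out[dst_y:dst_y + ph, dst_x:dst_x + pw] = image[src_y:src_y + ph, src_x:src_x + pw]
    return out

image = np.arange(32 * 32).reshape(32, 32)
augmented = cutpaste(image, rng=np.random.default_rng(0))
```

A binary classifier trained to tell `image` from `augmented` has to notice local irregularities, which resemble the kind of defects seen in texture anomaly detection.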

The approach thus allows different self-supervised representation learning methods to be applied, enabling state-of-the-art performance across applications of visual one-class classification, from semantic anomaly detection to texture defect detection. The researchers are further developing anomaly detection methods that work with unlabeled data.