Over the years, the word Google has become synonymous with the Internet.

What Google does best is make life easy by providing the most relevant search results. It uses state-of-the-art ranking algorithms (usually built in-house) and brings the best matches to the fore, considering both ‘what was’ and ‘what could have been’.

For data scientists especially, Google has provided information such as the latest stock prices and historical data spanning hundreds of years. Beyond information, Google has contributed to the AI community by open-sourcing its tools and frameworks and by offering inexpensive cloud processing power via TPUs, among many other things.

Now it has built a search engine dedicated to finding datasets.

Google Dataset Search is an attempt to establish an open ecosystem of millions of datasets.

The objective is to surface the most appropriate datasets with as few queries as possible.

Key Challenges

It is very difficult to list all the dataset repositories, even within a single domain such as medicine or trading.

One primary challenge for the search engine is identifying the right datasets. To decide what counts as a dataset, the developers at Google began with the assumption that whenever a data owner uploads some data and calls it a dataset, it IS a dataset, whether it consists of tables, files, images, binary files, or something else.

With this, the first hurdle of distinguishing the right data is addressed.
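
The self-declaration rule is simple enough to express in a few lines. The sketch below assumes metadata arrives as schema.org-style JSON-LD with an `@type` field (an assumption for illustration; the article does not name a format) and accepts anything its publisher labels `Dataset`, regardless of what the payload is.

```python
import json


def is_dataset(record: dict) -> bool:
    """Treat a record as a dataset if its publisher declares it as one.

    Assumes schema.org-style JSON-LD where "@type" is a string or a list.
    The payload format (tables, files, images, binaries) is deliberately ignored.
    """
    declared = record.get("@type", [])
    if isinstance(declared, str):
        declared = [declared]
    return "Dataset" in declared


# Hypothetical crawled records.
records = [
    json.loads('{"@type": "Dataset", "name": "Hourly air quality", "encodingFormat": "text/csv"}'),
    json.loads('{"@type": ["Dataset", "CreativeWork"], "name": "Retina scans", "encodingFormat": "image/png"}'),
    json.loads('{"@type": "Article", "name": "How we measured air quality"}'),
]

print([r["name"] for r in records if is_dataset(r)])
# ['Hourly air quality', 'Retina scans']
```

The CSV table and the image collection both pass because their publishers call them datasets; the article about the data does not.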

Next come metadata searches. Search keywords may match metadata such as titles, time periods, and other descriptive fields rather than the data inside the dataset itself. The quality of this metadata varies, and a lot can go wrong given the scale at which the engine operates.

The format in which metadata is published often differs from the format in which users search for it; dates are a common example.
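
One way to reconcile date formats is to try a list of known layouts and convert whatever parses into a single canonical date. This is a minimal sketch; the list of formats is a hypothetical sample, not the set Google handles.

```python
from datetime import date, datetime
from typing import Optional

# Hypothetical date layouts seen in published metadata, tried in order.
KNOWN_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%d %b %Y", "%Y%m%d"]


def normalize_date(raw: str) -> Optional[date]:
    """Return the first successful parse as a date, or None if no format matches."""
    text = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


for raw in ["2018-03-04", "04/03/2018", "March 4, 2018", "4 Mar 2018", "20180304"]:
    print(raw, "->", normalize_date(raw))  # all map to 2018-03-04

print(normalize_date("sometime in 2018"))  # None
```

The order of `KNOWN_FORMATS` matters: a string like `04/03/2018` is ambiguous between day-first and month-first, and format matching alone cannot resolve that.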

Two searches can be nearly identical, yet whether a dataset is found can hinge on something as trivial as a space.
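
A common defence is to normalize strings before comparing them. The sketch below folds Unicode compatibility forms, case, and whitespace, and adds a hypothetical `compact_key` that ignores spaces entirely so that "Air Quality" and "airquality" compare equal. It illustrates the general idea, not Google's matching logic.

```python
import re
import unicodedata


def normalize_text(text: str) -> str:
    """Fold Unicode compatibility forms, case and whitespace so near-identical strings compare equal."""
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space -> plain space
    text = text.casefold()
    text = re.sub(r"\s+", " ", text)  # collapse runs of whitespace into one space
    return text.strip()


def compact_key(text: str) -> str:
    """Hypothetical fallback key that ignores spaces altogether."""
    return normalize_text(text).replace(" ", "")


print(normalize_text("  Air\u00a0Quality  Index ") == normalize_text("air quality index"))  # True
print(compact_key("Air Quality") == compact_key("airquality"))  # True
```

Dropping spaces entirely is aggressive and can merge unrelated terms, so a system would typically use it only as a secondary match.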

Many publishers attach metadata to each dataset in their search-result listings rather than to the dataset's profile page. Because the same dataset can appear in many listings, the crawler can pick up a large number of copies of the metadata for that one dataset.
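
Copies crawled from different listing pages can be collapsed if they point at the same dataset. This minimal sketch assumes each copy carries a hypothetical `url` field identifying the dataset and keeps the most complete copy; real pages may not expose such a stable identifier.

```python
from collections import defaultdict


def collapse_copies(copies):
    """Keep one metadata record per dataset URL.

    Each copy is a dict with a hypothetical "url" (the dataset it describes)
    and "found_on" (the page it was crawled from). When several copies exist,
    keep the one with the most non-empty fields.
    """
    by_dataset = defaultdict(list)
    for copy in copies:
        by_dataset[copy["url"]].append(copy)
    return {
        url: max(group, key=lambda c: sum(1 for v in c.values() if v))
        for url, group in by_dataset.items()
    }


copies = [
    {"url": "https://example.org/d/42", "found_on": "https://example.org/search?page=1",
     "name": "Rainfall 2010-2020", "description": ""},
    {"url": "https://example.org/d/42", "found_on": "https://example.org/search?page=7",
     "name": "Rainfall 2010-2020", "description": "Daily rainfall totals"},
    {"url": "https://example.org/d/43", "found_on": "https://example.org/search?page=1",
     "name": "River levels", "description": "Hourly gauge readings"},
]

result = collapse_copies(copies)
print(len(result))  # 2
print(result["https://example.org/d/42"]["found_on"])  # https://example.org/search?page=7
```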

Copies of the metadata description of the same dataset in different repositories are treated as replicas. Grouping these replicas into a cluster gives users more options for where to access the same data.
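
Clustering replicas can be framed as linking records that share an identifier. This sketch uses union-find over two hypothetical matching keys, a DOI and a whitespace- and case-normalized title, so that transitive matches land in one cluster. Title matching is crude and can produce false positives; the keys Google actually uses are not described in the article.

```python
import re


def _norm_title(title: str) -> str:
    return re.sub(r"\s+", " ", title.casefold()).strip()


def cluster_replicas(records):
    """Group records that share a DOI or a normalized title (hypothetical keys).

    Union-find makes matching transitive: if A~B and B~C, then A, B and C
    end up in one cluster. Returns sorted clusters of record ids.
    """
    parent = {r["id"]: r["id"] for r in records}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    first_seen = {}  # matching key -> id of the first record carrying it
    for r in records:
        keys = [("title", _norm_title(r["title"]))]
        if r.get("doi"):
            keys.append(("doi", r["doi"].lower()))
        for key in keys:
            if key in first_seen:
                union(r["id"], first_seen[key])
            else:
                first_seen[key] = r["id"]

    clusters = {}
    for r in records:
        clusters.setdefault(find(r["id"]), []).append(r["id"])
    return sorted(sorted(c) for c in clusters.values())


# Hypothetical records; the DOI is made up.
records = [
    {"id": "zenodo:1", "title": "Global Sea Ice Extent", "doi": "10.9999/sea-ice"},
    {"id": "figshare:9", "title": "Sea ice extent (monthly)", "doi": "10.9999/SEA-ICE"},
    {"id": "kaggle:5", "title": "global sea ice  extent"},
    {"id": "kaggle:6", "title": "Coral bleaching events"},
]

print(cluster_replicas(records))
# [['figshare:9', 'kaggle:5', 'zenodo:1'], ['kaggle:6']]
```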

There is also the problem of stale links surfacing in search results. To steer users away from these links, the team at Google removes, on average, 3% of the datasets from its index.
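
A simple way to prune stale entries is to drop any dataset whose page has not resolved recently. In this sketch the index maps dataset ids to the date of their last successful crawl, and the 30-day threshold is an arbitrary illustration, not Google's actual policy.

```python
from datetime import date, timedelta


def prune_stale(index, today, max_age_days=30):
    """Split an index into (kept, removed) by last successful crawl date.

    `index` maps dataset ids to the date their page last resolved.
    The default threshold is a hypothetical value chosen for illustration.
    """
    cutoff = today - timedelta(days=max_age_days)
    kept = {k: v for k, v in index.items() if v >= cutoff}
    removed = sorted(k for k, v in index.items() if v < cutoff)
    return kept, removed


index = {
    "a": date(2019, 1, 20),
    "b": date(2018, 11, 2),
    "c": date(2019, 1, 1),
}
kept, removed = prune_stale(index, today=date(2019, 1, 25))
print(removed, f"{len(removed) / len(index):.0%} of the index")  # ['b'] 33% of the index
```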

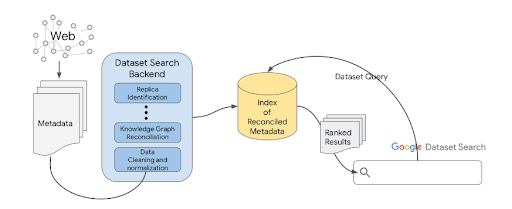

Source: Google

Google's crawler (user agents) collects metadata from the Web; the Dataset Search backend normalizes and reconciles it; the reconciled metadata is then indexed, and results are ranked for user queries.
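
The four stages can be illustrated with a toy pipeline. This sketch assumes publishers embed schema.org `Dataset` markup as JSON-LD in their pages (the vocabulary Google documents for dataset structured data). It reconciles records by a trailing-slash-normalized URL, builds an inverted index, and ranks by the number of matched query terms. It is a simplification for illustration, not Google's implementation.

```python
import json
import re
from collections import defaultdict
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Crawl step: collect the contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def crawl(pages):
    records = []
    for html in pages.values():
        parser = JsonLdExtractor()
        parser.feed(html)
        parser.close()
        records += [d for d in map(json.loads, parser.blocks) if d.get("@type") == "Dataset"]
    return records


def normalize(record):
    # Strip trailing slashes from URLs and collapse whitespace in text fields.
    return {
        "url": record.get("url", "").rstrip("/"),
        "name": " ".join(record.get("name", "").split()),
        "description": " ".join(record.get("description", "").split()),
    }


def reconcile(records):
    # Merge copies that describe the same URL, keeping the longest value per field.
    merged = {}
    for r in records:
        cur = merged.setdefault(r["url"], {"url": r["url"], "name": "", "description": ""})
        for field in ("name", "description"):
            if len(r[field]) > len(cur[field]):
                cur[field] = r[field]
    return list(merged.values())


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(datasets):
    index = defaultdict(set)  # token -> positions of datasets containing it
    for i, d in enumerate(datasets):
        for token in tokenize(d["name"] + " " + d["description"]):
            index[token].add(i)
    return index


def search(query, datasets, index):
    scores = defaultdict(int)
    for token in set(tokenize(query)):
        for i in index.get(token, ()):
            scores[i] += 1
    ranked = sorted(scores, key=lambda i: (-scores[i], datasets[i]["name"]))
    return [datasets[i]["name"] for i in ranked]


pages = {  # hypothetical crawled pages
    "https://example.org/d/1": '<script type="application/ld+json">{"@type": "Dataset", "url": "https://example.org/d/1/", "name": "Daily  rainfall", "description": "Rainfall in mm per station"}</script>',
    "https://example.org/search": '<script type="application/ld+json">{"@type": "Dataset", "url": "https://example.org/d/1", "name": "Daily rainfall"}</script><script type="application/ld+json">{"@type": "Dataset", "url": "https://example.org/d/2", "name": "River levels", "description": "Hourly river gauge readings"}</script>',
}

datasets = reconcile([normalize(r) for r in crawl(pages)])
index = build_index(datasets)
print(search("river rainfall", datasets, index))  # ['Daily rainfall', 'River levels']
```

Note how the listing page contributes a second, thinner copy of the rainfall dataset, which reconciliation folds into one entry.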

To improve dataset ranking, knowing which results users choose for a given query is highly valuable. The team also plans to improve coverage by encouraging publishers to add explicit metadata and by using existing metadata to train methods that extract new metadata.
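
The article does not say how user behaviour would feed into ranking. One common approach is to blend a base relevance score with a smoothed click-through rate, sketched below. The weights, prior, and click logs are all hypothetical.

```python
def rerank(results, clicks, impressions, weight=0.5, prior_ctr=0.1, prior_strength=10):
    """Blend a base relevance score with a smoothed click-through rate.

    results: list of (dataset_id, base_score) with base_score in [0, 1]
    clicks / impressions: per-dataset counts for this query (hypothetical logs)
    The prior pulls datasets with few impressions towards prior_ctr.
    """
    def smoothed_ctr(d):
        c = clicks.get(d, 0)
        n = impressions.get(d, 0)
        return (c + prior_ctr * prior_strength) / (n + prior_strength)

    scored = [(d, (1 - weight) * s + weight * smoothed_ctr(d)) for d, s in results]
    return [d for d, _ in sorted(scored, key=lambda x: x[1], reverse=True)]


results = [("rainfall-a", 0.80), ("rainfall-b", 0.75), ("rainfall-c", 0.60)]
clicks = {"rainfall-a": 2, "rainfall-b": 40}
impressions = {"rainfall-a": 100, "rainfall-b": 100, "rainfall-c": 3}

print(rerank(results, clicks, impressions))  # ['rainfall-b', 'rainfall-a', 'rainfall-c']
```

Smoothing keeps a rarely shown dataset from jumping to the top on a single lucky click.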

Future Direction

Google’s open ecosystem of datasets looks promising for encouraging and building a reliable community of providers and publishers. Since data science is interdisciplinary, a statistician or a journalist need not feel shut out of data from other domains.

The quality of metadata remains a challenge, which the team aims to address gradually by linking existing metadata to resources such as academic publications.
