The lack of machine learning datasets that include people with disabilities has proved to be a major roadblock to developing technology customised to their needs. This gap is often referred to as a ‘data desert’. Organisations building technology products and services commonly work with data at an aggregate level, a practice that can lead to stereotyping and exclusion.

Earlier this week, in a lengthy blog post, Microsoft outlined its roadmap for tackling this data desert, which has become a major hindrance to making artificial intelligence accessible to people with disabilities. The post describes several collaborations aimed at ‘shrinking the data desert’, discussed below.

Seeing AI

Microsoft has introduced the Seeing AI app for iOS. Designed specifically for people with visual impairments, the app uses the device camera to identify people and objects and then describes them aloud. The company believes the app could be extended to recognise objects specific to an individual user. If realised, this would be among the first tools able to recognise personal objects for people with visual impairments.

ORBIT

Object Recognition for Blind Image Training (ORBIT) was recently launched by City, University of London, a Microsoft AI for Accessibility grantee. The research programme aims to build large datasets with the direct involvement of blind people. Unlike previous efforts, which built datasets from still images, ORBIT is collecting videos in order to capture a richer set of information. Visually impaired participants record videos of the things they use most in their daily lives, and these videos will be combined into a large dataset of different objects. The dataset will then be used to develop AI algorithms for disability-inclusive apps for blind and visually impaired people around the world, and it will eventually be made public.
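Recognising a user’s own objects from a handful of their videos is often framed as a few-shot learning problem. The sketch below illustrates one such technique, a nearest-prototype classifier, purely as an assumption for explanation; it is not ORBIT’s published method. The frame embeddings are hypothetical placeholder vectors (in a real system they would come from a pretrained image model), and the object names are invented.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Average a list of equal-length embedding vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def euclidean(a: Vector, b: Vector) -> float:
    """Straight-line distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build_prototypes(frames_by_object: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """One prototype per personal object: the mean embedding of its video frames."""
    return {name: mean_vector(frames) for name, frames in frames_by_object.items()}


def recognise(query: Vector, prototypes: Dict[str, List[float]]) -> str:
    """Label a new camera frame with the object whose prototype is nearest."""
    return min(prototypes, key=lambda name: euclidean(query, prototypes[name]))


# Hypothetical 3-D embeddings of frames taken from a user's videos of two personal objects.
user_videos = {
    "my_keys": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [1.0, 0.0, 0.1]],
    "my_mug": [[0.1, 0.9, 0.3], [0.0, 1.0, 0.2], [0.2, 0.8, 0.4]],
}
prototypes = build_prototypes(user_videos)
print(recognise([0.85, 0.15, 0.05], prototypes))  # my_keys
```

Averaging many frames per object is one reason video can help here: each clip contributes several views of the same item, which smooths out individual blurry or badly framed shots.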

As part of the ORBIT project, a curriculum will also be developed to involve visually impaired people in the process of AI development.

The ORBIT team has also collaborated with Project Tokyo, a partnership programme announced by Microsoft in 2016. Project Tokyo was behind a modified version of the HoloLens augmented reality headset, running algorithms that give the wearer information about people in their immediate surroundings.

VizWiz Datasets

Another initiative within Microsoft’s AI for Accessibility programme is the development of the VizWiz datasets by Danna Gurari, an AI for Accessibility grantee. These datasets originate from blind people and are intended for developing algorithms for assistive technologies. They are built from data submitted by users of mobile applications (developed by researchers at Carnegie Mellon University), who took pictures of commonly used objects and recorded questions and other information about them.

Gurari and her team take the original VizWiz data and make it usable for training machine learning algorithms. This process includes removing inappropriate images, transcribing audio questions into text, sourcing labels, and hiding personal information. The resulting datasets are used to train, validate, and test image captioning algorithms. The captioning dataset currently contains over 39,000 images taken by blind participants, each with five captions.
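The curation steps described above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions: the record fields, the `flagged_inappropriate` flag and the 70/10/20 split are hypothetical, not the real VizWiz file format or split sizes, and the image-level redaction of personal information is outside the scope of the sketch. Only the five-captions-per-image count comes from the text.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CaptionRecord:
    """Hypothetical record: one photo from a blind user plus its crowdsourced captions."""
    image_id: str
    question_text: str  # the user's spoken question after transcription to text
    captions: List[str] = field(default_factory=list)
    flagged_inappropriate: bool = False


def curate(records: List[CaptionRecord], captions_per_image: int = 5) -> List[CaptionRecord]:
    """Drop flagged images and keep only images with the expected number of captions."""
    return [
        r for r in records
        if not r.flagged_inappropriate and len(r.captions) == captions_per_image
    ]


def assign_split(image_id: str, val_pct: int = 10, test_pct: int = 20) -> str:
    """Deterministically assign an image to train/val/test by hashing its id,
    so the same image always lands in the same split across runs."""
    bucket = int(hashlib.sha256(image_id.encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "val"
    return "train"


def split_dataset(records: List[CaptionRecord]) -> Dict[str, List[CaptionRecord]]:
    """Group curated records into train, validation and test splits."""
    splits: Dict[str, List[CaptionRecord]] = {"train": [], "val": [], "test": []}
    for r in records:
        splits[assign_split(r.image_id)].append(r)
    return splits


# Usage with invented example records.
records = [
    CaptionRecord("img_001", "What is in this can?", ["A can of soup."] * 5),
    CaptionRecord("img_002", "What colour is this shirt?", ["A blue shirt."] * 5,
                  flagged_inappropriate=True),
    CaptionRecord("img_003", "What does this label say?", ["A medicine bottle."] * 3),
]
clean = curate(records)
print([r.image_id for r in clean])  # ['img_001']
splits = split_dataset(clean)
```

Hashing the image id, rather than shuffling randomly, keeps each image in the same split every time the pipeline is rerun, which avoids test images leaking into training.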

Project Insight

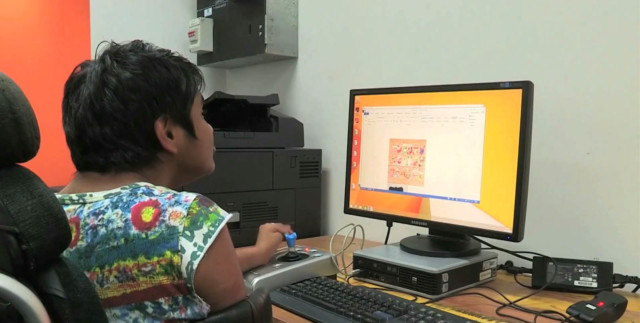

Born out of a collaboration between Microsoft’s Health Next Enable team and Team Gleason, Project Insight uses deep neural networks, machine learning algorithms, and inclusive design to develop ‘hardware-agnostic gaze trackers’ for accessible technology. The goal is to track where a person is looking on a screen using only data from the front-facing camera found on most devices. Conventional computer vision and ML datasets rarely include faces with breathing masks, ptosis (drooping eyelids), epiphora (excessive tearing), or dry eyes, conditions that people with ALS may have, so gaze trackers trained on them can struggle with these users. To close this gap, the team is gathering images of people with ALS looking at computer screens, to train AI models that broaden their options for communication input.
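Camera-based gaze trackers usually need some mapping from what the camera sees of the eyes to a point on the screen. The sketch below shows one common and much simpler technique, a calibration step that fits a straight line per axis from eye features to pixel coordinates while the user looks at known targets. It is not Project Insight’s method, which the project describes as using deep neural networks; the eye-feature values, the 1920×1080 screen and the five calibration dots are all hypothetical.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of ys ≈ slope * xs + intercept.
    Raises ZeroDivisionError if all xs are equal (calibration points must vary)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


class GazeCalibration:
    """Hypothetical per-axis linear mapping from eye features to screen pixels."""

    def __init__(self, features: List[Tuple[float, float]],
                 targets: List[Tuple[float, float]]) -> None:
        # features: (horizontal, vertical) eye-feature values measured from the camera
        # targets: (x, y) pixel positions of the calibration dots the user looked at
        self.x_fit = fit_line([f[0] for f in features], [t[0] for t in targets])
        self.y_fit = fit_line([f[1] for f in features], [t[1] for t in targets])

    def predict(self, feature: Tuple[float, float]) -> Tuple[float, float]:
        """Estimate the on-screen gaze point for a new eye-feature measurement."""
        (ax, bx), (ay, by) = self.x_fit, self.y_fit
        return ax * feature[0] + bx, ay * feature[1] + by


# Hypothetical calibration: four corners and the centre of a 1920x1080 screen.
features = [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0), (1.0, 1.0), (0.0, 0.0)]
targets = [(0.0, 0.0), (1920.0, 0.0), (0.0, 1080.0), (1920.0, 1080.0), (960.0, 540.0)]
cal = GazeCalibration(features, targets)
print(cal.predict((0.5, -0.5)))  # (1440.0, 270.0)
```

A fixed linear mapping like this assumes the eye features behave the same way for every user, which is exactly the assumption that breaks down for faces the training data never covered; that is the motivation for gathering images of people with ALS.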

Wrapping Up

Microsoft announced the AI for Accessibility initiative in May 2018. At the time, the company pledged $25 million for AI projects by universities, philanthropic organisations, and others developing technology for people with disabilities.

For a long time, AI-based technology has been built for the general population and, as a result, has often excluded people with disabilities. A rigid definition of ‘normal’ has left a considerable part of society out of the benefits of AI. Initiatives like those described above will, hopefully, change this for the better.