While convolutional neural networks (CNNs) dominate the field of object recognition, they can easily be deceived by small, deliberately crafted image perturbations, known as adversarial attacks. Such attacks can cause computer vision models to fail and leave them susceptible to cyberattacks. CNNs’ vulnerability to image perturbations has become a pressing concern for the machine learning community, as researchers work towards building computer vision models that generalise across images the way humans do.

To address this vulnerability, researchers from MIT, Harvard University and MIT-IBM Watson AI Lab have proposed VOneNets, a new class of hybrid CNN vision models, in a recent paper. According to the researchers, this novel architecture leverages “biologically-constrained neural networks along with deep learning techniques” to make models more robust against white-box adversarial attacks.

How Does It Work?

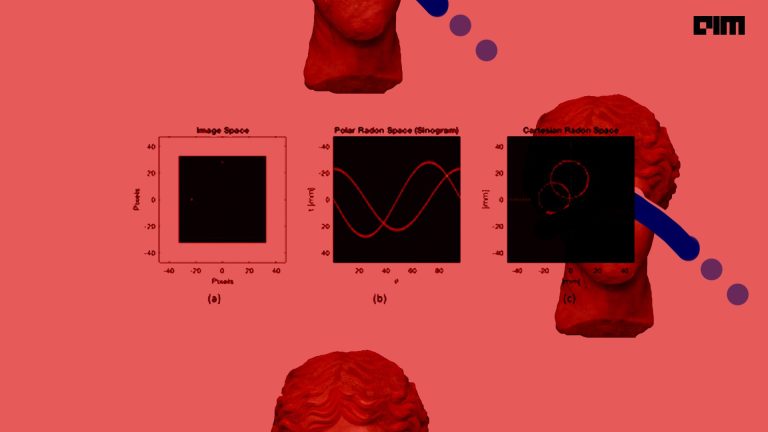

Explaining the process, the researchers described VOneNets as a new class of CNNs in which each model “contains a biologically-constrained neural network that simulates the primary visual cortex of primates.” In a VOneNet, the first few layers of a standard CNN are replaced with the VOneBlock, a front-end that models the primary visual cortex (V1) of primates.

The susceptibility of CNNs to small image perturbations suggests that these networks rely on visual features that primates do not use. Inspired by findings of a high correlation between a CNN’s explained variance of the brain’s primary visual cortex (V1) and its robustness to white-box attacks, the research team developed the VOneNet architecture.

In fact, the V1 front-end, with its “fixed-weight, simple and complex cell nonlinearities, and neuronal stochasticity,” is what characterises VOneNet. It can also be easily adapted to different CNN-based architectures such as ResNet, CORnet-S and AlexNet.

Comparison of the accuracy of these architectures with that of their VOneNet versions.

According to the researchers, VOneNet was not developed to compete with state-of-the-art data-fitting models of V1 that have thousands of parameters. Instead, the team used available empirical distributions to constrain a Gabor filter bank model, generating a neuronal space that mimics primate V1. The results showed that simulating a V1 front-end can significantly improve a CNN’s robustness to image perturbations.
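To make the idea of a fixed-weight Gabor front-end more concrete, here is a minimal sketch in plain Python. It is not the researchers' implementation: the function names, the kernel size, the frequency and width values, and the Gaussian approximation of Poisson-like noise are all assumptions chosen for illustration, whereas the paper constrains its filter bank with empirical distributions. The sketch builds one Gabor filter pair, applies a simple-cell nonlinearity (rectification) and a complex-cell nonlinearity (quadrature energy), and optionally adds response-dependent noise.

```python
import math
import random


def gabor_kernel(size, theta, freq, sigma, phase):
    """Build a size x size Gabor kernel (size assumed odd). Weights are fixed, not learned."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the grating varies along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + yr * yr) / (2.0 * sigma * sigma))
            carrier = math.cos(2.0 * math.pi * freq * xr + phase)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def dot(patch, kernel):
    """Sum of element-wise products of two equally sized 2D lists."""
    return sum(p * k for p_row, k_row in zip(patch, kernel)
               for p, k in zip(p_row, k_row))


def v1_like_responses(patch, theta, freq=0.2, sigma=2.0, rng=None):
    """Return (simple, complex) responses for one orientation (illustrative values)."""
    size = len(patch)
    even = dot(patch, gabor_kernel(size, theta, freq, sigma, 0.0))
    odd = dot(patch, gabor_kernel(size, theta, freq, sigma, math.pi / 2.0))
    simple = max(0.0, even)                           # simple cell: rectification
    complex_cell = math.sqrt(even * even + odd * odd)  # complex cell: quadrature energy
    if rng is not None:
        # Assumed noise model: Gaussian with variance equal to the mean response.
        simple = max(0.0, rng.gauss(simple, math.sqrt(simple)))
        complex_cell = max(0.0, rng.gauss(complex_cell, math.sqrt(complex_cell)))
    return simple, complex_cell


def grating_patch(size, freq):
    """A square patch whose intensity varies only along the x axis."""
    half = size // 2
    return [[math.cos(2.0 * math.pi * freq * x) for x in range(-half, half + 1)]
            for _ in range(size)]


patch = grating_patch(9, 0.2)
matched = v1_like_responses(patch, theta=0.0)
orthogonal = v1_like_responses(patch, theta=math.pi / 2.0)
noisy = v1_like_responses(patch, theta=0.0, rng=random.Random(0))
```

In this toy setup, the filter whose orientation matches the grating responds far more strongly than the orthogonal one, which is the orientation selectivity a Gabor bank is meant to provide. A full front-end would apply many such filters convolutionally across the image before handing the result to the rest of the CNN.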

To evaluate robustness to white-box attacks, the researchers focused on the ResNet50 architecture and compared VOneResNet50 with two training-based defence methods: adversarial training with a constraint, and adversarial noise with Stylised ImageNet training.
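For readers unfamiliar with white-box attacks, the sketch below shows the general idea on a toy logistic-regression classifier: the attacker can read the model's weights, so it follows the gradient of the loss with respect to the input while keeping the perturbation inside a small L-infinity budget. This is a projected-gradient-style attack written for illustration only. The article does not specify which attack or budget the researchers used, and the model, the function names and every number here are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def input_gradient(weights, bias, x, label):
    """Gradient of the cross-entropy loss with respect to the input x."""
    p = predict_proba(weights, bias, x)
    return [(p - label) * w for w in weights]


def sign(value):
    return (value > 0) - (value < 0)


def linf_attack(weights, bias, x, label, eps, step, iters):
    """Iteratively increase the loss while staying within eps of x per coordinate."""
    x_adv = list(x)
    for _ in range(iters):
        grad = input_gradient(weights, bias, x_adv, label)
        for i, g in enumerate(grad):
            moved = x_adv[i] + step * sign(g)  # ascend the loss
            # Project back into the allowed box around the original input.
            x_adv[i] = min(max(moved, x[i] - eps), x[i] + eps)
    return x_adv


# Hypothetical model and input, chosen only to illustrate the mechanics.
weights, bias = [2.0, -1.0], 0.0
x_clean, label = [0.3, 0.1], 1
x_adv = linf_attack(weights, bias, x_clean, label, eps=0.25, step=0.1, iters=5)
clean_is_correct = predict_proba(weights, bias, x_clean) > 0.5
adv_is_correct = predict_proba(weights, bias, x_adv) > 0.5
```

Here the clean input is classified correctly, while the perturbed input, which differs by at most 0.25 in each coordinate, is not. A defence is considered more robust when a larger budget is needed to cause such a flip.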

Since white-box adversarial attacks cover only a small aspect of model robustness, the team also used a larger panel of image perturbations. To evaluate model performance on corruptions, the team used the ImageNet-C dataset, which comprises 15 corruption types, each at five levels of severity, grouped into four categories: noise, blur, weather, and digital. Despite its simplicity, VOneResNet50 outperformed all the models on different perturbation types and showed substantial improvements for all the white-box attack constraints.
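The structure of such a corruption benchmark can be sketched as a small aggregation routine. The code below is one plausible way to summarise results over 15 corruption types, five severities and four categories. The averaging scheme, the function name and the identifier spellings are assumptions for illustration, since the article does not describe how the researchers computed their means.

```python
from statistics import mean

# The 15 ImageNet-C corruption types, grouped into the four categories.
# Identifier spellings are illustrative.
CATEGORIES = {
    "noise": ["gaussian_noise", "shot_noise", "impulse_noise"],
    "blur": ["defocus_blur", "glass_blur", "motion_blur", "zoom_blur"],
    "weather": ["snow", "frost", "fog", "brightness"],
    "digital": ["contrast", "elastic_transform", "pixelate", "jpeg_compression"],
}


def summarise_corruption_accuracy(accuracy):
    """Average accuracy per category and overall.

    `accuracy` maps each corruption type to a list of five accuracies,
    one per severity level.
    """
    per_type = {}
    for names in CATEGORIES.values():
        for name in names:
            by_severity = accuracy[name]
            if len(by_severity) != 5:
                raise ValueError(f"{name}: expected 5 severity levels")
            per_type[name] = mean(by_severity)  # average over severities
    per_category = {
        category: mean([per_type[name] for name in names])
        for category, names in CATEGORIES.items()
    }
    overall = mean(list(per_type.values()))  # every corruption type weighted equally
    return per_category, overall
```

Averaging per type first keeps each corruption equally weighted in the overall figure, so the blur and weather categories, which contain four types each, do not dominate the three-type noise category beyond their share of the 15.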

“These results are particularly remarkable since VOneResNet50 was not optimised for robustness and did not benefit from any computationally expensive training procedure like the other defence methods,” stated the researchers. “When compared to the base ResNet50, which has an identical training procedure, VOneResNet50 improves 18% on perturbation mean and 10.7% on the overall mean.”

Wrapping Up

In conclusion, experiments with VOneNet show that neuroscience still has a lot of untapped potential for solving critical AI problems. The model requires “less training to achieve human intelligence,” which could encourage the development of more neuroscience-inspired machine learning algorithms.

The work holds a lot of promise in identifying malicious and abusive uses of computer vision, particularly in the form of discrimination or invasion of privacy. “As computer vision models are deployed in real-world situations, they must behave with the same level of stability as their human counterparts,” concluded the researchers.

Read the paper here.