Recently, researchers from DeepMind, UC Berkeley and the University of Oxford introduced a knowledge distillation strategy for injecting syntactic biases into BERT pre-training, and evaluated it on a range of natural language understanding benchmarks.

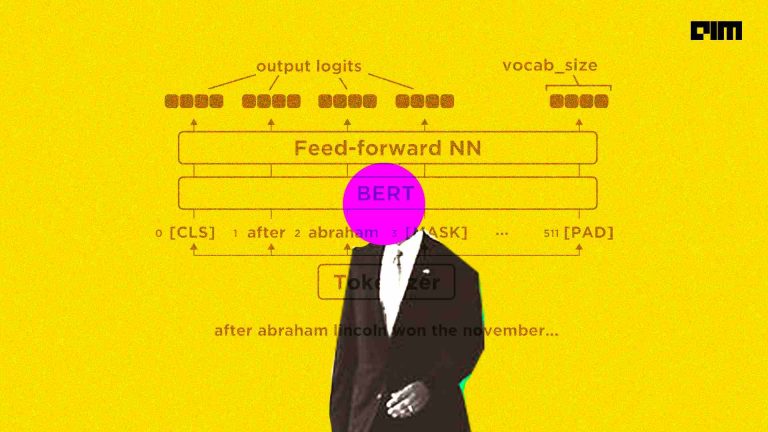

Bidirectional Encoder Representations from Transformers, or BERT, is one of the most popular neural network-based techniques for pre-training natural language processing (NLP) models. Today, when we search for anything on Google, BERT helps the search engine answer our queries.

Google has been using this model to make search better and improve featured snippets since last year. However, developers from the tech giant stated that this model has some flaws and does not always provide the exact answer we are looking for.

Large-scale textual representation learners trained on massive amounts of data have achieved notable success on downstream tasks as well as on challenging tests of syntactic competence, such as grammaticality judgment. However, open questions remain, such as whether scalable learners like BERT can become fully proficient in the syntax of natural language through data scale alone, or whether they still benefit from more explicit syntactic biases.

The researchers worked towards answering these questions by devising a new pretraining strategy that injects syntactic biases into a BERT learner and works well at scale. Since BERT models masked words in bidirectional context, the researchers proposed to distil the approximate marginal distribution over words in context from a syntactic language model, which acts as the teacher.
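As a rough illustration of what distilling a word distribution means, the sketch below computes a generic word-level distillation loss for a single masked position. It is a minimal sketch under stated assumptions, not the paper's implementation: the function names, the toy four-word vocabulary and the interpolation weight `alpha` are all hypothetical, and the exact objective used by the researchers may differ.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_probs, true_index, alpha=0.5):
    """Cross-entropy between an interpolated target and the student.

    The target mixes the teacher's distribution over the vocabulary with
    the one-hot label of the true masked word. `alpha` is an assumed
    hyperparameter: alpha=0 recovers the usual masked-LM loss, alpha=1
    trains purely on the teacher's distribution.
    """
    student_probs = softmax(student_logits)
    target = [alpha * t for t in teacher_probs]
    target[true_index] += 1.0 - alpha
    return -sum(t * math.log(p)
                for t, p in zip(target, student_probs) if t > 0.0)


# Toy example with a hypothetical 4-word vocabulary.
student_logits = [2.0, 0.5, 0.1, -1.0]   # student scores for the masked slot
teacher_probs = [0.6, 0.3, 0.05, 0.05]   # teacher's distribution (made up)
print(distillation_loss(student_logits, teacher_probs, true_index=0))
```

The design choice worth noting is that the student is rewarded for matching the teacher's whole distribution, so plausible alternatives to the true word still receive some probability mass rather than being treated as plain errors.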

Behind the Distillation Strategy

The structure-distilled BERT model differs from the standard BERT only in its pretraining objective, and hence it retains the scalability afforded by Transformer architectures as well as specialised hardware like TPUs. The approach also remains fully compatible with standard BERT pipelines: the structure-distilled BERT models can simply be loaded as pre-trained BERT weights and fine-tuned in exactly the same fashion.

The structure-distilled BERTs have been evaluated on six diverse structured prediction tasks that encompass phrase-structure parsing (in-domain and out-of-domain), dependency parsing, semantic role labelling, coreference resolution, and a CCG super-tagging probe, along with the GLUE benchmark.

According to the researchers, the research makes the following contributions:

- It showcases the benefits of syntactic biases, even for representation learners that leverage large amounts of data

- It helps to better understand where syntactic biases are the most helpful

- It makes a case for designing approaches that not only work well at scale but also integrate stronger notions of syntactic biases

Limitations

According to the researchers, this approach has two limitations:

- Firstly, the researchers assumed the existence of decent-quality “silver grade” phrase-structure trees to train the Recurrent Neural Network Grammars (RNNG). Because accurate phrase-structure parsers exist for English, this assumption holds true for the English language, but it does not necessarily hold for other natural languages.

- Secondly, pretraining the BERT student in the naive implementation is about half as fast on TPUs as the baseline due to an I/O bottleneck. This overhead only applies at pretraining and can be reduced through parallelisation.

Wrapping Up

The researchers used Recurrent Neural Network Grammars (RNNG) as the teacher model to train the structure-distilled BERT models. An RNNG is a probabilistic model of phrase-structure trees that can be trained generatively and used as a language model (LM) or as a parser.
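To make the "language model or parser" distinction concrete, the standard generative RNNG formulation can be written as below. The notation is ours and is only a sketch: $x$ is a sentence, $y$ a phrase-structure tree over it, $a_1, \dots, a_T$ the sequence of tree-building and word-generating actions that produces the pair, and $\mathcal{Y}(x)$ the set of possible trees for $x$.

```latex
% Joint probability of a sentence and its tree, factored over actions
p(x, y) = \prod_{t=1}^{T} p(a_t \mid a_{<t})

% Used as a language model: marginalise over all trees of the sentence
p(x) = \sum_{y \in \mathcal{Y}(x)} p(x, y)

% Used as a parser: pick the most probable tree for the sentence
\hat{y} = \operatorname*{arg\,max}_{y \in \mathcal{Y}(x)} p(x, y)
```

In practice the sum and the maximisation run over an exponentially large set of trees, so both are typically approximated rather than computed exactly.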

The structure-distilled BERT models outperformed the baseline on a diverse set of six structured prediction tasks, reducing relative error by 2-21%. The researchers achieved this outcome by pretraining the BERT student with the RNNG teacher model.
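For readers unfamiliar with the metric, relative error reduction measures how much of the baseline's remaining error a new model removes. The sketch below shows the arithmetic; the function name is hypothetical, and the scores in the example are made-up numbers for illustration, not results from the paper.

```python
def relative_error_reduction(baseline_score, new_score, max_score=100.0):
    """Fraction of the baseline's error that the new model eliminates.

    Scores are accuracy-style metrics where `max_score` is a perfect
    result, so error = max_score - score.
    """
    baseline_error = max_score - baseline_score
    new_error = max_score - new_score
    if baseline_error <= 0:
        raise ValueError("baseline has no error left to reduce")
    return (baseline_error - new_error) / baseline_error


# Hypothetical scores: a one-point gain from 90.0 to 91.0 removes
# one tenth of the baseline's 10 points of error.
print(f"{relative_error_reduction(90.0, 91.0):.1%}")
```

This is why a seemingly small absolute gain on an already strong baseline can correspond to a sizeable relative error reduction.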

The findings of this research suggest that syntactic inductive biases are advantageous for a diverse array of structured prediction tasks, including tasks that are not explicitly syntactic in nature. Moreover, these biases help improve fine-tuning sample efficiency on downstream tasks.
