Pandas is one of the most popular libraries in Python. Its data manipulation and storage options have made it a go-to choice for Kaggle competitions. Pandas DataFrames have more than 280 methods and more than 40 APIs, enough to serve almost all the needs of a data scientist. Python with pandas is in use in a wide variety of academic and commercial domains, including finance, neuroscience, economics, statistics, advertising, web analytics, and more.

But pandas can get clumsy when dealing with large datasets, such as those in genomics. In practice, the data is first trimmed with big data tools and only then analyzed in pandas.

So a few data scientists at UC Berkeley came up with a new library, Modin, a multi-process DataFrame library with an API identical to pandas.

What Gives Modin The Edge

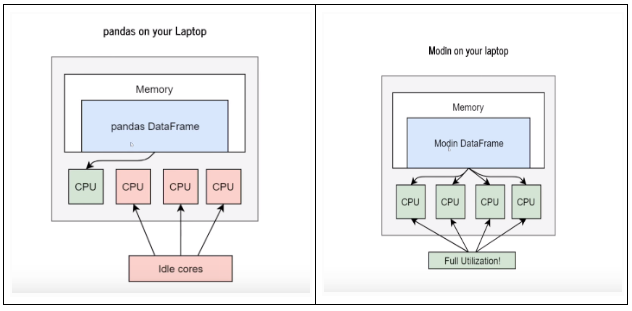

In pandas, a computation can use only one core at a time, but Modin enables the user to use all of the CPU cores on the machine.

Unlike other parallel DataFrame systems, Modin is an extremely light-weight, robust DataFrame. It provides speed-ups of up to 4x on devices with 4 physical cores.
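To make the "all cores" idea concrete, here is a minimal sketch using only the Python standard library. It is not how Modin is implemented; the helper names `split_into_chunks` and `parallel_sum` are hypothetical and exist only for this illustration. The data is cut into one chunk per worker, each worker sums its chunk, and the partial sums are combined.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional element each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def parallel_sum(values, n_workers=None, executor_cls=ProcessPoolExecutor):
    """Sum values by giving each worker one chunk, then combining partial sums.

    executor_cls is an illustrative knob: the default uses separate processes
    (and so several cores); any concurrent.futures executor class can be used.
    """
    n_workers = n_workers or os.cpu_count() or 1
    chunks = split_into_chunks(values, n_workers)
    with executor_cls(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)
```

When calling `parallel_sum` with the default process pool from a script, place the call under `if __name__ == "__main__":`, since some platforms start worker processes by re-importing the main module.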

Modin uses Ray to provide an effortless way to speed up pandas notebooks, scripts, and libraries. Unlike other distributed DataFrame libraries, Modin provides seamless integration and compatibility with existing pandas code.

In tests of read operations such as read_csv, Modin shows large gains by efficiently distributing the work across the entire machine.
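A read benchmark of this kind is easy to reproduce on your own data. The sketch below is one possible way to do it, not the benchmark used by the Modin developers. The helpers `time_reader`, `write_sample_csv`, and `stdlib_read_csv` are hypothetical names introduced for this example, and the standard-library `csv` reader is only a stand-in so the code runs without pandas or Modin installed.

```python
import csv
import os
import tempfile
import time


def time_reader(read_csv, path):
    """Call read_csv(path) once; return (elapsed_seconds, megabytes_per_second)."""
    size_mb = os.path.getsize(path) / 1e6
    start = time.perf_counter()
    read_csv(path)
    elapsed = time.perf_counter() - start
    rate = size_mb / elapsed if elapsed > 0 else float("inf")
    return elapsed, rate


def write_sample_csv(path, n_rows):
    """Write a small two-column CSV file to benchmark against."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["id", "value"])
        for i in range(n_rows):
            writer.writerow([i, i * 0.5])


def stdlib_read_csv(path):
    """Stand-in reader (assumption: replace with a real DataFrame reader)."""
    with open(path, newline="") as f:
        return list(csv.reader(f))


with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "sample.csv")
    write_sample_csv(sample, 10_000)
    elapsed, mb_per_s = time_reader(stdlib_read_csv, sample)
    print(f"{elapsed:.4f} s, {mb_per_s:.1f} MB/s")
```

To compare the two libraries, pass `pandas.read_csv` and then `modin.pandas.read_csv` as the `read_csv` argument, using the same large file for both runs.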

With Modin, the developers tried to bridge the gap between handling of small and large data sets.

Installing Modin:

pip install modin

Using Modin:

import modin.pandas as pd  # that ONE line is the only change

The modin.pandas DataFrame is an extremely lightweight parallel DataFrame. Modin transparently distributes the data and computation.

The Modin DataFrame architecture follows in the footsteps of modern architectures for database and high-performance matrix systems.

Query Compiler

The Query Compiler receives queries from the pandas API layer. The API layer’s responsibility is to ensure clean input to the Query Compiler. The Query Compiler must have knowledge of the in-memory format of the data (currently a pandas DataFrame) in order to efficiently compile the queries.

Partition Manager

The Partition Manager is responsible for the data layout and shuffling, partitioning, and serializing the tasks that get sent to each partition.

Partition

Partitions are responsible for managing a subset of the DataFrame. The DataFrame is partitioned both row-wise and column-wise.
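Partitioning along both rows and columns can be pictured with a small sketch. This is an illustration only: it uses plain Python lists rather than Modin's internal classes, and the function names `partition_table`, `map_blocks`, and `assemble` are hypothetical. A table is cut into a grid of blocks, an element-wise function is applied to each block independently, and the blocks are stitched back together.

```python
def partition_table(rows, row_block, col_block):
    """Split a list-of-lists table into a 2D grid of blocks.

    grid[i][j] holds the i-th band of rows and the j-th band of columns.
    """
    n_cols = len(rows[0]) if rows else 0
    grid = []
    for r in range(0, len(rows), row_block):
        band = rows[r:r + row_block]
        grid.append([[row[c:c + col_block] for row in band]
                     for c in range(0, n_cols, col_block)])
    return grid


def map_blocks(grid, func):
    """Apply an element-wise function to every block independently."""
    return [[[[func(x) for x in row] for row in block] for block in band]
            for band in grid]


def assemble(grid):
    """Stitch the blocks back into a single table."""
    rows = []
    for band in grid:
        n_rows = len(band[0]) if band else 0
        for i in range(n_rows):
            # Concatenate row i of every block in this band, left to right.
            rows.append([x for block in band for x in block[i]])
    return rows


table = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
grid = partition_table(table, row_block=2, col_block=2)
print(assemble(map_blocks(grid, lambda x: x * 10)))
# [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
```

Because each block is processed without reference to the others, the blocks could be handed to different workers, which is the property that makes map-style operations easy to parallelize.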

What Is Ray

Ray is another system under development. It is a framework for parallel and distributed Python that aims to unify the ML ecosystem while offering low latency and high performance.

Pandas on Ray is the component of Modin that runs on the Ray execution framework. Currently, the in-memory format for Pandas on Ray is a pandas DataFrame on each partition. At the time of writing, Ray is the only execution framework supported by Modin.

The optimization that improves the performance the most is the pre-serialization of the tasks and parameters. This is primarily applicable to map operations.
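The idea behind pre-serialization can be sketched with the standard-library `pickle` module. This is an assumption-laden illustration, not the mechanism Modin or Ray actually uses, and the names `run_on_partition` and `map_with_preserialized_task` are hypothetical. The task and its parameters are serialized once, and the same bytes are then reused for every partition instead of being serialized again per partition.

```python
import pickle
from functools import partial
from operator import mul


def run_on_partition(task_bytes, partition):
    """What a worker would do: deserialize the task, then map it over its data."""
    func = pickle.loads(task_bytes)
    return [func(x) for x in partition]


def map_with_preserialized_task(func, partitions):
    """Serialize func (with its bound parameters) once and reuse the bytes."""
    task_bytes = pickle.dumps(func)  # done once, not once per partition
    return [run_on_partition(task_bytes, part) for part in partitions]


double = partial(mul, 2)  # the task, with its parameter (2) bound in
result = map_with_preserialized_task(double, [[1, 2], [3, 4], [5]])
print(result)  # [[2, 4], [6, 8], [10]]
```

In this sketch the saving is the repeated `pickle.dumps` call; in a real distributed system the same bytes would be shipped to each worker.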

By default, Modin uses all of the resources available on the machine. If required, this usage can be limited as follows:

import ray

ray.init(num_cpus=4)

import modin.pandas as pd

The figure shown earlier illustrates how Modin outperforms pandas on a 4-core CPU.

Key Takeaways

- Modin takes care of all the partitioning and shuffling of the data.

- In tests, ‘read_csv’ reaches a read throughput of more than a gigabyte per second, far better than what pandas achieves (1 GB in 25 seconds).

- The architecture allows Modin to exploit potential optimizations across the execution framework and the in-memory format of the data.
