Deep learning has broad applications in sentiment analysis, natural language understanding, computer vision, and more. The technology is advancing at breakneck speed on the back of rapid innovation. However, such innovations call for ever more parameters and computing resources. In other words, better metrics have come at the price of larger, costlier models.

To that end, Google researcher Gaurav Menghani has published a paper on model efficiency. The survey covers the landscape of model efficiency, from modelling techniques to hardware support, and sets out how to make ‘deep learning models smaller, faster, and better’.

Excited to share a survey of the vast landscape of efficiency in deep learning on how to make your deep learning models smaller, faster, and better! https://t.co/WtRvvx6uUm (1/n)

— Gaurav Menghani (@GauravML) June 18, 2021

Challenges

Menghani argues that while larger and more complicated models perform well on the tasks they are trained on, they may not show the same performance when applied to real-life situations.

Practitioners face the following challenges while training and deploying models:

- The cost of training and deploying large deep learning models is high. The large models are memory-intensive and leave a bigger carbon footprint.

- Some deep learning applications need to run in real time on IoT and smart devices. This calls for optimisation of models for specific devices.

- Models often have to be trained with as little data as possible, since user data might be sensitive.

- Off-the-shelf models may not always be able to address the constraints of new applications.

- Training and deployment of multiple models on the same infrastructure for different applications may exhaust available resources.

A mental model

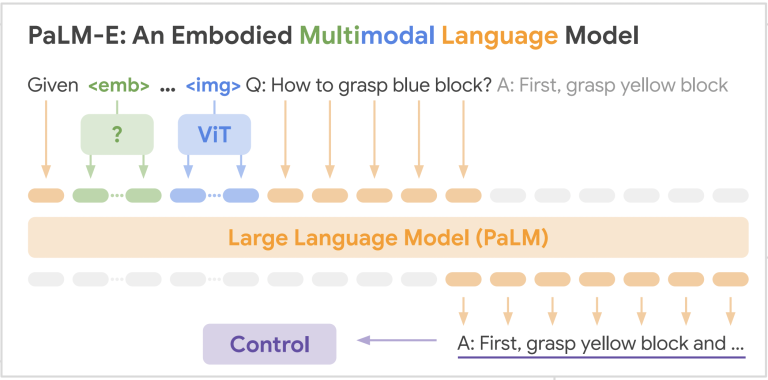

Menghani presents a mental model complete with algorithms, techniques, and tools for efficient deep learning. It has five major areas: compression techniques, learning techniques, automation, efficient architectures, and infrastructure (hardware).


Compression techniques: These algorithms and techniques optimise the model’s architecture, typically by compressing its layers. One of the most popular examples is quantisation, where the weights of a layer are compressed by reducing their precision with minimal loss in quality.
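To make quantisation concrete, here is a minimal sketch in plain Python that maps floating-point weights to 8-bit integer codes and back. The function names `quantize` and `dequantize` and the sample weights are illustrative assumptions, not taken from the paper; real frameworks use more elaborate schemes.

```python
def quantize(weights, num_bits=8):
    """Map floats to integer codes in [0, 2**num_bits - 1] (affine quantisation)."""
    w_min, w_max = min(weights), max(weights)
    levels = 2 ** num_bits - 1
    value_range = w_max - w_min
    # Guard against a constant weight list, where the range would be zero.
    scale = value_range / levels if value_range > 0 else 1.0
    codes = [round((w - w_min) / scale) for w in weights]
    return codes, scale, w_min


def dequantize(codes, scale, w_min):
    """Recover approximate float weights from the integer codes."""
    return [w_min + scale * c for c in codes]


# Hypothetical layer weights, for illustration only.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, scale, w_min = quantize(weights)
restored = dequantize(codes, scale, w_min)

# The rounding error per weight is at most half a quantisation step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs 8 bits instead of 32, at the cost of an error of at most `scale / 2` per weight.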

Learning techniques: These algorithms focus on training the model so that it makes fewer prediction errors, requires less data, and converges faster. The improved quality can then be traded for a smaller footprint or a more efficient model by trimming parameters. One example is distillation, which improves the accuracy of a smaller model by teaching it to mimic a larger one.
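A simplified sketch of a distillation loss may help here. It assumes the common formulation in which the smaller (student) model is trained on a mix of the true label and the larger (teacher) model's temperature-softened predictions. The names `distillation_loss`, `alpha` and `temperature`, and the sample logits, are illustrative assumptions rather than the paper's notation.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs):
    return -sum(t * math.log(p) for t, p in zip(target_probs, predicted_probs))


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.5, temperature=2.0):
    """Weighted sum of the usual label loss and a teacher-mimicking loss."""
    # Soft loss: the student matches the teacher's softened distribution.
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = cross_entropy(soft_teacher, soft_student)
    # Hard loss: the student matches the ground-truth label.
    one_hot = [1.0 if i == label else 0.0 for i in range(len(student_logits))]
    hard_loss = cross_entropy(one_hot, softmax(student_logits))
    return alpha * hard_loss + (1 - alpha) * soft_loss


# Hypothetical logits for a three-class problem whose true class is 0.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]
loss_close = distillation_loss(close_student, teacher, label=0)
loss_far = distillation_loss(far_student, teacher, label=0)
```

A student whose outputs resemble the teacher's gets the lower loss, which is the signal that pushes the small model towards the large one during training.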

Automation: These tools improve the core metrics of a given model automatically. Hyper-parameter optimisation (HPO) is one example, where tuning the hyper-parameters increases accuracy. Another is architecture search, where the architecture itself is tuned and the search helps find a model that optimises both loss or accuracy and a second metric such as latency or model size.
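One of the simplest forms of HPO is random search, sketched below. The `validation_accuracy` function is a hypothetical stand-in for a real train-and-evaluate run, and the search ranges for `learning_rate` and `dropout` are assumptions chosen purely for illustration.

```python
import math
import random


def validation_accuracy(learning_rate, dropout):
    """Hypothetical stand-in for training a model and measuring its accuracy.

    This toy function peaks at learning_rate=0.01 and dropout=0.3.
    """
    lr_penalty = 0.05 * (math.log10(learning_rate) + 2) ** 2
    dropout_penalty = (dropout - 0.3) ** 2
    return 1.0 - lr_penalty - dropout_penalty


def random_search(objective, num_trials=50, seed=0):
    """Sample hyper-parameters at random and keep the best-scoring set."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(num_trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1.
            "learning_rate": 10 ** rng.uniform(-4, 0),
            "dropout": rng.uniform(0.0, 0.6),
        }
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(validation_accuracy)
```

In practice each trial would be a full training run, which is why more sample-efficient search strategies are often preferred over plain random sampling.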

Efficient architectures: These are fundamental building blocks designed from scratch, such as convolutional layers and attention. They are a significant improvement over the baseline methods they replaced, namely fully connected layers and RNNs.

Infrastructure: Building an efficient model also needs a strong foundation of infrastructure and tools. It includes model training frameworks such as TensorFlow, PyTorch, etc.

Guide to efficiency

Menghani said the ultimate goal is to build Pareto-optimal models. A model is Pareto-optimal when no alternative improves one dimension without making the other worse. To get there, practitioners must achieve the best possible result in one dimension while holding the other constant. Typically, one of these dimensions is quality (metrics such as accuracy, precision, and recall) and the other is footprint (metrics such as model size, latency, and RAM).
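The idea can be illustrated with a short sketch that picks the Pareto-optimal candidates from a list of models, using accuracy as the quality metric and model size as the footprint metric. The function `pareto_front` and the candidate figures are made up for illustration and do not come from the paper.

```python
def pareto_front(models):
    """Return the names of models that no other model dominates.

    Each model is (name, accuracy, size_mb): higher accuracy is better,
    smaller size is better.
    """
    front = []
    for name, acc, size in models:
        dominated = any(
            # The other model is at least as good on both dimensions...
            other_acc >= acc and other_size <= size
            # ...and strictly better on at least one of them.
            and (other_acc > acc or other_size < size)
            for _, other_acc, other_size in models
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical candidates: (name, accuracy, size in MB).
candidates = [
    ("A", 0.90, 100.0),
    ("B", 0.88, 40.0),
    ("C", 0.85, 60.0),   # worse than B on both accuracy and size
    ("D", 0.80, 10.0),
    ("E", 0.90, 120.0),  # same accuracy as A but larger
]
front = pareto_front(candidates)
```

Models A, B and D form the frontier: choosing among them is a genuine trade-off, whereas C and E can be discarded because another candidate is at least as good on both dimensions.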

He suggests two methods to achieve the Pareto-optimal model:

Shrink and improve for footprint-sensitive models: This is a useful strategy for practitioners who want to reduce the footprint of the model without compromising on quality. Shrinking can be useful for on-device deployment and server-side optimisation. Ideally, it should be minimally lossy, but where capacity is reduced, the loss in quality can be compensated for in the improve phase.

Grow-improve and shrink for quality-sensitive models: This strategy can be used when a practitioner wants to deploy better-quality models while keeping the same footprint. Capacity is first added by growing the model, which is then improved via learning techniques, automation, and so on; after this, the model is shrunk back.

Read the full paper here.