Hard-coding a robot to perform every mundane manual job, even poorly, takes a lot of computational heavy lifting. It takes ingenious constraints and assumptions to make a robot perform decently in unstructured, real-world situations.

Asking a robot to run, do a cartwheel or bowl a yorker would have sounded like a chapter from a sci-fi novel until a decade ago. But now, with the advancement of hardware acceleration and the optimisation of machine learning algorithms, techniques like reinforcement learning are being put into practical use every day.

However, existing techniques for evaluating a model's ground-truth performance on a physical robot are inefficient.

Off-policy evaluation is a good candidate for selectively testing models and identifying which ones suit the job. When the resources available to gauge a robot's performance are scarce, off-policy methods allow agents to be assessed using data that has already been collected.

An agent can be thought of as the basic unit of reinforcement learning. It receives rewards from the environment and is optimised by an algorithm to maximise the reward it collects and, in doing so, complete the task. For example, when a robotic hand moves a chess piece or performs a welding operation on an automobile, the agent is what drives the specific motors that move the arm.

To develop reliable, inexpensive performance testing for robotic systems, Google AI researchers have proposed a new off-policy evaluation method called off-policy classification (OPC). It evaluates the performance of agents from past data by treating evaluation as a classification problem, in which actions are labelled as either potentially leading to success or guaranteed to result in failure.

How OPC Works

An example of how simulated experience can differ from real-world experience. Here, simulated images (left) have much less visual complexity than real-world images (right).

Robots are typically trained in simulation, but evaluating them still requires a real robot. With off-policy classification, evaluation is done using available real-world data, and the learnings are then transferred to a real robot.

Off-policy RL: an agent is trained using a combination of data collected by other agents (off-policy data) and data it collects itself, in order to learn generalisable skills like robotic walking and grasping.

Fully off-policy RL: a variant in which an agent learns entirely from older data, which is appealing because it enables model iteration without requiring a physical robot.

OPC relies on two assumptions:

- No randomness is involved in how states change

- The agent either succeeds or fails at the end of each trial

The “success or failure” assumption is natural for many tasks, such as picking up an object, solving a maze, winning a game, and so on.
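A minimal sketch of how these two assumptions might be turned into labels for a classifier. If transitions are deterministic and each trial ends in a clear success or failure, every action taken in a successful trial must have kept success reachable, so it can be treated as a positive example. In a failed trial it is not known which action caused the failure, so those actions are left unlabelled here. The function name and the data layout are illustrative assumptions, not the researchers' code.

```python
from typing import List, Tuple

# Hypothetical data layout: a transition is a (state, action) pair, and an
# episode is a list of transitions plus one success flag seen at the end.
Transition = Tuple[str, str]
Episode = Tuple[List[Transition], bool]


def split_positive_unlabeled(
    episodes: List[Episode],
) -> Tuple[List[Transition], List[Transition]]:
    """Split logged transitions into positive and unlabelled examples."""
    positives: List[Transition] = []
    unlabeled: List[Transition] = []
    for transitions, succeeded in episodes:
        if succeeded:
            # Every step of a successful trial kept success reachable.
            positives.extend(transitions)
        else:
            # Some step made failure certain, but we cannot tell which one.
            unlabeled.extend(transitions)
    return positives, unlabeled
```

For instance, one successful two-step trial and one failed one-step trial would yield two positive pairs and one unlabelled pair.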

OPC utilises a Q-function, learned with a Q-learning algorithm, that estimates the total future reward expected from taking a given action in a given state.

Q-learning is a model-free reinforcement learning algorithm, which tells an agent what action to take under what circumstances. It does not require a model of the environment, and it can handle problems with stochastic transitions and rewards, without requiring adaptations.
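To make the idea concrete, here is a small sketch of tabular Q-learning run over a fixed log of past transitions, which is the fully off-policy setting described earlier. It is a textbook-style illustration only: the function name, the `(state, action, reward, next_state, done)` tuple layout, and the values of `alpha`, `gamma` and `sweeps` are assumptions made for this example, and real robotic systems would use a neural network rather than a lookup table.

```python
from collections import defaultdict


def q_learning_from_log(transitions, actions, alpha=0.5, gamma=0.9, sweeps=50):
    """Tabular Q-learning over a fixed log of (s, a, r, s_next, done) tuples."""
    q = defaultdict(float)  # Q-values default to 0.0 for unseen pairs
    for _ in range(sweeps):
        for s, a, r, s_next, done in transitions:
            # Value of the best action available in the next state;
            # terminal states contribute no future reward.
            best_next = 0.0 if done else max(q[(s_next, b)] for b in actions)
            target = r + gamma * best_next
            # Move the current estimate a fraction alpha towards the target.
            q[(s, a)] += alpha * (target - q[(s, a)])
    return q


# Toy log: going right twice reaches the goal, going left fails at once.
log = [
    ("start", "right", 0.0, "mid", False),
    ("mid", "right", 1.0, "goal", True),
    ("start", "left", 0.0, "fail", True),
]
q = q_learning_from_log(log, actions=["left", "right"])
```

No new data is collected while learning, so nothing in this loop needs access to the environment or a robot.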

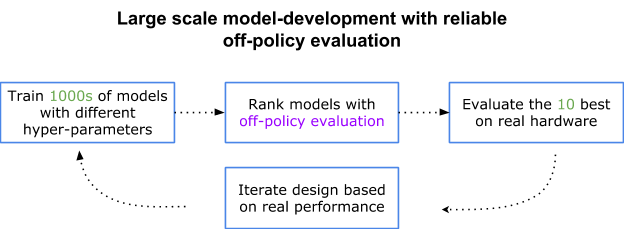

A diagram for real-world model development. Assuming 10 models per day can be evaluated, without off-policy evaluation, one would need 100x as many days to evaluate these models.

The researchers also leveraged techniques from semi-supervised learning, positive-unlabeled learning in particular, to estimate classification accuracy from partially labelled data. This accuracy is the OPC score.
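The sketch below shows how a generic positive-unlabeled estimate of classification error can be computed when only some positives are labelled. It is an illustration of the general technique under stated assumptions, not necessarily the exact formula the researchers use. The function name, the `threshold` of 0.5, and the clipping of the false-positive term at zero are choices made for this example, and `prior` (the assumed fraction of all state-action pairs that can still lead to success) has to be supplied by the user.

```python
def pu_classification_error(q_positive, q_all, prior, threshold=0.5):
    """Estimate 0-1 classification error from positive and unlabelled data.

    q_positive: Q-values on pairs known to be positive (successful trials).
    q_all:      Q-values on all logged pairs, labelled or not.
    prior:      assumed fraction of all pairs that are truly positive.
    """
    def fraction(values, predicate):
        return sum(1 for v in values if predicate(v)) / len(values)

    # Known positives that the Q-function scores as negatives.
    false_neg_rate = fraction(q_positive, lambda v: v <= threshold)
    # Share of all pairs scored as positive, minus the share explained by
    # true positives, leaves an estimate of the false positives.
    pred_pos_all = fraction(q_all, lambda v: v > threshold)
    pred_pos_among_pos = fraction(q_positive, lambda v: v > threshold)
    false_pos = max(0.0, pred_pos_all - prior * pred_pos_among_pos)
    return prior * false_neg_rate + false_pos
```

Subtracting this error from one gives an accuracy-style score, so that Q-functions which separate the known positives from the rest of the data more cleanly rank higher.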

Conclusion

The results show that OPC is good at predicting generalisation performance across several scenarios critical to robotics. Effective off-policy evaluation in real-world reinforcement learning provides an alternative to expensive real-world evaluations during algorithm development.

Though the results of this OPE framework look promising, it does come with a flaw. The framework assumes that one has an off-policy evaluation method that accurately ranks performance from old data. But newly learned agents may act very differently from the agents that generated the past experience, which makes it hard to get good estimates of performance.

Promising directions for future work include developing a variant of this OPE method that is not restricted to binary (success or failure) reward tasks, and extending the analysis to stochastic tasks.

The authors believe that even in the binary setting, this method can provide a practical pipeline for evaluating transfer learning and off-policy reinforcement learning algorithms.
