scikit-learn (sklearn) is a Python machine learning package that provides a collection of data transformations for preparing data. Many simple data cleaning steps, such as deleting columns, are often done manually, which means writing custom code. sklearn provides a mechanism to standardise these custom transformations so that they can be used like any other transformation, either directly on the data or as part of a modelling pipeline. In this article, you will learn how to create and apply custom data transformations for sklearn. The following topics are covered.

Table of contents

- How is a custom data transformer built using sklearn?

- Creating Custom transformer

Let’s start by understanding the custom data transformer.

How is a custom data transformer built using sklearn?

sklearn directly provides many data preparation strategies, such as scaling numerical input variables and changing the probability distribution of variables. Data preparation is the process of modifying raw data to make it fit for machine learning algorithms.

When model performance is assessed with a resampling approach such as k-fold cross-validation, these transformers can be fitted on the training folds only and then applied to the held-out fold, so no information leaks from the evaluation data.
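As a minimal sketch of leak-free evaluation, the snippet below places a scaler inside a pipeline and cross-validates the whole pipeline. The synthetic data, the step names and the choice of StandardScaler are assumptions made for illustration; they are not taken from the article's data set.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data, made up purely for illustration
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=100)

# The scaler is a pipeline step, so in every fold it is fitted on the
# training part only and then applied to the held-out part
pipe = Pipeline(steps=[("scale", StandardScaler()), ("model", LinearRegression())])

cv = KFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(pipe, X, y, cv=cv, scoring="r2")
print(scores.mean())
```

Scaling the full data set before calling cross_val_score would let statistics from the held-out fold influence the transformation, which is the leakage the pipeline avoids.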

While the data preparation techniques provided by sklearn are comprehensive, it may be necessary to perform additional data preparation processes. These additional processes are often conducted manually before modelling and need the creation of bespoke code. The danger is that these data preparation stages will be carried out inconsistently.

One approach is to use the FunctionTransformer class to construct a custom data transform in sklearn. This class lets the user supply a function that will be called to change the data. The function can make any valid alteration, such as modifying values or removing columns (but not removing rows). The resulting transformer can then be used like any other sklearn transform, for example to convert data directly or as a step in a modelling pipeline.
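A minimal sketch of this idea follows. The helper drop_columns and the toy frame df_demo are hypothetical names introduced for illustration; FunctionTransformer and its kw_args parameter are part of sklearn.preprocessing.

```python
import pandas as pd
from sklearn.preprocessing import FunctionTransformer


def drop_columns(X, columns):
    """Hypothetical helper: return a copy of X without the given columns."""
    return X.drop(columns=columns)


df_demo = pd.DataFrame({"a": [1, 2, 3], "b": [4, 5, 6], "c": [7, 8, 9]})

# kw_args forwards extra keyword arguments to the wrapped function
dropper = FunctionTransformer(drop_columns, kw_args={"columns": ["b"]})
result = dropper.fit_transform(df_demo)
print(list(result.columns))  # ['a', 'c']
```

Because the wrapped function returns a new frame, df_demo itself keeps all three columns.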

Before moving on to creating a Custom Transformer, here are a couple of things worth being familiar with:

- Using scikit-learn transformers in pipelines or through the fit_transform() method.

- Class creation, inheritance, and the super() method in Python.

Creating Custom transformer

A custom transformer only needs to meet a few basic requirements:

- Define a transformer class.

- The class inherits from the BaseEstimator and TransformerMixin classes in the sklearn.base module.

- The class implements the instance methods fit() and transform(). To be compatible with pipelines, fit() must accept both X and y (y can default to None) and return self, and transform() must take X and return a pandas DataFrame or NumPy array.

Create a basic custom transformer

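import pandas as pd
from numpy.random import randint
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.pipeline import Pipeline

class BasicTransformer(BaseEstimator, TransformerMixin):
    def fit(self, X, y=None):
        # Nothing is learned from the data, so just return the transformer
        return self

    def transform(self, X, y=None):
        # Work on a copy so the caller's DataFrame is not modified in place
        X_ = X.copy()
        # Add a column of random integers in [0, 10), one per row
        X_["cust_num"] = randint(0, 10, X_.shape[0])
        return X_

df_basic = pd.DataFrame({"a": [1, 2, 3], "b": [4, 5, 6], "c": [7, 8, 9]})

pipe = Pipeline(
    steps=[
        ("use_custom_transformer", BasicTransformer())
    ]
)

transformed_df = pipe.fit_transform(df_basic)
transformed_df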

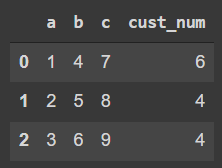

A custom class is built in which the fit and transform methods are defined. Both methods are required for the pipeline to work. The pipeline performs every operation defined in the class, and the resulting data frame looks like the image above.

Let’s build a custom transformer, apply it to a data frame and predict some values. We start by importing the necessary libraries.

import numpy as np
import pandas as pd
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.pipeline import FeatureUnion, Pipeline, make_pipeline
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

Reading, preprocessing and data analysis:

This article uses a data set related to the insurance sector in which the cost of insurance will be predicted based on different features and observations.

df=pd.read_csv("/content/insurance.csv")

df_util=pd.get_dummies(data=df,columns=['sex','smoker','region'],drop_first=True)
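To make the encoding step concrete, here is a small sketch of what pd.get_dummies with drop_first=True produces. The three rows in toy are made up for illustration and are not read from insurance.csv.

```python
import pandas as pd

# Made-up rows for illustration only
toy = pd.DataFrame(
    {
        "age": [19, 33, 45],
        "smoker": ["yes", "no", "no"],
        "region": ["southwest", "northeast", "southeast"],
    }
)

# drop_first=True drops the first category of each encoded column
# ("no" and "northeast" here), which avoids a redundant indicator column
encoded = pd.get_dummies(data=toy, columns=["smoker", "region"], drop_first=True)
print(list(encoded.columns))
# ['age', 'smoker_yes', 'region_southeast', 'region_southwest']
```

A row whose indicator columns for a variable are all zero belongs to the dropped category, so no information is lost.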

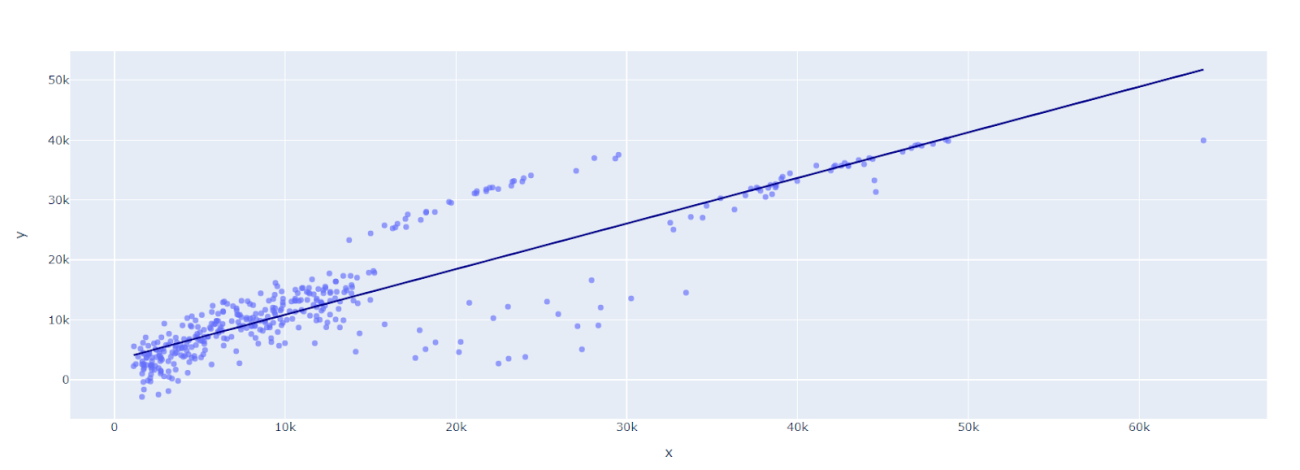

This plot shows the distribution of insurance charges against the body mass index (BMI) of customers, categorised by age.

The data is then split into training and validation sets in a 70:30 ratio.

X = df_util.drop(['charges'], axis=1)
y = df_util['charges']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30, random_state=42)

Create a custom transformer and use it to transform the training data before it reaches the learner.

class CustomTransformer(BaseEstimator, TransformerMixin):
    def __init__(self, feature_name, additional_param="SM"):
        print('\n...initializing\n')
        # Store the arguments unchanged, under the same names as the parameters
        self.feature_name = feature_name
        self.additional_param = additional_param

    def fit(self, X, y=None):
        print('\nfitting data...\n')
        print(f'\n \U0001f600 {self.additional_param}\n')
        return self

    def transform(self, X, y=None):
        print('\n...transforming data \n')
        # Copy the input so the original DataFrame is left untouched
        X_ = X.copy()
        X_[self.feature_name] = np.log(X_[self.feature_name])
        # Return the transformed copy, not the original X
        return X_

print("creating second pipeline...")
pipe2 = Pipeline(steps=[
    ('experimental_trans', CustomTransformer('bmi')),
    ('linear_model', LinearRegression())
])

print("fitting pipeline 2")
pipe2.fit(X_train, y_train)
preds2 = pipe2.predict(X_test)
print(f"RMSE: {np.sqrt(mean_squared_error(y_test, preds2))}\n")

The pipeline is employed since it would be difficult to apply all of these stages sequentially with individual code blocks. Because pipelines keep sequencing in a single block of code, the pipeline itself becomes an estimator, capable of completing all operations in a single statement.

This class adds a few things compared to the basic transformer above. The user can name the feature on which the operation should be performed by passing it as an argument to the constructor.
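Constructor arguments stored this way also become visible to sklearn's parameter tooling. The sketch below assumes a stripped-down transformer named LogFeature (a hypothetical stand-in for the class above, without the print statements) and a made-up two-row frame; get_params, set_params and clone are the standard sklearn calls.

```python
import numpy as np
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin, clone


class LogFeature(BaseEstimator, TransformerMixin):
    """Hypothetical transformer: log-transform one named column."""

    def __init__(self, feature_name, additional_param="SM"):
        # Attributes must carry the same names as the constructor arguments
        self.feature_name = feature_name
        self.additional_param = additional_param

    def fit(self, X, y=None):
        return self

    def transform(self, X):
        X_ = X.copy()
        X_[self.feature_name] = np.log(X_[self.feature_name])
        return X_


# Made-up values for illustration
toy = pd.DataFrame({"bmi": [1.0, np.e], "age": [20, 30]})

trans = LogFeature("bmi")
params = trans.get_params()
print(params)  # {'additional_param': 'SM', 'feature_name': 'bmi'}

out = trans.fit_transform(toy)  # the bmi column becomes [0.0, 1.0]

# Parameters can be changed later, and clone() copies them to a fresh object
trans.set_params(feature_name="age")
fresh = clone(trans)
print(fresh.feature_name)  # age
```

Inside a pipeline the same parameter would be addressed with the step name as a prefix, for example experimental_trans__feature_name, which is what makes it usable in a grid search.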

The linear regression model is built using the custom transformer. The transformer takes the logarithm of the chosen feature before it reaches the learner, which compresses large values and reduces their influence on the fit. Linear regression models are often sensitive to such values.

The regression plot of the observed insurance charges against the predicted insurance charges indicates that the regression line explains the relationship adequately.

So far, we have built a custom transformer and used it to predict values. But what if we want to customise an existing transformer offered by sklearn? Let’s customise the ordinal encoder and apply it to the data used above.

from sklearn.preprocessing import OrdinalEncoder

from sklearn.preprocessing import OrdinalEncoder

class CustEncoder(OrdinalEncoder):

def __init__(self, **kwargs):

super().__init__(**kwargs)

def transform(self, X, y=None):

transformed_data = super().transform(X)

encoded_data = pd.DataFrame(transformed_data, columns=self.feature_names_in_)

return encoded_data

data = df[['sex','smoker','region']]

enc = CustEncoder(dtype=int)

new_data = enc.fit_transform(data)

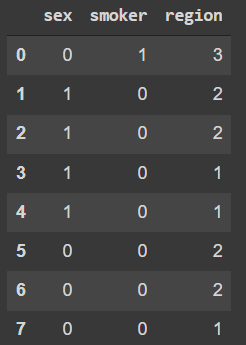

new_data[:8]

By overriding a method and calling super(), any predefined transformer can be customised as needed.

Conclusion

Custom transformers provide a high degree of freedom and control over data preprocessing. In this article they proved particularly useful for encapsulating a data processing step, which makes the code much easier to understand. More custom transformers can be built on the same pattern with scikit-learn as requirements demand; give it a try.