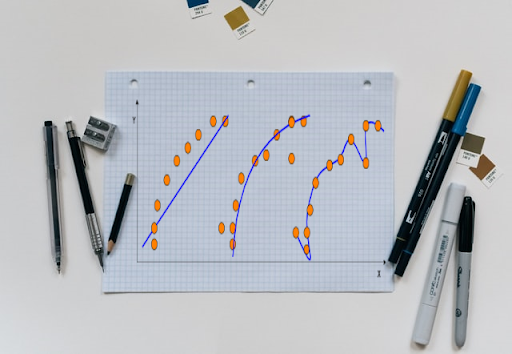

Overfitting occurs when a model fits the training dataset too closely, capturing its noise as well as its underlying patterns. While a near-perfect fit may sound desirable, it is the opposite: an overfit model performs far worse on unseen data. A model can be considered ‘overfit’ when it fits the training dataset almost perfectly but does poorly on new test datasets. Underfitting, on the other hand, takes place when a model is too simple, or has been trained for too short a time, to capture meaningful patterns in the training data. Neither an overfitted nor an underfitted model generalises well to fresh, unseen datasets.

Source: IBM Cloud

An optimum model may sound close to impossible to achieve, but there is a formula to it. Underfitted models show low variance and high bias, while overfitted models show high variance and low bias. Moving a model away from underfitting without tipping it into overfitting can be tricky. However, it is the balance between variance and bias at a strategic point that produces an optimum model.

Source: IBM Cloud
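The trade-off between bias and variance can be written down explicitly. The decomposition below is the standard one for squared error and is not taken from the original source; it assumes the data follow $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has zero mean and variance $\sigma^2$, and $\hat{f}$ is the fitted model:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big] = \big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2 + \mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big] + \sigma^2
$$

The first term is the squared bias, which dominates in underfitted models. The second is the variance, which dominates in overfitted models. The third is irreducible noise that no model can remove.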

Detection

Since overfitting is a common problem, it is essential to detect it. Detecting an overfitted model requires evaluating it on test data that it has not seen during training. The first step in this regard is to divide the dataset into separate training and testing sets. If the model performs substantially better on the training set than on the test set, it is likely overfitted.

The model’s performance is then evaluated on the held-out test set. This can be done with the train-test split method of Scikit-learn. In the first step, a synthetic classification dataset is defined. A classification function is applied to define a two-class prediction problem, with examples as rows and input features as columns. This example is then run to create the dataset.

Then, the dataset is split into train and test subsets, with 70 per cent for training and 30 per cent for evaluation. Next, an ML model is chosen to be checked for overfitting. Finally, the model’s accuracy on the training set is noted and compared with its accuracy on the test set. The example is run a few times so as to get an average outcome.
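The steps above can be sketched with Scikit-learn roughly as follows. This is a minimal illustration rather than the exact procedure the source had in mind: the dataset size, the number of features, the choice of an unconstrained decision tree and the helper name `train_test_gap` are all assumptions made for the example.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def train_test_gap(seed):
    """Fit an unconstrained decision tree; return (train accuracy, test accuracy)."""
    # Synthetic binary classification data: rows are examples, columns are features.
    # The sizes below are illustrative assumptions.
    X, y = make_classification(
        n_samples=2000, n_features=20, n_informative=5, n_redundant=5,
        random_state=seed,
    )
    # 70 per cent for training, 30 per cent for evaluation.
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=seed
    )
    # A tree with no depth limit can memorise the training set.
    model = DecisionTreeClassifier(random_state=seed)
    model.fit(X_train, y_train)
    train_acc = accuracy_score(y_train, model.predict(X_train))
    test_acc = accuracy_score(y_test, model.predict(X_test))
    return train_acc, test_acc


# Repeat with a few different seeds and average the outcome.
results = [train_test_gap(seed) for seed in range(5)]
avg_train = sum(r[0] for r in results) / len(results)
avg_test = sum(r[1] for r in results) / len(results)
print(f"train accuracy: {avg_train:.3f}, test accuracy: {avg_test:.3f}")
```

A training accuracy well above the test accuracy is the signature of overfitting. Limiting the tree, for instance through `max_depth`, would be expected to narrow the gap.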

Once overfitting has been detected, the model needs to be rid of it. There are various ways in which overfitting can be reduced or prevented. These include:

- Training using more data: Sometimes, overfitting can be avoided by training a model with more data. A model could be fed with more data so that algorithms can detect the signal better and not be overfitted. However, this isn’t a guaranteed method. Simply adding more data, especially when it is not clean, can do more harm than good.

- Early Stopping: When a model is trained iteratively, it can be evaluated after every iteration. Iterations show a pattern of diminishing returns: initially, the model’s performance on held-out data keeps improving until it reaches a plateau, after which overfitting starts to increase. Early stopping means halting training before this point arrives.

Source: Elite data science
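Early stopping can be sketched as a manual training loop that checks a validation set after every pass over the data. The example below is an illustration under assumptions: the linear `SGDClassifier`, the synthetic data, the patience of 5 epochs and the cap of 200 epochs are arbitrary choices, not values from the source.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=2000, n_features=20, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0
)

model = SGDClassifier(random_state=0)
classes = np.unique(y_train)

patience = 5          # epochs to wait for an improvement (assumed value)
best_score = -1.0     # best validation accuracy seen so far
best_epoch = 0
stale_epochs = 0      # epochs since the last improvement

for epoch in range(1, 201):
    # One pass over the training data.
    model.partial_fit(X_train, y_train, classes=classes)
    score = model.score(X_val, y_val)
    if score > best_score:
        best_score, best_epoch, stale_epochs = score, epoch, 0
    else:
        stale_epochs += 1
        if stale_epochs >= patience:
            break  # validation accuracy has stopped improving

print(f"stopped at epoch {epoch}, best validation accuracy "
      f"{best_score:.3f} at epoch {best_epoch}")
```

This sketch only records when the best score occurred. A fuller version would also keep a copy of the model from the best epoch and restore it after stopping.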

- Regularisation or model simplification: At times, even with large volumes of data, a model can overfit when it is too complex. This can be addressed by reducing the number of parameters, pruning a decision tree model, or using dropout on a neural network.

- Removing features: Some algorithms have built-in feature selection. For those that do not, manually removing irrelevant input features can improve the generalisability of models.

- Ensembling: Ensembling is combining predictions made by multiple separate models. The most commonly used techniques for ensembling are bagging and boosting. Bagging reduces overfitting by training a large number of strong learners in parallel, each on a different random sample of the training data, and then averaging or voting on their predictions.

Boosting trains a large number of weak learners in a sequence, so that each learner learns from the mistakes of the one preceding it, and then puts all the weak learners together so that one strong learner emerges. While bagging works with complex base models and smooths their predictions, boosting uses basic models and then increases their aggregate complexity.
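The contrast between bagging and boosting can be illustrated with Scikit-learn’s built-in ensembles. This is a rough sketch, not a benchmark: the synthetic dataset, the ensemble sizes and the choice of gradient boosting with depth-1 trees as the boosting method are assumptions for the example.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, GradientBoostingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(
    n_samples=2000, n_features=20, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

models = {
    # Baseline: one complex, unconstrained tree.
    "single tree": DecisionTreeClassifier(random_state=0),
    # Bagging: many full-depth trees, each fit on a bootstrap sample,
    # with their predictions combined by voting.
    "bagging": BaggingClassifier(
        DecisionTreeClassifier(), n_estimators=50, random_state=0
    ),
    # Boosting: many depth-1 trees fit in sequence, each one correcting
    # the errors left by the trees before it.
    "boosting": GradientBoostingClassifier(
        n_estimators=100, max_depth=1, random_state=0
    ),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"{name}: train {scores[name][0]:.3f}, test {scores[name][1]:.3f}")
```

Comparing the gap between train and test accuracy for each entry gives a feel for how the two approaches handle variance and bias on a given dataset.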

- Data augmentation: Data augmentation is a cheaper option than training models with more data. Instead of acquiring more data, this method makes the existing dataset appear more diverse, so that the model is prevented from memorising it. This way, every time the model processes a sample, it appears slightly different.

Another method that is similar to data augmentation is adding noise to the input and output data. Adding a moderate amount of noise to the input data makes the model more stable, while adding noise to the output data makes the dataset more diverse. This is tricky, since too much noise can distort the dataset.
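Adding noise to the input data can be sketched with NumPy as below. The helper name `augment_with_noise`, the number of noisy copies and the noise scale `sigma` are hypothetical choices for illustration. The example covers input noise only and leaves the labels untouched.

```python
import numpy as np


def augment_with_noise(X, y, copies=3, sigma=0.05, seed=0):
    """Return the original data plus `copies` noisy versions of the inputs."""
    rng = np.random.default_rng(seed)
    X_parts, y_parts = [X], [y]
    for _ in range(copies):
        # Zero-mean Gaussian noise on the inputs; labels are reused unchanged.
        X_parts.append(X + rng.normal(0.0, sigma, size=X.shape))
        y_parts.append(y)
    return np.concatenate(X_parts), np.concatenate(y_parts)


# Tiny toy dataset: 4 examples with 3 features each.
X = np.arange(12, dtype=float).reshape(4, 3)
y = np.array([0, 1, 0, 1])

X_aug, y_aug = augment_with_noise(X, y)
print(X_aug.shape, y_aug.shape)  # (16, 3) (16,)
```

The value of `sigma` should be small relative to the spread of each feature. If it is too large, the noisy copies stop resembling the original examples.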

- Cross-validation: In this technique, the initial training dataset is split into several mini train-test splits. The model’s hyperparameters are then tuned using these splits. This keeps the test set unseen until the model is finalised.
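Cross-validated hyperparameter tuning can be sketched with Scikit-learn’s `GridSearchCV`. The decision tree, the candidate `max_depth` values and the choice of five folds are assumptions made for this illustration. Limiting tree depth also serves as an example of the model simplification mentioned above.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

# The test set is set aside first and is not touched during tuning.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Each candidate depth is scored on 5 train/validation splits
# drawn from the training set only.
search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid={"max_depth": [2, 4, 8, None]},
    cv=5,
)
search.fit(X_train, y_train)

best_depth = search.best_params_["max_depth"]
cv_score = search.best_score_
# Only the finalised model is evaluated on the held-out test set.
test_score = search.score(X_test, y_test)

print(f"best max_depth: {best_depth}")
print(f"cross-validated accuracy: {cv_score:.3f}, test accuracy: {test_score:.3f}")
```

Because the depth is selected using the training folds alone, the final test accuracy remains an honest estimate of performance on unseen data.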