Recommender systems are used in a variety of domains, from e-commerce to social media, to offer personalized recommendations to customers. The benefits of recommendations for customers, such as reduced information overload, have been studied extensively. However, it’s less clear how and to what extent recommender systems produce commercial value. Building a reliable recommender system is challenging, and so is defining what “reliable” means. From a business point of view, measuring the success of a recommender system is essential. In this post, we go through the most important and widely used evaluation metrics for measuring the success of a recommendation system. The major points to be discussed in this article are outlined below.

Table of Contents

- Challenges Faced by Recommendation Systems

- Common Metrics Used

- Business Specific Measures

Let’s start the discussion by understanding the challenges that are faced by recommendation systems.

Challenges Faced by Recommendation Systems

Recommendation systems, like any predictive model, rely heavily on data. They can only make reliable recommendations based on the data they have. It’s only natural that the finest recommender systems come from organizations with large volumes of data, such as Google, Amazon, Netflix, or Spotify. To detect commonalities and suggest items, good recommender systems evaluate both item data and customer behavioral data. Machine learning thrives on data; the more data the system has, the better the results tend to be.

Data is constantly changing, as are user preferences, and your business is constantly changing. That’s a lot of new information. Will your algorithm be able to keep up with the changes? Of course, real-time recommendations based on the most recent data are possible, but they are also more difficult to maintain. Batch processing, on the other hand, is easier to manage but does not reflect recent data changes.

The recommender system should continue to improve as time goes on. Machine learning techniques assist the system in “learning” the patterns, but the system still requires instruction to give appropriate results. You must improve it and ensure that whatever adjustments you make continue to move you closer to your business goal.

Common Metrics Used

Predictive accuracy metrics, classification accuracy metrics, rank accuracy metrics, and non-accuracy measurements are the four major types of evaluation metrics for recommender systems.

Predictive Accuracy Metrics

Predictive accuracy or rating prediction measures address the question of how close a recommender’s estimated ratings are to the actual user ratings. This sort of measure is widely used for evaluating non-binary ratings.

It is best suited for usage scenarios in which accurate prediction of ratings for all products is critical. Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and Normalized Mean Absolute Error (NMAE) are the most important measures for this purpose.

In comparison to the MAE metric, MSE and RMSE employ squared deviations and consequently emphasize bigger errors. The error is described by MAE and RMSE in the same units as the obtained data, whereas MSE produces squared units.

To make results comparable among recommenders with different rating scales, NMAE normalizes the MAE measure to the range of the appropriate rating scale. In the Netflix competition, the RMSE measure was utilized to determine the improvement in comparison to the Cinematch algorithm, as well as the prize winner.
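
The four error measures above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `rating_errors`, the sample ratings, and the 1 to 5 rating scale are all assumptions made for the example.

```python
import math

def rating_errors(actual, predicted, r_min, r_max):
    """Return MAE, MSE, RMSE and NMAE for paired lists of ratings.

    r_min and r_max are the bounds of the rating scale (assumed known).
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    errors = [p - a for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / len(errors)
    mse = sum(e * e for e in errors) / len(errors)
    rmse = math.sqrt(mse)            # same units as the ratings
    nmae = mae / (r_max - r_min)     # MAE normalized by the scale range
    return {"mae": mae, "mse": mse, "rmse": rmse, "nmae": nmae}

# Hypothetical ratings on a 1-5 scale; the last prediction is off by 2.
actual = [4, 3, 5, 1]
predicted = [3.5, 3, 4, 3]
metrics = rating_errors(actual, predicted, r_min=1, r_max=5)
print(metrics)
```

With these sample values the RMSE comes out larger than the MAE, because the single large error is squared before averaging.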

Classification Accuracy Metrics

Classification accuracy measures attempt to evaluate a recommendation algorithm’s successful decision-making capacity (SDMC). They are useful for user tasks such as finding good products, since they count how many items the recommender system correctly and incorrectly classifies as relevant or irrelevant.

The exact rating or ranking of objects is ignored by SDMC measures, which simply quantify correct or erroneous classification. This type of measure is particularly well suited to e-commerce systems that attempt to persuade users to take certain actions, such as purchasing products or services.
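
As a rough sketch of this idea, precision, recall, and F1 can be computed from the set of recommended items and the set of items the user actually found relevant. The function name and the item identifiers below are invented for illustration.

```python
def classification_metrics(recommended, relevant):
    """Precision, recall and F1 for one user's recommendation set.

    Only membership matters here; ratings and list order are ignored.
    """
    recommended, relevant = set(recommended), set(relevant)
    hits = len(recommended & relevant)  # correctly recommended items
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical data: 4 items recommended, 3 items actually relevant.
precision, recall, f1 = classification_metrics(
    ["a", "b", "c", "d"], ["b", "d", "e"]
)
print(precision, recall, f1)
```

Here two of the four recommended items are relevant, so precision is 0.5, while two of the three relevant items were found, so recall is about 0.67.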

Rank Accuracy Metrics

A rank accuracy or ranking prediction metric assesses a recommender’s ability to estimate the correct order of items according to the user’s preferences; in statistics, this is known as rank correlation measurement. This type of measure is therefore most appropriate when the user is given a long, sorted list of recommended items.

A rank prediction metric uses only the relative ordering of preference values and is independent of the exact values estimated by a recommender. A recommender that consistently estimates item ratings to be lower than genuine user preferences, for example, might still get a perfect score as long as the ranking is correct.
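
One way to see this is with Spearman’s rank correlation, used here as one possible rank correlation measure. The sketch below assumes there are no tied values and at least two items; the helper names and the sample preferences are made up for the example.

```python
def ranks(values):
    """Rank positions (1 = highest value). Assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    rank = [0] * len(values)
    for position, index in enumerate(order, start=1):
        rank[index] = position
    return rank

def spearman(x, y):
    """Spearman's rho via the no-ties shortcut formula (needs n >= 2)."""
    n = len(x)
    d_squared = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n * (n * n - 1))

# Hypothetical true preferences and predictions that are all 1.5 too low.
true_prefs = [5, 3, 4, 1, 2]
shifted_predictions = [p - 1.5 for p in true_prefs]
rho_shifted = spearman(true_prefs, shifted_predictions)

# A fully reversed ordering, for contrast.
rho_reversed = spearman([1, 2, 3], [3, 2, 1])
print(rho_shifted, rho_reversed)
```

The shifted predictions are wrong in absolute terms but preserve the order, so the correlation is still perfect, while the reversed list scores -1.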

Mean Average Precision @ K and Mean Average Recall @ K

For each user in the test set, a recommender system normally generates an ordered list of recommendations. MAP@K indicates how relevant the list of recommended items is, whereas MAR@K indicates how well the recommender can recall all of the items in the test set that the user has rated positively.
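
Definitions of these two metrics vary slightly between libraries. The sketch below uses one common convention as an assumption: average precision at K is normalized by min(K, number of relevant items), and MAR@K is approximated by recall at K averaged over users. All names and sample data are illustrative, and recommendation lists are assumed to contain no duplicates.

```python
def average_precision_at_k(recommended, relevant, k):
    """AP@K: precision at each hit position, normalized by min(k, #relevant)."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    hits, score = 0, 0.0
    for position, item in enumerate(recommended[:k], start=1):
        if item in relevant:
            hits += 1
            score += hits / position  # precision at this cut-off
    return score / min(k, len(relevant))

def recall_at_k(recommended, relevant, k):
    """Share of the user's relevant items that appear in the top k."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(recommended[:k]) & relevant) / len(relevant)

def mean_over_users(metric, recs_by_user, relevant_by_user, k):
    """Average a per-user metric over all users with recommendations."""
    users = list(recs_by_user)
    total = sum(metric(recs_by_user[u], relevant_by_user[u], k) for u in users)
    return total / len(users)

# Hypothetical ordered recommendations and positively rated test items.
recs = {"u1": ["a", "b", "c"], "u2": ["d", "e", "f"]}
liked = {"u1": ["a", "c"], "u2": ["f", "g"]}
map_at_3 = mean_over_users(average_precision_at_k, recs, liked, 3)
mar_at_3 = mean_over_users(recall_at_k, recs, liked, 3)
print(map_at_3, mar_at_3)
```

Note that user u2 gets a low average precision because the only hit sits at the bottom of the list, even though half of that user’s liked items were recalled.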

Business Specific Measures

The way businesses evaluate the effects and business value of a deployed recommender system is influenced by a number of factors, including the application domain and, more crucially, the company’s business model. Some business models are supported partly or entirely by ads (e.g., YouTube or news aggregation sites). The goal in this scenario could be to increase the amount of time people spend using the service. Increased engagement is also a goal for firms with a flat-rate subscription model (e.g., music streaming services).

The underlying business models and objectives govern how firms judge the value of a recommender in all of the examples above. The diagram below depicts the basic measuring methodologies identified in the literature, which we are going to discuss further one by one.

Click-Through Rates

The click-through rate (CTR) is a metric that measures how many people click on the recommendations. The basic notion is that if more people click on the recommended things, the recommendations are more relevant to them.

In news recommendations, the CTR is a widely used metric. In an early paper on Google’s news personalization engine, Das et al. found that personalized suggestions resulted in a 38 percent increase in clicks compared to a baseline that merely recommends popular articles. However, on some days, when celebrity news attracted a lot of attention, the baseline actually fared better.
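
A minimal sketch of how a CTR and a relative increase over a baseline might be computed is shown below. The function names and all counts are invented for illustration and are not taken from the study mentioned above.

```python
def click_through_rate(clicks, impressions):
    """Fraction of shown recommendations that were clicked."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions

def relative_lift(treatment_rate, baseline_rate):
    """Relative change of the treatment rate over the baseline rate."""
    return (treatment_rate - baseline_rate) / baseline_rate

# Hypothetical A/B test: popularity baseline vs. personalized recommendations.
baseline_ctr = click_through_rate(clicks=400, impressions=10_000)
personalized_ctr = click_through_rate(clicks=500, impressions=10_000)
lift = relative_lift(personalized_ctr, baseline_ctr)
print(f"baseline={baseline_ctr:.3f} personalized={personalized_ctr:.3f} lift={lift:.0%}")
```

In practice the comparison would be run per day or per segment as well, since an aggregate lift can hide days on which the baseline performs better.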

Adoption and Conversion

Unlike in online business models that depend on advertising, click-through rates are often not the final success measure in recommendation scenarios. While the CTR can measure user attention or interest, it can’t tell you whether users liked the news article they clicked on or whether they bought something based on a recommendation.

As a result, alternative adoption measures are frequently used. These are based on domain-specific considerations and are arguably better suited to determining the effectiveness of the suggestions. YouTube employs the idea of “long CTRs,” in which a user’s click on a suggestion is only counted if they watch a certain percentage of the video. Similarly, Netflix uses a metric called “take-rate” to determine how many times a video or movie was actually watched after being recommended.
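
The idea behind a “long CTR” can be sketched as follows. The exact threshold used in production systems is not given here, so the 50% default, the event fields, and the function name are all assumptions made purely for illustration.

```python
def long_ctr(click_events, impressions, min_watch_fraction=0.5):
    """CTR that only counts clicks followed by enough watch time.

    click_events: one dict per click with 'watched_seconds' and
    'duration_seconds'. min_watch_fraction is a hypothetical threshold.
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    long_clicks = 0
    for event in click_events:
        duration = event["duration_seconds"]
        if duration <= 0:
            continue  # skip malformed events
        if event["watched_seconds"] / duration >= min_watch_fraction:
            long_clicks += 1
    return long_clicks / impressions

# Hypothetical log: 3 clicks out of 100 impressions, one abandoned early.
clicks = [
    {"watched_seconds": 30, "duration_seconds": 300},   # 10% watched
    {"watched_seconds": 200, "duration_seconds": 300},  # about 67% watched
    {"watched_seconds": 120, "duration_seconds": 240},  # 50% watched
]
plain_ctr = len(clicks) / 100
adjusted_ctr = long_ctr(clicks, impressions=100)
print(plain_ctr, adjusted_ctr)
```

The plain CTR counts all three clicks, while the adjusted figure drops the click that was abandoned after a tenth of the video.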

Sales and Revenue

In many cases, the adoption and conversion measures outlined in the previous section are more telling of a recommender’s prospective business value than CTR measures alone. When customers more often pick items from a list of suggestions that they later purchase or view, this is a good indicator that a new algorithm was successful in identifying items that the user is interested in.

Nonetheless, determining how such improvements in adoption translate into greater business value remains difficult. Because a recommender may suggest many items that consumers would have bought anyway, the increase in business value may be lower than adoption rate increases alone would suggest. Furthermore, if the relevance of suggestions was poor to begin with, i.e., almost no one clicked on them, raising the adoption rate by 100% could still result in very little absolute additional value for the company.
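
A small worked example makes both caveats concrete. The numbers below are entirely hypothetical, and `organic_share` (the fraction of adopted items that would have been bought without the recommendation) is an assumed quantity that is hard to measure in practice.

```python
def incremental_adoptions(sessions, old_rate, new_rate, organic_share=0.0):
    """Extra adoptions attributable to the recommender.

    organic_share: assumed fraction of adoptions that would have
    happened anyway, which therefore add no incremental value.
    """
    extra = sessions * (new_rate - old_rate)
    return extra * (1 - organic_share)

# Hypothetical: adoption rate doubles from 0.1% to 0.2% over 100,000 sessions.
naive_gain = incremental_adoptions(100_000, 0.001, 0.002)
# Same change, assuming 40% of those purchases would have happened anyway.
adjusted_gain = incremental_adoptions(100_000, 0.001, 0.002, organic_share=0.4)
print(round(naive_gain), round(adjusted_gain))
```

A 100% relative improvement here amounts to only about 100 extra adoptions, and to about 60 once purchases that would have happened anyway are discounted.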

User Behavior and Engagement

In various application domains, such as video streaming, higher levels of user engagement are thought to lead to higher user retention, which, in turn, often translates directly into business value. A number of real-world tests of recommender systems have found that having a recommender increases user activity. Depending on the application domain, different measurements are used.

In the field of music recommendation, researchers compared various recommendation strategies and discovered that a recommendation strategy that combines usage and content data (dubbed Mix) not only led to higher acceptance rates but also to a 50% higher level of activity in terms of playlist additions than the individual strategies.

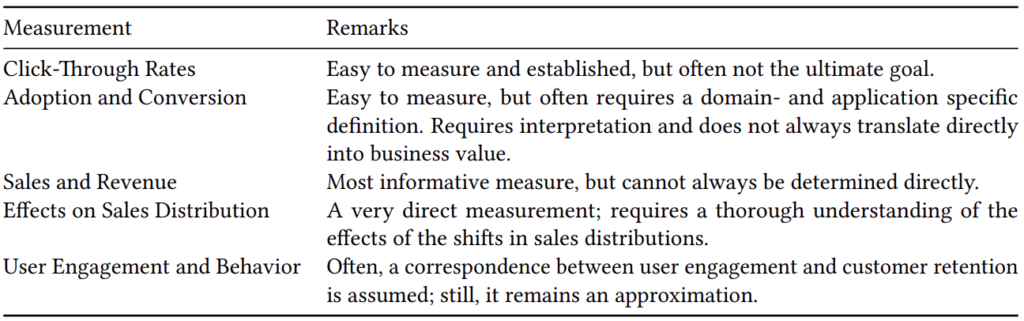

Overall, no single measure is mandatory; the right choice depends on the type of business problem that the system is being used to solve. An overview of some of our findings may be found in the table below.

Apart from all of this, we can also use the following standard ML evaluation metrics to evaluate ratings and predictions.

- Precision

- Recall

- F1-measure

- False-positive rate

- Mean average precision

- Mean absolute error

- The area under the ROC curve (AUC)
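
Two of the less familiar entries in this list, the false-positive rate and the AUC, can be sketched without any libraries. The AUC below uses the pairwise-comparison formulation (the probability that a randomly chosen relevant item is scored above a randomly chosen irrelevant one); the labels, scores, and the 0.5 decision threshold are made-up example values.

```python
def false_positive_rate(labels, predictions):
    """Share of irrelevant items (label 0) predicted as relevant (1)."""
    negatives = sum(1 for y in labels if y == 0)
    false_positives = sum(
        1 for y, p in zip(labels, predictions) if y == 0 and p == 1
    )
    return false_positives / negatives if negatives else 0.0

def auc(labels, scores):
    """AUC as the fraction of (relevant, irrelevant) pairs ranked correctly.

    Ties count as half. Quadratic in the number of items, so this is
    only a sketch for small inputs.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical relevance labels and recommender scores for five items.
labels = [1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.1]
predictions = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold

fpr = false_positive_rate(labels, predictions)
auc_value = auc(labels, scores)
print(fpr, auc_value)
```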

Conclusion

In this post, we have looked at the different metrics used to evaluate the performance of a recommendation system. First, we covered some common challenges that recommendation systems face. Then we went through commonly used performance metrics, and finally we saw how well-established businesses such as Netflix and YouTube define their evaluation strategies.