A growing number of parameters, higher latency, and the resources required for training have made working with deep learning tricky. In an extensive survey, Google researchers identified common challenge areas for deep learning practitioners and suggested key checkpoints to mitigate them.

For instance, a deep learning practitioner might face the following challenges when deploying a model:

- Training may be a one-time cost, but deploying a model and running inference over a long period can still turn out to be expensive in terms of server-side RAM, CPU, etc.

- Using as little data as possible for training is critical when the user data might be sensitive.

- New applications come with new constraints (around model quality or footprint) that existing off-the-shelf models might not be able to address.

- Deploying multiple models on the same infrastructure for different applications might end up exhausting the available resources.

Most of these challenges boil down to a lack of efficiency. According to Gaurav Menghani of Google Research, if a model is to be deployed where inference is constrained (as on smartphones) or expensive (as on cloud servers), attention should be paid to inference efficiency. And if a large model has to be trained from scratch with limited training resources, models designed for training efficiency are the better choice. According to Menghani, practitioners should aim for Pareto-optimality, i.e. any model we choose should be the best for the tradeoffs we care about. One can develop a Pareto-optimal model using the following mental model.

Image credits: Gaurav Menghani, Google
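To make the idea of Pareto-optimality concrete, here is a minimal sketch that filters a set of candidate models down to those not beaten on both accuracy and latency by any other candidate. The model names and numbers are made up for illustration and do not come from the survey.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on both metrics and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
    strictly_better = a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
    return no_worse and strictly_better


def pareto_front(candidates):
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Hypothetical candidates; the values are illustrative only.
candidates = [
    Candidate("small", 0.71, 5.0),
    Candidate("medium", 0.76, 12.0),
    Candidate("medium_slow", 0.75, 20.0),  # worse than "medium" on both metrics
    Candidate("large", 0.80, 40.0),
]

front = pareto_front(candidates)
print([c.name for c in front])  # ['small', 'medium', 'large']
```

Any model on the front is a defensible choice; which one to pick depends on the latency budget of the target application.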

Model compression

For very large models, the conventional way to maximise training efficiency is to use models with small hidden sizes or few layers, as such models run faster and use less memory. As illustrated above, a common practice is to train small models until they converge and then lightly apply a compression technique. Counterintuitively, however, the most compute-efficient training strategy can be to train extremely large models, stop after a small number of iterations, and then compress them. One example of compression is quantisation: the weight matrices of a layer are compressed by reducing their precision from 32-bit floating-point values to 8-bit unsigned integers, typically with little loss in quality. Here are a few popular compression techniques:

- Parameter Pruning And Sharing

- Low-Rank Factorisation

- Transferred/Compact Convolutional Filters

- Knowledge Distillation
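As a rough illustration of the quantisation step described above, the sketch below maps floating-point weights onto 8-bit unsigned integers with a simple min/max (affine) scheme and then maps them back. It is a toy version under stated assumptions: production toolkits use more careful calibration, and the function names here are hypothetical.

```python
def quantize(weights, num_bits=8):
    """Map floats to unsigned integers in [0, 2**num_bits - 1]."""
    qmax = 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / qmax
    if scale == 0.0:
        scale = 1.0  # all weights equal; any non-zero scale works
    quantized = [min(qmax, max(0, round((w - w_min) / scale))) for w in weights]
    return quantized, scale, w_min


def dequantize(quantized, scale, w_min):
    """Recover approximate float weights from their integer codes."""
    return [q * scale + w_min for q in quantized]


# Illustrative weights, not taken from any real model.
weights = [-1.2, -0.3, 0.0, 0.45, 2.1]
codes, scale, offset = quantize(weights)
restored = dequantize(codes, scale, offset)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, max_error)
```

Each weight now needs one byte instead of four, and the reconstruction error per weight is bounded by half the quantisation step (`scale / 2`).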

Learning techniques

Learning techniques focus on training the model differently so that it makes fewer prediction errors, requires less data, and converges faster. According to Menghani, the improved quality can then be exchanged for a more efficient model by trimming the number of parameters if needed. An example of such a learning technique is distillation, where the accuracy of a smaller model is improved by learning to mimic a larger model. Earlier this year, Facebook announced the Data-efficient Image Transformer (DeiT), which leverages distillation. It is a vision Transformer built on a transformer-specific knowledge distillation procedure that reduces training data requirements. Distillation allows one neural network to learn from another. In DeiT, a distillation token, a learned vector that flows through the network along with the transformed image data, significantly enhances image classification performance with less training data.
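The following sketch shows the generic soft-target form of a distillation loss, in which the student is penalised for deviating both from the teacher's softened predictions and from the true label. It is not DeiT's token-based procedure, and the `temperature` and `alpha` values are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy between a target distribution and a prediction."""
    return -sum(t * math.log(p)
                for t, p in zip(target_probs, predicted_probs) if t > 0)


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft (teacher) term and a hard (label) term.

    Some formulations also scale the soft term by temperature**2;
    that factor is left out here for brevity.
    """
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = cross_entropy(soft_teacher, soft_student)

    hard_target = [1.0 if i == true_label else 0.0
                   for i in range(len(student_logits))]
    hard_loss = cross_entropy(hard_target, softmax(student_logits))
    return alpha * soft_loss + (1 - alpha) * hard_loss


# Illustrative logits for a 3-class problem where class 0 is correct.
teacher = [4.0, 1.0, 0.5]
agreeing_student = [3.5, 1.2, 0.4]
disagreeing_student = [0.2, 3.0, 1.0]

good = distillation_loss(agreeing_student, teacher, true_label=0)
bad = distillation_loss(disagreeing_student, teacher, true_label=0)
print(good < bad)  # True
```

A student whose predictions track the teacher's receives the lower loss, which is the signal that drives the smaller model to mimic the larger one.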

Automation

A good example of automation improving efficiency is hyperparameter optimisation (HPO), an automated way of tuning the hyperparameters to increase accuracy, which can then be exchanged for a model with fewer parameters. A model's performance depends heavily on its hyperparameters, but given the vast search space, evaluating each configuration can be expensive. HPO tools like Amazon SageMaker offer an automatic model tuning module; all one needs for an HPO task is a model and the related training data. Auto-tune modules support optimisation for complex models and datasets with parallelism on a large scale. Microsoft's Neural Network Intelligence (NNI), an open-source toolkit for both automated machine learning (AutoML) and HPO, provides a framework to train a model and tune hyperparameters along with the freedom to customise.
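To show what an HPO loop does at its simplest, here is a sketch of random search, one of the most basic HPO strategies. It does not use SageMaker or NNI; `validation_score` is a hypothetical stand-in for "train a model with this configuration and return its validation accuracy", and the search ranges are assumptions.

```python
import math
import random


def validation_score(learning_rate, num_layers):
    """Hypothetical proxy for a training run; peaks at lr=1e-3 and 4 layers."""
    lr_penalty = 0.05 * (math.log10(learning_rate) + 3) ** 2
    depth_penalty = 0.02 * abs(num_layers - 4)
    return 1.0 - lr_penalty - depth_penalty


def random_search(num_trials, seed=0):
    """Sample configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_score, best_config = None, None
    for _ in range(num_trials):
        config = {
            # Sample the learning rate on a log scale between 1e-5 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "num_layers": rng.randint(1, 8),
        }
        score = validation_score(**config)
        if best_score is None or score > best_score:
            best_score, best_config = score, config
    return best_score, best_config


score_5, _ = random_search(num_trials=5)
score_50, config_50 = random_search(num_trials=50)
print(score_5, score_50, config_50)
```

Because each trial corresponds to a full training run in practice, the number of trials is the main cost lever, which is why managed tools parallelise the evaluations.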

ENAS

Neural architecture search (NAS) falls in this category too. In this case, the architecture itself is tuned, and the search helps find a model that optimises both the loss or accuracy and some other metric, such as model latency or model size. NAS methods have already outperformed many manually designed architectures on image classification tasks. One example is Efficient Neural Architecture Search (ENAS), a fast and inexpensive approach for automatic model design. In this approach, a controller (an RNN) trained with policy gradients learns to discover neural network architectures by searching for an optimal subgraph within a large computational graph.

Architectures

Neural architectures are fundamental blocks designed from scratch. One of the classical examples of efficient layers in the vision domain is the convolutional layer, an improvement over fully connected (FC) layers in vision models: the same filter is applied at every position in the image, so its weights are shared across locations. Similarly, the Transformer architecture, proposed back in 2017, demonstrated that attention layers could replace traditional RNN-based Seq2Seq models. Building on it, BERT and GPT architectures beat the state of the art on several NLU benchmarks.
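The efficiency gain from sharing one filter across the whole image can be seen with simple parameter arithmetic. The sketch below compares a convolutional layer with a fully connected layer on the same input; the 224x224 RGB input and the layer sizes are assumptions picked for illustration.

```python
def dense_params(in_height, in_width, in_channels, out_units):
    """Weights plus biases of a fully connected layer on a flattened image."""
    return in_height * in_width * in_channels * out_units + out_units


def conv_params(kernel_height, kernel_width, in_channels, out_channels):
    """Weights plus biases of a convolutional layer.

    The count does not depend on the image size, because the same
    filters are reused at every spatial position.
    """
    return kernel_height * kernel_width * in_channels * out_channels + out_channels


# A 224x224 RGB image fed to 64 units (dense) or 64 3x3 filters (conv).
dense = dense_params(224, 224, 3, out_units=64)
conv = conv_params(3, 3, in_channels=3, out_channels=64)

print(dense)  # 9633856
print(conv)   # 1792
```

The two layers do not produce the same output shape, so this is not a like-for-like replacement, but it shows why weight sharing makes convolutional layers far cheaper to store.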

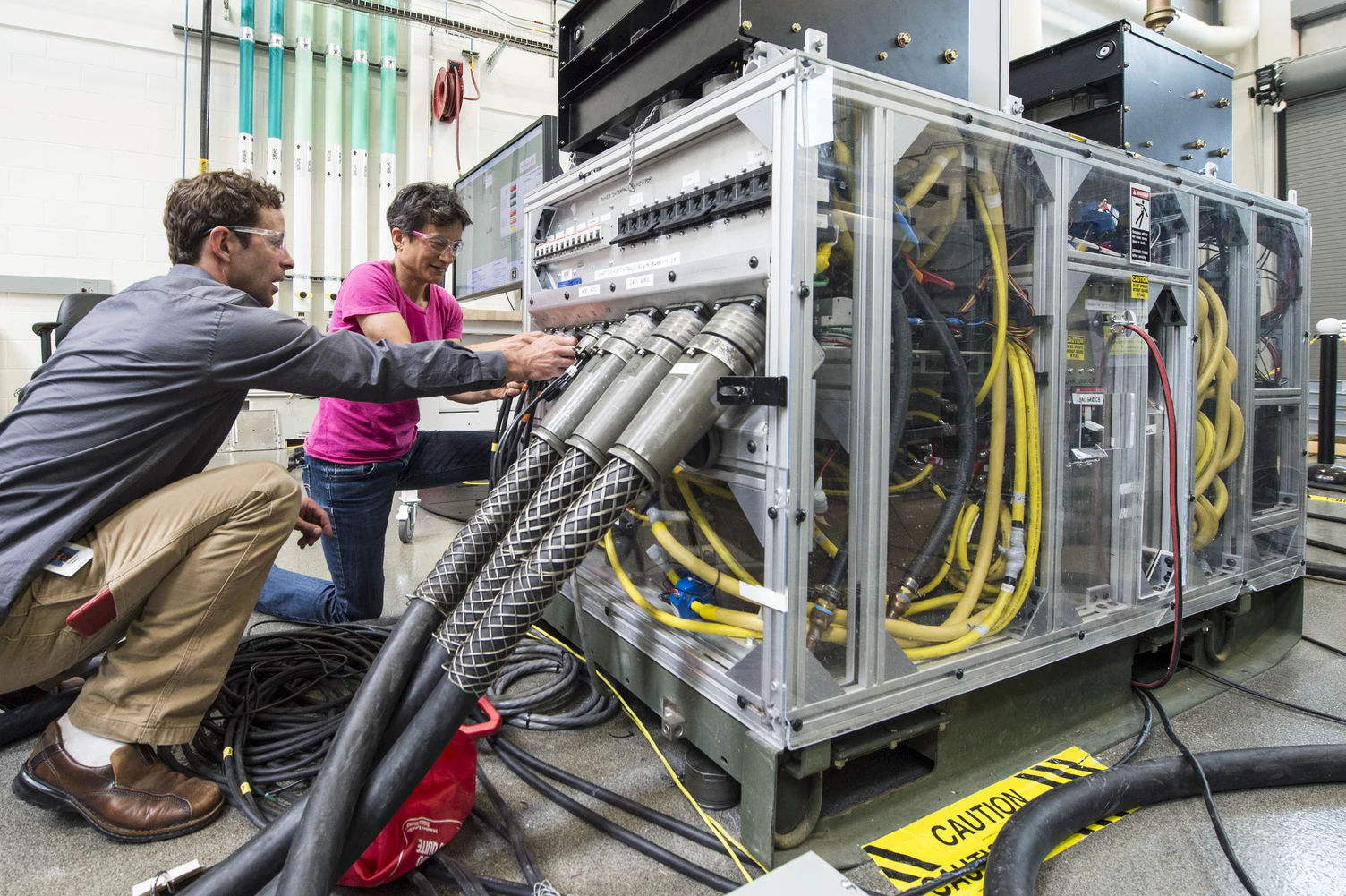

Infrastructure

Efficiency eventually boils down to how well software is integrated with hardware. A great algorithm with a poor compute set-up can falter. This is where selecting frameworks, GPUs and TPUs becomes crucial. For example, TensorFlow Lite is designed for inference in low-resource environments. PyTorch also has a lightweight interpreter that enables running PyTorch models on mobile, with native runtimes for Android and iOS. Similar to TFLite, PyTorch offers post-training quantisation and other graph optimisation steps for optimising convolutional layers. When it comes to hardware, choosing a suitable GPU or TPU also plays into the overall efficiency. Over the years, Nvidia has released several iterations of its GPU microarchitectures with an increasing focus on deep learning performance. It has also introduced Tensor Cores designed for deep learning applications.

The aforementioned checklist offers a framework to monitor the efficiency of ML pipelines.

Rules of thumb:

- Can the model graph be compressed?

- Can the training be better?

- Can automated search find better models?

- Can more efficient layers and architectures be used?

Read the original survey here.