In recent years, if you have explored data science, you have probably come across the term “Natural Language Processing” and heard how it is changing the face of data analytics. But what exactly is Natural Language Processing? Natural language refers to the way humans communicate and connect. Today, we are surrounded by text and voice, the two most essential ways of communicating with each other and with the society around us. As a result, a great deal of unstructured data in the form of voice and text is generated every day. Given the importance of such data, we need methods to understand and reason about the natural language it contains, just as we have for other types of data. Natural language processing, or NLP, is the branch of computer science, and more specifically of artificial intelligence, concerned with giving computers the ability to understand text and spoken words in much the same way human beings can.

NLP combines computational linguistics, the rule-based modeling of human language, with statistical, machine learning, and deep learning models. Together, these technologies enable computers to process human language in the form of text or voice data and to ‘understand’ its meaning, including the writer’s intent and sentiment. More broadly, NLP can be defined as the automatic manipulation of natural language by software or an algorithm. Machine learning practitioners and data scientists are increasingly interested in working with text data and in the tools and methods of NLP. Conversations, forms, and tweets are typical examples of unstructured data: data that doesn’t fit neatly into the traditional row-and-column structure of relational databases. Unstructured data makes up the vast majority of the data available in the real world, and it is messy and hard to manipulate. Advances in disciplines like machine learning, however, have made it far more practical to analyze.

Natural language processing helps computers communicate with humans in their own language and assists with language-related tasks. NLP makes it possible for computers to read text, hear speech, interpret it, measure sentiment, and determine which parts are important. Machines can now process far more language data than humans can, and do so consistently and at scale. Given the vast amount of unstructured data generated every day, from medical records to social media, automation has become critical for analyzing text and speech data efficiently. Modern NLP systems are typically built on machine learning: instead of hand-coding large sets of rules, they learn those rules automatically by analyzing a large corpus of text and making statistical inferences from it. In general, the more data a model is trained on, the more accurate it tends to be.
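As a toy illustration of learning from a corpus by statistical inference, the sketch below estimates bigram probabilities, P(next word | current word), simply by counting adjacent word pairs in a tiny made-up corpus. It is far simpler than the neural models used later in this article, and `train_bigram_model` is a hypothetical helper written only for illustration, but the principle of deriving behaviour from data rather than from hand-written rules is the same.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Estimate P(next word | current word) by counting adjacent word pairs."""
    pair_counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the sentence boundaries.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            pair_counts[current][nxt] += 1
    # Normalize the raw counts into conditional probabilities.
    return {
        current: {w: c / sum(counts.values()) for w, c in counts.items()}
        for current, counts in pair_counts.items()
    }

# A tiny, made-up corpus used only for illustration.
corpus = [
    "The model reads text",
    "The model writes text",
    "The user reads text",
]
model = train_bigram_model(corpus)
print(model["the"])  # "model" follows "the" in 2 of 3 sentences, "user" in 1
```

No rule such as "after 'the' usually comes 'model'" was written by hand; it emerges from the counts, and more data gives better estimates.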

PEGASUS Transformer for NLP

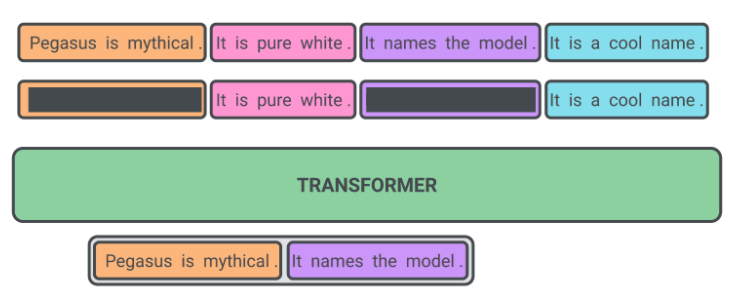

Transfer learning and pretrained language models have pushed forward the limits of language understanding and generation in NLP. Applying transfer learning and transformers to different NLP tasks has become a main trend in recent research. Transformer encoder-decoder models have become popular for summarization because they appear to be more effective at modeling the dependencies in the long sequences that summarization involves. The pre-training task of PEGASUS is deliberately similar to summarization: several whole sentences judged to be important are removed and masked from an input document, and the model is trained to generate them, concatenated into one output sequence, from the remaining sentences. The input for pre-training is therefore a document with missing sentences, and the output is the missing sentences joined together, which closely resembles a summary. The advantage of this self-supervision is that you can create as many training examples as there are documents, without any human annotation, which is often the bottleneck in purely supervised systems.
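The sentence-masking idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the PEGASUS implementation: it scores each sentence by a crude unigram-overlap F1 against the rest of the document (loosely modelled on the paper's ROUGE-1-based selection of important sentences), masks the top-scoring sentences with a placeholder token, and returns the masked document as the model input and the removed sentences as the target. The helper names, the `[MASK1]` string, and the default `gap_ratio=0.3` are chosen for illustration only.

```python
import re
from collections import Counter

MASK_TOKEN = "[MASK1]"  # placeholder sentence-mask token (illustrative)

def unigram_f1(candidate, reference):
    """Rough ROUGE-1-style F1: bag-of-words overlap between two texts."""
    cand = Counter(re.findall(r"\w+", candidate.lower()))
    ref = Counter(re.findall(r"\w+", reference.lower()))
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

def make_gap_sentence_example(sentences, gap_ratio=0.3):
    """Mask the highest-scoring sentences and return (model input, target)."""
    n_masked = max(1, round(len(sentences) * gap_ratio))
    # Score each sentence independently against the rest of the document.
    scores = [
        unigram_f1(s, " ".join(sentences[:i] + sentences[i + 1:]))
        for i, s in enumerate(sentences)
    ]
    # Take the top-n indices (the stable sort keeps earlier sentences on ties),
    # then restore document order for the target.
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen = sorted(ranked[:n_masked])
    source = " ".join(MASK_TOKEN if i in chosen else s for i, s in enumerate(sentences))
    target = " ".join(sentences[i] for i in chosen)
    return source, target

document = [
    "PEGASUS is a transformer model for abstractive summarization.",
    "During pre-training, whole sentences are removed from a document.",
    "The model learns to generate the removed sentences from the rest of the document.",
]
source, target = make_gap_sentence_example(document)
print("Input: ", source)
print("Target:", target)
```

Every document yields a training pair this way, with no human labels involved, which is the self-supervision advantage described above.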

Getting Started With a Text Paraphrasing Model

In this article, we will create an NLP paraphrasing model using the renowned PEGASUS model. The model will generate paraphrases of an input sentence, and we will compare them with the original sentence. The following code is inspired by the creators of PEGASUS; links to their different use cases can be found here.

Installing the Dependencies

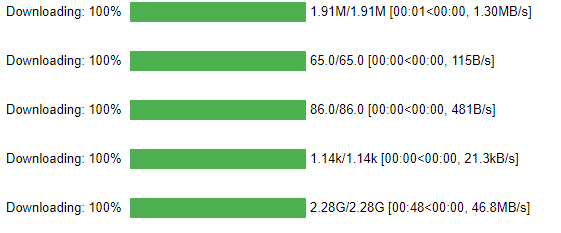

The first step is to install the required dependencies: sentence-splitter, which splits our paragraphs into sentences; transformers, which provides the PEGASUS model and tokenizer; and SentencePiece, the subword tokenization library the PEGASUS tokenizer uses to encode and decode sentences.

# Installing dependencies
!pip install sentence-splitter
!pip install transformers
!pip install SentencePiece

Setting Up the PEGASUS Model

Next, we will set up the PEGASUS transformer model and configure its generation settings, such as the maximum sequence length and the number of beams.

# Importing the PEGASUS transformer model
import torch
from transformers import PegasusForConditionalGeneration, PegasusTokenizer

model_name = 'tuner007/pegasus_paraphrase'
torch_device = 'cuda' if torch.cuda.is_available() else 'cpu'
tokenizer = PegasusTokenizer.from_pretrained(model_name)
model = PegasusForConditionalGeneration.from_pretrained(model_name).to(torch_device)

# Helper that returns num_return_sequences paraphrases of input_text
def get_response(input_text, num_return_sequences):
    # Tokenize the input (truncated to 60 tokens) and move it to the model's device
    batch = tokenizer([input_text], truncation=True, padding='longest', max_length=60, return_tensors="pt").to(torch_device)
    # Beam search with 10 beams; num_return_sequences must not exceed num_beams.
    # temperature is omitted because it only has an effect when do_sample=True.
    translated = model.generate(**batch, max_length=60, num_beams=10, num_return_sequences=num_return_sequences)
    # Decode the generated token IDs back into text
    tgt_text = tokenizer.batch_decode(translated, skip_special_tokens=True)
    return tgt_text
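A note on decoding settings: in Hugging Face transformers, passing `temperature` to `model.generate` only rescales the model's scores when sampling is enabled (`do_sample=True`); with plain beam search it does not change the output. To show what the parameter does when sampling is on, here is a minimal softmax-with-temperature sketch in plain Python. The logits are made-up numbers, and `softmax_with_temperature` is a hypothetical helper, not part of the transformers API.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, rescaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
print(softmax_with_temperature(logits, 1.0))
print(softmax_with_temperature(logits, 1.5))  # flatter: rarer tokens gain probability
```

A temperature above 1 flattens the distribution, so sampling picks less likely tokens more often and produces more varied paraphrases. To use it with this model you would switch to sampling, for example `model.generate(**batch, do_sample=True, temperature=1.5, max_length=60, num_return_sequences=5)`; that is a different decoding strategy from the beam search above, and its outputs change from run to run.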

Testing the Model

Now, let’s pass a test sentence to the model and check that it works:

# Test input sentence
text = "I will be showing you how to build a web application in Python using the SweetViz and its dependent library."

# Printing the response
get_response(text, 5)

Output :

['I will show you how to use the SweetViz and its dependent library to build a web application.',
'I will show you how to use the SweetViz library to build a web application.',
'I will show you how to build a web application using the SweetViz and its dependent library.',
'I will show you how to use the SweetViz and its dependent library to build a web application in Python.',
'I will show you how to build a web application in Python using the SweetViz library.']

Because we set num_return_sequences to 5, the model returned five different paraphrases.
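Where do the five candidates come from? With beam search, the decoder keeps the `num_beams` highest-scoring partial sequences at each step and finally returns the best `num_return_sequences` finished ones, which is why `num_return_sequences` cannot exceed `num_beams` (10 in `get_response`). The toy sketch below illustrates the idea over a hand-written, hypothetical next-token table. It is a simplification (no length penalty, and finished hypotheses are not replaced by new beams) and not how transformers implements beam search internally.

```python
import math

# Hypothetical next-token probabilities, keyed by the previous token only.
# A real model conditions on the whole input sentence and the full prefix.
NEXT_TOKEN_PROBS = {
    "<s>": {"I": 0.6, "We": 0.4},
    "I": {"will": 0.7, "can": 0.3},
    "We": {"will": 0.9, "can": 0.1},
    "will": {"show": 0.8, "</s>": 0.2},
    "can": {"show": 0.5, "</s>": 0.5},
    "show": {"</s>": 1.0},
}

def beam_search(num_beams, num_return_sequences, max_steps=10):
    """Toy beam search returning the best num_return_sequences finished outputs."""
    if num_return_sequences > num_beams:
        raise ValueError("num_return_sequences must not exceed num_beams")
    beams = [(["<s>"], 0.0)]  # each beam: (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_steps):
        # Expand every active beam with every possible next token.
        candidates = [
            (tokens + [tok], score + math.log(p))
            for tokens, score in beams
            for tok, p in NEXT_TOKEN_PROBS[tokens[-1]].items()
        ]
        # Keep only the num_beams highest-scoring candidates.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:num_beams]:
            if tokens[-1] == "</s>":
                finished.append((tokens, score))  # hypothesis is complete
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.sort(key=lambda c: c[1], reverse=True)
    # Strip the start and end markers before returning.
    return [" ".join(tokens[1:-1]) for tokens, _ in finished[:num_return_sequences]]

print(beam_search(num_beams=3, num_return_sequences=2))  # ['I will show', 'We will show']
```

Asking for more return sequences simply exposes more of the surviving beams, which is why the five paraphrases above are close variations of each other.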

Let’s try the same on a longer paragraph.

# Paragraph of text
context = "I will be showing you how to build a web application in Python using the SweetViz and its dependent library. Data science combines multiple fields, including statistics, scientific methods, artificial intelligence (AI), and data analysis, to extract value from data. Those who practice data science are called data scientists, and they combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights."
print(context)

Because get_response truncates its input to 60 tokens (max_length=60), passing the whole paragraph at once would cut it short. We therefore first split the paragraph into sentences using the sentence-splitter library:

# Take the input paragraph and split it into a list of sentences
from sentence_splitter import SentenceSplitter

splitter = SentenceSplitter(language='en')
sentence_list = splitter.split(context)
sentence_list

Output :

['I will be showing you how to build a web application in Python using the SweetViz and its dependent library.',
'Data science combines multiple fields, including statistics, scientific methods, artificial intelligence (AI), and data analysis, to extract value from data.',
'Those who practice data science are called data scientists, and they combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights.']
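Why use a library rather than splitting on full stops? Abbreviations make naive splitting unreliable, and dedicated splitters such as sentence-splitter rely on language-specific knowledge of such cases. The sketch below is not the library's algorithm; it contrasts a naive regex split with a variant that refuses to break after a small, hypothetical list of abbreviations. Both function names and the abbreviation list are invented for illustration.

```python
import re

def naive_split(text):
    """Break after '.', '!' or '?' whenever whitespace follows."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

# A tiny, hypothetical abbreviation list; real splitters use far larger ones.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "e.g.", "i.e.", "etc."}

def abbreviation_aware_split(text):
    """Like naive_split, but never ends a sentence on a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if token[-1] in ".!?" and token.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # keep any trailing text without final punctuation
        sentences.append(" ".join(current))
    return sentences

sample = "I met Dr. Smith. He waved."
print(naive_split(sample))               # ['I met Dr.', 'Smith.', 'He waved.']
print(abbreviation_aware_split(sample))  # ['I met Dr. Smith.', 'He waved.']
```

For the paragraph in this tutorial both approaches would agree, but on real-world text the difference matters, because each wrongly split fragment would be paraphrased on its own.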

Next, we loop over the list of sentences and paraphrase each one, asking the model for a single paraphrase per sentence:

# Paraphrase each sentence; get_response returns a list, so paraphrase becomes a list of lists
paraphrase = []
for sentence in sentence_list:
    a = get_response(sentence, 1)
    paraphrase.append(a)

# Displaying the paraphrased sentences
paraphrase

Output :

[['I will show you how to use the SweetViz and its dependent library to build a web application.'],
['Data science combines multiple fields, including statistics, scientific methods, and data analysis, to extract value from data.'],
['Data scientists combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights.']]

# Flatten the list of one-element lists into a list of strings
paraphrase2 = [' '.join(x) for x in paraphrase]
paraphrase2

Output :

['I will show you how to use the SweetViz and its dependent library to build a web application.',
'Data science combines multiple fields, including statistics, scientific methods, and data analysis, to extract value from data.',
'Data scientists combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights.']

# Combine the paraphrased sentences into a single paragraph
# (joining directly avoids stripping brackets and quotes from str(list),
# which breaks when a sentence itself contains quotes)
paraphrased_text = ' '.join(paraphrase2)
paraphrased_text

Output :

I will show you how to use the SweetViz and its dependent library to build a web application. Data science combines multiple fields, including statistics, scientific methods, and data analysis, to extract value from data. Data scientists combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights.
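The loop, flattening, and joining steps above can be folded into one small function. This is a minimal sketch, assuming a paraphrasing function with the same calling convention as `get_response` (a text and a number of sequences in, a list of strings out); `paraphrase_paragraph` and `fake_paraphrase` are hypothetical names, and the demo uses a stand-in function instead of the real model so that it runs without downloading anything.

```python
from typing import Callable, List

def paraphrase_paragraph(
    sentences: List[str],
    paraphrase_fn: Callable[[str, int], List[str]],
) -> str:
    """Paraphrase each sentence and join the results into one paragraph."""
    rewritten = []
    for sentence in sentences:
        # Ask for a single paraphrase; fall back to the original if none comes back.
        candidates = paraphrase_fn(sentence, 1)
        rewritten.append(candidates[0] if candidates else sentence)
    return " ".join(rewritten)

# Stand-in for get_response, used only to demonstrate the flow.
def fake_paraphrase(text: str, n: int) -> List[str]:
    return [text.replace("will be showing", "will show")][:n]

print(paraphrase_paragraph(["I will be showing you.", "Done."], fake_paraphrase))
# prints: I will show you. Done.
```

With the real model, the whole pipeline becomes `paraphrased_text = paraphrase_paragraph(sentence_list, get_response)`.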

Finally, let’s compare the generated paragraph with the original one:

# Comparison of the original (context) and the paraphrased version (paraphrased_text)
print("Original :")
print(context)
print("Generated Paraphrase :")
print(paraphrased_text)

Output :

Original :
I will be showing you how to build a web application in Python using the SweetViz and its dependent library. Data science combines multiple fields, including statistics, scientific methods, artificial intelligence (AI), and data analysis, to extract value from data. Those who practice data science are called data scientists, and they combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights.

Generated Paraphrase :
I will show you how to use the SweetViz and its dependent library to build a web application. Data science combines multiple fields, including statistics, scientific methods, and data analysis, to extract value from data. Data scientists combine a range of skills to analyze data collected from the web, smartphones, customers, sensors, and other sources to derive actionable insights.

The generated paragraph is more concise and arguably easier to read. Note, however, that it also drops some details, such as “in Python” and “artificial intelligence (AI)”, so generated paraphrases are worth checking before use.
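The comparison above is done by eye. A minimal sketch for quantifying it uses Python's standard-library `difflib`: `word_similarity` gives a 0 to 1 ratio of matching word sequences, and `dropped_words` lists words of the original that appear nowhere in the paraphrase. Both helpers are hypothetical, and word overlap is only a rough proxy; it says nothing about whether the meaning was preserved.

```python
import difflib
import string

def normalize(text):
    """Lower-cased words with surrounding punctuation removed."""
    words = (w.strip(string.punctuation).lower() for w in text.split())
    return [w for w in words if w]

def word_similarity(original, paraphrase):
    """SequenceMatcher ratio over word sequences; 1.0 means identical wording."""
    return difflib.SequenceMatcher(None, normalize(original), normalize(paraphrase)).ratio()

def dropped_words(original, paraphrase):
    """Words of the original that do not appear anywhere in the paraphrase."""
    kept = set(normalize(paraphrase))
    return [w for w in normalize(original) if w not in kept]

original = "I will be showing you how to build a web application in Python using the SweetViz and its dependent library."
generated = "I will show you how to use the SweetViz and its dependent library to build a web application."
print(round(word_similarity(original, generated), 2))
print(dropped_words(original, generated))  # ['be', 'showing', 'in', 'python', 'using']
```

Applied to `context` and `paraphrased_text`, the same two calls flag omissions such as “python” and “artificial intelligence (AI)” automatically.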

EndNotes

In this article, we built a text paraphrasing model using NLP methods. We also learned about the PEGASUS transformer model, its sentence-masking pre-training objective, and how it simplifies the paraphrasing process. The full implementation can be found as a Colab notebook through the link here.

Happy Learning!