Bias in AI models is a pervasive problem. Many businesses and institutions base decisions on AI systems in which algorithms learn from massive amounts of data. These models determine how much credit financial institutions offer customers, whom healthcare systems prioritise for vaccines and which candidates are eligible for a job. Bias in AI and ML can amplify and reinforce harmful prejudices.

According to Appen’s 2020 State of AI and Machine Learning Report, only 24% of companies considered unbiased and diverse AI critically important, implying that many companies have made no commitment to overcoming bias in AI and ML. Further, 15% of companies reported data diversity and bias reduction as irrelevant.

Representative training

The underlying data is the main source of the issue, so understanding the training data is paramount. The way data is collected can also introduce bias. For example, oversampling areas that are overpoliced results in more recorded crime there, which a model can mistake for a higher underlying crime rate. It’s critical to ensure the training data is representative and inclusive.
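
One rough way to check representativeness is to compare each group's share of the training data with its share of a reference population. The sketch below assumes such reference shares are available; the district names, counts and population shares are hypothetical and only illustrate the idea.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return (observed share - reference share) for each reference group."""
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical district labels attached to training records.
training_districts = ["north"] * 70 + ["south"] * 20 + ["east"] * 10
# Hypothetical population shares, for example taken from census figures.
population_shares = {"north": 0.40, "south": 0.35, "east": 0.25}

gaps = representation_gaps(training_districts, population_shares)
for district, gap in sorted(gaps.items()):
    # A positive gap means the district is over-represented in the data.
    print(f"{district}: {gap:+.2f}")
```

In this made-up data the north district supplies 70% of the records but only 40% of the population, so a model trained on it would see that district far more often than it should.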

ML models also reflect the biases of those who build them, including designers, data scientists, engineers and developers. A study by Latanya Sweeney found statistically significant discrimination in ad delivery based on searches of 2184 racially associated personal names across two websites.

Establishing a debiasing strategy

A debiasing strategy helps identify potential sources of bias, such as labels that reveal traits that can affect the accuracy of the model. Organisational strategies in which metrics and processes are transparent are also key. Data collection can be improved using internal red teams and third-party auditors. Training datasets should be updated frequently to cover new cases and stay current.

Tools to reduce bias

AI Fairness 360: IBM has released an awareness and debiasing tool, under the AI Fairness project, to detect and mitigate bias in datasets and machine learning models. The open-source library can help developers test models and datasets with a comprehensive set of metrics and mitigate bias with 12 packaged algorithms, such as Reject Option Classification and Disparate Impact Remover. The toolkit, however, has a practical limitation: its bias detection and mitigation algorithms are designed only for binary classification problems.
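
To give a sense of the kind of metric such a toolkit reports, the sketch below computes a disparate impact ratio for a binary classifier in plain Python. It does not call the AI Fairness 360 API; the function names, group labels and predictions are hypothetical, and the 0.8 cut-off is the commonly cited "four-fifths" rule of thumb rather than anything taken from the toolkit.

```python
def selection_rate(outcomes):
    """Share of favourable (1) outcomes in a list of binary predictions."""
    return sum(outcomes) / len(outcomes)


def disparate_impact(outcomes, groups, unprivileged, privileged):
    """Ratio of favourable-outcome rates: unprivileged over privileged."""
    unpriv = [y for y, g in zip(outcomes, groups) if g == unprivileged]
    priv = [y for y, g in zip(outcomes, groups) if g == privileged]
    return selection_rate(unpriv) / selection_rate(priv)


# Hypothetical binary predictions (1 = approved) and group membership.
predictions = [1, 0, 0, 0, 1, 1, 1, 0, 1, 1]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

ratio = disparate_impact(predictions, groups, unprivileged="a", privileged="b")
print(f"disparate impact: {ratio:.2f}")
if ratio < 0.8:  # rule-of-thumb threshold, an assumption of this sketch
    print("possible adverse impact on group 'a'")
```

A ratio of 1.0 would mean both groups receive favourable outcomes at the same rate; the further below 1.0, the less often the unprivileged group is favoured.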

Watson OpenScale: IBM’s Watson OpenScale checks for bias in real time as the AI makes decisions. It allows businesses to automate AI models irrespective of how they were built and is available via IBM Cloud.

Google’s What-If Tool: Google’s WIT lets users test performance in hypothetical situations, analyse the importance of data features and visualise model behaviour across subsets of input data and ML fairness metrics.
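
The idea of testing a model in hypothetical situations can be sketched without the tool itself: change one feature of each record, leave everything else fixed and count how often the prediction changes. The example below is a minimal illustration in plain Python, not the What-If Tool's API. The scoring rule, feature names and records are all hypothetical, and the rule is deliberately biased so that the check has something to find.

```python
def toy_model(applicant):
    """Hypothetical scoring rule standing in for a trained model."""
    score = (0.5 * applicant["income"] / 100_000
             + 0.3 * applicant["years_employed"] / 10)
    if applicant["group"] == "b":
        score -= 0.2  # deliberate bias, included for illustration only
    return score >= 0.4


def flip_rate(model, records, feature, swap):
    """Share of records whose prediction changes when `feature` is swapped."""
    changed = 0
    for record in records:
        # Copy the record and replace only the feature under test.
        counterfactual = dict(record, **{feature: swap[record[feature]]})
        if model(record) != model(counterfactual):
            changed += 1
    return changed / len(records)


applicants = [
    {"income": 60_000, "years_employed": 5, "group": "a"},
    {"income": 90_000, "years_employed": 8, "group": "b"},
    {"income": 30_000, "years_employed": 2, "group": "a"},
    {"income": 70_000, "years_employed": 6, "group": "b"},
]

rate = flip_rate(toy_model, applicants, "group", {"a": "b", "b": "a"})
print(f"predictions changed by swapping group: {rate:.0%}")
```

If the sensitive attribute had no influence on the model, the flip rate would be zero; any non-zero rate points to records worth inspecting by hand.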

Aequitas: Developed by the Center for Data Science and Public Policy, Aequitas is an open-source bias audit toolkit that can be used to audit the predictions of ML-based risk-assessment tools.

Feedback loop

A common but major mistake is deploying an AI model without a way for end users to give feedback on how the model is working in real life. Opening discussion forums or conducting surveys for feedback can help keep the model performing well.

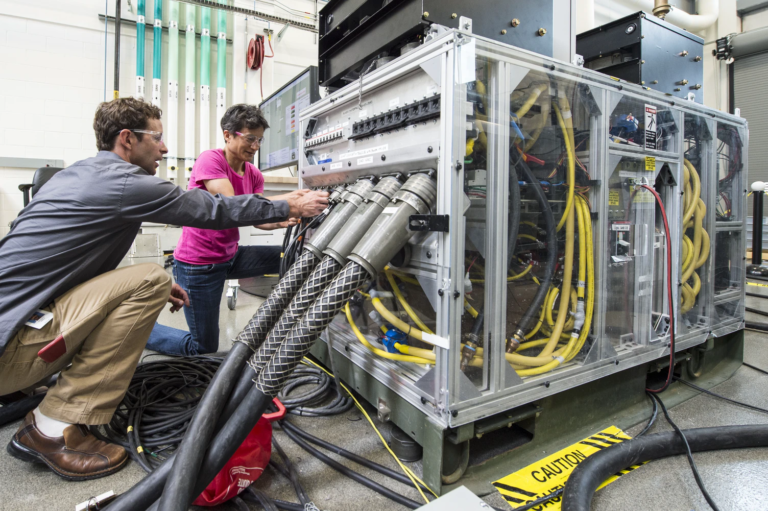

Rigorous testing

Each component of the system should be tested in isolation. Integration tests should then be run to understand how individual ML components interact with other parts of the system. It is also important to detect input drift proactively by testing the statistics of inputs to check whether they are changing in unexpected ways. Two further boxes to check are testing the model against a gold-standard dataset and applying the quality engineering principle of poka-yoke (mistake-proofing), so that unintended failures either cannot occur or trigger an immediate response.
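
A simple form of input drift detection compares the mean of a live batch of one feature with the mean seen at training time. The sketch below uses only the Python standard library; the feature values and the threshold of 3 standard errors are assumptions chosen for illustration, and a production check would usually cover more statistics than the mean.

```python
import statistics


def mean_shift_z(reference, live):
    """Z-score of the live batch mean against the reference distribution."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    # Standard error of the mean for a batch the size of `live`.
    standard_error = ref_std / len(live) ** 0.5
    return (statistics.fmean(live) - ref_mean) / standard_error


def has_drifted(reference, live, threshold=3.0):
    """Flag drift when the batch mean sits more than `threshold` SEs away."""
    return abs(mean_shift_z(reference, live)) > threshold


# Hypothetical values of one input feature (for example, applicant age).
reference = [30, 32, 35, 31, 29, 33, 34, 30, 32, 31]
live_stable = [31, 30, 33, 32, 31]
live_shifted = [45, 47, 44, 46, 48]

print("stable batch drifted:", has_drifted(reference, live_stable))
print("shifted batch drifted:", has_drifted(reference, live_shifted))
```

Running a check like this on every incoming batch, and alerting when it fires, is one way to make unexpected input changes trigger an immediate response rather than silently degrade the model.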

Model limitations

A comprehensive understanding of an AI model helps ensure its proper use and reveals its drawbacks. For example, a model trained to detect correlations should not be used for causal inference. Users should be told about the model’s limitations wherever possible.