A neural network is built from layers, each designed for a particular predefined function. These layers perform mathematical operations on the data to achieve the goal of the network. Common examples include the input, output, dense, and flatten layers. The lambda layer is another such layer: it transforms data as it passes between the layers of a network. In this article, we give an overview of the lambda layer, explain why it matters, and show how it can be applied to a network. The major points to be discussed are listed below.

Table of Contents

- What is a Lambda Layer?

- Building a Keras Model

- Keras Model with Lambda Layer

- Defining Function

- Lambda Layer in Network

- Difference Between the Models

What is a Lambda Layer?

Neural networks use many kinds of layers, such as convolutional layers, padding layers, and LSTM layers, and each of these has its own predefined function. Similarly, the lambda layer has its own job: it wraps an arbitrary expression or function so that it can be applied to the input data as a layer. Using the lambda layer, we can therefore transform the data inside the network. Keras provides the lambda layer with the following signature:

keras.layers.Lambda(function, output_shape = None, mask = None, arguments = None)

Put more simply, the lambda layer lets us transform the data before passing it to any of the existing layers. For example, if we want to square every value in the input data, we can give the lambda layer the following expression:

lambda x: x ** 2
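As a minimal sketch of how this expression behaves once wrapped in a layer, the snippet below calls a Lambda layer directly on a small constant tensor. The layer names `square` and `cube`, the sample values, and the keyword `p` are illustrative assumptions, not part of the article's model; the second layer shows one way the `arguments` parameter can pass an extra keyword to the function.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

# Wrap the squaring expression in a Lambda layer (the name is illustrative).
square = layers.Lambda(lambda x: x ** 2, name="square")

# A tiny batch with one row of three values.
x = tf.constant([[1.0, 2.0, 3.0]])
squared = square(x).numpy()
print(squared)  # each value of x, squared

# `arguments` forwards extra keyword arguments to the wrapped function.
cube = layers.Lambda(lambda x, p: x ** p, arguments={"p": 3}, name="cube")
cubed = cube(x).numpy()
print(cubed)  # each value of x, raised to the power 3
```

Calling the layer eagerly like this is only for inspection; inside a model, the same layer is applied to the output of the previous layer.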

The lambda layer can be thought of as a form of data preprocessing. Traditionally, preprocessing is performed before modelling, but with the lambda layer we can process the data in the middle of the model. The rest of this article shows how the lambda layer can be applied to a neural network built using Keras.

Building a Keras Model

Keras offers three APIs for building networks:

- Functional API

- Sequential API

- Model Subclassing API

In this article, we use the Functional API to build a custom model. The model will recognize images of handwritten digits from the MNIST dataset.
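To make the difference between the three APIs concrete, here is a hedged sketch that builds the same small two-layer classifier in each style. The helper names `build_sequential`, `build_functional`, and `SubclassedModel`, as well as the hidden size of 32, are assumptions chosen for illustration and are unrelated to the model built later in the article.

```python
import numpy as np
from tensorflow.keras import layers, models


def build_sequential():
    # Sequential API: a plain stack of layers, one after another.
    return models.Sequential([
        layers.Input(shape=(784,)),
        layers.Dense(32, activation="relu"),
        layers.Dense(10, activation="softmax"),
    ])


def build_functional():
    # Functional API: layers are called on tensors, so the graph is explicit.
    inputs = layers.Input(shape=(784,))
    hidden = layers.Dense(32, activation="relu")(inputs)
    outputs = layers.Dense(10, activation="softmax")(hidden)
    return models.Model(inputs, outputs)


class SubclassedModel(models.Model):
    # Model Subclassing API: layers are attributes, the forward pass is call().
    def __init__(self):
        super().__init__()
        self.hidden = layers.Dense(32, activation="relu")
        self.out = layers.Dense(10, activation="softmax")

    def call(self, inputs):
        return self.out(self.hidden(inputs))


# Run a random batch of 4 flattened "images" through each model.
batch = np.random.rand(4, 784).astype("float32")
all_models = (build_sequential(), build_functional(), SubclassedModel())
shapes = [tuple(m(batch).shape) for m in all_models]
print(shapes)  # three identical (batch, classes) shapes
```

The Functional API is convenient here because intermediate tensors keep their own names, which makes it easy to insert a layer between two existing ones or to build sub-models that stop at an intermediate tensor.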

Before building the model, recall that a neural network is made by stacking layers on top of one another. These layers are available from the layers module of Keras, and we use TensorFlow as the backend for Keras.

Let’s start the modelling by importing the required modules.

import numpy as np

import matplotlib.pyplot as plt

from tensorflow.keras import layers, models, datasets, utils, optimizers

Before modelling, we need to load and preprocess the data. As mentioned above, we are using the MNIST data, which can be loaded from the datasets module of Keras.

(X_train, Y_train), (x_test, y_test) = datasets.mnist.load_data()

The images in the data can be visualized using matplotlib.

plt.figure(figsize=(20, 4))

print("Train images")

for i in range(10, 20, 1):

    plt.subplot(2, 10, i+1)

    plt.imshow(X_train[i,:,:], cmap='gray')

plt.show()

Here we can see that the data consists of images of handwritten digits. Each image is 28 x 28 pixels, that is, 784 values. Before feeding the data to the model, we need to preprocess it so that it matches what the model expects. We scale the pixel values to the range [0, 1], convert them to float64, and reshape each image into a vector of 784 values so that the model can process them more easily.
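To make the scaling and reshaping concrete, here is a minimal sketch on synthetic data. The random array `images` stands in for MNIST (an assumption made so the example runs without downloading anything), and the variable names are illustrative.

```python
import numpy as np

# Synthetic stand-in for MNIST: 5 "images" of 28 x 28 pixels in [0, 255].
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

# Scale to [0, 1] as float64, then flatten each image into a 784-vector.
scaled = images.astype(np.float64) / 255.0
flat = scaled.reshape((scaled.shape[0], -1))

print(flat.shape)  # (5, 784)
print(flat.dtype)  # float64
```

The same steps are applied to the real MNIST arrays: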

x_train = X_train.astype(np.float64) / 255.0

x_test = x_test.astype(np.float64) / 255.0

x_train = x_train.reshape((x_train.shape[0], np.prod(x_train.shape[1:])))

x_test = x_test.reshape((x_test.shape[0], np.prod(x_test.shape[1:])))

# One-hot encode the labels, as required by the categorical_crossentropy loss used below

y_train = utils.to_categorical(Y_train, num_classes=10)

y_test = utils.to_categorical(y_test, num_classes=10)

Now we start building the network using the layers from the layers module of Keras.

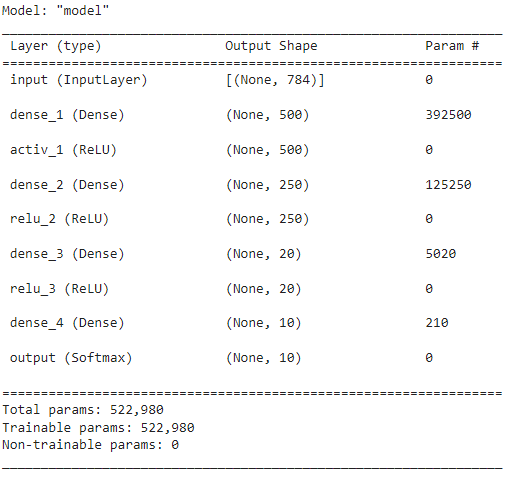

In this section, we build a model from dense layers and activation layers placed between an input layer and an output layer. The input layer takes a shape argument that defines the shape of the input, and each dense layer takes a units argument that specifies its number of neurons. Each dense layer can be followed by an activation layer.
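As a rough sketch of what a dense layer followed by a ReLU activation computes, the NumPy snippet below applies an affine transformation and then clips negative values to zero. The random weights and the variable names are assumptions for illustration; Keras creates and trains its own weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# A batch of 2 flattened inputs with 784 features each.
x = rng.random((2, 784))

# Dense layer with 500 units: one weight per (input, unit) pair plus one bias per unit.
weights = rng.normal(scale=0.05, size=(784, 500))
bias = np.zeros(500)
pre_activation = x @ weights + bias

# ReLU activation: negative values become zero, positive values pass through.
activated = np.maximum(pre_activation, 0.0)

print(activated.shape)  # (2, 500)
```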

We can stack these layers using the following code:

input = layers.Input(shape=(784,), name="input")

dense_1 = layers.Dense(units=500, name="dense_1")(input)

activ_1 = layers.ReLU(name="activ_1")(dense_1)

After this, we can stack some more layers on the network:

dense_2 = layers.Dense(units=250, name="dense_2")(activ_1)

activ_2 = layers.ReLU(name="relu_2")(dense_2)

dense_3 = layers.Dense(units=20, name="dense_3")(activ_2)

activ_3 = layers.ReLU(name="relu_3")(dense_3)

The second-to-last layer of the network must be a dense layer with one neuron per class in the data set. Since we have 10 classes, we can define this dense layer as follows:

dense_4 = layers.Dense(units=10, name="dense_4")(activ_3)

After stacking all of these layers, we add an output layer to the network. Here the output layer is a softmax layer, which converts the 10 values into class probabilities:

output = layers.Softmax(name="output")(dense_4)
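For intuition, the following NumPy sketch shows what a softmax over 10 values does: the outputs are positive, sum to 1, and the largest input gets the largest probability. The `softmax` helper and the sample logits are illustrative assumptions, not the Keras implementation.

```python
import numpy as np


def softmax(logits):
    # Subtract the row maximum first for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# One row of 10 made-up scores, one per digit class.
logits = np.array([[2.0, 1.0, 0.1, -1.0, 0.0, 0.5, 3.0, -2.0, 1.5, 0.2]])
probs = softmax(logits)
predicted_class = int(probs.argmax(axis=-1)[0])

print(probs.sum())       # approximately 1.0
print(predicted_class)   # 6, the index of the largest score
```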

With the layers built, we are ready to connect them. Using the models module from Keras, we define the model by its input and output layers; all other layers lie between them. We can then compile the model using the compile function and check its summary:

model = models.Model(input, output, name="model")

model.compile(optimizer=optimizers.Adam(learning_rate=0.0005), loss="categorical_crossentropy")

model.summary()
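The parameter counts reported by the summary can be cross-checked by hand: a dense layer with n_in inputs and n_out units holds n_in * n_out weights plus n_out biases, while the ReLU and Softmax layers hold no parameters. A small sketch for the four dense layers above (the variable names are illustrative):

```python
# Input size followed by the number of units in each dense layer.
layer_sizes = [784, 500, 250, 20, 10]

# Weights plus biases for each consecutive pair of sizes.
params = [
    n_in * n_out + n_out
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]
total = sum(params)

print(params)  # [392500, 125250, 5020, 210]
print(total)   # 522980
```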

The summary shows the architecture of the model. Now we can fit the model on the MNIST data using the fit function:

model.fit(x_train, y_train, epochs=5, batch_size=256, validation_data=(x_test, y_test))

This completes a model built only from the existing Keras layers. In the next section, we will see how to perform custom operations using the lambda layer.

Keras Model with Lambda Layer

In the previous section, the model architecture had 4 dense layers. Now suppose we need to perform an operation on the data inside the network, such as adding 3 to each element. None of the layers used in the previous architecture does this, so we need a lambda layer, which is designed for exactly this kind of custom operation.

Defining Function

To build a model with a lambda layer, we first define a function that adds 3 to each value of a tensor.

def Function(tensor):

    return tensor + 3

We can pass this function as the function argument of the Keras Lambda layer, like the following:

lambda_l = layers.Lambda(Function, name="lambda_l")(dense_3)
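Before wiring the layer into the network, it can help to check the function in isolation. The sketch below wraps an equivalent function in a Lambda layer and calls it on a small constant tensor; the names `add_three` and `sample` and the sample values are assumptions for illustration. It also shows that a Lambda layer of this kind has no trainable weights.

```python
import tensorflow as tf
from tensorflow.keras import layers


def add_three(tensor):
    # Same operation as in the article: add 3 to every element.
    return tensor + 3


lambda_layer = layers.Lambda(add_three, name="add_three")

# One row of three made-up values.
sample = tf.constant([[0.0, -1.5, 2.0]])
shifted = lambda_layer(sample).numpy()

print(shifted.tolist())                     # [[3.0, 1.5, 5.0]]
print(len(lambda_layer.trainable_weights))  # 0
```

Because the layer carries no weights, it changes the values flowing through the network without adding anything for the optimizer to learn.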

Lambda Layer in Network

Now we can put the lambda layer in the network. The code for the network looks like the following:

input = layers.Input(shape=(784,), name="input")

dense_1 = layers.Dense(units=500, name="dense_1")(input)

activ_1 = layers.ReLU(name="activ_1")(dense_1)

dense_2 = layers.Dense(units=250, name="dense_2")(activ_1)

activ_2 = layers.ReLU(name="relu_2")(dense_2)

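dense_3 = layers.Dense(units=20, name="dense_3")(activ_2)

# lambda layer: adds 3 to every output of dense_3

def Function(tensor):

    return tensor + 3

lambda_l = layers.Lambda(Function, name="lambda_l")(dense_3)

activ_3 = layers.ReLU(name="relu_3")(lambda_l)

dense_4 = layers.Dense(units=10, name="dense_4")(activ_3)

output = layers.Softmax(name="output")(dense_4)

After connecting the layers, we build and compile the model as before and print its summary:

model = models.Model(input, output, name="model")

model.compile(optimizer=optimizers.Adam(learning_rate=0.0005), loss="categorical_crossentropy")

model.summary()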

In the summary, we can see the lambda layer placed right after the third dense layer.

Difference Between the Models

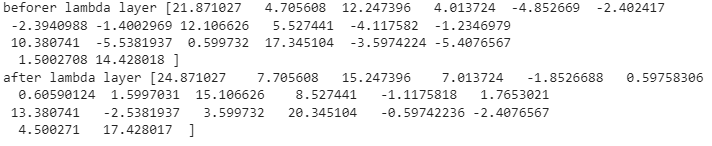

We can also compare the data before and after the lambda layer by creating two new models. The first model contains the layers from the input to the third dense layer, and the second contains the layers from the input to the lambda layer, like the following:

before_lambda = models.Model(input, dense_3, name="before_lambda")

after_lambda = models.Model(input, lambda_l, name="after_lambda")

After this, we can fit the model on the data and compute the outputs of all three models on the training data.

model.fit(x_train, y_train, epochs=5, batch_size=256, validation_data=(x_test, y_test))

pred = model.predict(x_train)

before = before_lambda.predict(x_train)

after = after_lambda.predict(x_train)

Let's print the data before and after the lambda layer.

print("before lambda layer", before[0, :])

print("after lambda layer", after[0, :])

In these outputs, we can see the effect of the lambda layer: each value after the lambda layer is 3 greater than the corresponding value before it.
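The same comparison can be checked numerically rather than by eye. The sketch below is a self-contained toy version of the idea, assuming a small untrained network with hypothetical layer names (`toy_input`, `toy_dense`, `toy_lambda`) and random inputs in place of MNIST.

```python
import numpy as np
from tensorflow.keras import layers, models

# Toy network: input -> dense -> lambda that adds 3.
inputs = layers.Input(shape=(8,), name="toy_input")
dense = layers.Dense(units=4, name="toy_dense")(inputs)
shifted = layers.Lambda(lambda t: t + 3, name="toy_lambda")(dense)

# Two sub-models that stop before and after the lambda layer.
before_model = models.Model(inputs, dense, name="toy_before")
after_model = models.Model(inputs, shifted, name="toy_after")

# Random batch standing in for real data.
batch = np.random.rand(16, 8).astype("float32")
before = before_model.predict(batch, verbose=0)
after = after_model.predict(batch, verbose=0)

# Every element should differ by 3, up to floating-point rounding.
difference = after - before
print(np.allclose(difference, 3.0, atol=1e-5))  # True
```

Both sub-models share the same dense layer, so the only difference between their outputs is the operation performed by the lambda layer.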

In practice, the reshaping and the successive layers inside a network can leave intermediate values poorly scaled by the time they reach the next layer, which affects how the neurons of that layer learn and can reduce the performance of the model. Using lambda layers, we can reshape or rescale the data inside the network for better modelling and better results.
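As one hedged illustration of this idea, the pixel scaling that was done earlier with NumPy could instead be moved into the network with a Lambda layer, followed by a Flatten layer for the reshaping. The layer names and the constant test images below are assumptions made for the sketch; this is not the model trained in the article.

```python
import numpy as np
from tensorflow.keras import layers, models

# Raw 28 x 28 images go in; scaled, flattened vectors come out.
inputs = layers.Input(shape=(28, 28), name="raw_pixels")
scaled = layers.Lambda(lambda t: t / 255.0, name="scale")(inputs)
flat = layers.Flatten(name="flatten")(scaled)
preprocess = models.Model(inputs, flat, name="preprocess")

# Two all-white "images" with every pixel equal to 255.
raw = np.full((2, 28, 28), 255.0, dtype="float32")
out = preprocess.predict(raw, verbose=0)

print(out.shape)  # (2, 784)
print(out.max())  # 1.0
```

Keeping such steps inside the model means the same transformation is applied automatically at training and prediction time.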

Final Words

In this article, we have seen what the lambda layer does and how to apply it in a network built using Keras. We have also seen how it transforms the data inside the network. By simply defining a function and passing it as an argument, we can change the data between layers. I encourage readers to use lambda layers to build custom operations that depend on more than two layers.
